3 Olympic exchange theory

Here is a debunk. Titanic Switch Theory conclusions and references

This summer, unprecedented wildfires also burned in Greenland, Alaska, and Siberia, contributing to ice melt as their soot and smoke traveled across the Arctic.

It is not unprecedented. Large areas of the Arctic burn every year and this is part of the natural cycle. ‘Arctic On Fire’ Is Normal, Part Of Nature - Moose, Bears, Voles, Foxes, Owls, Birds Of Prey All Depend On Wild Fires

Democratic People's Republic of Korea[a]

North Korea itself rejects communism.

“There are two ways of looking at a place: There is what it calls itself, and there is what analysts or journalists want to say a place is,” Owen Miller, who lectures in Korean history and culture at London’s School of Oriental and African Studies (SOAS), told Newsweek.

“On neither of those counts is North Korea Communist. It doesn’t call itself Communist—it doesn’t use the Korean word for Communist. It uses the word for socialism but decreasingly, less and less over the decades.”

The state’s official ideology is juche, a Sino-Korean word used in both North and South Korea that roughly translates as “independence, or the independent status of a subject,” according to Miller.

“Juche is enshrined in North Korea’s constitution, explicated in thousands of propaganda texts and books, while teachers indoctrinate North Korean children with the ideology at an early age.

The concept evolved in the 1950s, in the wake of the Korean War, as North Korea sought to distance itself from the influence of the big socialist powers: Russia and China. However the concept has a more profound resonance for North Koreans, alluding to the centuries when Korea was a vassal state of the Chinese.

“When Kim Il Sung started using the word, he was using [it] to refer to this sense of injured pride, going back decades and much further, hundreds of years under Chinese control. He is saying North Korea is going to be an independent nation in the world, independent of other nations,” Miller says.”

The accelerating capacity build-out is changing the power sector landscape. Wind and solar will contribute 24% of power supply by 2040 compared with 7% today. Although the competitiveness is improving, there are practical limitations to reaching a fuel mix comprised of 50% or greater share for solar and wind. We see growth in energy storage to almost 600 GW. But without long-duration storage, on a much higher scale, high solar and wind yields negative prices and grid shape, design and stability issues.

This does not mention that hydro is the preferred long-duration storage for solar and wind energy, and that most countries have plenty of hydro storage available at very low cost, far less than batteries.

The EU alone has enough potential for developing future pumped hydro storage to store 123 TWh, according to a 2013 report. That is enough to store the entire output of 5000 gigawatts of power stations for one day.
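As a quick check on that comparison (a sketch using only the 123 TWh figure quoted above), dividing the stored energy by 24 hours gives the average power it could supply for one day:

```python
STORAGE_TWH = 123   # potential EU pumped hydro storage, from the 2013 report quoted above
HOURS_PER_DAY = 24

# Energy (TWh) divided by time (h) gives average power in TW; x1000 converts TW to GW.
power_gw = STORAGE_TWH / HOURS_PER_DAY * 1000
print(round(power_gw))  # 5125
```

That comes to a little over 5000 GW, so the "5000 gigawatts for one day" comparison holds up.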

This is how the UK plans to do it. We have a fully worked-out transition plan through to 2050, which is how the UK government knew it could commit to it. The EU has many projects, either in place or proposed, for both extra pumped hydro storage and long-range UHVDC power lines.

China uses hydro storage big time. The US can definitely do it. Australia can do it too; it has plenty of potential hydro storage to cover peak demand for solar power.

The Australian National University has found 22,000 potential pumped hydro sites in Australia, so many that they say you only need to use the best 0.1% of them and can afford to be choosy.

It's not just conventional hydro. The Australian company Genex is building a solar power plant on the site of a disused gold mine, with pumped hydro power storage using the old mine shafts. It pumps water up and down between the lower and upper galleries of the old mine. See video.

There's an especially good synergy if you have solar panels floating on the lakes behind hydroelectric dams.

The potential for solar panels floating on hydroelectric dams is vast. If 10% of the available surface area were used worldwide for solar power, it would produce a total of 5.211 million gigawatt hours of power a year. That's 5,211 terawatt hours.
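The unit conversion, and the average power that annual figure corresponds to, can be checked the same way. This is a rough sketch: the 5.211 million GWh figure is the one quoted above, and spreading it evenly over 8760 hours is a simplifying assumption, since real output follows daylight and the seasons.

```python
ANNUAL_GWH = 5.211e6          # 5.211 million GWh per year, as quoted above
HOURS_PER_YEAR = 365 * 24     # 8760 h, ignoring leap years (assumption)

annual_twh = ANNUAL_GWH / 1000               # 1 TWh = 1000 GWh
avg_power_gw = ANNUAL_GWH / HOURS_PER_YEAR   # annual average, not peak capacity

print(round(annual_twh))     # 5211
print(round(avg_power_gw))   # 595
```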

Where Sun Meets Water: Floating Solar Market Report

There are many other ways to do it. Carbon zero transport is likely to involve a transition to electric vehicles. Once nearly everyone is running them, owners can use a fraction of their car's battery charge to buy electricity from the grid when it is in surplus and costs less, and sell it back at a profit when it is in demand, so making money from their cars while they are parked.
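To make the buy-low, sell-high idea concrete, here is a minimal Python sketch of one charge/discharge cycle per day. Everything in it is hypothetical: the `Car` fields, the 20% share of the battery offered to the grid, the 90% round-trip efficiency and the hourly prices are illustrative values, not real tariffs or any actual vehicle-to-grid API.

```python
from dataclasses import dataclass

@dataclass
class Car:
    battery_kwh: float        # usable battery capacity
    trading_fraction: float   # share of the battery the owner lets the grid use
    efficiency: float         # round-trip charge/discharge efficiency, 0 to 1

def best_daily_trade(car: Car, prices: list[float]) -> tuple[int, int, float]:
    """Choose one buy hour and a later sell hour that maximise profit.

    `prices` are per-kWh grid prices for consecutive hours. Returns
    (buy_hour, sell_hour, profit); profit stays 0.0 if no trade pays.
    """
    energy_kwh = car.battery_kwh * car.trading_fraction
    best = (0, 0, 0.0)
    cheapest_hour = 0
    for hour in range(1, len(prices)):
        # Track the cheapest hour strictly before the current one.
        if prices[hour - 1] < prices[cheapest_hour]:
            cheapest_hour = hour - 1
        # Buy energy_kwh at the cheapest earlier hour; sell what survives the round trip now.
        profit = energy_kwh * (prices[hour] * car.efficiency - prices[cheapest_hour])
        if profit > best[2]:
            best = (cheapest_hour, hour, profit)
    return best

# Hypothetical hourly prices: cheap surplus early on, an expensive peak later.
prices = [0.10, 0.08, 0.05, 0.07, 0.20, 0.30, 0.25]
car = Car(battery_kwh=60, trading_fraction=0.2, efficiency=0.9)
buy, sell, profit = best_daily_trade(car, prices)
print(buy, sell, round(profit, 2))  # 2 5 2.64
```

With those made-up numbers it buys at hour 2 and sells at hour 5. A real scheme would also have to allow for battery wear, grid fees and keeping enough charge for the owner's next trip.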

Other methods include solar thermal storage already used in solar plants in deserts.

See also my "Do renewables for power generation take up more land area than fossil fuels? Well - not really!", which has more links to follow up on peaking power.

https://astrosociety.org/edu/publications/tnl/23/23.html

Broken link, but it is in archive.org. Here is the quote:

Marsden continued to refine his calculations, and discovered that he could trace Comet Swift-Tuttle's orbit back almost two thousand years, to match comets observed in 188 AD and possibly even 69 BC. The orbit turned out to be more stable than he had originally thought, with the effects of the comet's jets less pronounced. Marsden concluded that it is highly unlikely the comet will be 15 days off in 2126, and he called off his warning of a possible collision. His new calculations show Comet Swift-Tuttle will pass a comfortable 15 million miles from Earth on its next trip to the inner solar system. However, when Marsden ran his orbital calculations further into the future, he found that, in 3044, Comet Swift-Tuttle may pass within a million miles of Earth, a true cosmic "near miss."

Marsden's prediction, and later retraction, of a possible collision between the Earth and the comet highlight the fact that we will most likely have century-long warnings of any potential collision, based on calculations of orbits of known and newly discovered asteroids and comets. Plenty of time to decide what to do.

https://web.archive.org/web/20130402063233/https://astrosociety.org/edu/publications/tnl/23/23.html

an active supervolcano

It is not a supervolcano. Its VEI (Volcanic Explosivity Index) is variously estimated as 6 or 7, while a supervolcano has a VEI of at least 8. The phrase is just taken from the title of the New Scientist article; NS does tend to use hyperbole (exaggeration for emotional effect) sometimes.

This is a recent paper labeling it as VEI 7

Pan, B., de Silva, S.L., Xu, J., Chen, Z., Miggins, D.P. and Wei, H., 2017. The VEI-7 Millennium eruption, Changbaishan-Tianchi volcano, China/DPRK: New field, petrological, and chemical constraints on stratigraphy, volcanology, and magma dynamics. Journal of Volcanology and Geothermal Research, 343, pp.45-59.

This 2016 paper calls it VEI ≤ 6

Despite its historical and geological significance, relatively little is known about Paektu, a volcano that has produced multiple large (volcanic explosivity index ≤ 6) explosive eruptions, including the ME, one of the largest volcanic events on Earth in the last 2000 years

The explosive ME deposited 23 ± 5 km3 dense rock equivalent (DRE) of material emplaced in two chemically distinct phases in the form of ash, pumice, and pyroclastic flow deposits

Iacovino, K., Ju-Song, K., Sisson, T., Lowenstern, J., Kuk-Hun, R., Jong-Nam, J., Kun-Ho, S., Song-Hwan, H., Oppenheimer, C., Hammond, J.O. and Donovan, A., 2016. Quantifying gas emissions from the “Millennium Eruption” of Paektu volcano, Democratic People’s Republic of Korea/China. Science Advances, 2(11), p.e1600913. Press release

Study provides new evidence about gas emissions from ancient North Korean volcanic eruption

USGS definition of a supervolcano:

The term "supervolcano" implies a volcanic center that has had an eruption of magnitude 8 on the Volcano Explosivity Index (VEI), meaning that at one point in time it erupted more than 1,000 cubic kilometers (240 cubic miles) of material. Eruptions of that size generally create a circular collapse feature called a caldera.

The NS article is just using hyperbole for a more dramatic headline, for emotional effect.

Andy Coghlan (15 April 2016). "Waking supervolcano makes North Korea and West join forces". NewScientist. Retrieved 17 May 2019.

In your own supervolcano article it is listed as VEI 7, a "super eruption" as your page puts it, but not quite a supervolcano. The page itself explains that a supervolcano is VEI 8 or more.
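The VEI thresholds behind this can be written down directly. From VEI 2 upward, each step is a factor of ten in erupted bulk (tephra) volume, so VEI 8 starts at 1,000 km³, matching the USGS definition above. The sketch below assumes the input is bulk volume in km³, and lumps VEI 0 and 1 together for simplicity:

```python
import bisect

# Minimum bulk ejecta (tephra) volume in km^3 for VEI 2, 3, 4, 5, 6, 7 and 8.
# From VEI 2 upward each class is a factor of ten larger than the one before.
VEI_THRESHOLDS_KM3 = [0.001, 0.01, 0.1, 1, 10, 100, 1000]

def vei_from_bulk_volume(volume_km3: float) -> int:
    """Return the VEI class for a bulk ejecta volume in km^3.

    Anything below 0.001 km^3 is returned as 1, standing in for "VEI 0 or 1".
    """
    # bisect_right counts how many thresholds the volume meets or exceeds.
    return 1 + bisect.bisect_right(VEI_THRESHOLDS_KM3, volume_km3)

print(vei_from_bulk_volume(1000))  # 8: the USGS supervolcano threshold
print(vei_from_bulk_volume(150))   # 7
print(vei_from_bulk_volume(50))    # 6
```

One caveat: the 23 ± 5 km³ figure quoted earlier for the Millennium Eruption is dense rock equivalent (DRE), and bulk tephra volume is larger because tephra is porous. So it can't be fed into the function directly, which is plausibly part of why published estimates range from VEI 6 to VEI 7. Either way, it falls short of the 1,000 km³ needed for VEI 8.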

However difficult climate diplomacy might be, we should not shy away from clearly stating that nothing has been achieved as long as we cannot see an effect on global measurements. Therefore, the political response has been inadequate.

China is the key here. Half of the increase in our CO2 emissions is due to China. It is rapidly industrializing and rapidly decarbonizing at the same time. At present it is still using coal to get more power, but it is on the point of a massive switchover. It may already have passed the turning point from increasing to level emissions; it's hard to tell, and last year could turn out to be the highest. It will certainly peak before 2030, and probably before 2025. They recently said they will increase their pledge in 2020 and give a road map towards carbon zero at that point.

For more background and cites see my

Many other countries are already rapidly decreasing their emissions. The UK is an example. We are on pretty much a straight line to 20% of 1990 levels by 2050, so it is not too much of an ask to dip that down a bit more to reach 0% by 2050.

But we can say that the currently observed warming is very much consistent with our understanding of the climate system, which goes back to the end of the 19th century. Back then, a Swedish physicist, named Svante Arrhenius, already calculated the expected degree of warming without the use of computer models, and his estimate is still very much in line with our current understanding.

He was the first to develop the idea of global warming due to CO2, but if his prediction matches ours, that is just a coincidence. He used it to explain how we exited the ice age, and he didn't have the modern understanding of, e.g., the Milankovitch cycles and all the complexities of the interactions involved. Up to the 1970s, scientists were thinking in terms of climate following a random walk towards a future ice age.

https://www.sciencehistory.org/distillations/magazine/future-calculations

What we often hear is that increased levels of greenhouse gases will make such extreme heat events more likely, but that a direct link cannot be proven. In my view, this level of precaution is unfounded.

There is pretty robust research suggesting that we have more and stronger heat waves in a warming world.

This is my paraphrase from the IPCC 2018 report Chapter 3

Number of exceptionally hot days to increase most in the tropics; extreme heat waves emerge early and are expected to be widespread already at 1.5°C of global warming

1.5°C instead of 2°C means around 420 million fewer people frequently exposed to extreme heatwaves and 65 million fewer exposed to exceptional heat waves assuming constant vulnerability (i.e. they don't migrate to avoid them)

This is my summary of a recent paper:

They retrospectively predicted that in 2000, 37% of the population had an increased susceptibility to dying in a heat wave (1 in 10,000 if you don't take adequate precautions). The actual figure was 30.6%. From that they projected forward to 47.6% (±9.6%) at 1.5°C and 53.7% (±8.7%) at 3°C.

Statistics can't tell us about any particular heat wave, but the predictions of an increase in the number of people affected each year are reasonably robust.

The new models are producing much scarier projections on temperature increase

There was some recent research suggesting we may need to adjust the long-term climate sensitivity, over thousands of years. But there is such a vast range of values that one study is not likely to change it significantly. We need to wait for the next high-level review.

This is a Carbon Brief article on it from last year. You can see how variable the estimates are, from below 1.5°C through to above 6°C, mostly between 1.5 and 4.5°C for the response to doubling CO2 levels. But that's about how much it responds in equilibrium over thousands of years, e.g. after all the ice in Greenland and Antarctica that is unstable in the long term has melted.

And though there is still a huge range, the outliers are more clearly outliers than they used to be. There is a better understanding of the science, and the true value is expected to be in the middle of the range.

https://www.carbonbrief.org/explainer-how-scientists-estimate-climate-sensitivity
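For a feel of what a sensitivity range means in degrees, here is a simplified sketch. It uses the common approximation that equilibrium warming scales with the number of CO2 doublings, ΔT ≈ S × log2(C/C0), with an assumed pre-industrial baseline of 280 ppm. It considers CO2 alone at equilibrium, ignoring other greenhouse gases, aerosols and the lag before equilibrium is reached, so it is an illustration, not a projection:

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed pre-industrial CO2 baseline

def equilibrium_warming(co2_ppm: float, sensitivity_c: float,
                        baseline_ppm: float = PREINDUSTRIAL_PPM) -> float:
    """Equilibrium warming in °C: sensitivity per doubling times the number of doublings."""
    doublings = math.log2(co2_ppm / baseline_ppm)
    return sensitivity_c * doublings

# Low, central and high ends of the 1.5 to 4.5 °C range discussed above.
for sensitivity in (1.5, 3.0, 4.5):
    at_doubling = equilibrium_warming(560, sensitivity)
    at_410 = equilibrium_warming(410, sensitivity)  # roughly the 2019 level (assumption)
    print(f"S = {sensitivity}: {at_doubling:.2f} °C at 560 ppm, {at_410:.2f} °C at 410 ppm")
```

At exactly one doubling (560 ppm) the warming equals S by construction; at roughly 410 ppm it is about 0.55 of a doubling, so around 0.8 to 2.5°C at equilibrium across that range.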

There is ongoing work, mostly for embedded Linux systems, to support 64-bit time_t on 32-bit architectures, too

The kernel is now just about fixed, with the end in sight. Then it is over to the glibc library writers, and only then can application writers update their code to be 2038-compatible:

2019 update: Approaching the kernel year-2038 end game By Jonathan Corbet January 11, 2019
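Why 2038? A signed 32-bit time_t counts seconds since 1970-01-01 00:00:00 UTC and tops out at 2^31 − 1. The sketch below reproduces that arithmetic in Python; since Python integers don't overflow, the 32-bit wraparound is emulated by hand with a hypothetical helper `as_int32`. It only illustrates the numbers and is not how the kernel or glibc handle time.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit time_t can hold

def as_int32(value: int) -> int:
    """Wrap an integer the way a signed 32-bit two's-complement counter would."""
    return (value + 2**31) % 2**32 - 2**31

def to_utc(seconds: int) -> datetime:
    """Interpret a seconds-since-epoch count as a UTC date and time."""
    return EPOCH + timedelta(seconds=seconds)

last_ok = to_utc(INT32_MAX)
wrapped = to_utc(as_int32(INT32_MAX + 1))  # one second later, after overflow

print(last_ok)   # 2038-01-19 03:14:07+00:00
print(wrapped)   # 1901-12-13 20:45:52+00:00
```

Once the counter passes 2^31 − 1 it wraps to the most negative value, which a 32-bit system reads as a date in December 1901. A 64-bit time_t pushes the overflow billions of years into the future.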

Strangelets are small pieces of strange matter, perhaps as small as nuclei. They would be produced when strange stars are formed or collide, or when a nucleus decays

An excellent cite here for strangelets is the LHC safety review in 2011. It also gives additional details that would be useful for the article, and includes a short summary of the state of current research on strangelet production. The supplement to the review describes how the LHC confirmed the emerging picture, which is that strange matter does not form at high energies.

Also, just as ice cubes are not produced in furnaces, the high temperatures expected in heavy-ion collisions at the LHC would not allow the production of heavy nuclear matter, whether normal nuclei or hypothetical strangelets.

Review of the Safety of LHC Collisions LHC Safety Assessment Group, 2011

Implications of LHC heavy ion data for multi-strange baryon production LHC Safety Assessment Group, Sept 26, 201

“Limiting global warming to 1.5°C would require rapid, far-reaching and unprecedented changes in all aspects of society.”

It did not, however, say that those changes are sacrifices or that they make society worse. The IPBES report goes into it far more: these are changes with huge social benefits, such as a circular, sustainable economy, local people more involved in decisions about what they do, and valuing, preserving and restoring nature's services. They also reduce pollution, and with electric cars we get a quieter world too (though they may need to add noises to electric cars to make them easier to hear). Like the post I shared a while back,

“It is no coincidence that the deepest and most protracted recessions in recent decades have taken hold in countries that experienced booms.”

It is possible to have endless economic growth. For instance, economic growth need not lead to increasing energy use, and often leads to reduced energy use.

Plainly enough then, the moneyed world’s worries are beginning to sound a lot like those voiced by advocates of the Green New Deal and campaigners of Fridays for the Future and the Extinction Rebellion

There are big savings to be made for sure. One estimate is that we save $20 trillion by 2100 if we limit warming to 1.5°C compared to 2°C

Burke, M., Davis, W.M. and Diffenbaugh, N.S., 2018. Large potential reduction in economic damages under UN mitigation targets. Nature, 557(7706), p.549.

Even if it only wiped out all but 3.5 billion, it would wipe out half of today’s human population. Human die off at even this less extreme scale would put the politically popular cause of economic growth in sharp reverse.

This is not happening. The population is leveling off over much of the world due to prosperity not scarcity.

There is inefficient agriculture, especially in Africa, that could produce ten times as much food if it were optimized like the best agriculture in the US and China. Most of the predicted population increase is in Africa. Worldwide, our population is leveling off and is no longer growing exponentially. It is not leveling off due to scarcity, as used to be predicted, but due to prosperity. Reduced child mortality, better education, and greater equality of women in decision making all play a role here. With fewer children dying, parents have smaller families because they know that their children have a good chance of surviving to adulthood.

Japan is one of the nations with the most rapidly declining populations. We are already at peak child, or close to it: we have roughly the same number of children as a decade ago, and the world population continues to grow rapidly mainly because of the extraordinary advances in health care. Worldwide we live ten years longer than 50 years ago. For some countries it is 20 years longer; for China it is a truly remarkable 30-year increase in life expectancy from 1960 to 2010.

This expands on some of that, and goes into other things such as resources and energy return on energy invested for fossil fuels compared to renewables

Debunked: Soon we won’t be able to feed everyone because the world population is growing so quickly

“I think it’s extremely unlikely that we wouldn’t have mass death at 4C. If you have got a population of nine billion by”

This is a very out of date quote from 2009. "Warming will 'wipe out billions'". scotsman.com.

That is not what modern research is finding: we can feed everyone through to 2100 in all the scenarios.

I summarize some of this research here: We can feed everyone through to 2100 and beyond

this term is used to refer to any Eastern Orthodox Christian

This explains how, in the Eastern Orthodox Church, all Christians think of themselves as servants of God, and how Jesus was one as well: Being a Servant

the existential threat posed by climate change.

This is from journalist exaggerations and junk science. The IPCC's own worst case is a scenario where we can still feed everyone through to 2100 but with reduced food security.

See Box 8 of chapter 3 of the 2018 IPCC report

I summarize it as:

This is one of their scenarios from chapter 3 of the 2018 report. There is nothing remotely like extinction or the end of civilization in this scenario. We can still feed everyone, though with less food security. It is still a world where much of the natural world remains and the majority of species survive; it is not a desert. However, it is a world we would not want to head for, with the corals nearly all gone, many areas of the world facing problems, severe loss of biodiversity, and increasing rather than decreasing world poverty by 2100.

[The IPCC’s own worst case climate change example - a 3°C rise by 2100](https://www.quora.com/q/duzzmyeobxjljrpq/The-IPCC-s-own-worst-case-climate-change-example-a-3-C-rise-by-2100)

“Revolution or collapse — in either case, the good life as we know it is no longer viable.”

Renewables do work and we can have a fossil fuel free economy. Many countries are already rapidly transitioning to a largely renewables based civilization.

[Do renewables for power generation take up more land area than fossil fuels? Well - not really!](https://www.science20.com/robert_walker/ipcc_did_not_say_12_years_or_18_months_to_save_planet_no_scientific_cliff_edge_should_they_challenge_it_or_who) Yes, climate change can be beaten by 2050. Here’s how.

key tipping points

This article does not mention any tipping points. The IPCC found that there are no tipping points for climate up to 2°C and beyond, except the West Antarctic and Greenland ice melt, and those unfold over many thousands of years.

Although Britain boosted the Paris Agreement in June by committing to net zero carbon emissions by 2050, the country, preoccupied by Brexit, is far from on a climate war footing.

We do not need to be on a climate war footing -- that can lead to autocratic decisions and mistakes. It's what sociologists call a "wicked problem" - solutions are complex and need to be done carefully.

But we are working on this. See my.

The UK public are pretty much fed up about Brexit and the politicians know they have to find a solution. Maybe we leave this autumn, maybe we have a general election, one way or another we'll be resolving this.

But much else is happening in the UK; it's not just Brexit!

Likewise, a push led by France and Germany for the European Union to adopt a similar target was relegated to a footnote at a summit in Brussels after opposition from Poland, the Czech Republic and Hungary.

Poland, the Czech Republic and Hungary face major issues in transitioning fast because of their coal industries.

This is something to fix in future deals. The European Green Deal will deal with these issues, making it easier for them to transition. That's a proposal by Ursula von der Leyen, the new President of the European Commission, and we now have a "Green Wave" in European politics that will make a big difference going into the future.

In October, the U.N.-backed Intergovernmental Panel on Climate Change (IPCC) warned emissions must start falling next year at the latest to stand a chance of achieving the deal’s goal of holding the global temperature rise to 1.5 degrees Celsius.

They did not say that. They said that there are many ways to stay within 1.5°C. The easiest way to do it, the "Low Energy Demand" scenario, involves a 45% reduction in emissions by 2030 tapering down to zero emissions by 2050. But there are many alternatives.

That foretaste of a radically hotter world underscored what is at stake in a decisive phase of talks to implement the 2015 Paris Agreement, a collective shot at avoiding climate breakdown.

These are often misunderstood. It is not about lethal heat. Already 30% of the world's population was experiencing these heat waves every year. On the 3°C path that we are on now, this is projected to increase to a little over 50%. It's not a big deal: you do not die of a heat wave, not if you take the right precautions.

Timeline of carbon capture and storage

It has not been updated for a decade (as of 2019). There is lots of newer material in https://en.wikipedia.org/wiki/Carbon_capture_and_storage

The total carbon capture capacity of the facility is 800,000 tonnes per year.

They plan to expand to capture 2.3 million tonnes per year by 2025 and 5 million tonnes per year before 2030. They say they are commercially self-sustaining, with no government subsidies.

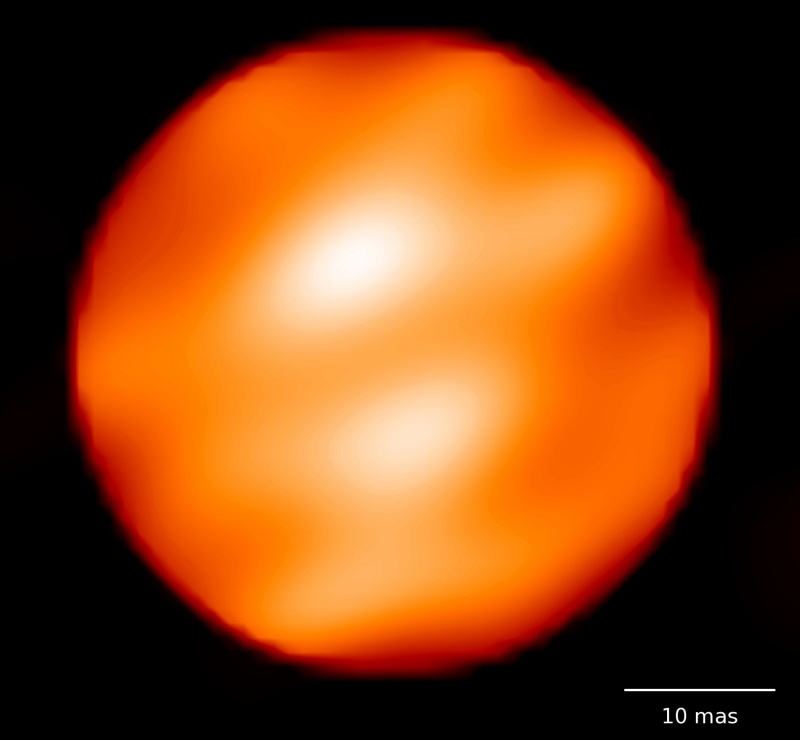

This is the first time that ALMA has ever observed the surface of a star and this first attempt has resulted in the highest-resolution image of Betelgeuse available.

This is about a decade out of date. There is a higher resolution image from 2009

The Spotty Surface of Betelgeuse Credit: Xavier Haubois (Observatoire de Paris) et al.

The figure in the paper itself is this one:

The paper is here:

Haubois, X., Perrin, G., Lacour, S., Verhoelst, T., Meimon, S., Mugnier, L., Thiébaut, E., Berger, J.P., Ridgway, S.T., Monnier, J.D. and Millan-Gabet, R., 2009. Imaging the spotty surface of Betelgeuse in the H band. Astronomy & Astrophysics, 508(2), pp.923-932.

There are other images of similar resolution. This is an article from 2018.

Ariste, A.L., Mathias, P., Tessore, B., Lèbre, A., Aurière, M., Petit, P., Ikhenache, N., Josselin, E., Morin, J. and Montargès, M., 2018. Convective cells in Betelgeuse: imaging through spectropolarimetry. Astronomy & Astrophysics, 620, p.A199.

He lost the title of "World's Shortest Man"

He is still in the record as the shortest mobile man

Khagendra Thapa Magar is currently listed on the Guinness World Records site as the shortest man living (mobile)

Junrey Balawing is listed as the shortest man - living (non-mobile)

Balawing is the world's shortest man alive

He is in the record as the shortest non-mobile man

Well known potentially hazardous asteroids are normally only a hazard on a time scale of hundreds of years

Many are only potentially hazardous on a timescale of thousands or millions of years. For example, Swift-Tuttle's first chance of impact is in 4479, and even then the chance is only about 1 in a million.

For the alternative formulation, where X is the number of trials up to and including the first success, the expected value is E(X) = 1/p.

No cite is given; the calculation can be done as here
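The expectation itself is easy to check. If each trial (say, each year) independently has probability p of a "success" (such as an impact), the number of trials up to and including the first success has E(X) = Σ k·p·(1 − p)^(k−1) = 1/p. The sketch below, with an illustrative p = 0.25, compares that formula with a truncated sum of the series and a seeded simulation:

```python
import random

def expected_trials_series(p: float, max_k: int = 10_000) -> float:
    """Sum k * P(X = k), where P(X = k) = (1 - p)**(k - 1) * p, up to k = max_k."""
    return sum(k * (1 - p) ** (k - 1) * p for k in range(1, max_k + 1))

def simulate_mean_trials(p: float, runs: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the mean number of trials up to and including the first success."""
    rng = random.Random(seed)
    total = 0
    for _ in range(runs):
        trials = 1
        while rng.random() >= p:  # each trial fails with probability 1 - p
            trials += 1
        total += trials
    return total / runs

p = 0.25  # illustrative per-trial probability
print(1 / p)                      # 4.0
print(expected_trials_series(p))  # very close to 4.0; the truncated tail is negligible
print(simulate_mean_trials(p))    # near 4.0; the exact value depends on the seed
```

For a per-year probability of 1 in a million, the same formula gives an expected wait of a million years, although the event could of course happen in any particular year.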

Thus a wet bulb temperature of 35 °C (95 °F) is the threshold beyond which the body is no longer able to adequately cool itself

Confusingly, it doesn't explain that wet bulb temperatures differ significantly from the heat index. The US heat index is roughly the “perceived heat”, how warm it feels, and is used mainly for public outreach such as heat wave warnings, rather than for scientific research.

Sadly, because the conversion depends on radiant heat (as well as humidity), there is no systematic way to convert one to the other. It gives an idea of what the temperature feels like - but how well you can tolerate it may depend on the amount of humidity and how much of the perceived heat is due to radiant heat.

A wet bulb temperature of 33°C (92°F) corresponds very roughly to a heat index of around 57°C (135°F) in the absence of radiant heat.

But with radiant heat the heat index can increase relative to those values and be larger than you’d expect from the wet bulb temperature by over 7°C (11°F) for indoor conditions and over 11°C (18°F) for outdoor conditions with direct sunlight.

Iheanacho, I., 2014. Can the USA National Weather Service Heat Index Substitute for Wet Bulb Globe Temperature for Heat Stress Exposure Assessment?.
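A small detail when converting these figures: an absolute temperature converts with °F = °C × 9/5 + 32, but a temperature difference converts with the 9/5 factor alone, with no +32 offset. A short Python sketch of both:

```python
def c_to_f(temp_c: float) -> float:
    """Convert a temperature reading from Celsius to Fahrenheit."""
    return temp_c * 9 / 5 + 32

def c_delta_to_f(delta_c: float) -> float:
    """Convert a temperature *difference*: scale by 9/5 only, no +32 offset."""
    return delta_c * 9 / 5

def f_delta_to_c(delta_f: float) -> float:
    """Convert a temperature difference from Fahrenheit to Celsius degrees."""
    return delta_f * 5 / 9

print(round(c_to_f(35), 1))        # 95.0  (the wet bulb threshold quoted above)
print(round(c_to_f(33), 1))        # 91.4
print(round(c_to_f(57), 1))        # 134.6
print(round(c_delta_to_f(7), 1))   # 12.6
print(round(f_delta_to_c(18), 1))  # 10.0
```

By this arithmetic a 7°C difference is about 12.6°F and an 18°F difference is 10°C, so the paired values quoted above are best read as rounded or approximate.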

Because of this factor, it was once believed that the highest heat index reading actually attainable anywhere on Earth was approximately 71 °C (160 °F)

Says who??

believe that a limited convention is possible.

So does James Kenneth Rogers, Attorney at Osborn Maledon, P.A. (more about him), cited later in this article.

He gives various arguments for this, mainly that the Convention Clause would defeat its own purpose if a convention were always unlimited, because States would be reluctant to call such a convention.

However, he acknowledges that the Philadelphia Convention of 1787 (not called under Article V) went beyond its remit. He then says that the main protection is that 3/4 of the States have to ratify any amendments proposed by such a convention. That would include at least 22 of the 34 or more States that called the convention in the first place (at most 12 States in total can reject any amendment).

For more details: later annotation in page

The fact that Congress has not called such a convention, and that courts have rejected all attempts to force Congress to call a convention, has been cited as persuasive evidence that Paulsen's view is incorrect

Rogers’ legal opinion about Paulsen’s argument is paraphrased incorrectly in this article. He does not use the fact that no convention has been held yet as a reason to suppose that the convention has to be limited. Indeed, he acknowledges that the Philadelphia Convention of 1787 (although not called under Article V) exceeded its mandate, and cites this as a reasonable concern.

He uses other arguments against this, mainly that it would defeat its purpose if it was unlimited because States would be reluctant to call such a convention out of fear of what other things it might decide. He also says that if they thought this would happen the States would immediately rescind their applications, so preventing the convention, something Idaho has already done.

He does say that if the convention were unlimited, then all existing applications could be aggregated to call a single convention to discuss them all, but he does not use the fact that this has not happened as an argument that such a convention is impossible. However, he says that the main protection is that 3/4 of the States have to ratify any amendments proposed by such a convention. This would include a majority of the original 34 or more States that called for it (at most 12 of them could refuse to ratify). The arguments are

It then says

It gives the example of the Philadelphia Convention of 1787 which exceeded its mandate of revising the Articles of Confederation to show that there are well founded concerns about whether a modern convention with a limited mandate could exceed its original scope.

It says it would be difficult for a government to intervene, as a constitutional convention could conceivably claim independent authority.

However, it goes on to say that any amendments have to be ratified by 3/4 of the States. So, if the convention proposes extra amendments, they would only be accepted if ratified by 38 States. This would mean that most of the States that originally requested the convention would also ratify them, thus legitimizing their actions.

(This is the maths here: 38 of the 50 States have to ratify, so up to 12 could refuse to ratify, and a convention requires two thirds of 50 States, rounded up to 34, to be initiated. If the ones that don't ratify are all from the original States, then 22 of the original 34 would still ratify, which is over 64% of them.)
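The same arithmetic as a short Python sketch, assuming 50 States and rounding each fraction up to the next whole State, as the 34 and 38 figures above do:

```python
import math
from fractions import Fraction

STATES = 50

def threshold(fraction: Fraction, total: int = STATES) -> int:
    """Smallest whole number of States that meets or exceeds the fraction."""
    return math.ceil(fraction * total)

to_call = threshold(Fraction(2, 3))     # applications needed to call a convention
to_ratify = threshold(Fraction(3, 4))   # ratifications needed for an amendment
max_rejecting = STATES - to_ratify      # States that can withhold ratification
worst_case = to_call - max_rejecting    # callers who must still ratify if every rejecter was a caller

print(to_call, to_ratify, max_rejecting, worst_case)  # 34 38 12 22
print(f"{worst_case / to_call:.1%}")                   # 64.7%
```

The last line is the worst case described above, where every State that refuses to ratify was one of the original applicants.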

The section concludes that

The second argument—that the States have no power beyond initiating a convention—is partially correct. They do, however, have indirect authority to limit the convention. Congress’s obligation to call a convention upon the application of two thirds of the States is mandatory, so it must call the convention that the States have requested. Thus, Congress may not impose its own will on the convention. As argued above, the purpose of the Convention Clause is to allow the States to circumvent a recalcitrant Congress. The Convention Clause, therefore, must allow the States to limit a convention in order to accomplish this purpose. The prospect of a general convention would raise the specter of drastic change and upheaval in our constitutional system. State legislatures would likely never apply for a convention in the face of such uncertainties about its results, especially in the face of a hostile national legislature. [73] States are far more likely to be motivated to call a convention to address particular issues. If the States were unable to limit the scope of a convention, and therefore never applied for one, the purpose of the Convention Clause would be frustrated.

A related concern is whether States’ applications that are limited to a particular subject should be considered jointly regardless of subject or tallied separately by subject matter to reach the two-thirds threshold necessary for the calling of a convention. [74] This is an important question because if all applications are considered jointly regardless of subject matter, Congress may have the duty to call a convention immediately based on the number of presently outstanding applications from states on single issues. [75]

If the above arguments about the States’ power to limit a convention are valid, however, then applications for a convention for different subjects should be counted separately. This would ensure that the intent of the States’ applications is given proper effect. An application for an amendment addressing a particular issue, therefore, could not be used to call a convention that ends up proposing an amendment about a subject matter the state did not request be addressed. [76]

Footnote

73

These fears, however, are mitigated by the States’ own powers over ratification.

74

Paulsen, supra note 3, at 737–43.

75

Id. at 764. Paulsen counts forty-five valid applications as of 1993.

76

If it were established that applications on different topics are considered jointly when determining if the two-thirds threshold has been reached, states would almost certainly rescind their outstanding applications to prevent a general constitutional convention. Some states have already acted based on fears of a general convention. For example, in 1999 the Idaho legislature adopted a resolution rescinding all of its outstanding applications for a constitutional convention. S.C.R. 129, 1999 Leg. (Idaho 1999). Georgia passed a similar resolution in 2004. H.R. 1343, Gen. Assemb. 2004 (Ga. 2004). Both resolutions were motivated by a fear that a convention could exceed its scope and propose sweeping changes to the Constitution.

pdf here

.[29]

There is a formatting error in all these Rogers bookmarks. They should link to this cite: pdf here

Rogers 2007.

There is a formatting error in all these Rogers bookmarks. They should link to this cite: pdf here

Rogers 2007, p. 1007

There is a formatting error in all these Rogers bookmarks. They should link to this cite: pdf here

" (PDF).

pdf here

Rogers, 2007 & 1014–20.

Rogers & 2007 1008.

Rogers & 2007 1009.

Rogers & 2007 1010.

Rogers, 2007 & 1010–20.

Rogers, 2007 & 1014–19.

There is a formatting error in all these Rogers bookmarks. They should link to this cite: pdf here

; some studies have reported that in adult humans about 700 new neurons are added in the hippocampus every day

A 2019 study finds thousands of young neurons in brain tissue through to the ninth decade of life.

By utilizing highly controlled tissue collection methods and state-of-the-art tissue processing techniques, the researchers found thousands of newly formed neurons in 13 healthy brains from age 43 up to age 87 with a slight age-related decline in neurogenesis (about 30% from youngest to oldest).

Old Brain, New Neurons? Harvard University press release

New neurons (in red) in brain tissue from a 68-year-old.

Original paper: Moreno-Jiménez, E.P., Flor-García, M., Terreros-Roncal, J., Rábano, A., Cafini, F., Pallas-Bazarra, N., Ávila, J. and Llorens-Martín, M., 2019. Adult hippocampal neurogenesis is abundant in neurologically healthy subjects and drops sharply in patients with Alzheimer’s disease. Nature Medicine, 25(4), p.554.

This all sounds like a recipe for a completely unworkable set of complex requirements that once again will favor big companies with deep pockets and big legal departments.

The draft says the opposite.

Even if consumer rules, data protection rules, as well as contract rules, have converged across the EU, in today's regulatory environment, only the big platform companies can grow and survive. A fragmented market with divergent rules is difficult to contest for newcomers, and the absence of clear, uniform, and updated rules in areas such as illegal content online is dissuasive for new innovators. This is a major strategic weakness for the EU in the digital economy and increase reliance on non-EU services for essential services used by all citizens on a daily basis.

The lack of legal clarity also entails a regulatory disincentive for platforms and other intermediaries to act proactively to tackle illegal content, as well as to adequately address harmful content online, especially when combined with the issue of fragmentation of rules addressed above. As a consequence, many digital services avoid taking on more responsibility in tackling illegal content, for fear of becoming liable for content they intermediate. This leads to an environment in which especially small and medium-sized platforms face a regulatory risk which is unhelpful in the fight against online harms in the broad sense. At the same time, when companies do take measures against potentially illegal content, they have limited legal incentives for taking appropriate measures to protect legal content.

Service interoperability. Where equivalent services exist, the framework should take account of the emerging application of existing data portability rules and explore further options for facilitating data transfers and improve service interoperability - where such interoperability makes sense, is technically feasible, and can increase consumer choice without hindering the ability of (in particular, smaller) companies to grow.

There is not even the slightest suggestion of making things easier for big companies at the expense of small. Instead the ambition is to make it easier for smaller companies and innovative startups, including native EU startups, to compete on a level playing field in the EU with the US tech giants.

The EU has no overwhelming motivation to protect US tech giants and has often shown it is willing to stand up against them.

The draft itself is here

That's a classic: affirming that general monitoring is prohibited, while bringing in rules for proactive automated filtering technologies -- aka general monitoring.

It doesn't say that the filtering would be required. It says

specific provisions governing algorithms for automated filtering technologies -- where these are used -- should be considered, to provide the necessary transparency and accountability of automated content moderation

If it is like the copyright directive on this point, what they are saying is that if content is modified, this has to be transparent to the user. For instance, you'd have a right to get the online provider to explain to you why your content was taken down and to address it. As an example, I have some animated GIFs that help panicked people to breathe slowly, which I used to add to my posts to reassure them. For no apparent reason, Facebook now blocks them, including one I made myself that I know is absolutely fine, and the ones by DestressMonday, which say they are okay to use. There is currently no way even to contact Facebook and get an answer as to why it blocks this content. This would give me a way to do that, and they would have to explain what happened or sort it out. Article 13/17 has this right to redress:

- Member States shall provide that online content-sharing service providers put in place an effective and expeditious complaint and redress mechanism that is available to users of their services in the event of disputes over the disabling of access to, or the removal of, works or other subject matter uploaded by them.

That would surely be included amongst the rules governing proactive upload filters, which are currently operated without any regulation of what they do and without any requirement to explain anything about them to anyone.

a binding "Good Samaritan provision" would encourage and incentivise proactive measures, by clarifying the lack of liability as a result of such measures

The companies already take proactive measures. For example, Facebook blocks many sites so that you can't even link to them. With the New Zealand terrorism video, Facebook removed 1.5 million copies of it in the first 24 hours, over 1.2 million of them blocked at upload, and shared 90 digital signatures with other companies so they could do the same. Facebook tweet; see also Update on New Zealand | Facebook Newsroom

The important point in this paragraph is not the proactive moderation, which is already happening, but that they say they will clarify that companies are not liable for proactive moderation (similar to Section 230 in the US, which we don't have in the EU).

Finally, a binding "Good Samaritan provision" would encourage and incentivise proactive measures, by clarifying the lack of liability as a result of such measures, on the basis of the notions already included in the Illegal Content Communication.

attacks on the fundamental principles underlying the open Internet that began with the Copyright Directive.

The copyright directive is motivated by the wish to stop copyright violations, not to attack the openness and freedom of the internet. It has many provisions to protect digital freedom.

I am talking about its stated objectives. But if it is law then the law is what you see, not any hidden objective. Lawyers and judges don't use hidden objectives to decide legal cases but just go by what the law says.

If things like upload filters and the imposition of intermediary liability become widely implemented as the result of legal requirements in the field of copyright, it would only be a matter of time before they were extended to other domains.

Non sequitur: it doesn't follow. Indeed, if wide implementation of a law leads to problems, it would have the opposite effect.

If a law is implemented and widely accepted as a good law with sensible provisions, it can be a model for other new laws. If it turns out to be problematical, then new laws would face a lot of opposition based on experience with the previous one. Problematical laws can also be modified or repealed.

was nicknamed the annus confusionis ("year of confusion")

It was actually called the ultimus annus confusionis, or the "final year of confusion". Also, the primary source says it was 443 days.

The primary source here is Macrobius, Saturnalia 1, 14, 3 (around 400 AD).

He first describes various Roman theories about when intercalation (the insertion of leap days or months) started, the earliest being an idea from Varro that it was a very ancient law inscribed on a bronze column around 472 BCE.

He then goes on to say (page 165 and page 166):

There was a time when intercalation was entirely neglected out of superstition, while sometimes the influence of the priests, who wanted the year to be longer or shorter to suit the tax farmers,[298] saw to it that the number of days in the year was now increased, now decreased, and under the cover of a scrupulous precision the opportunity for confusion increased.[299]

But Gaius Caesar took all this chronological inconsistency, which he found still ill-sorted and fluid, and reduced it to a regular and well-defined order;[300] in this he was assisted by the scribe Marcus Flavius, who presented a table of the individual days to Caesar in a form that allowed both their order to be determined and, once that was determined, their relative position to remain fixed.[301]

When he was on the point of starting this new arrangement, then, Gaius Caesar let pass all the days still capable of creating confusion: when that was done, the final year of confusion was extended to 443 days.[302] Then imitating the Egyptians, who alone know all things concerned with the divine, he undertook to reckon the year according to the sun, which completes its course in 365¼ days.[303]

The source says

Caesar called 46 BC the ultimus annus confusionis ("The final year of confusion")

Roman wits, however, called it the annus confusionis ("Year of Confusion").

Your ref 2 says

We think of the calendar as a universal measure of time. It's like a perfect grid that can be extended endlessly into the future. There's a website that tells me my birthday in the year 2128 will fall on a Monday.

But in antiquity, calendars were simply ways of organizing religious festivals, the terms of contracts, and other social arrangements. People knew calendars could be shifted and manipulated-even for political reasons. Priests and officials "kept" the time, and different calendars were in use throughout the world. Calendar time simply wasn't as fixed back then. An ancient calendar was more like a schedule, subject to change and revision.

So Caesar's reform was all the more remarkable. As both high priest and dictator of Rome, he had the authority to impose a whole new scheme on the Roman world. Cicero joked that this man now wished to control the very stars, which rose according to his new calendar as if by edict. Caesar's calendar still needed some minor adjustments, but Europe never got another jumbo year like 46 BC. And to this day, we are still marching along on Caesar's time.

The Longest Year in History, University of Houston scholar Richard Armstrong

Here is an academic secondary source

... our seasons come always at very nearly the same time, as fixed by our calendar, so much so that if there is any variety, we remark on it, and say that spring is late, or autumn early, this year. It needs some little historical knowledge and imagination to remind us of a time when it was not so; when months were lunar, many days were named and not numbered, and the year had so little to do with the seasons that it was quite possible for November or December to arrive before the summer was well over. Yet this was the case in the greatest civilizations of classical antiquity until a comparatively late date. For Rome, the year which we call 46 B.C. is called by Macrobius the last year of the muddled reckoning, annus confusionis ultimus, and it was 445 days long, so much had the nominal dates got behind the real ones; with the next year began the Julian reckoning, albeit with sundry boggles on the part of the Roman officials who did not quite understand it, and long delays before the whole Western world adopted it.

Footnote: Macrobius, Saturnalia 1, 14, 3: no one, except moderns who should know better, ever calls it the annus confusionis simply.

Rose HJ. The Pre-Caesarian Calendar: Facts and Reasonable Guesses. The Classical Journal. 1944 Nov 1;40(2):65-76.

"This is further evidence that temperatures will keep rising until government policies that decrease greenhouse gas emissions are actually implemented," emphasized Green.

We need to do a lot more, but we are already doing a lot; it's not inaction.

Heat waves are increasing in duration and frequency, while smashing records.

Heat waves are expected to increase in duration and frequency, but we can cope with those too. They don't kill you unless the right precautions are neglected, e.g. running in hot weather without drinking enough water, not protecting infants properly from heat, or not making sure old people take care of themselves or are looked after in hot weather.

Since 1961, Earth's glaciers lost 9 trillion tons of ice. That's the weight of 27 billion 747s.

Yes we are losing glacier ice. India especially needs to build in climate resilience for this.

Greenland — home to the second largest ice sheet on Earth — is melting at unprecedented rates.

Greenland ice is melting more this year, but last year it actually increased because of an unusual amount of snowfall in the winter of 2017-18. You need to be wary of looking at particular months or particular years and generalizing from them. Yes, the world is warming, but it is doing so as the scientists predicted; it's not something unexpected. There are plus sides too: the world has become greener as a result of CO2 fertilization. As they say there, this is an El Niño year, so it's not surprising that we get record temperatures this year, but we can also expect to get records anyway.

Warming climes have doubled the amount of land burned by wildfires in the U.S. over the last 30 years, as plants and trees, notably in California, get baked dry.

The fires in California are part of the natural order. There have been wildfires there since long before humans got to America, and part of the problem is that the vegetation has been left to grow on and on without the regular controlled fires the American Indians used. It needs careful forest management to reduce the risk. A warming world has more risk from wildfires, but the fires themselves can be prevented or controlled in the same way they are anyway in dry weather.

Its mission is to use biologically-detailed digital reconstructions and simulations of the mammalian brain to identify the fundamental principles of brain structure and function

Most neuroscientists think this is impossible with current knowledge.

This annotation paraphrases parts of the BBC Future article Will we ever ... simulate the human brain?, which is cited as a summary of the issues on page 9 of the 2015 mediation report on the Blue Brain project.

A billion dollar project claims it will recreate the most complex organ in the human body in just 10 years. But detractors say it is impossible. Who is right?

Is it even possible to build a computer simulation of the most powerful computer in the world – the 1.4-kg (3 lb) cluster of 86 billion neurons that sits inside our skulls?

The very idea has many neuroscientists in an uproar, and the HBP’s substantial budget, awarded at a tumultuous time for research funding, is not helping

The problem is that though neuroscientists have built neural nets since the 1950s, the vast majority treat each neuron as a single abstract point.

Markram wants to treat each neuron as a complex entity, together with the active genes that switch on and off inside it, the 3000 synapses that let each neuron connect with its neighbours, the ion channels (molecular gates) that allow neurons to build up a voltage by moving charged particles in and out across their membranes, and the electrical activity.

Critics say that even building a single neuron model in this way is fiendishly difficult. And we know even less about how these cells connect.

Markram's idea was to do a complete inventory of which genes are switched on in which cells in which parts of the brain, the "single-cell transcriptome". Based on that, he thinks he can recreate from scratch the electrical behaviour of each cell and how the neurons' branches grow.

Eugene Izhikevich from the Brain Corporation thinks we should be able to build a network with the connectivity and anatomy of a real brain, but that it would just be a fantastically detailed simulation of a dead brain in a vat - that it would not be possible to simulate an active brain.

Markram himself says that his aim is not to build a brain that could act like us.

“People think I want to build this magical model that will eventually speak or do something interesting,” says Markram. “I know I’m partially to blame for it – in a TED lecture, you have to speak in a very general way. But what it will do is secondary. We’re not trying to make a machine behave like a human. We’re trying to organise the data.”

Chris Eliasmith from the University of Waterloo, Canada, told BBC Future:

“The project is impressive but might leave people baffled that someone would spend a lot of time and effort building something that doesn’t do anything,”

He is involved in the IBM brain simulation called SyNAPSE which also doesn't do very much. He says

“Markram would complain that those neurons aren’t realistic enough, but throwing a ton of neurons together and approximately wiring them according to biology isn’t going to bridge this gap,”

Grandparents

This leaves out his maternal grandparents, Malcolm (1866–1954) and Mary MacLeod (née Smith; 1867–1963)

Ruttan Walker told me she often uses grief as a way to process her emotions about climate. "We have to acknowledge that we've changed our planet. We've made it more dangerous and we've done harm," she said.

We are also doing many good things. For example:

24 ways the world is getting better - good news journalists rarely share

even Wallace-Wells

Wallace-Wells is just a journalist, and gets the science wrong in a big way. It's not "even him": he greatly exaggerates, relying on sources that already exaggerate and on junk science.

For many reactions by scientists to his article that later became his book, see

Scientists explain what New York Magazine article on "The Uninhabitable Earth" gets wrong

and see my:

Deep Adaptation: A Map for Navigating Climate Tragedy

This non-peer-reviewed 'paper' relies on the worst kind of non-peer-reviewed junk science.

The author makes a big thing about it failing peer review, as if that gave it additional credentials. But it failed because it didn't meet the academic standards set by the journal, not because of the views presented. It is by a sociologist, and its main source on the science is another non-peer-reviewed article; relying on an article that was itself rejected from peer review is a big no-no in publishing. That article in turn uses the now largely disproved clathrate gun hypothesis.

It wasn't published because it fails even the most basic criteria for a peer-reviewed academic paper. Even without looking at the ideas, the fact that it relies on non-peer-reviewed material for some of its main points is already a major no-no, especially since it uses that material to support claims in areas where the author is not an expert.

Among them are the notorious melting sea ice exaggerator Peter Wadhams and the ecologist and the-end-is-nigh-fabulist Guy McPherson.

Guy McPherson's work is junk science. For example, he relies on Sam Carana's absurd use of a quadratic to extrapolate!

To see how bizarre that is, bear in mind that a quadratic always goes to infinity in both directions, future and past.

[Absurd Blog Post At Arctic News Predicts 10 °C Rise In Global Temperatures By 2026 - QUADRATIC To EXTRAPOLATE!](https://www.science20.com/print/237501)
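To illustrate why a quadratic goes to infinity in both directions, here is a minimal Python sketch. It fits a quadratic exactly through three made-up points (hypothetical anomalies of 0.0, 0.1 and 0.3 at years 0, 10 and 20; these are not Carana's data) using Lagrange interpolation, then evaluates it far outside that range. Because the leading coefficient comes out positive, the curve climbs without limit both forwards and backwards in time.

```python
from fractions import Fraction


def fit_quadratic(points):
    """Coefficients (a, b, c) of y = a*t**2 + b*t + c through exactly three (t, y) points.

    Uses Lagrange interpolation with exact fractions, so no rounding error creeps in.
    """
    pts = [(Fraction(t), Fraction(y)) for t, y in points]
    a = b = c = Fraction(0)
    for i, (ti, yi) in enumerate(pts):
        # The two other x-values define this point's Lagrange basis polynomial.
        tj, tk = (t for j, (t, _) in enumerate(pts) if j != i)
        w = yi / ((ti - tj) * (ti - tk))
        # Expand w * (t - tj) * (t - tk) = w*t**2 - w*(tj + tk)*t + w*tj*tk
        a += w
        b -= w * (tj + tk)
        c += w * tj * tk
    return a, b, c


def evaluate(coeffs, t):
    a, b, c = coeffs
    return a * t * t + b * t + c


# Hypothetical, illustrative data only: (year offset, temperature anomaly)
coeffs = fit_quadratic([(0, "0.0"), (10, "0.1"), (20, "0.3")])
for year in (-200, -100, 0, 10, 20, 100, 200):
    print(year, float(evaluate(coeffs, year)))
```

The fit reproduces the three inputs exactly, yet it gives 21 at year 200 and 19 at year -200. The same curve that "predicts" runaway future warming also "predicts" a huge anomaly two centuries in the past, which is why a quadratic fit is no basis for extrapolation.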

"I think it's clear that emissions will come down to zero and stabilize the climate sometime this century. But taking 50 years to do that will yield a different world than if we do it in 20 years. It's up to us to decide which of these worlds we want to live in."

According to the IPCC, taking 50 years to do it, with zero emissions by 2070, means we stay within 2 C. Taking 30 years, with zero emissions by 2050, keeps us within 1.5 C. Both those targets have a wide range, and the global temperature changes a lot from year to year, but the main thing is that we can stay within around 1.5 C. The IPCC also did an example worst case, which was dismantling the Paris agreement and then acting far too late, in the 2030s, in an uncoordinated way. We are not on that path. That path leads to 3 C by 2100, with little margin to improve on it.

It is a future to avoid, but it is not human extinction or the collapse of civilization. We can still feed everyone by 2100, but it would leave a less biodiverse world to the next generation, with many problems: reversing some of this century's progress towards the sustainability goals, reducing food security, and increasing global poverty, flooding, storms, drought, etc.

The IPCC’s own worst case climate change example - a 3°C rise by 2100

Models that use the status quo—a.k.a. "not changing anything" as a baseline—show that we're headed off a cliff in terms of planetary habitability.

The linked-to paper misunderstands many things. China is rapidly industrializing and at the same time rapidly increasing its renewables percentage. But until it has a very high percentage of renewables, it can't stop the increase in its CO2 emissions. That is going to happen: they pledged to peak emissions before 2030, and it's generally accepted they will. In the meantime, half of the recent increase is due to China alone. They have the largest renewables industry in the world, and it developed from almost nothing in a few years. They have to increase their pledges, but they first needed the technology in place before that was possible.

This does not mean it is impossible to stay within 1.5 C. The curves for all the scenarios are almost identical until 2030, but we are on the 3 C path now under the unconditional pledges and policies, and are on track to increase on them.

in May, an Australian think tank called climate change "a near- to mid-term existential threat to human civilization."

This has no scientific credibility. It was written by a couple of businessmen, with no scientific peer review.

Michael Mann, a respected climate scientist at Pennsylvania State University, calls their report

"overblown rhetoric, exaggeration, and unsupportable doomist framing":

Richard Betts, Professor, Met Office Hadley Centre & University of Exeter, put it like this:

The “report” is not a peer-reviewed scientific paper. It’s from some sort of “think tank” who can basically write what they like. The report itself misunderstands / misrepresents science, and does not provide traceable links to the science it is based on so it cannot easily be checked (although someone familiar with the literature can work it out, and hence see where the report’s conclusions are ramped-up from the original research).

One of their central points came from an analysis of heat waves in which about 1 in 10,000 people, such as the old and infants, would be at risk of dying if they did not take precautions. They said it meant that

"Thirty-five percent of the global land area, and 55 percent of the global population, are subject to more than 20 days a year of lethal heat conditions, beyond the threshold of human survivability,"

The paper was calibrated to 30% of us facing "deadly heat" in 2000. We didn't see 30% of the world population die in 2000. They totally misunderstood the paper.

Another example: they read a figure of 1 billion people at risk from a sea level rise of 20 meters, way beyond anything possible this century or for centuries into the future, and said this was the number of people who would be climate migrants by 2050. With sea level rise you can stay where you are if you build sea walls, and even at the highest level, with "business as usual" and the worst-case scenario, the rise is 2.5 meters.

There are many mistakes like this: they weren't scientists, were clearly not used to reading science papers, and can't have run it past anyone who understands climate science to check it.

did the one from May about how 1 million species are on track to go extinct due to human-caused environmental degradation, assuming we don't change our course and stop generating greenhouse gases (alongside other forms of environmental havoc).

First, the million species figure was just an extrapolation of the IUCN Red List to insects and minute and microscopic sea creatures. It didn't mean that more species are at risk than the IUCN Red List already says.

They analysed the reasons we continue to lose species to extinction, and found a solution. Not only that, they found a solution that is practical, feasible, makes economic sense, and has preliminary government interest too, to the extent that over 100 governments were happy to sign off on their conclusions as a “summary for policy makers”.

This was the central point they wanted to present to the world. They did the most rigorous analysis ever done of such a situation involving experts in social sciences and economics as well as the natural sciences. From their analysis it has become clear, not only why we are losing so many species, not only how to fix it, but that we all benefit too, individuals, businesses, governments.

If we want it, this is a future world we can choose for ourselves by the actions we take right now. What’s more, we have time to act too. My short summary of their central message is

“Make Biodiversity Great Again, We Know How to do it”.

Let's Save A Million Species, And Make Biodiversity Great Again, UN Report Shows How

Last year's UN report on humanity's probable failure to stop warming short of the 1.5 degree Celsius threshold had a similar message

That is what journalists said, not the report and not the co-chairs. For example this is what Jim Skea said:

“The key message is that we can keep global warming below 1.5 °C. It is possible within the laws of physics and chemistry. But it will require huge transitions in all sorts of systems, energy, land, transportation, but what the report has done is to send out a clear message to the governments that it is physically possible, it is now up to them to decide whether they want to take up the challenge.”

They said we can do it, that we can stay within 1.5 C, and they outlined four different ways to do it. The easiest is to reduce emissions to zero by 2050, and this is also the best for GDP growth. It's not more expensive; it's less expensive to do this than inaction.

As David Wallace-Wells noted in his 2019 bestseller The Uninhabitable Earth

No, we are not headed for an uninhabitable world, or the collapse of civilization. Many of these stories are based on this sensationalist book, which exaggerates climate change scenarios. The author is sometimes referred to as a “climate scientist”, but he is not. He is a general-interest journalist who says himself that he has only been interested in climate change for one to two years.

He wrote a magazine article, which he then expanded into a book on the topic; the book has been criticized by climate scientists as exaggerated and full of mistakes. He knows how to write engaging and imaginative prose, but he doesn't know how to evaluate climate change research.

Jetsun Milarepa (Tibetan: རྗེ་བཙུན་མི་ལ་རས་པ, Wylie: rje btsun mi la ras pa, 1028/40–1111/23)[1] was a Tibetan siddha, who famously was a murderer as a young man then turned to Buddhism to become an accomplished buddha despite his past. He is generally considered as one of Tibet's most famous yogis and poets, serving as an example for the Buddhist life. He was a student of Marpa Lotsawa, and a major figure in the history of the Kagyu school of Tibetan Buddhism.[1]

This is a hagiography completed in 1488, three and a half centuries after his death. It was written by an inspirational poet and nyönpa, or "religious madman", Gtsang-smyon He-ru-ka. It is a classic of Tibetan literature, but it is not a biography. This article only presents this later account.

The earliest known account of his life is strikingly different. It is attributed to Milarepa's principal disciple, Gampopa, though it is probably lecture notes taken by one of Gampopa's students.

In this earliest account, he is not a murderer. There is no mention of him killing anyone with black magic, or of his trials constructing towers under Marpa. It's his mother who dies when he is young, not his father.

Andrew Quintman, whose thesis and then book were about Milarepa's life, hasn't attempted to deduce his "real life", though he does say there is good evidence he existed as a historical figure.

For an expanded version of this article with cites, and discussion of the earlier accounts, see Milarepa

10,000 are passing inside the orbit of Neptune on any given day.

A better cite, as The Sky at Night episode is no longer available to watch:

The hunt is now on for more 'Oumuamua-like objects. Extrapolating from this one discovery, there ought to be some 10,000 of them passing through our Solar System inside the orbit of Neptune.

'Oumuamua: 'space cigar's' tumble hints at violent past - BBC News

Breaching a 'carbon threshold' could lead to mass extinction

If it is true, then it plays out over a timescale of around 10,000 years, during which slightly less CO2 is sequestered in the oceans because of acidification. There are also many different hypotheses about why the mass extinctions he studies happened.

It is a simple model that looks only at inputs to the ocean and at changes in the rate at which carbon is added and removed. Such "toy models" often leave out important processes that would change the picture. He tries to find a single, uniform explanation for many past extinctions. So at present this is leading-edge and speculative science.
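To make the idea of a "toy model" concrete, here is a minimal sketch of a generic one-box carbon reservoir. It assumes a constant fractional removal rate k and an arbitrary input function. This is not Rothman's published model. The function name simulate_box, the parameter values and the units are all illustrative. The sketch integrates dC/dt = inflow(t) - k*C with the forward Euler method.

```python
# Hypothetical one-box toy model of excess carbon in a reservoir (arbitrary units).
# NOT Rothman's published model; it only illustrates tracking carbon that is
# added to and removed from a single reservoir.
from typing import Callable, List


def simulate_box(
    inflow: Callable[[float], float],  # carbon added per unit time at time t
    k: float,                          # fractional removal rate per unit time
    c0: float,                         # initial carbon excess in the reservoir
    dt: float,                         # time step
    steps: int,                        # number of Euler steps
) -> List[float]:
    """Integrate dC/dt = inflow(t) - k*C with forward Euler; return all C values."""
    c = c0
    history = [c]
    for i in range(steps):
        t = i * dt
        c += dt * (inflow(t) - k * c)
        history.append(c)
    return history


if __name__ == "__main__":
    # Constant input: the reservoir relaxes toward the steady state inflow/k.
    steady = simulate_box(lambda t: 2.0, k=0.5, c0=0.0, dt=0.1, steps=200)
    print(round(steady[-1], 3))

    # Pulse of input that stops at t = 10: the excess then decays on a timescale of 1/k.
    pulse = simulate_box(lambda t: 2.0 if t < 10 else 0.0, k=0.5, c0=0.0, dt=0.1, steps=400)
    print(max(pulse), pulse[-1])
```

With a constant input of 2.0 and k = 0.5, the reservoir settles toward inflow/k = 4.0. With a pulse of input, the excess decays on a timescale of about 1/k. Everything a real carbon-cycle model would add is deliberately left out, including ocean chemistry, sediments and feedbacks. That is exactly the kind of simplification discussed above.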

He published a previous paper in 2017. It has had only 21 cites in Google Scholar, most of them not closely related to the topic.

This is his 2019 press release

http://news.mit.edu/2019/carbon-threshold-mass-extinction-0708

This is his 2017 press release http://news.mit.edu/2017/mathematics-predicts-sixth-mass-extinction-0920

and paper

https://advances.sciencemag.org/content/advances/3/9/e1700906.full.pdf

It says at the end: "Any spike would reach its maximum after about 10,000 years. Hopefully that would give us time to find a solution."

With 10,000 years there would be a lot we could do, perhaps including technological ways to remove CO2 directly from the water. There isn't much research on this yet, but there are a few projects. That is enough to suppose that a future civilization may well be able to do it, and it is possible we are on the point of doing it already.

This is direct extraction of CO2 from seawater

This is a similar idea: using hydrogen from seawater plus CO2 to make methanol. CO2 is 125 times more concentrated in seawater than in the atmosphere, which may make it easier to extract from the sea than from the air.
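The methanol route can be checked with a simple mass balance, assuming direct hydrogenation of CO2 (CO2 + 3 H2 → CH3OH + H2O). This sketch uses rounded standard molar masses, and the function name is hypothetical. It estimates how much CO2 and hydrogen each tonne of methanol would take up.

```python
# Mass balance for CO2 + 3 H2 -> CH3OH + H2O (standard stoichiometry).
# Molar masses in g/mol, rounded standard values.
M_CO2 = 44.009
M_H2 = 2.016
M_CH3OH = 32.042
M_H2O = 18.015


def feed_per_tonne_methanol() -> dict:
    """Tonnes of CO2 and H2 consumed, and H2O produced, per tonne of methanol."""
    mol = 1.0 / M_CH3OH  # moles of methanol per unit mass; units cancel in the ratios
    return {
        "co2_t": mol * M_CO2,      # 1 CO2 per CH3OH
        "h2_t": mol * 3 * M_H2,    # 3 H2 per CH3OH
        "h2o_t": mol * M_H2O,      # 1 H2O by-product per CH3OH
    }


if __name__ == "__main__":
    for name, tonnes in feed_per_tonne_methanol().items():
        print(f"{name}: {tonnes:.3f}")
```

On these assumptions, each tonne of methanol locks up about 1.37 t of CO2 and needs about 0.19 t of hydrogen. The masses balance: reactants and products both come to 50.057 g per mole of methanol.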

https://arstechnica.com/science/2019/06/creative-thinking-researchers-propose-solar-methanol-island-using-ocean-co%E2%82%82/ https://www.pnas.org/content/116/25/12212

You can also use seawater plus scrap iron or aluminium to turn CO2 from some other source (a power station?) into bicarbonates that counteract the acidity of the oceans. This traps the carbon as a mineral called dawsonite, which is a natural component of Earth's crust.

https://www.youtube.com/watch?v=GsCZm5QPxO8

https://www.sciencedaily.com/releases/2018/06/180625192825.htm

Given the current annual production rate for aluminium and its recycling rate, this technology could be used to mineralise 20–45 million tonnes of carbon dioxide per annum, which would make it the third largest carbon-dioxide-utilising chemical process. Analysis of the energetics of the electrochemical mineralisation shows it is 33% more energy efficient to use waste aluminium this way rather than to recycle it. A similar analysis for using non-recycled scrap steel suggests this could capture 822 million tonnes of carbon dioxide, enough to negate the effect of refineries worldwide. Even if the required electrical energy came from a coal-fuelled power station, the overall process using either aluminium or steel is carbon negative, with more carbon dioxide being mineralised than would be released by the power station.

Paper here: https://onlinelibrary.wiley.com/doi/pdf/10.1002/cssc.201702087

The report estimated 86,000 casualties, including 3,500 fatalities, 715,000 damaged buildings, and 7.2 million people displaced, with two million of those seeking shelter, primarily due to the lack of utility services. Direct economic losses, according to the report, would be at least $300 billion

This is not modeling a single event. The cited source itself explains that it models all three segments of the fault hypothetically rupturing as a single fault with a magnitude of 7.7. In reality it would be three separate earthquakes.

The combined rupture of all three segments simultaneously is designed to approximate the sequential rupture of all three segments over time. The magnitude of Mw7.7 is retained for the combined rupture.

It also explains that these are mainly minor injuries.

Nearly 86,000 total casualties are expected for the 2:00AM event. A large portion of these casualties are minor injuries, approximately 63,300, though 3,500 fatalities are also expected.

It goes on to explain that these are only the immediate casualties from building and bridge damage:

Those estimates include casualties resulting from structural building and bridge damage only. Therefore, the estimates do not include injuries and fatalities related to transportation accidents, fires, or hazmat exposure. This section deals only with injuries. Fatalities are addressed under mortuary services. The injuries and casualties estimated by the model are only for those that occur at the time of the event. The model does not provide for increases in these numbers that occur post event. For example, those that sustain injuries may die later, or injuries incurred as a result of response activities may result in fatalities.
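The casualty figures quoted above can be broken down with a little arithmetic. This sketch assumes that the roughly 86,000 total covers both injuries and deaths, as the Wikipedia summary implies ("including 3,500 fatalities"). The source figures are approximate, so the results are too.

```python
# Breakdown of the scenario's casualty figures as quoted from the report.
# Assumption: the ~86,000 total includes both the 63,300 minor injuries
# and the 3,500 fatalities.
TOTAL_CASUALTIES = 86_000  # "nearly 86,000"
MINOR_INJURIES = 63_300    # "approximately 63,300"
FATALITIES = 3_500

other_injuries = TOTAL_CASUALTIES - MINOR_INJURIES - FATALITIES  # more serious injuries
minor_share = MINOR_INJURIES / TOTAL_CASUALTIES
fatality_share = FATALITIES / TOTAL_CASUALTIES

print(f"non-minor injuries: ~{other_injuries:,}")
print(f"minor injuries: {minor_share:.0%} of casualties")
print(f"fatalities: {fatality_share:.1%} of casualties")
```

On that reading:

- about 74% of the casualties are minor injuries;
- about 4% are deaths;
- roughly 19,200 are more serious injuries.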

Under mortuary services it has this table which breaks down the 3,500 by state:

That’s for the eight states of Missouri, Illinois, Indiana, Kentucky, Tennessee, Alabama, Mississippi and Arkansas, with a total population of 43 million according to the 2000 data the report was using.

Most would be in Tennessee, which had a population of 5.69 million and would have 1,319 deaths in this scenario.

By comparison, the US yearly death rate is 8.1 per thousand, so for Tennessee that is about 46,000 deaths a year.
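The comparison with ordinary mortality can be sketched as follows. As in the text, it assumes that the national crude death rate of 8.1 per 1,000 applies to each state's population, which is only an approximation. The helper names are hypothetical.

```python
# Compare the scenario's immediate deaths with ordinary annual deaths.
# Assumption: the US crude death rate (8.1 per 1,000) applies to the
# state populations, as in the text; actual state rates differ.
DEATH_RATE_PER_1000 = 8.1


def annual_deaths(population: float, rate_per_1000: float = DEATH_RATE_PER_1000) -> float:
    """Expected deaths per year for a population at a given crude death rate."""
    return population * rate_per_1000 / 1000


def scenario_share(scenario_deaths: float, population: float) -> float:
    """Scenario deaths as a fraction of one ordinary year's deaths."""
    return scenario_deaths / annual_deaths(population)


if __name__ == "__main__":
    # Tennessee: 5.69 million people, 1,319 deaths in the scenario
    print(f"{annual_deaths(5.69e6):,.0f} ordinary deaths per year")
    print(f"{scenario_share(1_319, 5.69e6):.1%} of one year's deaths")
    # All eight states: 43 million people, 3,500 deaths in the scenario
    print(f"{scenario_share(3_500, 43e6):.1%} of one year's deaths")
```

For Tennessee, the scenario's 1,319 deaths come to roughly 3% of one ordinary year's deaths. For the eight states together, 3,500 deaths set against about 348,000 a year is about 1%.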

In the conclusion it says

“Some impacts may be mitigated by retrofitting infrastructure in the most vulnerable areas. By addressing infrastructure vulnerability prior to such a catastrophic event, the consequences described in this report may be reduced substantially. The resource gaps and infrastructure damage described in this analysis present significant unresolved strategic and tactical challenges to response and recovery planners. It is highly unlikely that the resource gaps identified can be closed without developing new strategies and tactics and expanded collaborative relationships.”

A 2015 study suggested that the AMOC has weakened by 15-20% in 200 years

This doesn't seem to have been updated since 2015. The 2018 IPCC report (chapter 3) says:

It is more likely than not that the Atlantic Meridional Overturning Circulation (AMOC) has been weakening in recent decades, given the detection of the cooling of surface waters in the North Atlantic and evidence that the Gulf Stream has slowed since the late 1950s. There is only limited evidence linking the current anomalously weak state of AMOC to anthropogenic warming. It is very likely that the AMOC will weaken over the 21st century. The best estimates and ranges for the reduction based on CMIP5 simulations are 11% (1–24%) in RCP2.6 and 34% (12–54%) in RCP8.5 (AR5). There is no evidence indicating significantly different amplitudes of AMOC weakening for 1.5°C versus 2°C of global warming.

Hoegh-Guldberg, O., Jacob, D., Taylor, M., Bindi, M., Brown, S., Camilloni, I., Diedhiou, A., Djalante, R., Ebi, K., Engelbrecht, F. and Guiot, K., 2018. Impacts of 1.5 °C global warming on natural and human systems. Chapter 3, section 3.3.8

Apart from a few asteroids whose densities have been investigated,[6] one has to resort to enlightened guesswork.

The field has moved on a lot since then. This gives an idea of the range of values for NEOs:

So, for a 200 meter asteroid the density is between 1 and 3 g/cm³, most likely around 1.75, with a small chance of an iron asteroid at 6 to 7 g/cm³. For a 20 meter asteroid it’s a similar range, but with two very likely densities of around 2.2 and around 2.8 g/cm³ (just going by eye from that diagram). The 100 meter size range is similar.
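Density matters because, for a given size, it sets the mass and hence the impact energy. This rough sketch makes two assumptions:

- the densities above are in g/cm³, the usual unit for asteroid bulk density;
- each body is a uniform sphere (real asteroids are irregular).

The 6.5 g/cm³ value is simply the midpoint of the 6 to 7 range.

```python
import math


def sphere_mass_kg(diameter_m: float, density_g_cm3: float) -> float:
    """Mass of a uniform sphere; density converted from g/cm^3 to kg/m^3."""
    radius = diameter_m / 2
    volume_m3 = 4 / 3 * math.pi * radius ** 3
    return volume_m3 * density_g_cm3 * 1000


if __name__ == "__main__":
    # 200 m body: likely stony/rubble densities versus the rarer iron case
    for rho in (1.75, 3.0, 6.5):
        print(f"200 m at {rho} g/cm^3: {sphere_mass_kg(200, rho):.2e} kg")
    # 20 m body at the two likely densities read off the diagram
    for rho in (2.2, 2.8):
        print(f"20 m at {rho} g/cm^3: {sphere_mass_kg(20, rho):.2e} kg")
```

For a 200 meter body, going from about 1.75 to an iron-like 6.5 g/cm³ multiplies the mass by about 3.7. That takes it from roughly 7.3×10⁹ kg to about 2.7×10¹⁰ kg.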

It is based on this analysis by structural type and composition.

Although numerous studies point to resistance to some of Mars conditions, they do so separately, and none has considered the full range of Martian surface conditions, including temperature, pressure, atmospheric composition, radiation, humidity, oxidizing regolith, and others, all at the same time and in combination.[230] Laboratory simulations show that whenever multiple lethal factors are combined, the survival rates plummet quickly.[21]

The researchers consider that their work strongly supports the notion that terrestrial life can most likely adapt physiologically to live on Mars:

"This work strongly supports the interconnected notions (i) that terrestrial life most likely can adapt physiologically to live on Mars (hence justifying stringent measures to prevent human activities from contaminating / infecting Mars with terrestrial organisms); (ii) that in searching for extant life on Mars we should focus on "protected putative habitats"; and (ii) that early-originating (Noachian period) indigenous Martian life might still survive in such micro-niches despite Mars' cooling and drying during the last 4 billion years"

de Vera, Jean-Pierre; Schulze-Makuch, Dirk; Khan, Afshin; Lorek, Andreas; Koncz, Alexander; Möhlmann, Diedrich; Spohn, Tilman (2014). "Adaptation of an Antarctic lichen to Martian niche conditions can occur within 34 days". Planetary and Space Science. 98: 182–190. Bibcode:2014P&SS...98..182D. doi:10.1016/j.pss.2013.07.014. ISSN 0032-0633.

Currently, the surface of Mars is bathed with radiation, and when reacting with the perchlorates on the surface, it may be more toxic to microorganisms than thought earlier.[11][12] Therefore, the consensus is that if life exists —or existed— on Mars, it could be found or is best preserved in the subsurface, away from present-day harsh surface processes.

This is the old view from around 2007. Nowadays the surface is also thought to be of interest in the search for present-day life on Mars.

Cites here are from "A new analysis of Mars “special regions”: findings of the second MEPAG Special Regions Science Analysis Group (SR-SAG2)." 2014

(see section 2.1, page 891)

Finding 2-1: Modern martian environments may contain molecular fuels and oxidants that are known to support metabolism and cell division of chemolithoautotrophic microbes on Earth

(see 3.6. Ionizing radiation at the surface, page 891 of [1]).

Finding 3-8: From MSL RAD measurements, ionizing radiation from GCRs at Mars is so low as to be negligible. Intermittent SPEs can increase the atmospheric ionization down to ground level and increase the total dose, but these events are sporadic and last at most a few (2–5) days. These facts are not used to distinguish Special Regions on Mars.

Over a 500-year time frame, the martian surface could be estimated to receive a cumulative ionizing radiation dose of less than 50 Gy, much lower than the LD90 (lethal dose where 90% of subjects would die) for even a radiation-sensitive bacterium such as E. coli (LD90 of ~200–400 Gy)
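The margin implied by these dose figures can be checked with simple arithmetic. This sketch assumes that the dose accumulates linearly and ignores any cell repair or dormancy, so it gives only a rough bound. As in the quoted text, 50 Gy is treated as an upper limit.

```python
# Back-of-envelope check on the SR-SAG2 surface radiation figures.
# Assumptions: dose accumulates linearly with time and there is no repair;
# 50 Gy over 500 years is an upper bound taken from the quoted text.
CUMULATIVE_DOSE_GY = 50.0       # "less than 50 Gy" over 500 years
PERIOD_YEARS = 500.0
LD90_RANGE_GY = (200.0, 400.0)  # quoted E. coli LD90 range

annual_dose = CUMULATIVE_DOSE_GY / PERIOD_YEARS                 # Gy per year, upper bound
margins = [ld90 / CUMULATIVE_DOSE_GY for ld90 in LD90_RANGE_GY]  # LD90 / 500-year dose
years_to_ld90 = [ld90 / annual_dose for ld90 in LD90_RANGE_GY]   # lower bound on years

print(f"dose rate: <= {annual_dose:.2f} Gy/yr")
print(f"LD90 is {margins[0]:.0f}-{margins[1]:.0f}x the 500-year dose")
print(f"years to reach LD90: >= {years_to_ld90[0]:,.0f}-{years_to_ld90[1]:,.0f}")
```

On these assumptions:

- the surface dose is at most about 0.1 Gy per year;
- the E. coli LD90 is 4 to 8 times the 500-year total;
- reaching it would take at least 2,000 to 4,000 years.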

(see 3.7. Polyextremophiles: combined effects of environmental stressors of [1]).

Finding 3-9: The effects on microbial physiology of more than one simultaneous environmental challenge are poorly understood. Communities of organisms may be able to tolerate simultaneous multiple challenges more easily than individual challenges presented separately. What little is known about multiple resistance does not affect our current limits of microbial cell division or metabolism in response to extreme single parameters.

All citing:

Rummel, J.D., Beaty, D.W., Jones, M.A., Bakermans, C., Barlow, N.G., Boston, P.J., Chevrier, V.F., Clark, B.C., de Vera, J.P.P., Gough, R.V. and Hallsworth, J.E., 2014. A new analysis of Mars “special regions”: findings of the second MEPAG Special Regions Science Analysis Group (SR-SAG2).

The search for evidence of habitability, taphonomy (related to fossils), and organic compounds on Mars is now a primary NASA and ESA objective.

Since the article is about Life on Mars, it should surely mention the first of NASA’s four science goals:

Goal I: determine if Mars ever supported life

- Objective A: determine if environments having high potential for prior habitability and preservation of biosignatures contain evidence of past life.

- Objective B: determine if environments with high potential for current habitability and expression of biosignatures contain evidence of extant life.

From: Hamilton, V.E., Rafkin, S., Withers, P., Ruff, S., Yingst, R.A., Whitley, R., Center, J.S., Beaty, D.W., Diniega, S., Hays, L. and Zurek, R., Mars Science Goals, Objectives, Investigations, and Priorities: 2015 Version.

There is an almost universal consensus among scholars that the Exodus story is best understood as myth

This is an oversimplification. It's possible that some of the Israelite population did come from Egypt, possibly many thousands of them, and that the story contains elements drawn from the experiences of those who did.

"While there is a consensus among scholars that the Exodus did not take place in the manner described in the Bible, surprisingly most scholars agree that the narrative has a historical core, andthat some of the highland settlers came, one wayor another, from Egypt "