- Apr 2024

-

huyenchip.com huyenchip.com

-

[M] You’re building a neural network and you want to use both numerical and textual features. How would you process those different features?

I would pass textual features through a language model encoder in order to represent them as dense vectors (using models such as word2vec, BERT, ...) and concatenate the numerical features to the resulting test embeddings.

-

-

huyenchip.com huyenchip.com

-

[E] Compare batch norm and layer norm.

Layer norm is more adapted when the batch size is small (because then the batch norm metrics are too noisy)

-

- Sep 2022

-

huyenchip.com huyenchip.com

-

[M] For logistic regression, why is log loss recommended over MSE (mean squared error)?

-

-

huyenchip.com huyenchip.com

-

[E] Explain the chain rule.

Given f,g the chain rule allows to calculate the derivative of f(g(x)): $$ \frac{df}{dx} = \frac{df}{dg} \frac{dg}{dx} $$

derivative of the exterior times derivative of the interior: $$ ( f(g(x) )' = f'(g(x))g'(x) $$

-

- Aug 2022

-

huyenchip.com huyenchip.com

-

collaborative filtering

Collaborative filtering is the application of matrix factorization to identify the relationship between items’ and users’ entities

-

- Jun 2022

-

huyenchip.com huyenchip.com

-

[E] A lot of machine learning models aim to approximate probability distributions. Let’s say P is the distribution of the data and Q is the distribution learned by our model. How do measure how close Q is to P?

Using Kullback–Leibler divergence:

$$ D_{KL}(P, Q) = \sum P(x) log(\frac{P(x)}{Q(x)}) $$

-

[M] You want to build a model to classify whether a comment is spam or not spam. You have a dataset of a million comments over the period of 7 days. You decide to randomly split all your data into the train and test splits. Your co-worker points out that this can lead to data leakage. How?

It might be that comments are not independent with respect to time: spams are not uniformly distributed w.r.t time

-

-

huyenchip.com huyenchip.com

-

[E] Why does an ML model’s performance degrade in production?

Concept drift: distribution of the target changes

Predictors drift: distribution of the predictors changes

-

-

huyenchip.com huyenchip.com

-

Explain frequentist vs. Bayesian statistics

Frequentist: probabilities of events are derived from their observed frequencies of occurrence after an infinite number of trials (taking to the limit).

It calculates the probability that the experiment would have the same outcomes if you were to replicate the same conditions again. This model only uses data from the current experiment when evaluating outcomes.

Bayesian: interpret probability distributions as quantifying our uncertainty about the world. In particular, this means that we can now meaningfully talk about probability distributions of parameters, since even though the parameter is fixed, our knowledge of its true value may be limited.

We have to introduce the prior distribution into our analysis - this reflects our belief about the value of p before seeing the actual values of the Xi. The role of the prior is often criticised in the frequentist approach, as it is argued that it introduces subjectivity into the otherwise austere and object world of probability.

-

-

huyenchip.com huyenchip.com

-

[E] Given a uniform random variable X

0 because X is continuous and the probability of a continuous random variable taking exactly a value is zero (integral of a continuous distribution from a to a is zero)

-

-

huyenchip.com huyenchip.com

-

[E] If the labels are known, how would you evaluate the performance of your k-means clustering algorithm?

Consider that as a classification problem so use a regular classification metric such as F1 score

-

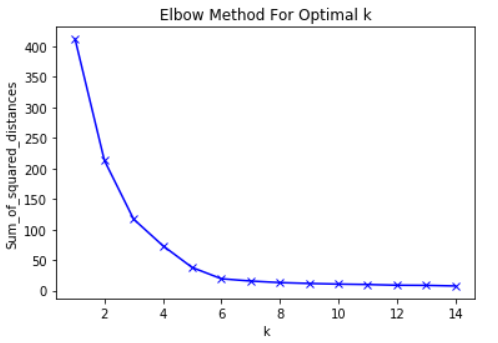

[E] How would you choose the value of k?

Using the elbow method: we plot sum of squared distances of samples to their closest cluster center versus number of clusters and look at inflection point

-

- May 2022

-

huyenchip.com huyenchip.com

-

[E] What does it mean when a function is differentiable?

That the limit

$$ \frac{ f(x+h) - f(x) }{ h } $$ when h -> 0 exists for every x

-

[E] Jensen’s inequality forms the basis for many algorithms for probabilistic inference, including Expectation-Maximization and variational inference.. Explain what Jensen’s inequality is.

Soient f une fonction convexe, (x1, … , xn) un n-uplet de réels appartenant à l'intervalle de définition de f et (λ1, … , λn) un n-uplet de réels positifs tels que:

$$ \sum_{i} \lambda_{i} = 1 $$

Alors

$$ f( \sum_{i} \lambda_{i} x_{i} ) \leq \sum_{i} \lambda_{i} f(x_{i} ) $$

-

[E] Why is convexity desirable in an optimization problem?

Cause any gradient based algo is certain to find the global extremum at some point

-

[M] Give an example of non-differentiable functions that are frequently used in machine learning. How do we do backpropagation if those functions aren’t differentiable?

relu activation function is not differentiable at 0

We use the fact that it is piecewise differentiable and connect the pieces derivatives by assuming a value of the derivative where it's not defined (such as relu'(0) = 0)

-

[E] Give an example of when a function doesn’t have a derivative at a point.

f(x) = abs(x) at 0

-

-

huyenchip.com huyenchip.com

-

Without explicitly using the equation for calculating determinants, what can we say about this matrix’s determinant?

Rank(M) < dim(M) as first column vector and third one are linearly dependent, thus the determinant is 0

-

[E] What does the determinant of a matrix represent?

The determinant of a matrix is the factor by which areas are scaled by this matrix.

-

[E] What’s the inverse of a matrix? Do all matrices have an inverse? Is the inverse of a matrix always unique?

if matrices are linear transformations such that Au = v, matrix inverse A' of A is the transformation such that A'v = u

Only square matrices with non zero determinant have an inverse

Matrix inverse is unique only for bijections

-

[E] Why do we say that matrices are linear transformations?

$$ A \lambda (u + v) = \lambda Au + \lambda Av $$

-

-

huyenchip.com huyenchip.com

-

[E] What does it mean for two vectors to be linearly independent?

it means that they are not proportional, i.e there is no number c such that u = cv

-

[E] Given a vector u

$$ v = \frac{u}{\mid \mid u \mid \mid} $$

-

[E] What’s the geometric interpretation of the dot product of two vectors?

it represents the product of the length of one vector and the projection of another on the former

dot product of two colinear vectors is the product of their length while the dot product of two orthogonal vectors is zero

-

-

huyenchip.com huyenchip.com

-

[E] Why is it empirical?

Because we usually don't know $$ P(x,y) $$ so we take the average loss over the training set, called empirical risk

-

[E] What’s the risk in empirical risk minimization?

Expectation of the loss function

$$ R(h) = E[L(h(x), y)] = \int L(h(x), y) dP(x,y) $$

-