Author response:

The following is the authors’ response to the current reviews.

eLife Assessment

This study offers valuable insights into how humans detect and adapt to regime shifts, highlighting distinct contributions of the frontoparietal network and ventromedial prefrontal cortex to sensitivity to signal diagnosticity and transition probabilities. The combination of an innovative task design, behavioral modeling, and model-based fMRI analyses provides a solid foundation for the conclusions; however, the neuroimaging results have several limitations, particularly a potential confound between the posterior probability of a switch and the passage of time that may not be fully controlled by including trial number as a regressor. The control experiments intended to address this issue also appear conceptually inconsistent and, at the behavioral level, while informing participants of conditional probabilities rather than requiring learning is theoretically elegant, such information is difficult to apply accurately, as shown by well-documented challenges with conditional reasoning and base-rate neglect. Expressing these probabilities as natural frequencies rather than percentages may have improved comprehension. Overall, the study advances understanding of belief updating under uncertainty but would benefit from more intuitive probabilistic framing and stronger control of temporal confounds in future work.

We thank the editors for the assessment. The editors added several limitations based on the comments of the new Reviewer 3 in this round, which we address below.

With regard to temporal confounds, we clarified in the main text and in our response to Reviewer 3 that we had already addressed the potential confound between the posterior probability of a switch and the passage of time in GLM-2 by including the intertemporal prior. After adding the intertemporal prior to the GLM, we still observed the same fMRI results for probability estimates. In addition, we performed two other robustness checks, which are described in the manuscript.

With regard to response mode (probability estimation rather than choice or indicating natural frequencies), we wish to point out that previous research by Massey and Wu (2005), on which the current study was based, addressed the concern that participants' system-neglect tendencies might be due to the mode of information delivery, namely indicating beliefs by reporting probability estimates rather than through choice or another response mode. Massey and Wu (2005, Study 3) found the same biases when participants performed a choice task that did not require them to indicate probability estimates.

With regard to the control experiments, they were in fact not intended to address the confound between posterior probability and the passage of time. Rather, they aimed to test whether the neural findings were unique to change detection (Experiment 2) and to address visual and motor confounds (Experiment 3). These aims and the results of the control experiments are described on pages 18-19.

Finally, we wish to highlight that we performed detailed model comparisons following Reviewer 2’s suggestions. Although Reviewer 2 was unable to re-review the manuscript, we believe this analysis provides insight into the literature on change detection. See “Incorporating signal dependency into system-neglect model led to better models for regime-shift detection” (p.27-30). The model comparison showed that system-neglect models that incorporate signal dependency describe participants’ probability estimates better than the original system-neglect model. This suggests that people respond to change-consistent and change-inconsistent signals differently when judging whether the regime has changed. This was not reported in previous behavioral studies and was largely inspired by the neural finding on signal dependency in the frontoparietal cortex. It indicates that neural findings can provide novel insights into computational modeling of behavior.

Public Reviews:

Reviewer #1 (Public review):

Summary:

The study examines human biases in a regime-change task, in which participants have to report the probability of a regime change in the face of noisy data. The behavioral results indicate that humans display systematic biases, in particular, overreaction in stable but noisy environments and underreaction in volatile settings with more certain signals. fMRI results suggest that a frontoparietal brain network is selectively involved in representing subjective sensitivity to noise, while the vmPFC selectively represents sensitivity to the rate of change.

Strengths:

- The study relies on a task that measures regime-change detection primarily based on descriptive information about the noisiness and rate of change. This distinguishes the study from prior work using reversal-learning or change-point tasks in which participants are required to learn these parameters from experiences. The authors discuss these differences comprehensively.

- The study uses a simple Bayes-optimal model combined with model fitting, which seems to describe the data well. The model is comprehensively validated.

- The authors apply model-based fMRI analyses that provide a close link to behavioral results, offering an elegant way to examine individual biases.

We thank the reviewer for the comments.

Weaknesses:

The authors have adequately addressed most of my prior concerns.

We thank the reviewer for recognizing our effort in addressing these concerns.

My only remaining comment concerns the z-test of the correlations. I agree with the non-parametric test based on bootstrapping at the subject level, providing evidence for significant differences in correlations within the left IFG and IPS.

However, the parametric test seems inadequate to me. The equation presented is described as the Fisher z-test, but the numerator uses the raw correlation coefficients (r) rather than the Fisher-transformed values (z). To my understanding, the subtraction should involve the Fisher z-scores, not the raw correlations.

More importantly, the Fisher z-test in its standard form assumes that the correlations come from independent samples, as reflected in the denominator (which uses the n of each independent sample). However, in my opinion, the two correlations are not independent but computed within-subject. In such cases, parametric tests should take into account the dependency. I believe one appropriate method for the current case (correlated correlation coefficients sharing a variable [behavioral slope]) is explained here:

Meng, X.-l., Rosenthal, R., & Rubin, D. B. (1992). Comparing correlated correlation coefficients. Psychological Bulletin, 111(1), 172-175. https://doi.org/10.1037/0033-2909.111.1.172

It should be implemented here:

Diedenhofen B, Musch J (2015) cocor: A Comprehensive Solution for the Statistical Comparison of Correlations. PLoS ONE 10(4): e0121945. https://doi.org/10.1371/journal.pone.0121945

My recommendation is to verify whether my assumptions hold, and if so, perform a test that takes correlated correlations into account. Or, to focus exclusively on the non-parametric test.

In any case, I recommend a short discussion of these findings and how the authors interpret that some of the differences in correlations are not significant.

Thank you for the careful check. Yes, this was indeed a mistake on our part. We also agree that the two correlations are not independent. We therefore replaced the test with one that accounts for dependent correlations, following Meng et al. (1992) as suggested by the reviewer.

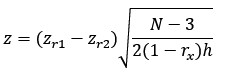

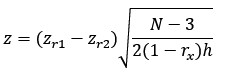

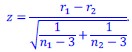

We referred to the correlation between neural and behavioral sensitivity at change-consistent (blue) signals as , and that at change-inconsistent (red) signals as 𝑟<sub>𝑟𝑒𝑑</sub>. To statistically compare these two correlations, we adopted the approach of Meng et al. (1992), which specifically tests differences between dependent correlations according to the following equation

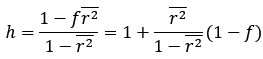

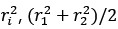

where is the number of subjects, 𝑧<sub>𝑟𝑖</sub> is the Fisher z-transformed value of 𝑟<sub>𝑖</sub>, 𝑟<sub>1</sub> = 𝑟<sub>𝑏𝑙𝑢𝑒</sub> and 𝑟<sub>2</sub> = 𝑟<sub>𝑟𝑒𝑑</sub>. 𝑟<sub>𝑥</sub> is the correlation between the neural sensitivity at change-consistent signals and change-inconsistent signals.

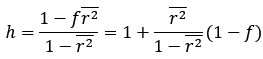

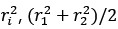

Where  is the mean of the

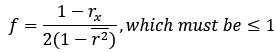

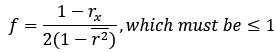

is the mean of the  , and 𝑓 should be set to 1 if > 1.

, and 𝑓 should be set to 1 if > 1.

We found that the difference in correlation was significant in two of the five ROIs in the frontoparietal network, namely the left IFG and left IPS (one-tailed z test; left IFG: 𝑧 = 1.8908, 𝑝 = 0.0293; left IPS: 𝑧 = 2.2584, 𝑝 = 0.0049). For the remaining three ROIs, the difference in correlation was not significant (dmPFC: 𝑧 = 0.9522, 𝑝 = 0.1705; right IFG: 𝑧 = 0.9860, 𝑝 = 0.1621; right IPS: 𝑧 = 1.4833, 𝑝 = 0.0690). We chose a one-tailed test because we already knew that the correlation under the blue signals was significantly greater than 0. These updated results are consistent with the nonparametric tests we had already performed, and we will include them in the revised manuscript.
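To make the computation of the Meng et al. (1992) statistic concrete, the following is a minimal Python sketch of the test for two dependent correlations that share one variable. The function name and the input values are hypothetical and chosen only for illustration; they are not the study's data, and the one-tailed p-value assumes the hypothesis 𝑟<sub>1</sub> > 𝑟<sub>2</sub>.

```python
import math


def meng_dependent_corr_test(r1, r2, rx, n):
    """Meng, Rosenthal & Rubin (1992) z-test for two dependent correlations.

    r1, r2: correlations of two predictors with the same outcome variable.
    rx:     correlation between the two predictors (must be < 1).
    n:      number of subjects.
    Returns (z, one-tailed p for the hypothesis r1 > r2).
    """
    z1, z2 = math.atanh(r1), math.atanh(r2)            # Fisher z-transform
    r2_bar = (r1 ** 2 + r2 ** 2) / 2.0                 # mean of squared correlations
    f = min((1.0 - rx) / (2.0 * (1.0 - r2_bar)), 1.0)  # f is capped at 1
    h = (1.0 - f * r2_bar) / (1.0 - r2_bar)
    z = (z1 - z2) * math.sqrt((n - 3) / (2.0 * (1.0 - rx) * h))
    p_one_tailed = 0.5 * math.erfc(z / math.sqrt(2.0))  # upper-tail normal probability
    return z, p_one_tailed


# Hypothetical inputs, used only to exercise the function.
z, p = meng_dependent_corr_test(r1=0.5, r2=0.2, rx=0.3, n=30)
print(f"z = {z:.4f}, one-tailed p = {p:.4f}")
```

The statistic is zero when the two correlations are equal and changes sign when they are swapped, which is a quick sanity check on any implementation.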

Reviewer #3 (Public review):

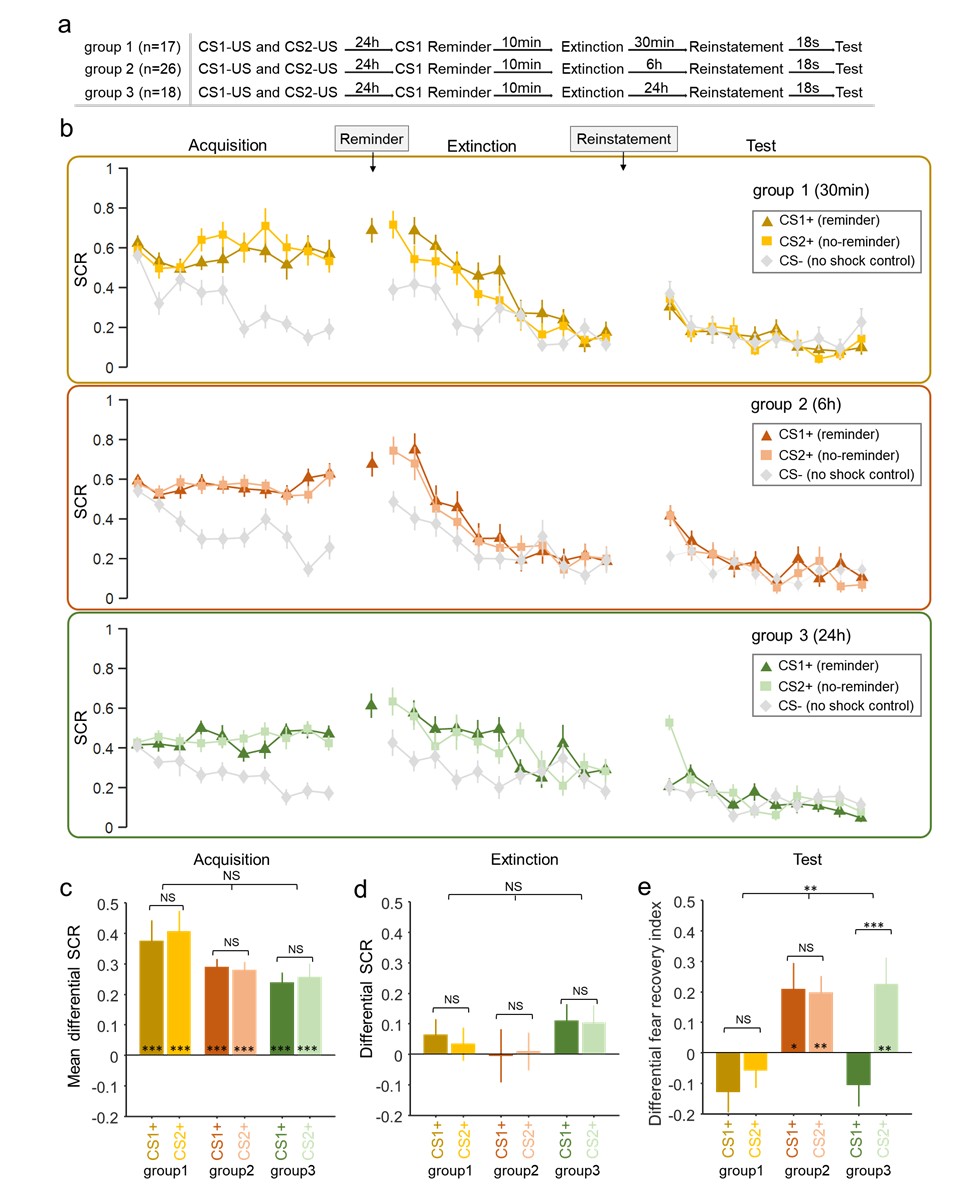

This study concerns how observers (human participants) detect changes in the statistics of their environment, termed regime shifts. To make this concrete, a series of 10 balls are drawn from an urn that contains mainly red or mainly blue balls. If there is a regime shift, the urn is changed over (from mainly red to mainly blue) at some point in the 10 trials. Participants report their belief that there has been a regime shift as a % probability. Their judgement should (mathematically) depend on the prior probability of a regime shift (which is set at one of three levels) and the strength of evidence (also one of three levels, operationalized as the proportion of red balls in the mostly-blue urn and vice versa). Participants are directly instructed of the prior probability of regime shift and proportion of red balls, which are presented on-screen as numerical probabilities. The task therefore differs from most previous work on this question in that probabilities are instructed rather than learned by observation, and beliefs are reported as numerical probabilities rather than being inferred from participants' choice behaviour (as in many bandit tasks, such as Behrens 2007 Nature Neurosci).

The key behavioural finding is that participants over-estimate the prior probability of regime change when it is low, and under-estimate it when it is high; and participants over-estimate the strength of evidence when it is low and under-estimate it when it is high. In other words participants make much less distinction between the different generative environments than an optimal observer would. This is termed 'system neglect'. A neuroeconomic-style mathematical model is presented and fit to data.

Functional MRI results show that strength of evidence for a regime shift (roughly, the surprise associated with a blue ball from an apparently red urn) is associated with activity in the frontal-parietal orienting network. Meanwhile, at time-points where the probability of a regime shift is high, there is activity in another network including vmPFC. Both networks show individual differences effects, such that people who were more sensitive to strength of evidence and prior probability show more activity in the frontal-parietal and vmPFC-linked networks respectively.

We thank the reviewer for the overall descriptions of the manuscript.

Strengths:

(1) The study provides a different task for looking at change-detection and how this depends on estimates of environmental volatility and sensory evidence strength, in which participants are directly and precisely informed of the environmental volatility and sensory evidence strength rather than inferring them through observation as in most previous studies

(2) Participants directly provide belief estimates as probabilities rather than experimenters inferring them from choice behaviour as in most previous studies

(3) The results are consistent with well-established findings that surprising sensory events activate the frontal-parietal orienting network whilst updating of beliefs about the world ('regime shift') activates vmPFC.

Thank you for these assessments.

Weaknesses:

(1) The use of numerical probabilities (both to describe the environments to participants, and for participants to report their beliefs) may be problematic because people are notoriously bad at interpreting probabilities presented in this way, and show poor ability to reason with this information (see Kahneman's classic work on probabilistic reasoning, and how it can be improved by using natural frequencies). Therefore the fact that, in the present study, people do not fully use this information, or use it inaccurately, may reflect the mode of information delivery.

We appreciate the reviewer’s concern on this issue. The concern was addressed in Massey and Wu (2005), in which participants performed a choice task that did not ask them to provide probability estimates (Study 3 in Massey and Wu, 2005). Instead, participants in Study 3 were asked to predict the color of the ball before seeing a signal. This was a more intuitive way for participants to indicate their belief about a regime shift. The results from the choice task were identical to those found in the probability estimation task (Study 1 in Massey and Wu, 2005). We take this as evidence that the system-neglect behavior the participants showed was less likely to be due to the mode of information delivery.

(2) Although a very precise model of 'system neglect' is presented, many other models could fit the data.

For example, you would get similar effects due to attraction of parameter estimates towards a global mean - essentially application of a hyper-prior in which the parameters applied by each participant in each block are attracted towards the experiment-wise mean values of these parameters. For example, the prior probability of regime shift ground-truth values [0.01, 0.05, 0.10] are mapped to subjective values of [0.037, 0.052, 0.069]; this would occur if observers apply a hyper-prior that the probability of regime shift is about 0.05 (the average value over all blocks). This 'attraction to the mean' is a well-established phenomenon and cannot be ruled out with the current data (I suppose you could rule it out by comparing to another dataset in which the mean ground-truth value was different).

We thank the reviewer for this comment. It is true that the system-neglect model is not entirely inconsistent with regression to the mean, regardless of whether the implementation has a hyper-prior or not. In fact, our behavioral measure of sensitivity to transition probability and signal diagnosticity, which we termed the behavioral slope, is based on linear regression analysis. In general, the modeling approach in this paper is to start from a generative model that defines ideal performance and to consider modifying the generative model when systematic deviations of actual performance from the ideal are observed. In this approach, a generative model with a hyper-prior would be more complex to begin with, and the idea of regression to the mean by itself does not generate a priori predictions.

More generally, any model in which participants don't fully use the numerical information they were given would produce apparent 'system neglect'. Four qualitatively different example reasons are: 1. Some individual participants completely ignored the probability values given. 2. Participants did not ignore the probability values given, but combined them with a hyperprior as above. 3. Participants had a reporting bias where their reported beliefs that a regime-change had occurred tend to be shifted towards 50% (rather than reporting 'confident' values such as 5% or 95%). 4. Participants underweighted probability outliers resulting in underweighting of evidence in the 'high signal diagnosticity' environment (10.1016/j.neuron.2014.01.020)

In summary I agree that any model that fits the data would have to capture the idea that participants don't differentiate between the different environments as much as they should, but I think there are a number of qualitatively different reasons why they might do this - of which the above are only examples - hence I find it problematic that the authors present the behaviour as evidence for one extremely specific model.

Thank you for raising this point. The modeling principle we adopt is the following. We start from the normative model, the Bayesian model, which defines what normative behavior should look like. We compared participants’ behavior with the Bayesian model and found systematic deviations from it. To explain those systematic deviations, we considered modeling options within the confines of the same modeling framework. In other words, we considered a parameterized version of the Bayesian model, which is the system-neglect model, and identified the best modeling choice through model comparison. This modeling approach is not uncommon, and many would agree that it is the standard approach in economics and psychology. For example, Kahneman and Tversky adopted this approach when proposing prospect theory, a modification of expected utility theory, where expected utility theory can be seen as one specific model of how the utility of an option should be computed.
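For concreteness, the normative starting point described above can be sketched as a recursive Bayesian update. This is an illustration only, under assumptions that are not spelled out in this response: the block starts in the red regime, a shift to the blue regime can occur before each draw with probability `q` and is irreversible, and `theta` is the probability of drawing a ball whose color matches the current regime. The function name, parameter names, and example values are hypothetical, and the system-neglect parameters are deliberately not included.

```python
def bayes_regime_shift_posterior(balls, q, theta):
    """Posterior probability that the regime has shifted after each ball.

    balls: sequence of "blue" / "red" strings (blue = change-consistent).
    q:     per-period probability of a red-to-blue shift (assumed absorbing).
    theta: P(blue ball | blue regime) = P(red ball | red regime).
    """
    posteriors = []
    p_shift = 0.0  # assumed: no shift has occurred before the first period
    for ball in balls:
        # Prior for this period: already shifted, or shifts right now.
        prior = p_shift + (1.0 - p_shift) * q
        like_if_shifted = theta if ball == "blue" else 1.0 - theta
        like_if_not = 1.0 - theta if ball == "blue" else theta
        numerator = prior * like_if_shifted
        p_shift = numerator / (numerator + (1.0 - prior) * like_if_not)
        posteriors.append(p_shift)
    return posteriors


# Hypothetical block: mostly red balls followed by a run of blue balls.
example = ["red", "red", "blue", "red", "blue", "blue", "blue", "blue", "blue", "blue"]
print([round(p, 3) for p in bayes_regime_shift_posterior(example, q=0.05, theta=0.8)])
```

With uninformative signals (`theta = 0.5`) the posterior reduces to the prior probability that a shift has occurred by that period, which separates the contribution of elapsed time from that of the evidence.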

(3) Despite efforts to control confounds in the fMRI study, including two control experiments, I think some confounds remain.

For example, a network of regions is presented as correlating with the cumulative probability that there has been a regime shift in this block of 10 samples (Pt). However, regardless of the exact samples shown, doesn't Pt always increase with sample number (as by the time of later samples, there have been more opportunities for a regime shift)? Unless this is completely linear, the effect won't be controlled by including trial number as a co-regressor (which was done).

Thank you for raising this concern. Yes, Pt always increases with sample number regardless of evidence (seeing change-consistent or change-inconsistent signals). This is captured by the ‘intertemporal prior’ in the Bayesian model, which we included as a regressor in our GLM analysis (GLM-2), in addition to Pt. In short, GLM-1 had Pt and sample number. GLM-2 had Pt, intertemporal prior, and sample number, among other regressors. And we found that, in both GLM-1 and GLM-2, both vmPFC and ventral striatum correlated with Pt.
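The reviewer's point that the time dependence is not linear can be illustrated with a short calculation. The sketch below assumes a constant per-period transition probability `q` and an irreversible shift, so that, before any evidence is considered, the probability that a shift has occurred by sample `t` is 1 − (1 − q)^t. Whether this matches the exact definition of the intertemporal prior in the manuscript is an assumption; the function name is hypothetical, and the three values of `q` are the transition probabilities quoted by the reviewer.

```python
def shift_prior_by_sample(q, n_samples=10):
    """Evidence-free probability that a shift has occurred by each sample.

    Assumes a constant per-period shift probability q and an absorbing shift.
    """
    return [1.0 - (1.0 - q) ** t for t in range(1, n_samples + 1)]


for q in (0.01, 0.05, 0.10):
    prior = shift_prior_by_sample(q)
    # Successive increments shrink over time, so the curve is concave in t.
    increments = [b - a for a, b in zip([0.0] + prior[:-1], prior)]
    print(q, [round(p, 3) for p in prior], round(increments[0] - increments[-1], 4))
```

Because the increments shrink from one sample to the next, a linear sample-number regressor cannot absorb this term fully, which is why a separate regressor for it is informative.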

To make this clearer, we updated the main text to further clarify this on p.18:

On the other hand, two additional fMRI experiments are done as control experiments and the effect of Pt in the main study is compared to Pt in these control experiments. Whilst I admire the effort in carrying out control studies, I can't understand how these particular experiments are useful controls. For example in experiment 3 participants simply type in numbers presented on the screen - how can we even have an estimate of Pt from this task?

We thank the reviewer for this comment. The purpose of Experiment 3 was to control for visual and motor confounds. In other words, if subjects saw a similar visual layout and were simply instructed to press numbers, would we observe activity in the vmPFC, ventral striatum, and frontoparietal network as we did in the main experiment (Experiment 1)?

The purpose of Experiment 2 was to establish whether what we found about Pt was unique to change detection. In Experiment 2, subjects estimated the probability that the current regime is the blue regime (just as they did in Experiment 1) except that there were no regime shifts involved. In other words, it is possible that the regions we identified are generally associated with probability estimation rather than specifically with change detection, and we used Experiment 2 to examine whether this was the case.

(4) The Discussion is very long, and whilst a lot of related literature is cited, I found it hard to pin down within the discussion, what the key contributions of this study are. In my opinion it would be better to have a short but incisive discussion highlighting the advances in understanding that arise from the current study, rather than reviewing the field so broadly.

Thank you. We received differing feedback from previous reviewers on what to include in the Discussion. To address the reviewer’s concern, we will revise the Discussion so that it highlights the key contributions of the current study at the beginning.

Recommendations for the authors:

Reviewer #3 (Recommendations for the authors):

Many of the figures are too tiny - the writing is very small, as are the pictures of brains. I'd suggest adjusting these so they will be readable without enlarging.

Thank you. We will enlarge the figures to make them more readable.

The following is the authors’ response to the original reviews.

Public Reviews:

Reviewer #1 (Public review):

Summary:

The study examines human biases in a regime-change task, in which participants have to report the probability of a regime change in the face of noisy data. The behavioral results indicate that humans display systematic biases, in particular, overreaction in stable but noisy environments and underreaction in volatile settings with more certain signals. fMRI results suggest that a frontoparietal brain network is selectively involved in representing subjective sensitivity to noise, while the vmPFC selectively represents sensitivity to the rate of change.

Strengths:

(1) The study relies on a task that measures regime-change detection primarily based on descriptive information about the noisiness and rate of change. This distinguishes the study from prior work using reversal-learning or change-point tasks in which participants are required to learn these parameters from experiences. The authors discuss these differences comprehensively.

Thank you for recognizing our contribution to the regime-change detection literature and our effort in discussing our findings in relation to the experience-based paradigms.

(2) The study uses a simple Bayes-optimal model combined with model fitting, which seems to describe the data well.

Thank you for recognizing the contribution of our Bayesian framework and system-neglect model.

(3) The authors apply model-based fMRI analyses that provide a close link to behavioral results, offering an elegant way to examine individual biases.

Thank you for recognizing our execution of model-based fMRI analyses and effort in using those analyses to link with behavioral biases.

Weaknesses:

My major concern is about the correlational analysis in the section "Under- and overreactions are associated with selectivity and sensitivity of neural responses to system parameters", shown in Figures 5c and d (and similarly in Figure 6). The authors argue that a frontoparietal network selectively represents sensitivity to signal diagnosticity, while the vmPFC selectively represents transition probabilities. This claim is based on separate correlational analyses for red and blue across different brain areas. The authors interpret the finding of a significant correlation in one case (blue) and an insignificant correlation (red) as evidence of a difference in correlations (between blue and red) but don't test this directly. This has been referred to as the "interaction fallacy" (Nieuwenhuis et al., 2011; Makin & Orban de Xivry 2019). Not directly testing the difference in correlations (but only the differences to zero for each case) can lead to wrong conclusions. For example, in Figure 5c, the correlation for red is r = 0.32 (not significantly different from zero) and the correlation for blue is r = 0.48 (different from zero). However, the difference between the two is 0.16, and it is likely that this difference itself is not significant. From a statistical perspective, this corresponds to an interaction effect that has to be tested directly. It is my understanding that analyses in Figure 6 follow the same approach.

Relevant literature on this point is:

Nieuwenhuis, S, Forstmann, B & Wagenmakers, EJ (2011). Erroneous analyses of interactions in neuroscience: a problem of significance. Nat Neurosci 14, 1105-1107. https://doi.org/10.1038/nn.2886

Makin TR, Orban de Xivry, JJ (2019). Science Forum: Ten common statistical mistakes to watch out for when writing or reviewing a manuscript. eLife 8:e48175. https://doi.org/10.7554/eLife.48175

There is also a blog post on simulation-based comparisons, which the authors could check out: https://garstats.wordpress.com/2017/03/01/comp2dcorr/

I recommend that the authors carefully consider what approach works best for their purposes. It is sometimes recommended to directly compare correlations based on Monte-Carlo simulations (cf Makin & Orban). It might also be appropriate to run a regression with the dependent variable brain activity (Y) and predictors brain area (X) and the model-based term of interest (Z). In this case, they could include an interaction term in the model:

Y = \beta_0 + \beta_1 \cdot X + \beta_2 \cdot Z + \beta_3 \cdot X \cdot Z

The interaction term reflects if the relationship between the model term Z and brain activity Y is conditional on the brain area of interest X.

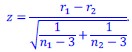

Thank you for the suggestion. In response, we tested for the difference in correlation both parametrically and nonparametrically. The results were identical. In the parametric test, we used the Fisher z transformation to transform the difference in correlation coefficients to the z statistic. That is, for two correlation coefficients, 𝑟<sub>1</sub> (with sample size 𝑛<sub>1</sub>) and 𝑟<sub>2</sub> (with sample size 𝑛<sub>2</sub>), the z statistic of the difference in correlation is given by

We referred to the correlation between neural and behavioral sensitivity at change-consistent (blue) signals as 𝑟<sub>𝑏𝑙𝑢𝑒</sub>, and that at change-inconsistent (red) signals as 𝑟<sub>𝑟𝑒𝑑</sub>. For the Fisher z transformation, 𝑟<sub>1</sub> = 𝑟<sub>𝑏𝑙𝑢𝑒</sub> and 𝑟<sub>2</sub> = 𝑟<sub>𝑟𝑒𝑑</sub>. We found that the difference in correlation was significant in two of the five ROIs in the frontoparietal network, namely the left IFG and left IPS (one-tailed z test; left IFG: 𝑧 = 1.8355, 𝑝 = 0.0332; left IPS: 𝑧 = 2.3782, 𝑝 = 0.0087). For the remaining three ROIs, the difference in correlation was not significant (dmPFC: 𝑧 = 0.7594, 𝑝 = 0.2238; right IFG: 𝑧 = 0.9068, 𝑝 = 0.1822; right IPS: 𝑧 = 1.3764, 𝑝 = 0.0843). We chose a one-tailed test because we already knew that the correlation under the blue signals was significantly greater than 0.
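As a reference point for the parametric comparison, the sketch below shows the textbook Fisher z-test for two correlations from independent samples, together with the conversion of a z statistic to a one-tailed p-value. It is the standard form of the test, not necessarily the exact computation used for the values reported here, and it assumes independent samples, an assumption that does not hold for within-subject correlations. The function names are hypothetical.

```python
import math


def fisher_z_independent(r1, n1, r2, n2):
    """Standard Fisher z-test statistic for two independent correlations."""
    z1, z2 = math.atanh(r1), math.atanh(r2)  # Fisher z-transform of each r
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    return (z1 - z2) / se


def one_tailed_p(z):
    """Upper-tail probability of a standard normal variate."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Converting a reported z statistic to its one-tailed p-value.
print(round(one_tailed_p(1.8355), 4))
```

The same conversion can be applied to any of the z statistics listed above to check that the reported p-values are one-tailed.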

In the nonparametric test, we performed nonparametric bootstrapping to test for the difference in correlation (Efron & Tibshirani, 1994). We resampled the dataset with replacement (subject-wise) and used the resampled dataset to compute the difference in correlation. We repeated this procedure 100,000 times to estimate the distribution of the difference in correlation coefficients, and tested for significance and estimated the p-value based on this distribution. Consistent with our parametric tests, we found that the difference in correlation was significant in left IFG and left IPS (left IFG: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.46, 𝑝 = 0.0496; left IPS: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.5306, 𝑝 = 0.0041), but was not significant in dmPFC, right IFG, and right IPS (dmPFC: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.1634, 𝑝 = 0.1919; right IFG: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.2123, 𝑝 = 0.1681; right IPS: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.3434, 𝑝 = 0.0631).
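The subject-wise bootstrap described above can be sketched as follows. This is a minimal illustration rather than the analysis code: the function and variable names are hypothetical, the data are synthetic, and the p-value rule (the proportion of bootstrap differences at or below zero, for a one-tailed test) is an assumption, since the response does not state how the p-value was derived from the bootstrap distribution.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation; returns NaN if either input has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return float("nan")
    return sxy / math.sqrt(sxx * syy)


def bootstrap_corr_difference(neural_blue, neural_red, behavior, n_boot=10000, seed=0):
    """Bootstrap test of r(neural_blue, behavior) - r(neural_red, behavior).

    Subjects are resampled with replacement, keeping each subject's three
    values together so that the dependence between correlations is preserved.
    Returns (observed difference, assumed one-tailed p-value).
    """
    rng = random.Random(seed)
    n = len(behavior)
    observed = pearson_r(neural_blue, behavior) - pearson_r(neural_red, behavior)
    diffs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        beh = [behavior[i] for i in idx]
        d = (pearson_r([neural_blue[i] for i in idx], beh)
             - pearson_r([neural_red[i] for i in idx], beh))
        if not math.isnan(d):  # skip degenerate resamples
            diffs.append(d)
    p_value = sum(d <= 0 for d in diffs) / len(diffs)
    return observed, p_value


# Synthetic example: "blue" tracks behavior closely, "red" does not.
behavior = [float(i) for i in range(20)]
neural_blue = [i + (2 * i) % 5 for i in range(20)]
neural_red = [(7 * i) % 11 for i in range(20)]
print(bootstrap_corr_difference(neural_blue, neural_red, behavior, n_boot=2000))
```

Resampling whole subjects, rather than resampling each variable separately, is what lets the bootstrap respect the within-subject dependence between the two correlations.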

In summary, we found that neural sensitivity to signal diagnosticity in the frontoparietal network measured at change-consistent signals significantly correlated with individual subjects’ behavioral sensitivity to signal diagnosticity (𝑟<sub>𝑏𝑙𝑢𝑒</sub>). By contrast, neural sensitivity to signal diagnosticity measured at change-inconsistent signals did not significantly correlate with behavioral sensitivity (𝑟<sub>𝑟𝑒𝑑</sub>). The difference in correlation, 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub>, however, was statistically significant in some (left IPS and left IFG) but not all brain regions within the frontoparietal network.

To incorporate these updates, we added descriptions of the methods and results in the revised manuscript. In the Results section (p.26-27):

“We further tested, for each brain region, whether the difference in correlation was significant using both parametric and nonparametric tests (see Parametric and nonparametric tests for difference in correlation coefficients in Methods). The results were identical. In the parametric test, we used the Fisher 𝑧 transformation to transform the difference in correlation coefficients to the 𝑧 statistic. We found that among the five ROIs in the frontoparietal network, two of them, namely the left IFG and left IPS, the difference in correlation was significant (one-tailed z test; left IFG: 𝑧 = 1.8355, 𝑝 = 0.0332; left IPS: 𝑧 = 2.3782, 𝑝 = 0.0087). For the remaining three ROIs, the difference in correlation was not significant (dmPFC: 𝑧 = 0.7594, 𝑝 = 0.2238; right IFG: 𝑧 = 0.9068, 𝑝 = 0.1822; right IPS: 𝑧 = 1.3764, 𝑝 = 0.0843). We chose one-tailed test because we already know the correlation under change-consistent signals was significantly greater than 0. In the nonparametric test, we performed nonparametric bootstrapping to test for the difference in correlation. We referred to the correlation between neural and behavioral sensitivity at change-consistent (blue) signals as 𝑟<sub>𝑏𝑙𝑢𝑒</sub>, and that at change-inconsistent (red) signals as 𝑟<sub>𝑟𝑒𝑑</sub>. Consistent with the parametric tests, we also found that the difference in correlation was significant in left IFG and left IPS (left IFG: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.46, 𝑝 = 0.0496; left IPS: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.5306, 𝑝 = 0.0041), but was not significant in dmPFC, right IFG, and right IPS (dmPFC: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.1634, 𝑝 = 0.1919; right IFG: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.2123, 𝑝 = 0.1681; right IPS: 𝑟<sub>𝑏𝑙𝑢𝑒</sub> − 𝑟<sub>𝑟𝑒𝑑</sub> = 0.3434, 𝑝 = 0.0631).
In summary, we found that neural sensitivity to signal diagnosticity measured at change-consistent signals significantly correlated with individual subjects’ behavioral sensitivity to signal diagnosticity. By contrast, neural sensitivity to signal diagnosticity measured at change-inconsistent signals did not significantly correlate with behavioral sensitivity. The difference in correlation, however, was statistically significant in some (left IPS and left IFG) but not all brain regions within the frontoparietal network.”

In the Methods section, we added on p.53:

“Parametric and nonparametric tests for difference in correlation coefficients. We implemented both parametric and nonparametric tests to examine whether the difference in Pearson correlation coefficients was significant. In the parametric test, we used the Fisher 𝑧 transformation to transform the difference in correlation coefficients to the 𝑧 statistic. That is, for two correlation coefficients, 𝑟<sub>1</sub> (with sample size 𝑛<sub>1</sub>) and 𝑟<sub>2</sub> (with sample size 𝑛<sub>2</sub>), the 𝑧 statistic of the difference in correlation is given by

We referred to the correlation between neural and behavioral sensitivity at change-consistent (blue balls) signals as 𝑟<sub>𝑏𝑙𝑢𝑒</sub>, and that at change-inconsistent (red balls) signals as 𝑟<sub>𝑟𝑒𝑑</sub>. For the Fisher 𝑧 transformation, 𝑟<sub>1</sub> = 𝑟<sub>𝑏𝑙𝑢𝑒</sub> and 𝑟<sub>2</sub> = 𝑟<sub>𝑟𝑒𝑑</sub>. In the nonparametric test, we performed nonparametric bootstrapping to test for the difference in correlation (Efron & Tibshirani, 1994). That is, we resampled the dataset with replacement (subject-wise) and used the resampled dataset to compute the difference in correlation. We repeated this procedure 100,000 times to estimate the distribution of the difference in correlation coefficients, and tested for significance and estimated the p-value based on this distribution.”

Another potential concern is that some important details about the parameter estimation for the system-neglect model are missing. In the respective section in the methods, the authors mention a nonlinear regression using Matlab's "fitnlm" function, but it remains unclear how the model was parameterized exactly. In particular, what are the properties of this nonlinear function, and what are the assumptions about the subject's motor noise? I could imagine that by using the built-in function, the assumption was that residuals are Gaussian and homoscedastic, but it is possible that the assumption of homoscedasticity is violated, and residuals are systematically larger around p=0.5 compared to p=0 and p=1. Relatedly, in the parameter recovery analyses, the authors assume different levels of motor noise. Are these values representative of empirical values?

We thank the reviewer for this excellent point. The reviewer touched on model parameterization, assumption of noise, and parameter recovery analysis. We answered these questions point-by-point below.

On how our model was parameterized

We parameterized the model according to the system-neglect model in Eq. (2) and estimated the alpha parameter separately for each level of transition probability and the beta parameter separately for each level of signal diagnosticity. As a result, we had a total of 6 parameters (3 alpha and 3 beta parameters) in the model. The system-neglect model is then called by fitnlm so that these parameters can be estimated. The term ‘nonlinear’ regression in fitnlm refers to the fact that you can specify any model (in our case the system-neglect model) and estimate its parameters when calling this function. In our use of fitnlm, we assume that the noise is Gaussian and homoscedastic (the default option).

On the assumptions about subject’s motor noise

We actually never called the noise ‘motor’ because it can be estimation noise as well. In the context of fitnlm, we assume that the noise is Gaussian and homoscedastic.

On the possibility that homoscedasticity is violated

We take the reviewer’s point. In response, we separately estimated the residual standard deviation at different probability intervals ([0.0–0.2), [0.2–0.4), [0.4–0.6), [0.6–0.8), and [0.8–1.0]). The result is shown in the figure below. The black data points are the average residual standard deviation (across subjects) and the error bars are the standard error of the mean. The residual standard deviation is indeed heteroscedastic: it is smallest at a probability of 0.1, increases as probability increases, and reaches an asymptote at 0.5 (Fig. S4).

To examine how this would affect model fitting (parameter estimation), we performed a parameter recovery analysis based on these empirically estimated, probability-dependent residual standard deviations. That is, we simulated subjects’ probability estimates using the system-neglect model, added heteroscedastic noise according to the empirical values, and then re-estimated the parameters of the system-neglect model. The recovered parameter estimates did not seem to be affected by the heteroscedasticity of the variance: the parameter recovery results were identical to those obtained when homoscedasticity was assumed. This suggests that although homoscedasticity was violated, the violation did not affect the accuracy of the parameter estimates (Fig. S4).
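The binning and noise-injection steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, residuals are binned by the model-predicted probability, and simulated estimates are clipped to [0, 1]. The response does not specify either of these two choices.

```python
import bisect
import random
import statistics

# Edges of the five probability intervals; the last interval is closed at 1.0.
BIN_EDGES = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]


def bin_index(p):
    """Index (0-4) of the probability interval that contains p."""
    return min(bisect.bisect_right(BIN_EDGES, p) - 1, 4)


def residual_sd_by_bin(predicted, observed):
    """Residual standard deviation per interval (None if fewer than 2 points).

    Assumption: residuals are grouped by the model-predicted probability.
    """
    groups = [[] for _ in range(5)]
    for p_hat, p_obs in zip(predicted, observed):
        groups[bin_index(p_hat)].append(p_obs - p_hat)
    return [statistics.stdev(g) if len(g) >= 2 else None for g in groups]


def simulate_estimates(predicted, sd_by_bin, rng):
    """Add bin-dependent Gaussian noise to model predictions.

    Assumptions: every bin used has a numeric SD, and noisy estimates are
    clipped to the valid probability range [0, 1].
    """
    simulated = []
    for p_hat in predicted:
        noisy = p_hat + rng.gauss(0.0, sd_by_bin[bin_index(p_hat)])
        simulated.append(min(1.0, max(0.0, noisy)))
    return simulated
```

In a recovery analysis, the simulated estimates would then be refit with the same model and the recovered parameters compared with the generating ones.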

We added a section ‘Impact of noise homoscedasticity on parameter estimation’ in Methods section (p.47-48) and a figure in the supplement (Fig. S4) to describe this:

On whether the noise levels in parameter recovery analysis are representative of empirical values

To address the reviewer’s question, we conducted a new analysis using maximum likelihood estimation to simultaneously estimate the parameters of the system-neglect model and the noise level of each individual subject. To estimate each subject’s noise level, we incorporated a noise parameter into the system-neglect model. We assumed that probability estimates are noisy and modeled them with a Gaussian distribution where the noise parameter (𝜎<sub>noise</sub>) is the standard deviation. At each period, a probability estimate of regime shift was computed according to the system-neglect model, where Θ is the set of parameters including the parameters in the system-neglect model and the noise parameter. The likelihood function, 𝐿(Θ), is the probability of observing the subject’s actual probability estimate at period 𝑡, 𝑝<sub>𝑡</sub>, given Θ: 𝐿(Θ) = 𝑃(𝑝<sub>𝑡</sub>|Θ). Since we modeled the noisy probability estimates with a Gaussian distribution, we can express 𝐿(Θ) as 𝐿(Θ) ~ 𝑁(𝑝<sub>𝑡</sub>; 𝑝<sub>𝑡</sub><sup>SN</sup>, 𝜎<sub>noise</sub>), where 𝑝<sub>𝑡</sub><sup>SN</sup> is the probability estimate predicted by the system-neglect (SN) model at period 𝑡. As a reminder, we referred to a ‘period’ as the time when a new signal appeared during a trial (for a given transition probability and signal diagnosticity). To find the maximum likelihood estimate, Θ<sub>MLE</sub>, we summed the negative natural logarithm of the likelihood over all periods and used MATLAB’s fmincon function to minimize this sum. Across subjects, we found that the mean noise estimate was 0.1735 and ranged from 0.1118 to 0.2704 (Supplementary Figure S3).
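The objective that is minimized can be written down compactly. The sketch below is in Python rather than MATLAB and is only an illustration: the function names are hypothetical, and the model predictions are passed in as given numbers, whereas the analysis described above optimizes the model parameters and the noise parameter jointly. It also uses a standard fact: for fixed predictions, the Gaussian noise level that minimizes the summed negative log-likelihood is the root-mean-square residual.

```python
import math


def negative_log_likelihood(observed, predicted, sigma):
    """Summed negative log-likelihood of observed probability estimates.

    Each observation is modeled as Gaussian around the model prediction
    with standard deviation sigma (the noise parameter).
    """
    nll = 0.0
    for p_obs, p_model in zip(observed, predicted):
        z = (p_obs - p_model) / sigma
        nll += 0.5 * z * z + math.log(sigma) + 0.5 * math.log(2.0 * math.pi)
    return nll


def sigma_mle(observed, predicted):
    """Noise level minimizing the objective for fixed predictions (RMS residual)."""
    n = len(observed)
    return math.sqrt(sum((o - p) ** 2 for o, p in zip(observed, predicted)) / n)
```

A general-purpose optimizer would evaluate `negative_log_likelihood` repeatedly while varying both the model parameters, which change `predicted`, and `sigma`.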

In our original parameter recovery analysis, the maximum noise level was set at 0.1, but our data indicated that some subjects’ noise was larger than this value. Therefore, we expanded our parameter recovery analysis to include noise levels beyond 0.1, up to 0.3. The results are now updated in Supplementary Fig. S3.

We updated the parameter recovery section (p. 47) in Methods:

The main study is based on N=30 subjects, as are the two control studies. Since this work is about individual differences (in particular with respect to neural representations of noise and transition probabilities in the frontoparietal network and the vmPFC), I'm wondering how robust the results are. Is it likely that the results would replicate with a larger number of subjects? Can the two control studies be leveraged to address this concern to some extent?

We can address the issue of robustness by looking at effect sizes. For individual differences in neural sensitivity to transition probability and signal diagnosticity, the significant correlation coefficients between neural and behavioral sensitivity ranged from 0.4 to 0.58 for signal diagnosticity in the frontoparietal network (Fig. 5C) and from -0.38 to -0.37 for transition probability in vmPFC (Fig. 5D). Effect sizes of this magnitude are considered medium to large (Cohen, 1992).

It would be challenging to use the control studies to address the robustness concern. The two control studies did not allow us to examine individual differences, in particular with respect to neural selectivity of noise and transition probability, and we therefore think they are unlikely to help here. Having said that, it is possible to look at neural selectivity of noise (signal diagnosticity) in the first control experiment, where subjects estimated the probability of the blue regime in a task with no regime change (transition probability was 0). However, the absence of regime shifts changed the nature of the task. Instead of always starting in the red regime as in the main experiment, in the first control experiment we randomly picked the regime from which to draw the signals. This also changed the meaning and the dynamics of the signals (red and blue) that would appear. In the main experiment the blue signal is consistent with change, but in the control experiment this is no longer the case. In the main experiment, the frequency of blue signals is contingent upon both noise and transition probability. In general, blue signals are less frequent than red signals because of small transition probabilities. But in the first control experiment, blue signals may not be less frequent than red signals because the regime was blue in half of the trials. Due to these differences, we do not see how analyzing the control experiments could help establish robustness, because we do not have a good prediction as to whether and how neural selectivity would be affected by these differences.

It seems that the authors have not counterbalanced the colors and that subjects always reported the probability of the blue regime. If so, I'm wondering why this was not counterbalanced.

We understand the reviewer’s concern. The first reason we did not counterbalance the colors or the reported regime (blue versus red) was to avoid confusing the subjects in an already complicated task. Counterbalancing these two variables also comes at the cost of sample size, which was the second reason. Although we could have counterbalanced at the between-subject level without adding to task complexity, doing so could have introduced another confound, namely individual differences in how people respond to these variables. This was the third reason we were hesitant to counterbalance.

Reviewer #2 (Public review):

Summary:

This paper focuses on understanding the behavioral and neural basis of regime shift detection, a common yet hard problem that people encounter in an uncertain world.

Using a regime-shift task, the authors examined cognitive factors influencing belief updates by manipulating signal diagnosticity and environmental volatility. Behaviorally, they have found that people demonstrate both over and under-reaction to changes given different combinations of task parameters, which can be explained by a unified system-neglect account. Neurally, the authors have found that the vmPFC-striatum network represents current belief as well as belief revision unique to the regime detection task. Meanwhile, the frontoparietal network represents cognitive factors influencing regime detection i.e., the strength of the evidence in support of the regime shift and the intertemporal belief probability. The authors further link behavioral signatures of system neglect with neural signals and have found dissociable patterns, with the frontoparietal network representing sensitivity to signal diagnosticity when the observation is consistent with regime shift and vmPFC representing environmental volatility, respectively. Together, these results shed light on the neural basis of regime shift detection especially the neural correlates of bias in belief update that can be observed behaviorally.

Strengths:

(1) The regime-shift detection task offers a solid ground to examine regime-shift detection without the potential confounding impact of learning and reward. Relatedly, the system-neglect modeling framework provides a unified account for both over or under-reacting to environmental changes, allowing researchers to extract a single parameter reflecting people's sensitivity to changes in decision variables and making it desirable for neuroimaging analysis to locate corresponding neural signals.

Thank you for recognizing our task design and our system-neglect computational framework in understanding change detection.

(2) The analysis for locating brain regions related to belief revision is solid. Within the current task, the authors look for brain regions whose activation covary with both current belief and belief change. Furthermore, the authors have ruled out the possibility of representing mere current belief or motor signal by comparing the current study results with two other studies. This set of analyses is very convincing.

Thank you for recognizing our control studies in ruling out potential motor confounds in our neural findings on belief revision.

(3) The section on using neuroimaging findings (i.e., the frontoparietal network is sensitive to evidence that signals regime shift) to reveal nuances in behavioral data (i.e., belief revision is more sensitive to evidence consistent with change) is very intriguing. I like how the authors structure the flow of the results, offering this as an extra piece of behavioral findings instead of ad-hoc implanting that into the computational modeling.

Thank you for appreciating how we showed that neural insights can lead to new behavioral findings.

Weaknesses:

(1) The authors have presented two sets of neuroimaging results, and it is unclear to me how to reason between these two sets of results, especially for the frontoparietal network. On one hand, the frontoparietal network represents belief revision but not variables influencing belief revision (i.e., signal diagnosticity and environmental volatility). On the other hand, when it comes to understanding individual differences in regime detection, the frontoparietal network is associated with sensitivity to change and consistent evidence strength. I understand that belief revision correlates with sensitivity to signals, but it can probably benefit from formally discussing and connecting these two sets of results in discussion. Relatedly, the whole section on behavioral vs. neural slope results was not sufficiently discussed and connected to the existing literature in the discussion section. For example, the authors could provide more context to reason through the finding that striatum (but not vmPFC) is not sensitive to volatility.

We thank the reviewer for the valuable suggestions.

With regard to the first comment, we wish to clarify that we did not find frontoparietal network to represent belief revision. It was the vmPFC and ventral striatum that we found to represent belief revision (delta Pt in Fig. 3). For the frontoparietal network, we identified its involvement in our task through finding that its activity correlated with strength of change evidence (Fig. 4) and individual subjects’ sensitivity to signal diagnosticity (Fig. 5). Conceptually, these two findings reflect how individuals interpret the signals (signals consistent or inconsistent with change) in light of signal diagnosticity. This is because (1) strength of change evidence is defined as signals (+1 for signal consistent with change, and -1 for signal inconsistent with change) multiplied by signal diagnosticity and (2) sensitivity to signal diagnosticity reflects how individuals subjectively evaluate signal diagnosticity. At the theoretical level, these two findings can be interpreted through our computational framework in that both the strength of change evidence and sensitivity to signal diagnosticity contribute to estimating the likelihood of change (Eqs. 1 and 2). We added a paragraph in Discussion to talk about this.

We added on p. 36:

“For the frontoparietal network, we identified its involvement in our task through finding that its activity correlated with strength of change evidence (Fig. 4) and individual subjects’ sensitivity to signal diagnosticity (Fig. 5). Conceptually, these two findings reflect how individuals interpret the signals (signals consistent or inconsistent with change) in light of signal diagnosticity. This is because (1) strength of change evidence is defined as signals (+1 for signal consistent with change, and −1 for signal inconsistent with change) multiplied by signal diagnosticity and (2) sensitivity to signal diagnosticity reflects how individuals subjectively evaluate signal diagnosticity. At the theoretical level, these two findings can be interpreted through our computational framework in that both the strength of change evidence and sensitivity to signal diagnosticity contribute to estimating the likelihood of change (Equations 1 and 2 in Methods).”

With regard to the second comment, we added a discussion on the behavioral and neural slope comparison. We pointed out previous papers conducting similar analyses (Vilares et al., 2012; Ting et al., 2015; Yang & Wu, 2020), their findings, and how they relate to our results. Vilares et al. found that sensitivity to prior information (uncertainty in prior distribution) in the orbitofrontal cortex (OFC) and putamen correlated with a behavioral measure of sensitivity to the prior. In the current study, transition probability acts as the prior in the system-neglect framework (Eq. 1) and we found that ventromedial prefrontal cortex represents subjects’ sensitivity to transition probability. Together, these results suggest that OFC (with vmPFC being part of OFC, see Wallis, 2011) is involved in the subjective evaluation of prior information in both static (Vilares et al., 2012) and dynamic environments (current study).

We added on p. 37-38:

“In the current study, our psychometric-neurometric analysis focused on comparing behavioral sensitivity with neural sensitivity to the system parameters (transition probability and signal diagnosticity). We measured sensitivity by estimating the slope of behavioral data (behavioral slope) and neural data (neural slope) in response to the system parameters. Previous studies had adopted a similar approach (Ting et al., 2015a; Vilares et al., 2012; Yang & Wu, 2020). For example, Vilares et al. (2012) found that sensitivity to prior information (uncertainty in prior distribution) in the orbitofrontal cortex (OFC) and putamen correlated with behavioral measure of sensitivity to the prior.

In the current study, transition probability acts as prior in the system-neglect framework (Eq. 2 in Methods) and we found that ventromedial prefrontal cortex represents subjects’ sensitivity to transition probability. Together, these results suggest that OFC (with vmPFC being part of OFC, see Wallis, 2011) is involved in the subjective evaluation of prior information in both static (Vilares et al., 2012) and dynamic environments (current study). In addition, distinct from vmPFC in representing sensitivity to transition probability or prior, we found through the behavioral-neural slope comparison that the frontoparietal network represents how sensitive individual decision makers are to the diagnosticity of signals in revealing the true state (regime) of the environment.”

(2) More details are needed for behavioral modeling under the system-neglect framework, particularly results on model comparison. I understand that this model has been validated in previous publications, but it is unclear to me whether it provides a superior model fit in the current dataset compared to other models (e.g., a model without \alpha or \beta). Relatedly, I wonder whether the final result section can be incorporated into modeling as well - i.e., the authors could test a variant of the model with two \betas depending on whether the observation is consistent with a regime shift and conduct model comparison.

Thank you for the great suggestion. We rewrote the final Results section to focus specifically on model comparison. Following the reviewer’s suggestion, we separately estimated beta parameters for change-consistent and change-inconsistent signals and found that the resulting models were better than the original system-neglect model.

To incorporate these new findings, we rewrote the entire final Results section, “Incorporating signal dependency into system-neglect model led to better models for regime-shift detection” (p. 28-30).

Recommendations for the authors:

Reviewer #1 (Recommendations for the authors):

(1) Use line numbers for the next round of reviews.

We added line numbers in the revised manuscript.

(2) Figure 2b: Can the empirical results be reproduced by the system-neglect model? This would complement the analyses presented in Figure S4.

Yes. We have added Figure S6 based on system-neglect model fits. For each subject, we first computed period-by-period probability estimates based on the parameter estimates of the system-neglect model. Second, we computed the index of overreaction (IO) for each combination of transition probability and signal diagnosticity. Third, we plotted the IO as we did for the empirical results in Fig. 2b. We found that the empirical results in Fig. 2b are similar to the system-neglect model results shown in Figure S6, indicating that the empirical results can be reproduced by the model.

(3) Page 14: Instead of referring to the "Methods" in general, you could be more specific about where the relevant information can be found.

Fixed. We changed “See Methods” to “See System-neglect model in Methods”.

(4) Page 18: Consider avoiding the term "more significantly". Consider effect sizes if interested in comparing effects to each other.

Fixed. On page 19, we changed that to

“In the second analysis, we found that for both vmPFC and ventral striatum, the regression coefficient of 𝑃<sub>𝑡</sub> was significantly different between Experiment 1 and Experiment 2 (Fig. 3C) and between Experiment 1 and Experiment 3 (Fig. 3D; also see Tables S5 and S6 in SI).”

(5) Page 30: Cite key studies using reversal-learning paradigms. Currently, readers less familiar with the literature might have difficulties with this.

We now cite key studies using reversal-learning paradigms on p.32:

“Our work is closely related to the reversal-learning paradigm—the standard paradigm in neuroscience and psychology to study change detection (Fellows & Farah, 2003; Izquierdo et al., 2017; O'Doherty et al., 2001; Schoenbaum et al., 2000; Walton et al., 2010). In a typical reversal-learning task, human or animal subjects choose between two options that differ in the reward magnitude or probability of receiving a reward. Through reward feedback the participants gradually learn the reward contingencies associated with the options and have to update knowledge about reward contingencies when contingencies are switched in order to maximize rewards.”

Reviewer #2 (Recommendations for the authors):

(1) Some literature on change detection seems missing. For example, the author should also cite Muller, T. H., Mars, R. B., Behrens, T. E., & O'Reilly, J. X. (2019). Control of entropy in neural models of environmental state. elife, 8, e39404. This paper suggests that medial PFC is correlated with the entropy of the current state, which is closely related to regime change and environmental volatility.

Thank you for pointing to this paper. We have now added it and other related papers in the Introduction and Discussion.

In Introduction, we added on p.5-6:

“Different behavioral paradigms, most notably reversal learning, and computational models were developed to investigate its neurocomputational substrates (Behrens et al., 2007; Izquierdo et al., 2017; Payzan-LeNestour et al., 2011, 2013; Nassar et al., 2010; McGuire et al., 2014; Muller et al., 2019). Key findings on the neural implementations for such learning include identifying brain areas and networks that track volatility in the environment (rate of change) (Behrens et al., 2007), the uncertainty or entropy of the current state of the environment (Muller et al., 2019), participants’ beliefs about change (Payzan-LeNestour et al., 2011; McGuire et al., 2014; Kao et al., 2020), and their uncertainty about whether a change had occurred (McGuire et al., 2014; Kao et al., 2020).”

In Discussion (p.35), we added a new paragraph:

“Related to OFC function in decision making and reinforcement learning, Wilson et al. (2014) proposed that OFC is involved in inferring the current state of the environment. For example, medial OFC has been shown to represent a probability distribution over possible states of the environment (Chan et al., 2016), the current task state (Schuck et al., 2016) and uncertainty or entropy associated with the state of the environment (Muller et al., 2019). In the context of regime-shift detection, regimes can be regarded as states of the environment and therefore a change in regime indicates a change in the state of the environment. Muller et al. (2019) found that in dynamic environments where changes in the state of the environment happen regularly, medial OFC represented the level of uncertainty in the current state of the environment. Our finding that vmPFC represented individual participants’ probability estimates of regime shifts suggests that vmPFC and/or OFC are involved in inferring the current state of the environment through estimating whether the state has changed. Our finding that vmPFC represented individual participants’ sensitivity to transition probability further suggests that vmPFC and/or OFC contribute to individual participants’ biases in state inference (over- and underreactions to change) in how these brain areas respond to the volatility of the environment.”

(2) The language used when describing the selective relationship between frontoparietal network activation and change-consistent signal can be clearer. When describing separating those two signals, the authors refer to them as when the 'blue' signal shows up and when the 'red' signal shows up, assuming that the current belief state is blue. This is a little confusing cuz it is hard to keep in mind what is the default color in this example. It would be more intuitive if the author used language such as the 'change consistent' signal.

Thank you for the suggestion. We have changed the wording according to your suggestion. That is, we say ‘change-consistent (blue) signals’ and ‘change-inconsistent (red) signals’ throughout pages 22-28.

(3) Figure 4B highlights dmPFC. However, in the associated text, it says p = .10 so it is not significant. To avoid misleading readers, I would recommend pointing this out explicitly beyond saying 'most brain regions in the frontoparietal network also correlated with the intertemporal prior'.

Thank you for pointing this out. We now say on p.20:

“With independent (leave-one-subject-out, LOSO) ROI analysis, we examined whether brain regions in the frontoparietal network (shown to represent strength of change evidence) correlated with intertemporal prior and found that all brain regions, with the exception of dmPFC, in the frontoparietal network correlated with the intertemporal prior.”

(4) There is a full paragraph in the discussion talking about the central opercular cortex, but this terminology has not shown up in the main body of the paper. If this is an important brain region to the authors, I would recommend mentioning it more often in the result section.

Thank you for this suggestion. We have now added central opercular cortex in the Results section (p.18):

“For 𝑃<sub>𝑡</sub>, we found that the ventromedial prefrontal cortex (vmPFC) and ventral striatum correlated with this behavioral measure of subjects’ belief about change. In addition, many other brain regions, including the motor cortex, central opercular cortex, insula, occipital cortex, and the cerebellum also significantly correlated with 𝑃<sub>𝑡</sub>.”

(5) The authors have claimed that people make more extreme estimates under high diagnosticity (Supplementary Figure 1). This is an interesting point because it seems to be different from what is shown in the main graph where it seems that people are not extreme enough compared to an ideal Bayesian observer. I understand that these are effects being investigated under different circumstances. It would be helpful if for Supplementary Figure 1 the authors could overlay, or generate a different figure showing what an ideal Bayesian observer would do in this situation.

We thank the reviewer for pointing this out. We wish to clarify that when we said “more extreme estimates under high diagnosticity” we meant compared with low diagnosticity and not with the ideal Bayesian observer. We clarified this point by rephrasing our sentence on p.11:

“We also found that subjects tended to give more extreme Pt under high signal diagnosticity than low diagnosticity (Fig. S1 in Supplementary Information, SI).”

When it comes to comparing subjects’ probability estimates with the normative Bayesian, subjects tended to “underreact” under high diagnosticity. This can be seen in Fig. 4B, which shows a trend of increasing underreaction (or decreasing overreaction) as diagnosticity increased (row-wise comparison for a given transition probability).

We see the reviewer’s point about overlaying the normative Bayesian estimates on Fig. S1 and have updated the figure by adding them in orange.