Mean-field Analysis of Batch Normalization

A mean-field theory of BN.

LocalNorm: Robust Image Classification through Dynamically Regularized Normalization

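Proposes a new LocalNorm. Since everyone is having this much fun with Norm variants, it looks like the next thing to study will be some GeneralizedNorm or AnyRandomNorm or the like... [doge]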

Fixup Initialization: Residual Learning Without Normalization

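When it comes to fitting behavior, the design of regularization and BN is always subtle, especially once the learning rate gets mixed in. The authors of this paper have also discussed these questions on Reddit in connection with their own work.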

Generalized Batch Normalization: Towards Accelerating Deep Neural Networks

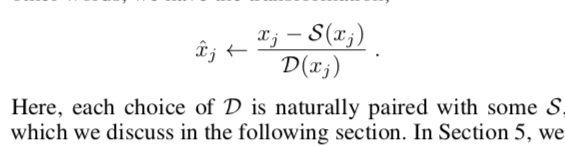

The core is this one sentence: "Generalized Batch Normalization (GBN) to be identical to conventional BN but with standard deviation replaced by a more general deviation measure D(x) and the mean replaced by a corresponding statistic S(x)."
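To make the quoted definition concrete, here is a minimal NumPy sketch of the idea: normalize each feature as (x - S(x)) / D(x). The function name `generalized_batch_norm`, the `eps` handling, and the median / mean-absolute-deviation pair are illustrative assumptions, not necessarily the choices the paper makes. The learnable scale and shift of conventional BN are also left out.

```python
import numpy as np


def generalized_batch_norm(x, statistic, deviation, eps=1e-5):
    """Normalize a (batch, features) array per feature as (x - S(x)) / (D(x) + eps).

    `statistic` plays the role of S(x) and `deviation` the role of D(x);
    both map a (batch, features) array to a (features,) array.
    Note: conventional BN uses sqrt(var + eps); adding eps to D(x) is a
    simplification made for this sketch.
    """
    s = statistic(x)
    d = deviation(x)
    return (x - s) / (d + eps)


# Conventional BN corresponds to S = mean, D = standard deviation.
def batch_mean(x):
    return x.mean(axis=0)


def batch_std(x):
    return x.std(axis=0)


# One hypothetical alternative pair: S = median, D = mean absolute deviation
# around the median (chosen here only for illustration).
def batch_median(x):
    return np.median(x, axis=0)


def batch_mean_abs_dev(x):
    return np.abs(x - np.median(x, axis=0)).mean(axis=0)


rng = np.random.default_rng(0)
batch = rng.normal(loc=3.0, scale=2.0, size=(64, 4))

bn_out = generalized_batch_norm(batch, batch_mean, batch_std)
gbn_out = generalized_batch_norm(batch, batch_median, batch_mean_abs_dev)
```

With the mean/std pair the output has roughly zero mean and unit standard deviation per feature, as in ordinary BN. With the median-based pair the output is centered at the per-feature median instead.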

How Does Batch Normalization Help Optimization? (No, It Is Not About Internal Covariate Shift)

An exploration of why BatchNorm works: it makes the optimization objective smoother.