This happens with getClient and setClient because they rely on Svelte context, which is only available during component initialization (construction) and cannot be used inside an event handler.
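Below is a minimal TypeScript sketch of why the handler fails and what the usual fix looks like: read the client once during initialization and close over it. Everything here is a simplified model written for illustration. setClient, getClient, initComponent and the module-level currentContext are hypothetical stand-ins, not the source of Svelte or svelte-apollo. Only the error text mirrors the message Svelte 3 throws.

```typescript
// Simplified model of a Svelte-style context API (hypothetical, for illustration only).
type Context = Map<string, unknown>;

// Non-null only while a component's initialization code is running.
let currentContext: Context | null = null;

function getCurrentContext(): Context {
  if (currentContext === null) {
    throw new Error("Function called outside component initialization");
  }
  return currentContext;
}

// Hypothetical stand-ins for setClient/getClient: thin wrappers over the context map.
function setClient(client: string): void {
  getCurrentContext().set("client", client);
}
function getClient(): string {
  return getCurrentContext().get("client") as string;
}

// Runs setup synchronously, then clears the current context again,
// so context lookups are only valid while setup is executing.
function initComponent<T>(ctx: Context, setup: () => T): T {
  currentContext = ctx;
  try {
    return setup();
  } finally {
    currentContext = null;
  }
}

const ctx: Context = new Map();
initComponent(ctx, () => setClient("apollo-client"));

// Broken: the handler calls getClient() later, after initialization has finished.
const brokenHandler = initComponent(ctx, () => () => getClient());

// Fixed: call getClient() during initialization and capture the result in a closure.
const fixedHandler = initComponent(ctx, () => {
  const client = getClient();
  return () => client;
});

let brokenError = "";
try {
  brokenHandler();
} catch (e) {
  brokenError = (e as Error).message;
}
console.log(brokenError);    // Function called outside component initialization
console.log(fixedHandler()); // apollo-client
```

In a real component, the same fix means calling getClient() at the top level of the script and using the returned client inside the handler.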

13 Matching Annotations

- Jul 2021

github.com

- Feb 2021

github.com

Setting it to a lambda will lazily set the default value for that input.
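A minimal sketch of the idea, assuming an input definition whose default may be either a plain value or a zero-argument function. The annotated code is not quoted here, so Default, InputSpec and resolveInput are hypothetical names. The point is only that a function default runs when the value is actually missing, not when the input is declared.

```typescript
// Hypothetical input definition: the default is a value or a zero-argument function.
// Caveat: if T is itself a function type, this union is ambiguous.
type Default<T> = T | (() => T);

interface InputSpec<T> {
  name: string;
  default: Default<T>;
}

// Use the provided value if there is one; otherwise evaluate the default.
// A function default is only called here, on demand (lazily).
function resolveInput<T>(spec: InputSpec<T>, provided: T | undefined): T {
  if (provided !== undefined) {
    return provided;
  }
  const d = spec.default;
  return typeof d === "function" ? (d as () => T)() : (d as T);
}

let calls = 0;
const expensiveDefault = (): number => {
  calls += 1; // count evaluations to show when the default is computed
  return 42;
};
const spec: InputSpec<number> = { name: "timeout", default: expensiveDefault };

const a = resolveInput(spec, 10);        // value provided: the lambda never runs
const b = resolveInput(spec, undefined); // value missing: the lambda runs now
console.log(a, b, calls); // 10 42 1
```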

github.com

The rationale is that it's actually clearer to eager initialize. You don't need to worry about timing/concurrency that way. Lazy init is inherently more complex than eager init, so there should be a reason to choose lazy over eager rather than the other way around.
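To make the trade-off concrete, here is a sketch with two hypothetical classes, EagerConfig and LazyConfig, that load the same settings. The eager version has exactly one state once constructed. The lazy version adds a "not yet loaded" state and a check on every access, which is the extra complexity the quote refers to.

```typescript
// Eager: the settings are loaded in the constructor, so they always exist
// once the object exists. No extra state and no readiness checks.
class EagerConfig {
  readonly settings: Map<string, string>;
  constructor(load: () => Map<string, string>) {
    this.settings = load();
  }
  get(key: string): string | undefined {
    return this.settings.get(key);
  }
}

// Lazy: the settings are loaded on first access. This needs an extra
// "not loaded yet" state and a check on every call; with an async loader,
// this check-then-set step is also where concurrent callers can race.
class LazyConfig {
  private settings: Map<string, string> | undefined = undefined;
  private readonly load: () => Map<string, string>;
  constructor(load: () => Map<string, string>) {
    this.load = load;
  }
  get(key: string): string | undefined {
    if (this.settings === undefined) {
      this.settings = this.load(); // the first caller pays the loading cost
    }
    return this.settings.get(key);
  }
}

let loads = 0;
const loader = (): Map<string, string> => {
  loads += 1;
  return new Map([["mode", "prod"]]);
};

const eager = new EagerConfig(loader); // loads immediately
const lazy = new LazyConfig(loader);   // loads nothing yet
const before = loads;
lazy.get("mode"); // triggers the load
lazy.get("mode"); // reuses the loaded settings
console.log(before, loads); // 1 2
```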

- Nov 2020

stackoverflow.com

- Oct 2020

github.com

- Sep 2020

stackoverflow.com

stackoverflow.com

setContext must be called synchronously during component initialization, that is, from the root of the <script> tag.
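A simplified model of why "synchronously" matters, assuming the runtime keeps a module-level current component that is set only while setup code runs. Component, init and setContext here are hypothetical stand-ins, not Svelte's implementation. A call placed after an await resumes only after init() has returned, so it finds no current component.

```typescript
// Hypothetical model: a component owns a context map.
interface Component {
  name: string;
  context: Map<string, unknown>;
}

// Set only while a component's setup code is running.
let currentComponent: Component | null = null;

function setContext(key: string, value: unknown): void {
  if (currentComponent === null) {
    throw new Error("Function called outside component initialization");
  }
  currentComponent.context.set(key, value);
}

// Runs setup (the "root of the <script> tag") synchronously, then resets
// currentComponent before any awaited continuation gets a chance to run.
function init(component: Component, setup: () => void): void {
  currentComponent = component;
  try {
    setup();
  } finally {
    currentComponent = null;
  }
}

const app: Component = { name: "App", context: new Map() };
let asyncError = "";

init(app, () => {
  setContext("theme", "dark"); // OK: called synchronously during setup

  void (async () => {
    await Promise.resolve(); // the rest runs after init() has returned
    try {
      setContext("user", "ada"); // fails: no component is initializing anymore
    } catch (e) {
      asyncError = (e as Error).message;
    }
  })();
});

setTimeout(() => console.log(asyncError), 0); // Function called outside component initialization
```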

github.com

github.com

It looks like the issue stems from having "svelte" listed under dependencies instead of devDependencies in package.json within the Sapper project. This causes import 'svelte' to load set_current_component from the npm package that Rollup leaves external, instead of the copy bundled into the Sapper-generated server.js.
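A sketch of why two copies of the package break context, under the assumption (taken from the quote) that the runtime's current-component state lives in module scope. createRuntime is a hypothetical stand-in for one loaded copy of the svelte package. The compiled component registers itself with the bundled copy, while setContext is called through whichever copy import 'svelte' resolved to.

```typescript
// Hypothetical model of one loaded copy of the runtime module.
interface Component {
  context: Map<string, unknown>;
}

function createRuntime(label: string) {
  let current: Component | null = null; // private to this copy of the module
  return {
    setCurrentComponent(c: Component | null): void {
      current = c;
    },
    setContext(key: string, value: unknown): void {
      if (current === null) {
        throw new Error(`[${label}] Function called outside component initialization`);
      }
      current.context.set(key, value);
    },
  };
}
type Runtime = ReturnType<typeof createRuntime>;

// The component initializes through `bundled`; user code calls `imported`.
function initComponent(bundled: Runtime, imported: Runtime): string {
  const component: Component = { context: new Map() };
  bundled.setCurrentComponent(component);
  try {
    imported.setContext("key", "value");
    return "ok";
  } catch (e) {
    return (e as Error).message;
  } finally {
    bundled.setCurrentComponent(null);
  }
}

const bundledCopy = createRuntime("bundled");
const externalCopy = createRuntime("node_modules");

// "svelte" in dependencies: left external, so two separate copies exist.
const twoCopies = initComponent(bundledCopy, externalCopy);
// "svelte" in devDependencies: bundled, so both sides share one copy.
const oneCopy = initComponent(bundledCopy, bundledCopy);

console.log(twoCopies); // [node_modules] Function called outside component initialization
console.log(oneCopy);   // ok
```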

- Jun 2019

iphysresearch.github.io

RandomOut: Using a convolutional gradient norm to rescue convolutional filters

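Maybe this time my advisor will believe that stability after network initialization has always been a problem. Also, this paper's experiments were run on the excellent MXNet framework, which deserves a thumbs-up.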

- Feb 2019

iphysresearch.github.io

Fixup Initialization: Residual Learning Without Normalization

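When it comes to fitting performance, the design of regularization and BN is always subtle, especially once the learning rate is thrown into the mix. The author of this paper also discussed related questions about the paper on Reddit.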

- Jan 2019

iphysresearch.github.io

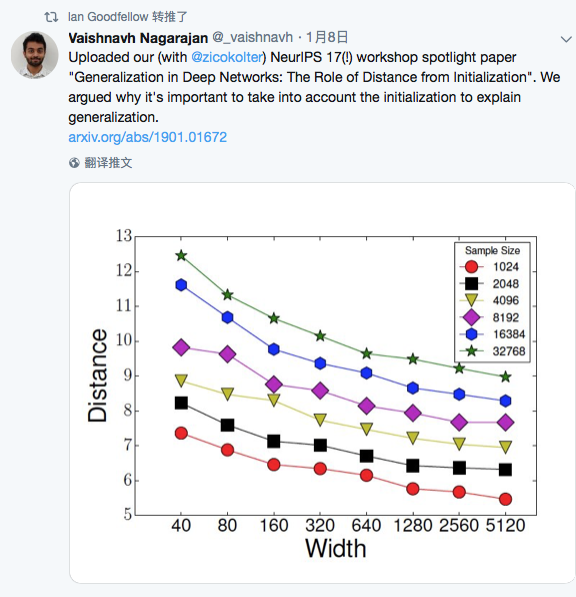

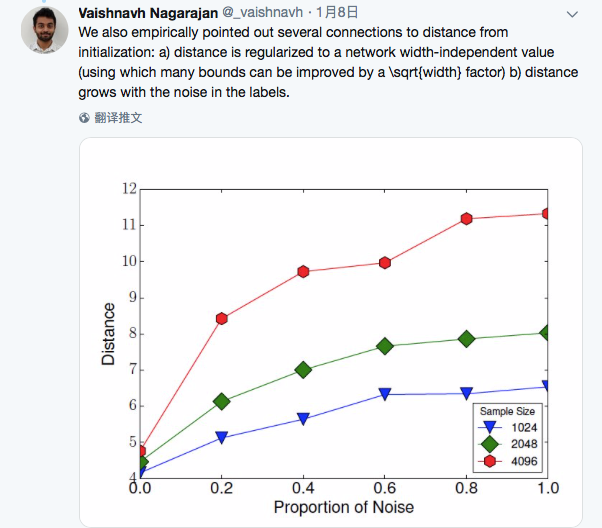

Generalization in Deep Networks: The Role of Distance from Initialization

Goodfellow retweeted this paper.

The authors stress how important a model's initial parameters are for explaining its generalization ability!
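The "distance from initialization" in the title measures how far the trained weights have moved from their initial values. The following is a minimal sketch that assumes the Euclidean norm over all parameters flattened into a single vector. The paper may measure this per layer or with a different norm, and this note does not specify which. distanceFromInit is a hypothetical helper.

```typescript
// Hypothetical helper: L2 (Frobenius) distance between current and initial
// parameters. Assumes both arrays list the parameters in the same order.
function distanceFromInit(current: number[], initial: number[]): number {
  if (current.length !== initial.length) {
    throw new Error("parameter vectors must have the same length");
  }
  let sumSq = 0;
  for (let i = 0; i < current.length; i++) {
    const d = current[i] - initial[i];
    sumSq += d * d;
  }
  return Math.sqrt(sumSq);
}

const initialParams = [1, -1];
const trainedParams = [4, 3];
console.log(distanceFromInit(trainedParams, initialParams)); // 5
```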
