- Last 7 days

-

k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link

-

k51qzi5uqu5dgbb7ivfscw95jb8zh8n2roliqvb5ri1kw974tjf7fn6281ppgt.ipns.dweb.link k51qzi5uqu5dgbb7ivfscw95jb8zh8n2roliqvb5ri1kw974tjf7fn6281ppgt.ipns.dweb.link

-

mirror between Peergos & IPFS infracons

Exploriment

= infracons

- commons based

- Peer-to-Peer,

- co-

- evolving

- Produced

- auto

- poietic

- nomous

- permanent

- evergreen

- un

- en-closeable

- unstoppable

- surveillable

- born multiplayer

- human Actor Centric

- as opposed to machine/provider centric

named networks based InterPlanetary omni-optional intentionally transparent

holonic info-morphic-com(munication|putation) infrastructure

built from Trust for Trust

autopoietic evergreen

designed to be easy to emulate and compelling to do

Flip the Web Open

permanent link to mutable content on both Peergos and IPNS

-

-

bafybeig7nrhxx3nyb5rfmuj7cfy5xbl4ldtwr57ol6lykibww625qkxnke.ipfs.dweb.link bafybeig7nrhxx3nyb5rfmuj7cfy5xbl4ldtwr57ol6lykibww625qkxnke.ipfs.dweb.link

-

Origo Folder for my hyperpost Peergos Account

? - filename=hyperpost-0.html - urn=/💻/asus/🧊/♖/hyperpost/0/

No Groan Zone

-

-

bafybeihj2isaoi7y4ulvilk2kjbfhnw6cvjxk5d7j6ucsm3f7f6hbtsl7u.ipfs.dweb.link bafybeihj2isaoi7y4ulvilk2kjbfhnw6cvjxk5d7j6ucsm3f7f6hbtsl7u.ipfs.dweb.link

-

Convergence appeared on the horizon some 3 months ago

-

the Groan Zone

-

-

search.brave.com search.brave.com

-

:do.search.brave?q=hermeticism+role+in+heuristic

-

-

-

-

gentleman's

úi

lordly

-

-

www.youtube.com www.youtube.com

-

gamatria

GAMATRIA

-

-

www.youtube.com www.youtube.com

-

-

https://via.hypothes.is/https://www.youtube.com/watch?v=Kij6yNenOF4

-

Richard Feynman OBSERVED VON NEUMANN's BRAIN And Saw Something NOT HUMAN


-

-

www.google.com www.google.com

-

www.inkandswitch.com www.inkandswitch.com

-

search for a solution that gives seven green checkmarks.

seven green checkmarks

what is required above the threshold, the sweet spot

- Fast

- Multi-device

- Offline

- Collaboration

- Longevity

- Privacy

- User control

-

real-time collaboration in a local-first setting

continuous collaboration without the need to be real time and synchronous

that is the challenge

-

moved to a “conflicting changes” notebook

-

DiffMerge,

-

-

www.youtube.com www.youtube.com

-

Annotating this presentation with comments connecting it to current thinking

-

- Feb 2026

-

peersuite.space peersuite.space

-

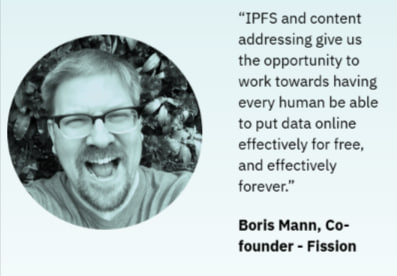

Note that uploading data to IPFS is not a direct upload to a centralized server but rather storing the data on your node or a pinning service, which then makes it available to the network

IPFS is Magic

-

To upload a string to IPFS

import { createHelia } from 'helia';
import { unixfs } from '@helia/unixfs';

// Basic auth header, as used by some hosted IPFS APIs (placeholder credentials)
const projectId = 'your-project-id';
const projectSecret = 'your-project-secret';
const auth = 'Basic ' + Buffer.from(`${projectId}:${projectSecret}`).toString('base64');

-

Saved PeerSuite Document 12_16_2025

scenario

use a peersuite space document for inotes

and

Web Snarfing

-

-

bafybeieqimlibuvbuddoenauopk7xbldaw22ngslkkwd6evey76qhupalq.ipfs.dweb.link bafybeieqimlibuvbuddoenauopk7xbldaw22ngslkkwd6evey76qhupalq.ipfs.dweb.link

-

experiment - ~ peer.suite.space.instant-co-laboration

for - PeerSuite.Space - trailblazing - innotations - clie0anmes - named focus of interest - first experiment - atomic formulae

helia save string
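A minimal sketch of what "helia save string" could look like, assuming the `addBytes` method of `@helia/unixfs`. The function names `toBytes` and `saveString` are illustrative, not part of any library. The Helia setup is shown only in comments so the block stays self-contained. As far as I understand, Helia runs as an in-process node, so this path should not need a project ID or secret like the placeholders above.

```javascript
// Encode text as UTF-8 bytes, the form UnixFS stores.
function toBytes(text) {
  return new TextEncoder().encode(text);
}

// `fs` is assumed to be a UnixFS instance, obtained for example with:
//   import { createHelia } from 'helia'
//   import { unixfs } from '@helia/unixfs'
//   const fs = unixfs(await createHelia())
async function saveString(fs, text) {
  // addBytes resolves to the CID of the stored content
  const cid = await fs.addBytes(toBytes(text));
  return cid.toString();
}
```

The returned CID string can then be opened through a gateway in the same `<cid>.ipfs.dweb.link` form used throughout these notes, provided the node (or a pinning service) keeps the content available.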

-

experiment~peer.suite.space.instant-co-laboration


-

-

eriktorenberg.substack.com eriktorenberg.substack.com

-

Gyuri Lajos, Oct 8, 2021: The best moat comes from persistence and long-term dedication. Engage with something which is important for the world, for which the world is not ready yet, but which you love to do and which engages your whole being. Subsidize "your habit", your "side hustle", with something adjacent, as Frank Herbert would advise you. When the time comes, you will be ready, and it cannot easily be re-engineered. There is no short-term fix for levelling up what Long Term Attention can create. It is like the joke: do all that with your lawn, but keep doing it for hundreds of years.

The best moat

-

-

www.google.com www.google.com

-

-

https://thenewstack.io/google-docs-switches-to-canvas-rendering-sidelining-the-dom/

-

Google Docs Switches to Canvas Rendering, Sidelining the DOM

-

-

stackoverflow.com stackoverflow.com

-

-

How to force Google Docs to render HTML instead of Canvas from Chrome Extension?

google docs render HTML

-

-

www.bidx.ai www.bidx.ai About Us

-

-

deep tech

sounds intriguing

-

It takes what’s real in your business and helps you present it

takes real presents

-

The problem isn’t lack of capital. It’s the process.

indeed

-

-

www.linkedin.com www.linkedin.com

-

from - BidX

-

crowdsourcing and citizen science projects.

citizen science

-

-

www.linkedin.com www.linkedin.com

-

www.linkedin.com www.linkedin.com

-

-

Terry Yoell, Co-Founder & CEO at Flock

-

-

guide.bidx.ai guide.bidx.ai

-

Looks like I am unable to annotate this

-

Just installed the Hypothesis bookmarklet so that I can annotate this on mobile

-

-

www.youtube.com www.youtube.com

-

past 15 years

2003 DTI Smart Award for Innovation Feasibility study:

"Personalized Mobile Computing"

all about Personal Knowledge Work interchangeably on Desktop and Mobile Devices (Palm and Windows Mobile)

Doing DocBook Structure without the complexity of MarkDown

but morphic MarkIn Notation

HyperText without the complexities of XML/XSD

Extensible Augmented Morphic Writing

Rendering was slow, 10 years ahead of the capabilities

The hidden goal was to eventually go from Personal Learning Networks

towards Personal first, Autonomous InterPersonal InterPlanetary Mutual Learning Networks

Indy Learning Commons

-

five minutes uh talk

more like 10

-

-

www.inkandswitch.com www.inkandswitch.com

-

jonudell.info jonudell.info

-

-

Instant: a graph-based, Firebase-like platform with:

firebase like platform

-

a "reverse design" approach that starts from the value driven objective of local-first, offline first approach that helps to "Lock the Web Open"

- reverse design

- lock the web open

-

database

database is not what we need

we need infobase

-

Unified local/remote architecture: The same query/mutation language runs in the browser and on the server.

unified local/remote architecture

-

Optimistic updates (instant UI feedback)

In a "local-first" infrastructure, updates are instant. The fastest remote API call is the one you do not need to make.

When updates are local-first, they can be relied on.
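To make the contrast with optimistic updates concrete, here is a small sketch of a local-first write path. `LocalFirstStore` and the `push` callback are hypothetical names for illustration and are not taken from Instant or any other library. The write lands in local state at once, and delivery to a peer or server happens later, if at all.

```javascript
// Hypothetical local-first store: writes apply locally at once
// and are queued for a later, optional sync.
class LocalFirstStore {
  constructor() {
    this.data = new Map(); // local state, the source of truth for the UI
    this.pending = [];     // changes not yet delivered to any peer or server
  }

  set(key, value) {
    this.data.set(key, value); // no network round trip involved
    this.pending.push({ key, value, at: Date.now() });
  }

  get(key) {
    return this.data.get(key);
  }

  // `push` is an assumed async callback that delivers one change remotely.
  async sync(push) {
    while (this.pending.length > 0) {
      await push(this.pending[0]); // if this throws, the change stays queued
      this.pending.shift();
    }
  }
}

const store = new LocalFirstStore();
store.set('note', 'hello');
console.log(store.get('note'), store.pending.length);
```

Nothing is rolled back when the network is unavailable. The queued change simply waits, which is why the local update can be relied on.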

-

delightful apps like Figma, Notion, and Linear share three standou

notion

-

-

jonudell.info jonudell.info

-

:do.search.hypothesis.faceted~local-first

-

-

search.brave.com search.brave.com

-

https://hypothes.is/a/vKsdGAR_EfGnmh_yIpWeWQ

research~local-first

-

-

github.com github.com

-

local-first cyberspace

local-first cyberspace

-

people can dig around and reuse ideas

re-use, re-purpose, refactor, exapt, revise, extend

-

-

www.inkandswitch.com www.inkandswitch.com

-

We believe professional and creative users deserve software that realizes the local-first goals, helping them collaborate seamlessly while also allowing them to retain full ownership of their work.

This objective deserves a new design approach to software, a "reverse design" approach that starts from the value-driven objective of a local-first, offline-first approach that helps to "Lock the Web Open" and make it

- human centric,

- instead of being provider centric,

- for the benefit of the participants in the long tail of the internet

- instead of big tech

- maximize autonomy for people

- scale their unenclosable reach

- helps them to find the Other and collaborate

- private, permanent custody of all their data

- along with the open commons based peer produced capabilities that they make use of

- facilitating interpersonal trusted secure communication

- unenclosable carrier

- and ownership of the means of computations interaction

-

-

hyperpost.peergos.me hyperpost.peergos.me

-

/hyperpost/🌐/🎭/gyuri/x

Gyuri's PostBox Folder

-

-

hypothes.is hypothes.is

-

search.brave.com search.brave.com

-

search~brave?nexialitc

-

-

www.youtube.com www.youtube.com

-

Metabolic ambush fruit

Pattern not perfection

-

Breaking fast

-

-

web.archive.org web.archive.org Taoism

-

This philosophical Taoism, individualistic by nature

individualistic

-

ineffable: "The Tao that can be told is not the eternal Tao."

ineffable

-

both the source and the driving force behind everything that exists.

source and the force

-

-

search.brave.com search.brave.com

-

https://bafybeihpze7gndqzby6veqwyue6sj64f7zxvinypcwatnrlg6c2mpiqoru.ipfs.dweb.link?filename=Taoism%20(03_02_2026%2014%EF%BC%9A01%EF%BC%9A20).html
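The saved-page link above follows a `<cid>.ipfs.dweb.link?filename=<encoded name>` pattern. A sketch of how such a link could be assembled follows. `ipfsFileUrl` is a hypothetical helper, and the use of `encodeURIComponent` is an assumption that happens to reproduce the encoding seen in the link (parentheses kept, spaces and the full-width colons escaped).

```javascript
// Hypothetical helper: subdomain-gateway link to a CID with a filename hint.
function ipfsFileUrl(cid, filename, gateway = 'dweb.link') {
  // encodeURIComponent leaves ( ) _ . unescaped and escapes spaces
  // and non-ASCII characters as UTF-8 percent sequences.
  return `https://${cid}.ipfs.${gateway}?filename=${encodeURIComponent(filename)}`;
}

const taoismUrl = ipfsFileUrl(
  'bafybeihpze7gndqzby6veqwyue6sj64f7zxvinypcwatnrlg6c2mpiqoru',
  'Taoism (03_02_2026 14\uFF1A01\uFF1A20).html' // \uFF1A is the full-width colon
);
console.log(taoismUrl);
```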

-

brave.search - is Taoism individualistic or communitarian

-

without imposing will

not will

-

spontaneity (wu-wei)

non-action

-

"one's 'self' cannot be understood or fulfilled without reference to other persons, and to the broader set of realities.

self through the other

-

the self is not separate but deeply interconnected

self interconnected

-

-

bafybeiatl36hmx3mlokjpcy432zgb6tdlh7vwto2konmz4cna3lbft2wqm.ipfs.dweb.link bafybeiatl36hmx3mlokjpcy432zgb6tdlh7vwto2konmz4cna3lbft2wqm.ipfs.dweb.link

-

/hyperpost/~/indyweb/📓/20/26/14/setup-indy0wiki.pad.html

moving it to /hyperpost/~/indyweb/📓/20/26/14/01/setup-indy0wiki.pad.html

-

- Jan 2026

-

www.youtube.com www.youtube.com

-

How the reliance on computers in classrooms impacts students

143

schools have replaced academic rigour with convenience

180 offloading production

tools for offloading

not for learning

adult-level tools to avoid thinking

320

go back to analogue learning

-

-

www.youtube.com www.youtube.com

-

Palantir C.E.O. Alex Karp Defends Aiding Trump’s Immigration Policies

palantir

-

-

www.crunchbase.com www.crunchbase.com

-

writing is a tool for thinking

Yes

WikiNizer was there 5 years earlier

the time is still not right

-

-

bafybeidrtgnu5v7ftuvre6d4tl6p44jd5xwzvljurnpqvvpuyhxqfyuaoy.ipfs.dweb.link bafybeidrtgnu5v7ftuvre6d4tl6p44jd5xwzvljurnpqvvpuyhxqfyuaoy.ipfs.dweb.link

-

-

-

Peersuite [source code] - Peer to peer workspace

Found out about peersuite.space

back in May 2025

-

-

-

Peersuite

Peer to peer workspace

-

-

bafybeialrttnptshyxshhiqt4kh2j4hhc5svthgagqqoeyx4rwokiw26a4.ipfs.dweb.link bafybeialrttnptshyxshhiqt4kh2j4hhc5svthgagqqoeyx4rwokiw26a4.ipfs.dweb.link

-

path= /🧊/♖/hyperpost/~/indyweb/📓/20/25/11/3

web+indy://get?

ipns=k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x

uri - local: /🧊/♖/hyperpost/~/indyweb/📓/20/25/11/ - dweb: /🧊/♖/hyperpost/~/indyweb/📓/20/25/11/ - ipfs.io: /🧊/♖/hyperpost/~/indyweb/📓/20/25/11/

ipfs= https://bafybeialrttnptshyxshhiqt4kh2j4hhc5svthgagqqoeyx4rwokiw26a4.ipfs.dweb.link

http://k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.localhost:8080/%E2%99%96/hyperpost/~/indyweb/%F0%9F%93%93/20/25/11/3/setup/-/indy0wiki.pad/

-

-

k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.localhost:8080 k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.localhost:8080

-

http://k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.localhost:8080/%E2%99%96/hyperpost/~/indyweb/%F0%9F%93%93/20/25/11/3/setup/-/indy0wiki.pad/

https://bafybeialrttnptshyxshhiqt4kh2j4hhc5svthgagqqoeyx4rwokiw26a4.ipfs.dweb.link

-

-

k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link

-

/🧊/♖/hyperpost/~/indyweb/📓/20/25/11/3

-

http://k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.localhost:8080/%E2%99%96/hyperpost/~/indyweb/%F0%9F%93%93/20/25/11/3/setup/-/indy0wiki.pad/

-

indyweb.link/♖/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

indyweb.link/♖/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

https://k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link/%E2%99%96/hyperpost/~/indyweb/%F0%9F%93%93/20/25/11/3/setup/-/indy0wiki.pad/
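The relation between the readable path and the percent-encoded gateway link above can be sketched as follows. `ipnsGatewayUrl` is a hypothetical helper. It assumes the subdomain gateway form `<name>.ipns.dweb.link` and uses `encodeURI`, which escapes the emoji as UTF-8 bytes while leaving `/`, `~`, `-` and `.` untouched.

```javascript
// Hypothetical helper: subdomain-gateway URL for an IPNS name plus a path.
function ipnsGatewayUrl(ipnsName, path, gateway = 'dweb.link') {
  const normalized = path.startsWith('/') ? path : '/' + path;
  return `https://${ipnsName}.ipns.${gateway}${encodeURI(normalized)}`;
}

const padUrl = ipnsGatewayUrl(
  'k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x',
  '/♖/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/'
);
console.log(padUrl);
```

Passing `'localhost:8080'` as the gateway gives the host part of the local variant, though that one is served over `http` rather than `https`.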

-

for - indy/0/wiki/Pad

/♖/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

![/♖/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad]

-

-

indyweb.link/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

-

-

k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link

-

indyweb.link.♖/hyperpost/~/indyweb/📓/20/25/11/3/

-

indyweb.link/♖/hyperpost/~/indyweb/📓/20/25/11/3/

-

.do.how - instance first experiment - to save work on Peergos

save work via Peergos

-

setup - indy0wiki.pad

make it work with peersuite.space

-

-

www.youtube.com www.youtube.com

-

-

Author of Dune: Frank Herbert's extraordinary life

x

-

-

search.brave.com search.brave.com

-

Ceteris paribus

"all other things being equal", …

-

"all other things being equal"

Ceteris paribus

-

-

-

distributed network,

distributed networks are important

the shift towards decentralization is a decent move

but we tend to lose sight of the fact that the networks that truly matter are the ones people themselves form

interpersonal trust networks

-

Nested levels of intention

Nesting levels of intention

singular?

if transpires is a result of coherence

Bottleneck: we have very poor means of even articulating intentions

individually, let alone co-laboratively

Capacity for symmathesy (Mutual Learning) is key

-

Small islands of coherence

in a sea of chaos have the capacity “to shift the entire system.” (Ilya Prigogine)

-

choosing responses

rather than reacting

-

https://bafybeieeiicvdgmtqbxqokzyujayy5jukamko7yrusawxmugagqghpb64y.ipfs.dweb.link?filename=The_Three_Operating_States_of_People_in_Organizations-ohqpm_ocr.pdf

/💻/asus/🧊/♖/hyperpost/~/indyweb/📓

-

-

search.brave.com search.brave.com

-

search.brave.com search.brave.com

-

-

https://bafybeidu3kag7x2zrux3brle5zrikywtelo4uvvue6y45unt7g5vpus6we.ipfs.dweb.link?filename=~hamann%2Bphilosopher-Brave.Search.html

Successfully published under the key:

/💻/asus/🧊/♖/hyperpost/~/indyweb/📓

k51qzi5uqu5dkz0r74zg5yd4g8j3kfo08td4htqrfp8ebu3x17pil37zuulrzk

Copy the link below and share it with others. The IPNS address will resolve as long as your node remains available on the network once a day to refresh the IPNS record.

-

-

-

recording ipns keys

/💻/asus/🧊/♖/hyperpost/~/indyweb/📓 k51qzi5uqu5dkz0r74zg5yd4g8j3kfo08td4htqrfp8ebu3x17pil37zuulrzk

https://ipfs.io/ipns/k51qzi5uqu5dkz0r74zg5yd4g8j3kfo08td4htqrfp8ebu3x17pil37zuulrzk

-

-

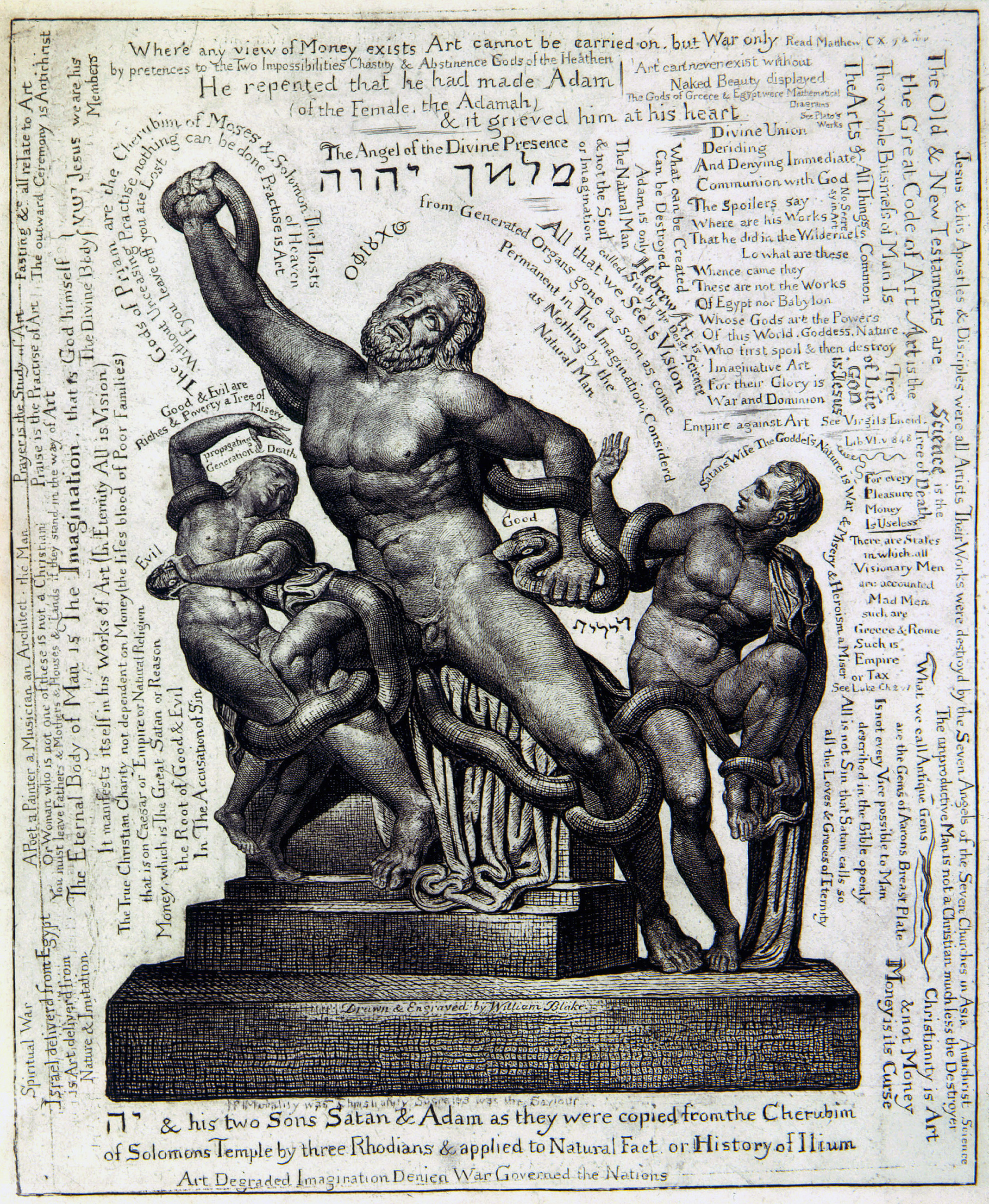

www.arthistoryproject.com www.arthistoryproject.com Laocoön

-

Laocoön William Blake, 1825 – 1827

-

-

raypodder.medium.com raypodder.medium.com

-

https://bafybeigi4urr6jumopybpwxfu2i5edncg4e64c2z6dgtgm2clro7ibxmpe.ipfs.dweb.link/?filename=O%20%E2%80%94%20The%20Last%20Debt.%20When%20the%20empire%E2%80%99s%20money%20lies%2C%20its%E2%80%A6%20%EF%BD%9C%20by%20Ray%20Podder%20%EF%BD%9C%20Medium.html

Page saved with SingleFile web-indy://💻/asus/ 🧊/♖//hyperpost/~/indyweb/📓/20/26/15/o-the-last-debt

Page saved with SingleFile url: https://raypodder.medium.com/o-the-last-debt-3c12a1d998e7 saved date: Sat Dec 27 2025 21:56:44 GMT+0100 (Central European Standard Time)

https://raypodder.medium.com/o-the-last-debt-3c12a1d998e7

-

Page saved with SingleFile indyweb.link/♖.hyperpost/~/indyweb/📓/20/26/15/o-the-last-debt

-

ESG

https://bafybeihbbq7hyuv6t5atovt2y5pfj6w4ixp55vco345xj2ozojdlxc635y.ipfs.dweb.link?filename=esg%20-%20Brave%20Search%20(15_01_2026%2016%EF%BC%9A07%EF%BC%9A10).html / 💻/ asus/ 🧊/ ♖/ hyperpost/ ~/ gyuri/ 🏛️/ 20/ 26/ 01/ 15 https://bafybeihbbq7hyuv6t5atovt2y5pfj6w4ixp55vco345xj2ozojdlxc635y.ipfs.dweb.link?filename=esg%20-%20Brave%20Search%20(15_01_2026%2016%EF%BC%9A07%EF%BC%9A10).html

-

machine

didn’t just replace workers — it replaced wages.

-

morals

O will give them =

-

the flow

is just getting started.

-

next world order

isn’t another empire

???

-

filling

no one’s.

???

-

O gives it

context.

-

AI gave us

power without purpose.

-

Intelligence

Finds Its Mirror

-

value system

where abundance doesn’t collapse the price of trust.

-

thermodynamics

with conscience.

-

isn’t socialism

with better UX.

-

Oconomy

living proof engine

-

O offers direction

a way

-

O delivers discipline

marry that with interpersonal connectivity and verifiable, recapitulable info-communication flows, privately and securely

trust but verify, to build from personal trust for trust to scale

-

Fairness

not policy

law of motion

-

Burn to mint. Proof to earn. Flow to grow.

mint, earn, flow to grow

-

Individual Success Rate

fairness field, a living measure of reciprocity.

-

two-way contract of outcome, not promise

contract of outcome

-

service fee on disbelief

inflation

-

-

www.facebook.com www.facebook.com

-

Holographic Algebra

-

-

futurepolis.substack.com futurepolis.substack.com

-

en.wikipedia.org en.wikipedia.org

-

John Wycliffe

-

-

-

norabateson.wordpress.com norabateson.wordpress.com

-

Symmathesy: A Word in Progress, November 3, 2015 ~ Nora Bateson

-

-

search.brave.com search.brave.com Esg

-

/ 💻/ asus/ 🧊/ ♖/ hyperpost/ ~/ gyuri/ 🏛️/ 20/ 26/ 01/ 15

-

-

k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link

-

dot.doc

-

https://k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x.ipns.dweb.link/%E2%99%96/hyperpost/~/indyweb/%F0%9F%93%93/20/26/14/setup-indy0wiki.pad.html

-

-

-

ipfs.tech ipfs.tech

-

-

en.wikipedia.org en.wikipedia.org

-

Bernard Lazare's term paria conscient (conscious pariah)

paria conscient

-

a personal trait that Arendt had recognized in herself, although she did not embrace the term until later

embraced

-

Rahel Varnhagen's discovery of living with her destiny as being a "conscious pariah"

social nonconformism is the condition sine qua non of intellectual achievement

-

-

k51qzi5uqu5djd3o0ovsrj50zh66awinzu4cw6bgc9heh0gtuzhu6zsl2z05xy.ipns.dweb.link k51qzi5uqu5djd3o0ovsrj50zh66awinzu4cw6bgc9heh0gtuzhu6zsl2z05xy.ipns.dweb.link

-

indyweb.link/hyperpost/0/♖-hyperpost-0.html

This is the first instance of virtual indyweb.link that resolves via hypothesis to an ipns.dweb.link

indyweb.links are virtual interpersonal interplanetary links

that rely on Open Commons Based Infrastructural constellations powered by hypothes.is, dweb.link, IPFS/IPNS and Peergos
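One way to picture a virtual link resolving to an ipns.dweb.link address is a lookup from a name to an IPNS key. This is only a sketch under loud assumptions. The registry below is hypothetical: in the notes the mapping is carried by Hypothesis annotations, not a table. The choice of the first path segment as the lookup key is a guess, and so is the rule for appending the rest of the path.

```javascript
// Hypothetical registry: first path segment of an indyweb.link -> IPNS name.
// In practice this mapping would come from annotations, not a hard-coded Map.
const registry = new Map([
  ['hyperpost', 'k51qzi5uqu5djd3o0ovsrj50zh66awinzu4cw6bgc9heh0gtuzhu6zsl2z05xy'],
]);

function resolveIndyLink(link, names = registry, gateway = 'dweb.link') {
  const path = link.replace(/^indyweb\.link\//, '');
  const [space, ...rest] = path.split('/');
  const ipnsName = names.get(space);
  if (!ipnsName) return null; // unknown space: nothing to resolve to
  return `https://${ipnsName}.ipns.${gateway}/${encodeURI(rest.join('/'))}`;
}

console.log(resolveIndyLink('indyweb.link/hyperpost/0/♖-hyperpost-0.html'));
```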

-

-

k51qzi5uqu5dgbb7ivfscw95jb8zh8n2roliqvb5ri1kw974tjf7fn6281ppgt.ipns.dweb.link k51qzi5uqu5dgbb7ivfscw95jb8zh8n2roliqvb5ri1kw974tjf7fn6281ppgt.ipns.dweb.link

-

candidate canonical

indyweb.link/♖/hyperpost/0/♖-hyperpost-0.html

-

-

dl.acm.org dl.acm.org

-

TimeChain: A Secure and Decentralized Off-chain Storage System for IoT Time Series Data

-

-

stackoverflow.com stackoverflow.com

-

I love using Shift + Option + F on my Mac to clean up my HTML code. It's like hitting a magic button.

magic

-

How do I modify the VS Code HTML formatter?

x

-

-

www.youtube.com www.youtube.com

-

The Nobel Laureate Who (Also) Says Quantum Theory Is "Totally Wrong"

-

The Nobel Laureate Who (Also) Says Quantum Theory Is "Totally Wrong"


-

-

www.youtube.com www.youtube.com

-

Yakir Aharonov: “Heisenberg Was Right and We Ignored Him”

Quantum theory is wrong

https://www.youtube.com/watch?v=gsSJPLX-BTA

-

-

bafybeifcipwsimdiduf5maaoso75nhg5a6umbymqxe6tfu6jfrun66klny.ipfs.dweb.link bafybeifcipwsimdiduf5maaoso75nhg5a6umbymqxe6tfu6jfrun66klny.ipfs.dweb.link

-

bafybeic7eloobibivdoj5rvgwmv2og6yewyxx35eomvd2edzbclfck3gtq.ipfs.dweb.link bafybeic7eloobibivdoj5rvgwmv2og6yewyxx35eomvd2edzbclfck3gtq.ipfs.dweb.link

-

bafybeihj2isaoi7y4ulvilk2kjbfhnw6cvjxk5d7j6ucsm3f7f6hbtsl7u.ipfs.dweb.link bafybeihj2isaoi7y4ulvilk2kjbfhnw6cvjxk5d7j6ucsm3f7f6hbtsl7u.ipfs.dweb.link

-

Origo Folder for my hyperpost Peergos Account

x

-

-

search.brave.com search.brave.com

-

x

-

-

search.brave.com search.brave.com

-

brave.search

Nonstandard second-person plural pronouns

-

-

hyperpost.peergos.me hyperpost.peergos.me

-

from - ipfs

resolve ipns

identify ipns local name

on thinkpad IPFS Desktop IPNS Name is

🧊ipfs k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x

-

https://ipfs.io/ipns/k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x/

identify ipns local name

on thinkpad IPFS Desktop IPNS Name is

🧊ipfs k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x

-

new version that contains stuff added under the IPFS version; added card to About section

-

-

bafybeieyxekcifewqgfmxaccbgqcklsalmsrd6hzjwfquas7f2eq7q4vym.ipfs.dweb.link bafybeieyxekcifewqgfmxaccbgqcklsalmsrd6hzjwfquas7f2eq7q4vym.ipfs.dweb.link

-

https://ipfs.io/ipns/k51qzi5uqu5div1auuxm59ygav4p7gdg9z4e9iggtu6m43rmc3xw75mczx2b7x/

identify ipns key

on thinkpad

pointing to Indy 0 Pad editor assets folder copied there

-

the autopoiesis of the IndyWeb

x

-

-

blog.index.network blog.index.network

-

“I trust opportunities will find me.”

opportunities

-

No explaining your story for the tenth time this week.

explain your story

-

letting agents optimize for mutual intent and alignment

mutual intent and alignment

-

designed to filter for quality

filter for quality

-

“It feels safe to think out loud.”

think out loud

-

ambient optimism

x

-

don’t need to broadcast themselves to be found

no need to broadcast to be found

-

shift from performance to presence

presence

-

the right people could find you just

What if the right people could find you just because your intent naturally aligned with theirs?

Yes, solve interpersonal visibility

because everything is shared by you with implicate intent rendered explicit via naming things

-

dentity as content,

identity as content

-

rewriting the same paragraph for different people

rewriting

-

taught to market what you’re looking for like a product

market what u r looking for in public

-

The Shape of What You Meant

.4 cosmik hyperpost

-

the way discovery works online

yes serendipity engine hyperpost

-

places to post, share, search, and shout

post share search and shout

-

-

hyperpost.co hyperpost.co

-

Create Hyperlinks to People in posts

all posts link to their own autonomous interpersonal interplanetary evergreen indranet.work spaces with full verifiable provenance and recapitulable history

-

-

bmannconsulting.com bmannconsulting.com

-

The Shape of What You Meant

cosmik

kosmik

-

Hi 👋 Welcome to Boris Mann's Homepage

sounds like kosmik

https://jonudell.info/h/facet/?user=gyuri&max=50&exactTagSearch=true&addQuoteContext=true&any=kosmik

-

-

cosmik.network cosmik.network People

-

Boris Mann

sounds like kosmik

https://jonudell.info/h/facet/?user=gyuri&max=50&exactTagSearch=true&addQuoteContext=true&any=kosmik

.4 - kosmik - cosmik

-

sounds like kosmik

https://jonudell.info/h/facet/?user=gyuri&max=50&exactTagSearch=true&addQuoteContext=true&any=kosmik

-

Cosmik is a mission and product-driven R&D lab working at the intersection of social networking protocols, AI and next-generation collaborative research tools.

Weaving the IndyWeb of interplanetary autonomous mutual learning networks connecting people, ideas and things

-

-

hyperpost.peergos.me hyperpost.peergos.me

-

♖🌐 .🌌💬.🎭.📓

Gyuri's Daily Notes - 2025 Sept Week 4

-

-

bafybeicur2gxakzniazhvcafajsgo5wm4ur4i3rqz5wumke6vjsnskw2le.ipfs.dweb.link bafybeicur2gxakzniazhvcafajsgo5wm4ur4i3rqz5wumke6vjsnskw2le.ipfs.dweb.link

-

/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

🧊/ me/ 🏛️/ 2026/ 09/setup-indy0wiki.pad.html to.do

setup for editing this with Indy0Pad Inter planetary constellation edition

-

/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

dweb.link: setup-indy0wiki.pad.html

>> /hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

Using the latest indy0pad, which produces HTML documents that, on Peergos, at the point of creation of an index.html file in the right path:

- embed the Hypothesis social annotation tool

- set encoding to UTF-8

- set the title based on the last three elements of the path

It has a fully operational counterpart in the InterPlanetary Constellation, which is now ready for prime time, as IPFS Desktop is now able to share because it is using the new sweep algorithm

The current version as of 2025-12-09

Take this published version as the basis; it will be edited with the InterPlanetary Indy0Pad

will use the annotation margin to save updated dweb.links and add them to the document using the Indy0Pad editor @ IPFS, which actually saves changes into localStorage

and will be able to save to IPFS in the next round of development
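The title rule mentioned above (a title based on the last three elements of the path) could be sketched like this. `titleFromPath` is a hypothetical name. The `' / '` separator is an assumption, since the notes do not say how the elements are joined.

```javascript
// Hypothetical sketch: derive a document title from the last three path elements.
function titleFromPath(path, separator = ' / ') {
  // Drop empty segments produced by leading or trailing slashes.
  const segments = path.split('/').filter((segment) => segment.length > 0);
  return segments.slice(-3).join(separator);
}

const padTitle = titleFromPath('/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/');
console.log(padTitle);
```

For paths with fewer than three elements, the sketch simply uses whatever elements exist.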

-

-

bafybeicur2gxakzniazhvcafajsgo5wm4ur4i3rqz5wumke6vjsnskw2le.ipfs.dweb.link bafybeicur2gxakzniazhvcafajsgo5wm4ur4i3rqz5wumke6vjsnskw2le.ipfs.dweb.link

-

bafybeihswifqidikgla72xjkzfyzewk6hfhyraln5oueimvzbvawt4ixqi.ipfs.dweb.link bafybeihswifqidikgla72xjkzfyzewk6hfhyraln5oueimvzbvawt4ixqi.ipfs.dweb.link

-

/hyperpost/~/indyweb/📓/20/25/11/3/setup/-/indy0wiki.pad/

-

-

bafybeifqdrztyv2xbnfoll3sx7exf5utwugdevtzlv7rqc5dgvqpbw7prm.ipfs.dweb.link bafybeifqdrztyv2xbnfoll3sx7exf5utwugdevtzlv7rqc5dgvqpbw7prm.ipfs.dweb.link

-

www.youtube.com www.youtube.com

-

LIVE From AI Summit: AI That Only Gets Paid When It Works (Inside Tinman AI) via.hypothes.is

AWS moment for the mortgage industry https://hyp.is/sBrKlO0yEfCTchfkDkhPug/www.youtube.com/watch?v=1EtaTLWsZEA

-

Tin Man embeds generative AI across the entire mortgage tech stack

tin man generative AI

mortgage tech stack

-

-

hyperpost.peergos.me hyperpost.peergos.me

-

Gyuri's 📓diary

-

-

brave.com brave.com

-

LIVE From AI Summit: AI That Only Gets Paid When It Works (Inside Tinman AI)

-

-

www.linkedin.com www.linkedin.com

-

Ray Podder: Time Traveler. System Rewriter. Creator-OS of Us.

-

-

search.brave.com search.brave.com

-

-

grounded in intuition, faith, and lived experience

intuition faith experience

-

mystical experience in London in 1758

year after Blake was born

-

"reason is language" (Vernunft ist Sprache)

externalized through the medium of language

-

-

poets.org poets.org

-

silken

Translucent shimmering Silver shining

Silken Twine

-

-

www.youtube.com www.youtube.com

-

The Philosophy of Spirit: Language, Culture, and Art in Johann Hamann and Johann von Herder

-

-

www.goodreads.com www.goodreads.com

-

Johann Georg Hamann

-