GDPR introduces a list of data subjects’ rights that should be obeyed by both data processors and data collectors. The list includes: Right of access by the data subject (Section 2, Article 15). Right to rectification (Section 3, Art 16). Right to object to processing (Section 4, Art 21). Right to erasure, also known as ‘right to be forgotten’ (Section 3, Art 17). Right to restrict processing (Section 3, Art 18). Right to data portability (Section 3, Art 20).

- Mar 2020

-

www.i-scoop.eu

-

Some examples of legitimate interest (although their main aim is to emphasize which rights, freedoms, and so on override legitimate interest).

-

An example of reliance on legitimate interests includes a computer store, using only the contact information provided by a customer in the context of a sale, serving that customer with direct regular mail marketing of similar product offerings — accompanied by an easy-to-select choice of online opt-out.

-

econsultancy.com

-

This is no different where legitimate interests applies – see the examples below from the DPN. It should also be made clear that individuals have the right to object to processing of personal data on these grounds.

-

Individuals can object to data processing for legitimate interests (Article 21 of the GDPR) with the controller getting the opportunity to defend themselves, whereas where the controller uses consent, individuals have the right to withdraw that consent and the ‘right to erasure’. The DPN observes that this may be a factor in whether companies rely on legitimate interests.

-

stackoverflow.com

-

Consent is one of six lawful grounds for processing data. It may be arguable that anti-spam measures such as reCaptcha can fall under "legitimate interests" (i.e., you don't need to ask for consent).

-

- Apr 2019

-

dev.padigital.org

-

337

Without a 300 field, these are the CMCs generated.

-

www.facebook.com

-

Virtual reality meets this bar when it comes to one-on-one conversations: when we analyzed the EEG results of participants who chatted in virtual reality, we found that on average they were within the optimal range of cognitive effort. To put it another way, participants in virtual reality were neither bored nor overstimulated. They were also in the ideal zone for remembering and processing information.

-

- Feb 2019

-

dev.padigital.org

-

$vIndexes.$

Why didn't this generate a 655?

-

- Jan 2019

-

wendynorris.com Title

-

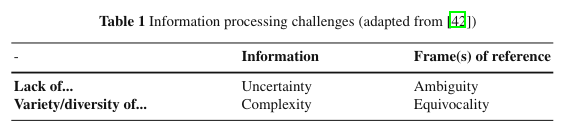

Zack [42] distinguished these four terms according to two dimensions: the nature of what is being processed and the constitution of the processing problem. The nature of what is being processed is either information or frames of reference. With information, we mean “observations that have been cognitively processed and punctuated into coherent messages” [42]. Frames of reference [4, p. 108], on the other hand, are the interpretative frames which provide the context for creating and understanding information. There can be situations in which there is a lack of information or a frame of reference, or too much information or too many frames of reference to process.

Description of information processing challenges and breakdowns.

Uncertainty -- not enough information

Complexity -- too much information

Ambiguity -- lack of clear meaning

Equivocality -- multiple meanings

-

Sensemaking is about contextual rationality, built out of vague questions, muddy answers, and negotiated agreements that attempt to reduce ambiguity and equivocality. The genesis of Sensemaking is a lack of fit between what we expect and what we encounter [40]. With Sensemaking, one does not look at the question of “which course of action should we choose?”, but instead at an earlier point in time where users are unsure whether there is even a decision to be made, with questions such as “what is going on here, and should I even be asking this question just now?” [40]. This shows that Sensemaking is used to overcome situations of ambiguity. When there are too many interpretations of an event, people engage in Sensemaking too, to reduce equivocality.

Definition of sensemaking and how the process interacts with ambiguity and equivocality in framing information.

"Sensemaking is about coping with information processing challenges of ambiguity and equivocality by dealing with frames of reference."

-

Decision making is traditionally viewed as a sequential process of problem classification and definition, alternative generation, alternative evaluation, and selection of the best course of action [26]. This process is about strategic rationality, aimed at reducing uncertainty [6, 36]. Uncertainty can be reduced through objective analysis because it consists of clear questions for which answers exist [5, 40]. Complexity can also be reduced by objective analysis, as it requires restricting or reducing factual information and associated linkages [42].

Definition of decision making and how this process interacts with uncertainty and complexity in information.

"Decision making is about coping with information processing challenges of uncertainty and complexity by dealing with information"

-

Crisis environments are characterized by various types of information problems that complicate the response, such as inaccurate, late, superficial, irrelevant, unreliable, and conflicting information [30, 32]. This poses difficulties for actors to make sense of what is going on and to take appropriate action. Such issues of information processing are a major challenge for the field of crisis management, both conceptually and empirically [19].

Description of information problems in crisis environments.

-

imagej.nih.gov

-

Surface/Interior Depth-Cueing: Depth cues can contribute to the three-dimensional quality of projection images by giving perspective to projected structures. The depth-cueing parameters determine whether projected points originating near the viewer appear brighter, while points further away are dimmed linearly with distance. The trade-off for this increased realism is that data points shown in a depth-cued image no longer possess accurate densitometric values. Two kinds of depth-cueing are available: Surface Depth-Cueing and Interior Depth-Cueing. Surface Depth-Cueing works only on nearest-point projections and the nearest-point component of other projections with opacity turned on. Interior Depth-Cueing works only on brightest-point projections. For both kinds, depth-cueing is turned off when set to zero (i.e., 100% of intensity in back to 100% of intensity in front) and is on when set at 0 < n ≤ 100 (i.e., (100 − n)% of intensity in back to 100% intensity in front). Having independent depth-cueing for surface (nearest-point) and interior (brightest-point) allows for more visualization possibilities.
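The linear dimming described above can be sketched in a few lines of Python. This is an illustration only, not ImageJ's implementation: the function names and the normalized `depth` argument (0.0 at the front of the volume, 1.0 at the back) are assumptions made for the example.

```python
def depth_cue_factor(n: float, depth: float) -> float:
    """Return the brightness multiplier for a point at a normalized depth.

    n     -- depth-cueing setting, 0 <= n <= 100 (0 turns depth-cueing off)
    depth -- hypothetical normalized distance from the viewer:
             0.0 = front of the volume, 1.0 = back
    """
    if not 0 <= n <= 100:
        raise ValueError("n must be in the range 0..100")
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be in the range 0.0..1.0")
    # Linear dimming: 100% of intensity at the front, (100 - n)% at the back.
    return 1.0 - (n / 100.0) * depth


def apply_depth_cue(value: float, n: float, depth: float) -> float:
    """Scale a projected point's value by its depth-cue factor."""
    return value * depth_cue_factor(n, depth)
```

With `n = 0` every point keeps its full value, which matches "turned off". Any nonzero `n` changes the values of points away from the front, which is why depth-cued images lose densitometric accuracy.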

-

Opacity: Can be used to reveal hidden spatial relationships, especially on overlapping objects of different colors and dimensions. The (surface) Opacity parameter permits the display of weighted combinations of nearest-point projection with either of the other two methods, often giving the observer the ability to view inner structures through translucent outer surfaces. To enable this feature, set Opacity to a value greater than zero and select either Mean Value or Brightest Point projection.

-

Interpolate: Check Interpolate to generate a temporary z-scaled stack that is used to generate the projections. Z-scaling eliminates the gaps seen in projections of volumes with slice spacing greater than 1.0 pixels. This option is equivalent to using the Scale plugin from the TransformJ package to scale the stack in the z-dimension by the slice spacing (in pixels). This checkbox is ignored if the slice spacing is less than or equal to 1.0 pixels.

-

Lower/Upper Transparency Bound: Determine the transparency of structures in the volume. Projection calculations disregard points having values less than the lower threshold or greater than the upper threshold. Setting these thresholds permits making background points (those not belonging to any structure) invisible. By setting appropriate thresholds, you can strip away layers having reasonably uniform and unique intensity values and highlight (or make invisible) inner structures. Note that you can also use Image▷Adjust▷Threshold… [T] to set the transparency bounds.
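As a rough illustration of how the transparency bounds affect a projection, the sketch below projects a single ray of voxel values, ordered from front to back, while ignoring any value outside the bounds. It is not taken from ImageJ; the function names and the ray representation are assumptions for the example.

```python
from typing import Optional, Sequence


def brightest_point(ray: Sequence[int], lower: int, upper: int) -> Optional[int]:
    """Brightest value along the ray, ignoring values outside [lower, upper]."""
    visible = [v for v in ray if lower <= v <= upper]
    return max(visible) if visible else None


def nearest_point(ray: Sequence[int], lower: int, upper: int) -> Optional[int]:
    """First value (closest to the viewer) that lies within [lower, upper]."""
    for v in ray:
        if lower <= v <= upper:
            return v
    return None


# One ray through the volume, front to back (made-up values).
ray = [3, 250, 40, 120, 5]
```

With bounds of 10 and 200, the dark background (3, 5) and the very bright outer layer (250) are treated as transparent, so the nearest-point result is 40 and the brightest-point result is 120.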

-

www.sciencedirect.com

-

Orchestrating the execution of many command line tools is a task for Galaxy, while an analysis of life science data with subsequent statistical analysis and visualization is best carried out in KNIME or Orange. Orange with its “ad-hoc” execution of nodes caters to scientists doing quick analyses on small amounts of data, while KNIME is built from the ground up for large tables and images. Noteworthy is that none of the mentioned tools provide image processing capabilities as extensive as those of the KNIME Image Processing plugin (KNIP).

-

In conclusion, the KNIME Image Processing extensions not only enable scientists to easily mix-and-match image processing algorithms with tools from other domains (e.g. machine-learning), scripting languages (e.g. R or Python) or perform a cross-domain analysis using heterogenous data-types (e.g. molecules or sequences), they also open the doors for explorative design of bioimage analysis workflows and their application to process hundreds of thousands of images.

-

In order to further foster this “write once, run anywhere” framework, several independent projects collaborated closely in order to create ImageJ-Ops, an extensible Java framework for image processing algorithms. ImageJ-Ops allows image processing algorithms to be used within a wide range of scientific applications, particularly KNIME and ImageJ and consequently, users need not choose between those applications, but can take advantage of both worlds seamlessly.

-

Most notably, integrating with ImageJ2 and FIJI allows scientists to easily turn ImageJ2 plugins into KNIME nodes, without having to be able to script or program a single line of code

-

- Aug 2018

-

emlis.pair.com

-

This is related to the fact that biology researchers are in a creative process and reflect on their decisions in order to explore new leads or justify their decisions. Paper laboratory notebooks show this temporality of thoughts.

The iterative self-reflection process described in biology research seems relatively undeveloped in DHN work. I don't know that I've seen much negotiation/reflection/critical analysis take place between the moment the data is collected by volunteers and the maps/viz/data/after-action reports created after the fact by the Core Team.

Perhaps that's a missing element that should be more deeply explored in thinking about data having both a time attribute and being in a state of change? Is there a needed intermediate validation step between data cleaning and creating a data analysis product?

-

- Oct 2016

-

it.wikipedia.org

-

Well-known examples of spatial operators are the mean filter, which computes the arithmetic mean of the pixels within the "window" and assigns that value, and the median filter, which instead computes the statistical median.

Some of the main spatial operators used in image processing.
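The two spatial operators mentioned above can be sketched in plain Python. This is a minimal illustration rather than a production filter: the function names are made up for the example, and the choice to use only in-bounds neighbors at the image border is an assumption (real libraries offer several border modes).

```python
from statistics import mean, median


def window_values(image, row, col, radius=1):
    """Collect pixel values in the square window centered on (row, col).

    Assumption: pixels outside the image are skipped, so border windows
    are smaller than interior ones.
    """
    rows, cols = len(image), len(image[0])
    return [
        image[r][c]
        for r in range(max(0, row - radius), min(rows, row + radius + 1))
        for c in range(max(0, col - radius), min(cols, col + radius + 1))
    ]


def spatial_filter(image, reducer, radius=1):
    """Replace each pixel with reducer(values in its window)."""
    return [
        [reducer(window_values(image, r, c, radius)) for c in range(len(image[0]))]
        for r in range(len(image))
    ]


# A flat 3x3 image with one bright outlier in the center (made-up values).
image = [
    [10, 10, 10],
    [10, 100, 10],
    [10, 10, 10],
]
mean_filtered = spatial_filter(image, mean)
median_filtered = spatial_filter(image, median)
```

On this input the mean filter spreads the outlier into its neighbors (the center becomes 20), while the median filter removes it entirely (the center becomes 10), which is the usual reason to prefer the median for impulse noise.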

-

- Jun 2016

-

ebeowulf.uky.edu

-

A case in point is the obliterated text between syððan and þ on fol. 179r10. Any attempt at restoration is complicated by the fact that some of the ink traces, as conclusively shown by an overlay in Electronic Beowulf 4.0, come from an offset from the facing fol. 178v. Digital technology allows us to subtract these false leads and arrive at a more plausible restoration

Great use of image processing to estimate what could be the conjectural readings.

-

- Aug 2015

-

www.connscameras.ie

-

The Conns Photolab (introduced in 1990) offers a high quality and cost effective range of services including developing, printing and scanning for film, digital or print reproduction.

-

ukfilmlab.com Pricing

-

Pricing shown is per roll in GBP and Euros.

-