We find that, during the pandemic, no-vax communities became more central in the country-specific debates and their cross-border connections strengthened, revealing a global Twitter anti-vaccination network. U.S. users are central in this network, while Russian users also become net exporters of misinformation during vaccination roll-out. Interestingly, we find that Twitter’s content moderation efforts, and in particular the suspension of users following the January 6th U.S. Capitol attack, had a worldwide impact in reducing misinformation spread about vaccines. These findings may help public health institutions and social media platforms to mitigate the spread of health-related, low-credible information by revealing vulnerable online communities.

7 Matching Annotations

- Dec 2022


arxiv.org


arxiv.org


On Facebook, we identified 51,269 posts (0.25% of all posts) sharing links to Russian propaganda outlets, generating 5,065,983 interactions (0.17% of all interactions); 80,066 posts (0.4% of all posts) sharing links to low-credibility news websites, generating 28,334,900 interactions (0.95% of all interactions); and 147,841 posts sharing links to high-credibility news websites (0.73% of all posts), generating 63,837,701 interactions (2.13% of all interactions). As shown in Figure 2, we notice that the number of posts sharing Russian propaganda and low-credibility news exhibits an increasing trend (Mann-Kendall 𝑃 < .001), whereas after the invasion of Ukraine both time series yield a significant decreasing trend (more prominent in the case of Russian propaganda); high-credibility content also exhibits an increasing trend in the Pre-invasion period (Mann-Kendall 𝑃 < .001), which becomes stable (no trend) in the period afterward.
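The passage above reports Mann-Kendall trend tests on daily post counts. As a rough illustration of what that test computes, here is a minimal pure-Python sketch of the standard two-sided Mann-Kendall test with tie correction. The function name `mann_kendall` and the sample series are hypothetical; the quoted paper does not say which implementation it used.

```python
import math
from collections import Counter


def mann_kendall(series):
    """Two-sided Mann-Kendall trend test.

    Returns (s, z, p): the S statistic, the normal-approximation
    Z score, and the two-sided p-value.
    """
    n = len(series)
    # S: sum of the signs of all pairwise differences (later minus earlier).
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S, corrected for groups of tied values.
    ties = sum(t * (t - 1) * (2 * t + 5) for t in Counter(series).values() if t > 1)
    var_s = (n * (n - 1) * (2 * n + 5) - ties) / 18
    # Continuity-corrected Z score; S == 0 means no evidence of a trend.
    if s == 0 or var_s == 0:
        z = 0.0
    elif s > 0:
        z = (s - 1) / math.sqrt(var_s)
    else:
        z = (s + 1) / math.sqrt(var_s)
    # Two-sided p-value under the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p


# Hypothetical daily post counts with a steady increase.
s, z, p = mann_kendall(list(range(20)))
print(s, p < 0.001)
```

A positive `s` with a small `p` corresponds to the "increasing trend" wording in the quote, a negative `s` to a decreasing one, and a large `p` to "no trend".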


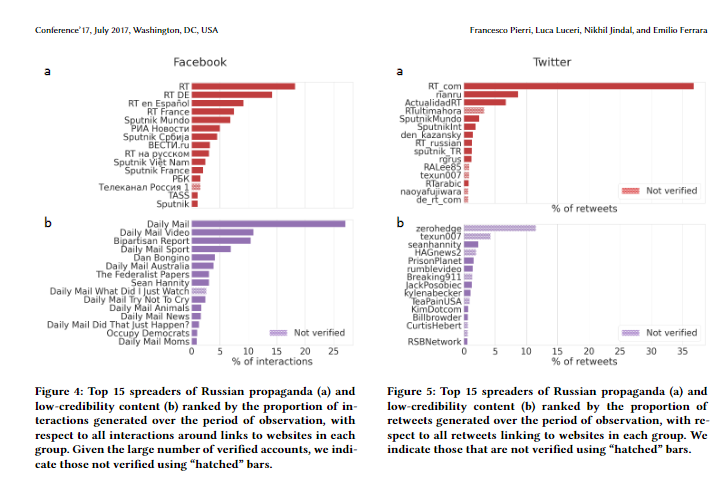

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreader accounts that are not verified by the platform.


On Twitter, the picture is very similar in the case of Russian propaganda, where all accounts are verified (with a few exceptions) and mostly associated with news outlets, and generate over 68% of all retweets linking to these websites (see panel a of Figure 4). For what concerns low-credibility news, there are both verified (we can notice the presence of seanhannity) and not verified users, and only a few of them are directly associated with websites (e.g. zerohedge or Breaking911). Here the top 15 accounts generate roughly 30% of all retweets linking to low-credibility websites.
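The percentages quoted above (over 68% and roughly 30%) are concentration measures: the share of all retweets that goes to the k most retweeted accounts. A minimal sketch of that calculation follows, assuming the input is one account name per retweet. The function name `top_k_share` and the toy data are hypothetical and not taken from the paper.

```python
from collections import Counter


def top_k_share(retweeted_accounts, k=15):
    """Fraction of all retweets that went to the k most retweeted accounts.

    `retweeted_accounts` holds one entry per retweet: the account whose
    tweet was retweeted.
    """
    counts = Counter(retweeted_accounts)
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # most_common(k) returns the k accounts with the highest retweet counts.
    top = sum(count for _, count in counts.most_common(k))
    return top / total


# Toy data: 10 retweets spread over three hypothetical accounts.
retweets = ["outlet_a"] * 6 + ["outlet_b"] * 3 + ["user_c"]
print(top_k_share(retweets, k=1))
```

A value close to 1 means a handful of accounts drive almost all of the sharing, which is the pattern the quote describes for Russian propaganda.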


Figure 5: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of retweets generated over the period of observation, with respect to all retweets linking to websites in each group. We indicate those that are not verified using “hatched” bars.


ieeexplore.ieee.org


We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.
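The quoted passage does not give the update rule behind its belief value system, so the following is only a toy sketch of the two-scenario comparison, not the paper's model. Everything in it is an assumption made for illustration: the ring network, the initial beliefs, the rule that a belief drifts toward the mean belief of the accounts an agent is exposed to, and the names `simulate` and `count_believers`.

```python
def simulate(n_regular=50, n_malicious=0, steps=200, rate=0.05):
    """Return the final belief values of regular agents on a ring network.

    Assumed rule: at each step a regular agent moves a fraction `rate`
    of the way toward the mean belief of its two ring neighbours and of
    every malicious agent, whose belief is pinned at 1.0.
    """
    # Hypothetical starting point: all regular agents are sceptical.
    beliefs = [0.1 if i % 2 == 0 else 0.4 for i in range(n_regular)]
    for _ in range(steps):
        updated = []
        for i, belief in enumerate(beliefs):
            left = beliefs[i - 1]
            right = beliefs[(i + 1) % n_regular]
            exposure = (left + right + 1.0 * n_malicious) / (2 + n_malicious)
            updated.append(belief + rate * (exposure - belief))
        # Synchronous update: all agents change at the same time.
        beliefs = updated
    return beliefs


def count_believers(beliefs, threshold=0.5):
    """Number of agents whose belief value exceeds the threshold."""
    return sum(b > threshold for b in beliefs)


baseline = count_believers(simulate(n_malicious=0))
with_malicious = count_believers(simulate(n_malicious=5))
print(baseline, with_malicious)
```

In this toy setup the change per step is small, yet the scenario with malicious agents ends with far more regular agents above the belief threshold, which mirrors the qualitative contrast the passage describes.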


www.mdpi.com


We analyzed and visualized Twitter data during the prevalence of the Wuhan lab leak theory and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.
