A sample of 489 self-identified Australian gay men 18–72 years old participated in an online survey on masculinity and homosexuality. Descriptive statistics, bivariate correlations, and sequential multiple regressions were used to test the study’s aims. Sequential multiple regressions revealed that conformity to masculine norms and threats to masculinity contingency were stronger predictors of internalized homophobia over and above demographic and other factors.

- May 2023

-

www.mdpi.com

-

- Mar 2023

-

www.ndss-symposium.org

-

krebsonsecurity.com

-

A new breach involving data from nine million AT&T customers is a fresh reminder that your mobile provider likely collects and shares a great deal of information about where you go and what you do with your mobile device — unless and until you affirmatively opt out of this data collection. Here’s a primer on why you might want to do that, and how.

-

- Jan 2023

-

pubmed.ncbi.nlm.nih.gov

-

Zika virus as a cause of birth defects: Were the teratogenic effects of Zika virus missed for decades?

Although it is not possible to prove definitively that ZIKV had teratogenic properties before 2013, several pieces of evidence support the hypothesis that its teratogenicity had been missed in the past. These findings emphasize the need for further investments in global surveillance for emerging infections and for birth defects so that infectious teratogens can be identified more expeditiously in the future.

-

-

archive.org

-

The revelations about the possible complicity of the Bulgarian secret police in the shooting of the Pope have produced a grudging admission, even in previously skeptical quarters, that the Soviet Union may be involved in international terrorism. Some patterns have emerged in the past few years that tell us something about the extent to which the Kremlin may use terrorism as an instrument of policy. A great deal of information has lately come to light, some of it accurate, some of it not. One of the most interesting developments appears to be the emergence of a close working relationship between organized crime (especially drug smugglers and dealers) and some of the principal groups in the terrorist network.

-

-

www.sciencedirect.com

-

Highlights

- We exploit language differences to study the causal effect of fake news on voting.

- Language affects exposure to fake news.

- German-speaking voters from South Tyrol (Italy) are less likely to be exposed to misinformation.

- Exposure to fake news favours populist parties regardless of prior support for populist parties.

- However, fake news alone cannot explain most of the growth in populism.

-

-

www.science.org

-

Results for the YouTube field experiment (study 7), showing the average percent increase in manipulation techniques recognized in the experimental (as compared to control) condition. Results are shown separately for items (headlines) 1 to 3 for the emotional language and false dichotomies videos, as well as the average scores for each video and the overall average across all six items. See Materials and Methods for the exact wording of each item (headline). Error bars show 95% confidence intervals.

-

-

www.sciencedirect.com

-

Who falls for fake news? Psychological and clinical profiling evidence of fake news consumers

Participants with schizotypal, paranoid, and histrionic personalities were ineffective at detecting fake news. They were also more vulnerable to its negative effects. Specifically, they displayed higher levels of anxiety and committed more cognitive biases based on suggestibility and the Barnum Effect. No significant effects on psychotic symptomatology or affective mood states were observed. Corresponding to these outcomes, two clinical and therapeutic recommendations were made: reducing the Barnum Effect and reinterpreting digital media sensationalism. The impact of fake news and possible ways of prevention are discussed.

Fake news and personality disorders

The observed relationship between fake news and levels of schizotypy was consistent with previous scientific evidence on pseudoscientific beliefs and magical ideation (see Bronstein et al., 2019; Escolà-Gascón, Marín, et al., 2021). Following the dual process theory model (e.g., Pennycook & Rand, 2019), when a person does not correctly distinguish between information with scientific arguments and information without scientific grounds it is because they predominantly use cognitive reasoning characterized by intuition (e.g., Dagnall, Drinkwater, et al., 2010; Swami et al., 2014; Dagnall et al., 2017b; Williams et al., 2021).

Concomitantly, intuitive thinking correlates positively with magical beliefs (see Šrol, 2021). Psychopathological classifications include magical beliefs as a dimension of schizotypal personality (e.g., Escolà-Gascón, 2020a). Therefore, it is possible that the high schizotypy scores in this study can be explained from the perspective of dual process theory (Denovan et al., 2018; Denovan et al., 2020; Drinkwater, Dagnall, Denovan, & Williams, 2021). Intuitive thinking could be the moderating variable that explains why participants who scored higher in schizotypy did not effectively detect fake news.

Something similar happened with the subclinical trait of paranoia. This variable scored the highest in both group 1 and group 2 (see Fig. 1). Intuition is also positively related to conspiratorial ideation (see Drinkwater et al., 2020; Gligorić et al., 2021). Similarly, psychopathology tends to classify conspiracy ideation as a frequent belief system in paranoid personality (see Escolà-Gascón, 2022). This is because conspiracy beliefs are based on systematic distrust of the systems that structure society (political system), knowledge (science) and economy (capitalism) (Dagnall et al., 2015; Swami et al., 2014). Likewise, distrust is known to be the cross-cutting characteristic of paranoid personality (So et al., 2022). In this case, then, intuitive thinking and dual process theory could also account for the paranoia scores obtained. The same is not true for the histrionic personality.

The Barnum Effect

The Barnum Effect consists of accepting a verbal description of an individual's personality as exclusive to that individual, when the description actually uses content that applies, or can be generalized, to any profile or personality one wishes to describe (see Boyce & Geller, 2002; O’Keeffe & Wiseman, 2005). The error of this bias is to treat as exclusive or unique information that is not. This error can occur in other contexts, not only in personality descriptions. Originally, this bias was studied in the field of horoscopes and pseudoscience (see Matute et al., 2011). Research results suggest that people who do not effectively detect fake news regularly fall prey to the Barnum Effect. So, one way to prevent fake news may be to educate people about what the Barnum Effect is and how to avoid it.

Conclusions

The conclusions of this research can be summarized as follows: (1) The evidence obtained suggests that profiles with high scores in schizotypy, paranoia and histrionism are more vulnerable to the negative effects of fake news. In clinical practice, special caution is recommended for patients who meet the symptomatic characteristics of these personality traits.

(2) In psychiatry and clinical psychology, it is proposed to combat fake news by reducing or recoding the Barnum effect, reinterpreting sensationalism in the media and promoting critical thinking among social network users. These suggestions can be applied in intervention programs but can also be implemented as psychoeducational programs for heavy users of social networks.

(3) Individuals who do not effectively detect fake news tend to have higher levels of anxiety, both state and trait anxiety. These individuals are also highly suggestible and tend to seek strong emotions. Profiles of this type may inappropriately rely on intuitive thinking, which could be the underlying psychological mechanism.

(4) Positive psychotic symptomatology, affective mood states and substance use (addiction risks) were not affected by fake news. In the field of psychosis, it should be analyzed whether fake news influences negative psychotic symptomatology.

-

-

euvsdisinfo.eu

-

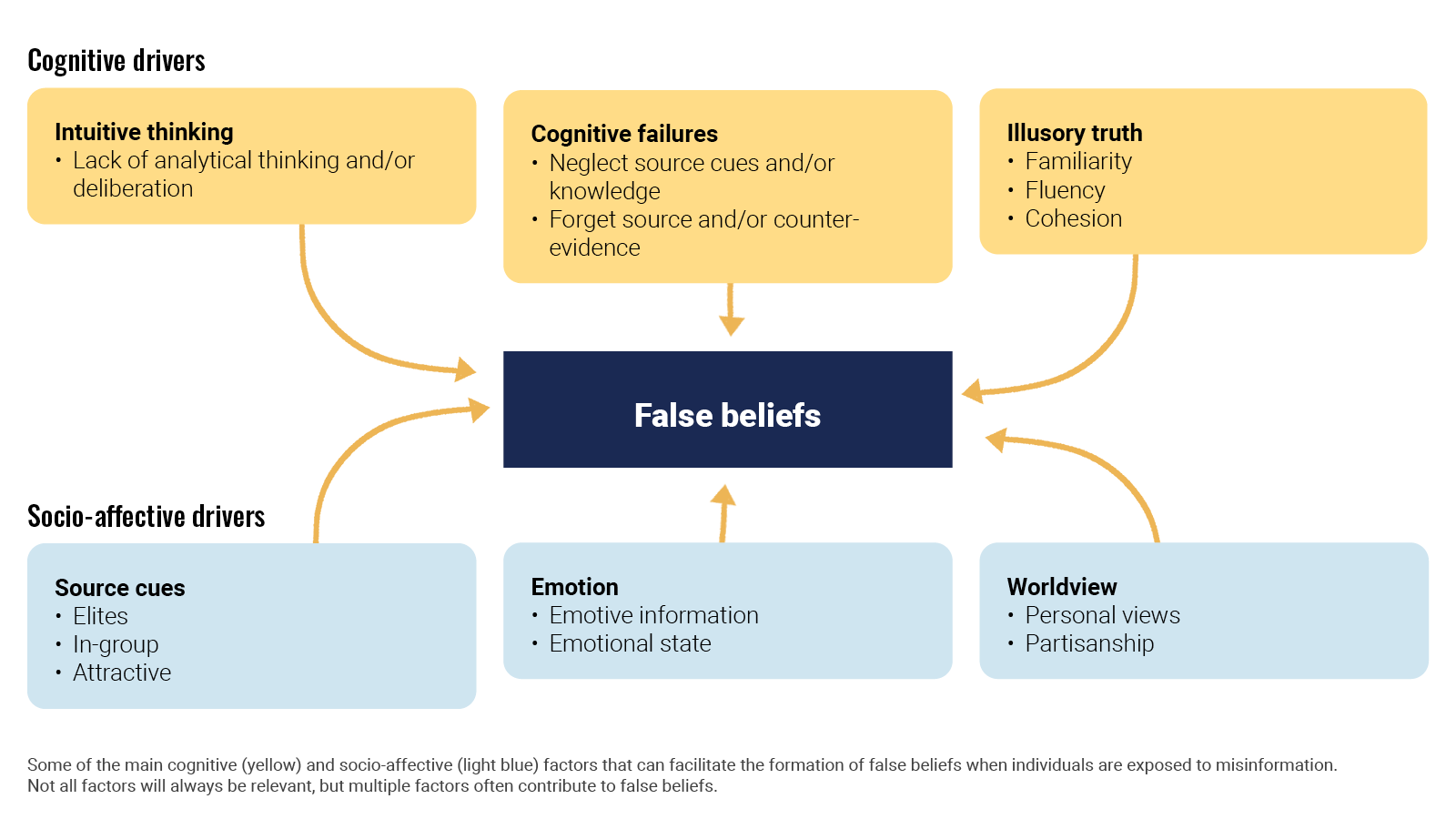

The uptake of mis- and disinformation is intertwined with the way our minds work. The large body of research on the psychological aspects of information manipulation explains why.

In an article for Nature Reviews Psychology, Ullrich K. H. Ecker et al. looked at the cognitive, social, and affective factors that lead people to form or even endorse misinformed views. Ironically enough, false beliefs generally arise through the same mechanisms that establish accurate beliefs: a mix of cognitive drivers, such as intuitive thinking, and socio-affective drivers. When deciding what is true, people are often biased to believe in the validity of information and to trust their intuition instead of deliberating. Also, repetition increases belief in both misleading information and facts.

Ecker, U.K.H., Lewandowsky, S., Cook, J. et al. (2022). The psychological drivers of misinformation belief and its resistance to correction.

Going a step further, Álex Escolà-Gascón et al. investigated the psychopathological profiles that characterise people prone to consuming misleading information. After running a number of tests on more than 1,400 volunteers, they concluded that people with high scores in schizotypy (a condition not too dissimilar from schizophrenia), paranoia, and histrionism (more commonly known as dramatic personality disorder) are more vulnerable to the negative effects of misleading information. People who do not detect misleading information also tend to be more anxious, suggestible, and vulnerable to strong emotions.

-

-

www.nytimes.com

-

The military has long engaged in information warfare against hostile nations, for instance by dropping leaflets and broadcasting messages into Afghanistan when it was still under Taliban rule. But it recently created the Office of Strategic Influence, which is proposing to broaden that mission into allied nations in the Middle East, Asia and even Western Europe. The office would assume a role traditionally led by civilian agencies, mainly the State Department.

U.S. information operations (IO) capacity was poor, and the campaigns were ineffective.

-

-

www.danielpipes.org

-

John B. Kelly highlighted this disparity in a memorable passage published in 1973:

Distance, the filtering of news through so many intermediate channels, and the habitual tendency to discuss and interpret Middle Eastern politics in the political terminology of the West, have all contrived to impart a certain blandness to the reporting and analysis of Middle Eastern affairs in Western countries. ... To read, for instance, the extracts from the Cairo and Baghdad press and radio ... is to open a window upon a strange and desolate landscape, strewn with weird, amorphous shapes cryptically inscribed "imperialist plot," "Zionist crime," "Western exploitation," ... and "the revolution betrayed." Around and among these enigmatic structures, curious figures, like so many mythical beasts, caper and cavort: "enemies," "traitors," "stooges," "hyenas," "puppets," "lackeys," "feudalists," "gangsters," "tyrants," "criminals," "oppressors," "plotters" and "deviationists." ... It is all rather like a monstrous playing board for some grotesque and sinister game, in which the snakes are all hydras, the ladders have no rungs, and the dice are blank.

-

Americans especially tend reflexively to dismiss the idea of conspiracy. Living in a political culture ignorant of secret police, a political underground, and coups d'état,

Not anymore.

-

-

www.ncbi.nlm.nih.gov

-

PMC full text: Glob Public Health. 2013 Dec; 8(10): 1138–1150. Published online 2013 Dec 3. doi: 10.1080/17441692.2013.859720. Figure 1.

Interactions between global actors working to resume polio eradication in Kano State.

Source: Kaufman & Feldbaum, 2009.

-

In 2003 five northern Nigerian states boycotted the oral polio vaccine due to fears that it was unsafe. Though the international responses have been scrutinised in the literature, this paper argues that lessons still need to be learnt from the boycott: that the origins and continuation of the boycott were due to specific local factors.

The local origins and continuation of the boycott made this case unique.

-

-

www.ids.ac.uk

-

Indeed, ‘anti-vaccination rumours’ have been defined as a major threat to achieving vaccine coverage goals. This is demonstrated in this paper through a case study of responses to the Global Polio Eradication Initiative (GPEI) in northern Nigeria, where Muslim leaders ordered a boycott of the Oral Polio Vaccine (OPV). A 16-month controversy resulted from their allegations that the vaccines were contaminated with anti-fertility substances and HIV, and that vaccination was a plot by Western governments to reduce Muslim populations worldwide.

-

- Dec 2022

-

www.danielpipes.org

-

Fears of a petty conspiracy — a political rival or business competitor plotting to do you harm — are as old as the human psyche. But fears of a grand conspiracy — that the Illuminati or Jews plan to take over the world — go back only 900 years and have been operational for just two centuries, since the French Revolution.

-

The connection of conspiracy theorists and occultists follows from their common, crooked premises. First, "any widely accepted belief must necessarily be false." Second, rejected knowledge — what the establishment spurns — must be true. The result is a large, self-referential network. Flying saucer advocates promote anti-Jewish phobias. Anti-Semites channel in Peru. Some anti-Semites see extraterrestrials functioning as surrogate Jews; others believe the Protocols of the Elders of Zion are the joint product of "the Rothschilds and the reptile-Aryans." By the late 1980s, Mr. Barkun found that "virtually all of the radical right's ideas about the New World Order had found their way into UFO literature."

-

-

historymatters.gmu.edu

-

Protestant Paranoia: The American Protective Association Oath

In 1887, Henry F. Bowers founded the nativist American Protective Association (APA) in Clinton, Iowa. Bowers was a Mason, and he drew on its fraternal ritual (elaborate regalia, initiation ceremonies, and a secret oath) in organizing the APA. He also drew many members from the Masons, an organization that barred Catholics. The organization quickly acquired an anti-union cast. Among other things, the APA claimed that the Catholic leader of the Knights of Labor, Terence V. Powderly, was part of a larger conspiracy against American institutions. Even so, the APA successfully recruited significant numbers of disaffected trade unionists in an era of economic hard times and the collapse of the Knights of Labor. This secret oath, taken by members of the American Protective Association in the 1890s, revealed the depth of Protestant distrust and fear of Catholics holding public office.

I do most solemnly promise and swear that I will always, to the utmost of my ability, labor, plead and wage a continuous warfare against ignorance and fanaticism; that I will use my utmost power to strike the shackles and chains of blind obedience to the Roman Catholic church from the hampered and bound consciences of a priest-ridden and church-oppressed people; that I will never allow any one, a member of the Roman Catholic church, to become a member of this order, I knowing him to be such; that I will use my influence to promote the interest of all Protestants everywhere in the world that I may be; that I will not employ a Roman Catholic in any capacity if I can procure the services of a Protestant.

I furthermore promise and swear that I will not aid in building or maintaining, by my resources, any Roman Catholic church or institution of their sect or creed whatsoever, but will do all in my power to retard and break down the power of the Pope, in this country or any other; that I will not enter into any controversy with a Roman Catholic upon the subject of this order, nor will I enter into any agreement with a Roman Catholic to strike or create a disturbance whereby the Catholic employes may undermine and substitute their Protestant co-workers; that in all grievances I will seek only Protestants and counsel with them to the exclusion of all Roman Catholics, and will not make known to them anything of any nature matured at such conferences.

I furthermore promise and swear that I will not countenance the nomination, in any caucus or convention, of a Roman Catholic for any office in the gift of the American people, and that I will not vote for, or counsel others to vote for, any Roman Catholic, but will vote only for a Protestant, so far as may lie in my power. Should there be two Roman Catholics on opposite tickets, I will erase the name on the ticket I vote; that I will at all times endeavor to place the political positions of this government in the hands of Protestants, to the entire exclusion of the Roman Catholic church, of the members thereof, and the mandate of the Pope.

To all of which I do most solemnly promise and swear, so help me God. Amen.

Source: "The Secret Oath of the American Protective Association, October 31, 1893," in Michael Williams, The Shadow of the Pope (New York: McGraw-Hill Book Co., Inc., 1932), 103–104. Reprinted in John Tracy Ellis, ed., Documents of American Catholic History (Milwaukee: The Bruce Publishing Company, 1956), 500–501.

-

-

www.nytimes.com

-

In 1988, when polio was endemic in 125 countries, the annual assembly of national health ministers, meeting in Geneva, declared their intent to eradicate polio by 2000. That target was missed, but a $3 billion campaign had it contained in six countries by early 2003.

-

-

www.danielpipes.org

-

The polio-vaccine conspiracy theory has had direct consequences: Sixteen countries where polio had been eradicated have in recent months reported outbreaks of the disease – twelve in Africa (Benin, Botswana, Burkina Faso, Cameroon, Central African Republic, Chad, Ethiopia, Ghana, Guinea, Mali, Sudan, and Togo) and four in Asia (India, Indonesia, Saudi Arabia, and Yemen). Yemen has had the largest polio outbreak, with more than 83 cases since April. The WHO calls this "a major epidemic."

-

-

historyofvaccines.org

-

History of Anti-Vaccination Movements

-

-

eva.ecdc.europa.eu

-

Aim: This study aimed to investigate how exposure to online misinformation around COVID-19 vaccines affects intention to vaccinate in the UK and US.

Method: Participants were shown images of misinformation related to COVID-19.

Findings: The researchers found that exposure to misinformation led to a decline in intention to vaccinate of approximately 6 percentage points among those who previously said they would definitely accept a vaccine. They also found that some groups were affected more than others by exposure to misinformation, and scientific-sounding misinformation was also more strongly associated with declines in vaccination intent. These findings have important implications for informing the design of vaccination campaigns and combatting online misinformation.

Reference: Loomba, S., de Figueiredo, A., Piatek, S.J. et al. Measuring the impact of COVID-19 vaccine misinformation on vaccination intent in the UK and USA. Nat Hum Behav 5, 337–348 (2021)

-

The five Cs model

The five Cs model of vaccine acceptance is based on five factors that can affect an individual's vaccination behaviour: confidence, constraints, complacency, calculation, and collective responsibility.

-

-

misinforeview.hks.harvard.edu

-

Surveys of nearly 2,500 Americans, conducted during a measles outbreak, suggest that users of traditional media are less likely to be misinformed about vaccines than are users of social media. Results also suggest that an individual’s level of trust in medical experts affects the likelihood that a person’s beliefs about vaccination will change.

-

-

journals.plos.org

-

Beliefs in the autism/vaccines link and in vaccines side effects, along with intention to vaccinate a future child, were evaluated both immediately after the correction intervention and after a 7-day delay to reveal possible backfire effects. Results show that existing strategies to correct vaccine misinformation are ineffective and often backfire, resulting in the unintended opposite effect, reinforcing ill-founded beliefs about vaccination and reducing intentions to vaccinate. The implications for research on vaccines misinformation and recommendations for progress are discussed.

-

-

arxiv.org

-

We analyze the content of 69,907 headlines produced by four major global media corporations during a minimum of eight consecutive months in 2014. In order to discover strategies that could be used to attract clicks, we extracted features from the text of the news headlines related to the sentiment polarity of the headline. We discovered that the sentiment of the headline is strongly related to the popularity of the news and also to the dynamics of the posted comments on that particular news.

-

-

fedvte.usalearning.gov

-

Investigating social structures through the use of networks or graphs:

- Networked structures, usually called nodes (individual actors, people, or things within the network)

- Connections between nodes: edges or links

- Focus on relationships between actors in addition to the attributes of actors

- Extensively used in mapping out social networks (Twitter, Facebook)

- Examples: Palantir, Analyst Notebook, MISP and Maltego
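To make the node and edge vocabulary concrete, here is a minimal sketch in Python, assuming a small undirected network stored as an adjacency list. It computes degree centrality, one of the simplest relationship-based measures. The account names and ties are hypothetical, and tools such as Maltego or Palantir use their own data models rather than this one.

```python
from collections import defaultdict

# Hypothetical ties between accounts; each pair is one edge (link) between two nodes.
EDGES = [
    ("alice", "bob"),
    ("alice", "carol"),
    ("bob", "carol"),
    ("carol", "dave"),
]


def build_adjacency(edge_list):
    """Return an undirected adjacency list mapping each node to its set of neighbours."""
    adjacency = defaultdict(set)
    for u, v in edge_list:
        adjacency[u].add(v)
        adjacency[v].add(u)
    return dict(adjacency)


def degree_centrality(adjacency):
    """Number of neighbours of each node, divided by the maximum possible (n - 1)."""
    n = len(adjacency)
    if n < 2:
        return {node: 0.0 for node in adjacency}
    return {node: len(nbrs) / (n - 1) for node, nbrs in adjacency.items()}


graph = build_adjacency(EDGES)
centrality = degree_centrality(graph)
for node, score in sorted(centrality.items(), key=lambda kv: -kv[1]):
    print(f"{node}: {score:.2f}")
```

With these toy edges, carol is linked to every other account and so scores highest; the measure looks only at relationships, not at any attribute of the accounts themselves.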

-

-

humanrightsfirst.org

-

Q-associated influencers strategically center the U.S. military in their narratives. This appearance of an alliance with the military enhances their credibility and attracts followers, including veterans. The suggestion of an alliance also creates a cadre of committed adherents.

-

-

www.sciencedirect.com

-

On the one hand, conspiracy theorists seem to disregard accuracy; they tend to endorse mutually incompatible conspiracies, think intuitively, use heuristics, and hold other irrational beliefs. But by definition, conspiracy theorists reject the mainstream explanation for an event, often in favor of a more complex account. They exhibit a general distrust of others and expend considerable effort to find ‘evidence’ supporting their beliefs. In searching for answers, conspiracy theorists likely expose themselves to misleading information online and overestimate their own knowledge. Understanding when elaboration and cognitive effort might backfire is crucial, as conspiracy beliefs lead to political disengagement, environmental inaction, prejudice, and support for violence.

-

-

www.rferl.org

-

A map showing the locations of significant recent fires across Russia.

-

-

www.opensocietyfoundations.org

-

The Open Society Foundations extend our condolences to the friends and family of loved ones on Malaysia Airlines Flight MH17. We are deeply saddened to learn of the loss of the HIV/AIDS researchers and advocates onboard traveling to the 20th International AIDS Conference in Melbourne, Australia, along with all the other people who perished.

-

-

dl.acm.org

-

We found that while some participants were aware of bots’ primary characteristics, others provided abstract descriptions or confused bots with other phenomena. Participants also struggled to classify accounts correctly (e.g., misclassifying > 50% of accounts) and were more likely to misclassify bots than non-bots. Furthermore, we observed that perceptions of bots had a significant effect on participants’ classification accuracy. For example, participants with abstract perceptions of bots were more likely to misclassify. Informed by our findings, we discuss directions for developing user-centered interventions against bots.

-

-

link.springer.com

-

We analyzed URLs cited in Twitter messages before and after the temporary interruption of the AstraZeneca vaccine development on September 9, 2020, to investigate the presence of low-credibility and malicious information. We show that the halt of the AstraZeneca clinical trials prompted tweets that cast doubt, fear and vaccine opposition. We discovered a strong presence of URLs from low-credibility or malicious websites, as classified by independent fact-checking organizations or identified by web hosting infrastructure features. Moreover, we identified what appear to be coordinated operations to artificially promote some of these URLs hosted on malicious websites.

-

-

www.sciencedirect.com

-

Drawing on negativity bias theory, CFM, ICM, and arousal theory, this study characterizes the emotional responses of social media users and verifies how emotional factors affect the number of reposts of social media content after two natural disasters (one predictable and one unpredictable). In addition, it defines influential users as those with many followers and those with high activity, and characterizes how they affect the number of reposts after natural disasters.

-

-

www.sciencedirect.com

-

When public health emergencies break out, social bots are often seen as the disseminator of misleading information and the instigator of public sentiment (Broniatowski et al., 2018; Shi et al., 2020). Given this research status, this study attempts to explore how social bots influence information diffusion and emotional contagion in social networks.

-

-

psycnet.apa.org

-

Using actual fake-news headlines presented as they were seen on Facebook, we show that even a single exposure increases subsequent perceptions of accuracy, both within the same session and after a week. Moreover, this “illusory truth effect” for fake-news headlines occurs despite a low level of overall believability and even when the stories are labeled as contested by fact checkers or are inconsistent with the reader’s political ideology. These results suggest that social media platforms help to incubate belief in blatantly false news stories and that tagging such stories as disputed is not an effective solution to this problem.

-

-

www.nature.com

-

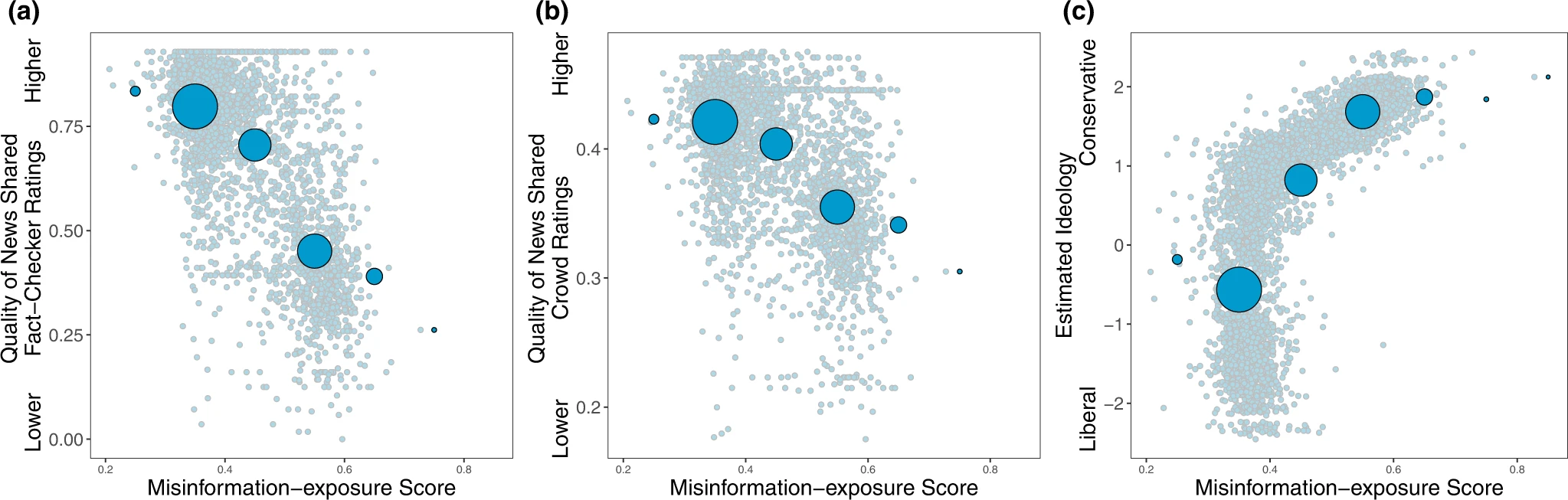

Furthermore, our results add to the growing body of literature documenting—at least at this historical moment—the link between extreme right-wing ideology and misinformation8,14,24 (although, of course, factors other than ideology are also associated with misinformation sharing, such as polarization25 and inattention17,37).

Misinformation exposure and extreme right-wing ideology appear associated in this report. Others find that it is partisanship that predicts susceptibility.

-

We also find evidence of “falsehood echo chambers”, where users that are more often exposed to misinformation are more likely to follow a similar set of accounts and share from a similar set of domains. These results are interesting in the context of evidence that political echo chambers are not as prevalent as typically imagined.

-

And finally, at the individual level, we found that estimated ideological extremity was more strongly associated with following elites who made more false or inaccurate statements among users estimated to be conservatives compared to users estimated to be liberals. These results on political asymmetries are aligned with prior work on news-based misinformation sharing

This suggests that elites who share misinformation may influence whether their followers become more extreme. There is little incentive not to stoke outrage, since outrage improves engagement.

-

We found that users who followed elites who made more false or inaccurate statements themselves shared news from lower-quality news outlets (as judged by both fact-checkers and politically-balanced crowds of laypeople), used more toxic language, and expressed more moral outrage.

Elite sharers of mis- and disinformation have a negative effect on their followers.

-

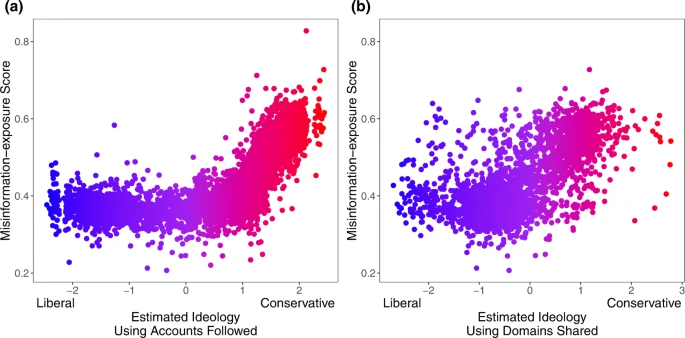

Estimated ideological extremity is associated with higher elite misinformation-exposure scores for estimated conservatives more so than estimated liberals.

a Political ideology is estimated using accounts followed10. b Political ideology is estimated using domains shared30 (red: conservative, blue: liberal). Source data are provided as a Source Data file.

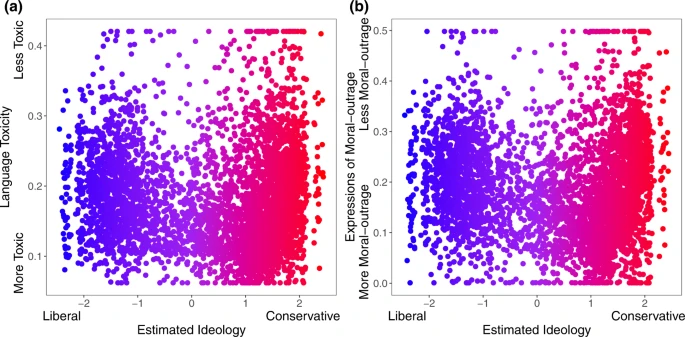

Estimated ideological extremity is associated with higher language toxicity and moral outrage scores for estimated conservatives more so than estimated liberals.

The relationship between estimated political ideology and (a) language toxicity and (b) expressions of moral outrage. Extreme values are winsorized by 95% quantile for visualization purposes. Source data are provided as a Source Data file.

-

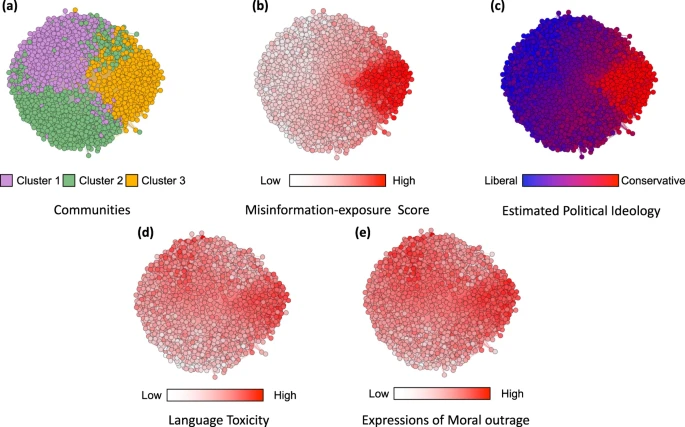

In the co-share network, a cluster of websites shared more by conservatives is also shared more by users with higher misinformation exposure scores.

Nodes represent website domains shared by at least 20 users in our dataset and edges are weighted based on common users who shared them. a Separate colors represent different clusters of websites determined using community-detection algorithms29. b The intensity of the color of each node shows the average misinformation-exposure score of users who shared the website domain (darker = higher PolitiFact score). c Nodes’ color represents the average estimated ideology of the users who shared the website domain (red: conservative, blue: liberal). d The intensity of the color of each node shows the average use of language toxicity by users who shared the website domain (darker = higher use of toxic language). e The intensity of the color of each node shows the average expression of moral outrage by users who shared the website domain (darker = higher expression of moral outrage). Nodes are positioned using a force-directed layout on the weighted network.
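The network construction described in this caption (domains as nodes, kept only if shared by at least 20 users, with edges weighted by the users two domains have in common) can be sketched in plain Python as below. This is a hypothetical reconstruction, not the authors' code: the function names, the toy domains such as `site-a.example`, and the per-user scores are invented, and the community-detection and force-directed layout steps are omitted. The `node_mean_score` helper mirrors panels b through e, where each node is coloured by the average score of the users who shared that domain.

```python
from collections import defaultdict
from itertools import combinations


def build_coshare_network(user_domains, min_users=20):
    """Build a weighted, undirected co-share network of website domains.

    user_domains maps a user id to the set of domains that user shared.
    Returns (nodes, edges): nodes maps each retained domain to the set of users
    who shared it; edges maps a sorted (domain_a, domain_b) pair to the number
    of users who shared both domains.
    """
    sharers = defaultdict(set)
    for user, domains in user_domains.items():
        for domain in domains:
            sharers[domain].add(user)

    # Keep only domains shared by at least `min_users` distinct users.
    nodes = {d: users for d, users in sharers.items() if len(users) >= min_users}

    # Edge weight = number of users the two domains have in common.
    edges = {}
    for a, b in combinations(sorted(nodes), 2):
        common = len(nodes[a] & nodes[b])
        if common > 0:
            edges[(a, b)] = common
    return nodes, edges


def node_mean_score(nodes, user_score):
    """Average a per-user score (e.g. misinformation exposure) over each domain's sharers."""
    return {d: sum(user_score[u] for u in users) / len(users) for d, users in nodes.items()}


# Toy data (hypothetical); the threshold is lowered to 2 because there are only four users.
user_domains = {
    "u1": {"site-a.example", "site-b.example"},
    "u2": {"site-a.example", "site-b.example", "site-c.example"},
    "u3": {"site-b.example", "site-c.example"},
    "u4": {"site-d.example"},
}
nodes, edges = build_coshare_network(user_domains, min_users=2)
scores = node_mean_score(nodes, {"u1": 0.2, "u2": 0.6, "u3": 0.9, "u4": 0.1})
print(edges)
```

Here `site-d.example` is dropped because only one user shared it, which is the same filtering role the 20-user threshold plays in the caption.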

-

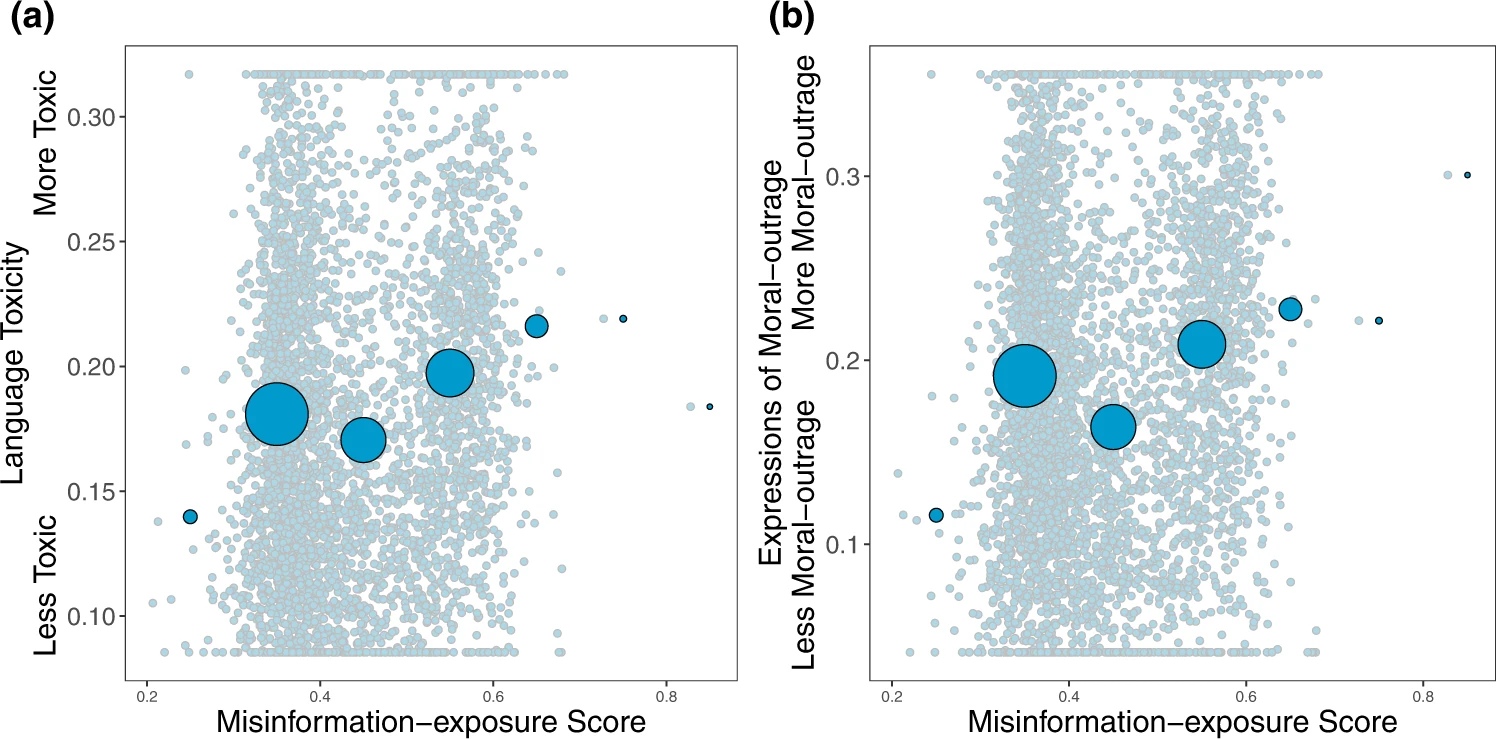

Exposure to elite misinformation is associated with the use of toxic language and moral outrage.

Shown is the relationship between users’ misinformation-exposure scores and (a) the toxicity of the language used in their tweets, measured using the Google Jigsaw Perspective API27, and (b) the extent to which their tweets involved expressions of moral outrage, measured using the algorithm from ref. 28. Extreme values are winsorized by 95% quantile for visualization purposes. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin. Source data are provided as a Source Data file.
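This caption mentions two presentation steps: winsorizing extreme values at the 95% quantile and averaging observations in bins of width 0.1, with dot size proportional to the bin count. A minimal standard-library sketch follows. It assumes that only the upper tail of the y-values is capped and that the quantile uses linear interpolation (NumPy's default rule); the caption states neither choice, and the sample values are made up.

```python
import math
from collections import defaultdict


def quantile(values, q):
    """q-th quantile using linear interpolation between order statistics (NumPy's default rule)."""
    ordered = sorted(values)
    pos = q * (len(ordered) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def winsorize_upper(values, q=0.95):
    """Cap every value above the q-th quantile at that quantile (upper tail only, an assumption)."""
    cap = quantile(values, q)
    return [min(v, cap) for v in values]


def binned_means(xs, ys, width=0.1):
    """Group points into x-bins of the given width.

    Returns (bin_lower_edge, mean_y, count) tuples sorted by bin; the count is
    what would size each large dot in the figure.
    """
    bins = defaultdict(list)
    for x, y in zip(xs, ys):
        # Round before flooring so float noise (0.3 / 0.1 == 2.9999999999999996)
        # does not push a value sitting on a bin edge into the lower bin.
        bins[math.floor(round(x / width, 9))].append(y)
    return [(round(k * width, 10), sum(v) / len(v), len(v)) for k, v in sorted(bins.items())]


# Made-up exposure scores (x) and toxicity scores (y).
exposure = [0.05, 0.12, 0.18, 0.31]
toxicity = [1.0, 2.0, 4.0, 10.0]
for edge, mean_y, count in binned_means(exposure, winsorize_upper(toxicity)):
    print(f"bin {edge:.1f}: mean={mean_y:.2f} (n={count})")
```

Winsorizing caps outliers instead of discarding them, so every observation still contributes to its bin's mean and count; as the caption notes, this is done for visualization purposes.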

-

We found that misinformation-exposure scores are significantly positively related to language toxicity (Fig. 3a; b = 0.129, 95% CI = [0.098, 0.159], SE = 0.015, t (4121) = 8.323, p < 0.001; b = 0.319, 95% CI = [0.274, 0.365], SE = 0.023, t (4106) = 13.747, p < 0.001 when controlling for estimated ideology) and expressions of moral outrage (Fig. 3b; b = 0.107, 95% CI = [0.076, 0.137], SE = 0.015, t (4143) = 14.243, p < 0.001; b = 0.329, 95% CI = [0.283,0.374], SE = 0.023, t (4128) = 14.243, p < 0.001 when controlling for estimated ideology). See Supplementary Tables 1, 2 for full regression tables and Supplementary Tables 3–6 for the robustness of our results.

-

Aligned with prior work finding that people who identify as conservative consume15, believe24, and share more misinformation8,14,25, we also found a positive correlation between users’ misinformation-exposure scores and the extent to which they are estimated to be ideologically conservative (Fig. 2c; b = 0.747, 95% CI = [0.727, 0.767], SE = 0.010, t(4332) = 73.855, p < 0.001), such that users estimated to be more conservative are more likely to follow the Twitter accounts of elites with higher fact-checking falsity scores. Critically, the relationship between misinformation-exposure score and quality of content shared is robust to controlling for estimated ideology.

-

-

www.nature.com

-

Exposure to elite misinformation is associated with sharing news from lower-quality outlets and with conservative estimated ideology.

Shown is the relationship between users’ misinformation-exposure scores and (a) the quality of the news outlets they shared content from, as rated by professional fact-checkers21, (b) the quality of the news outlets they shared content from, as rated by layperson crowds21, and (c) estimated political ideology, based on the ideology of the accounts they follow10. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with dot size proportional to the number of observations in each bin.

-

-

arxiv.org

-

Notice that Twitter’s account purge significantly affected misinformation spread worldwide: the proportion of low-credibility domains in URLs retweeted from the U.S. dropped from 14% to 7%. Finally, despite the lack of a list of low-credibility domains in Russian, Russia is central in exporting potential misinformation in the vax rollout period, especially to Latin American countries. In these countries, the proportion of low-credibility URLs coming from Russia increased from 1% in the vax development period to 18% in the vax rollout period (see Figure 8 (b), Appendix).

-

Interestingly, the fraction of low-credibility URLs coming from the U.S. dropped from 74% in the vax development period to 55% in the vax rollout. This large decrease can be directly ascribed to Twitter’s moderation policy: 46% of cross-border retweets of U.S. users linking to low-credibility websites in the vax development period came from accounts that were suspended following the U.S. Capitol attack (see Figure 8 (a), Appendix).

-

Considering the behavior of users in no-vax communities, we find that they are more likely than other users to retweet (Figure 3(a)), share URLs (Figure 3(b)), and especially share URLs to YouTube (Figure 3(c)). Furthermore, the URLs they post are much more likely to be from low-credibility domains (Figure 3(d)) than those posted in the rest of the network. The difference is remarkable: 26.0% of domains shared in no-vax communities come from lists of known low-credibility domains, versus only 2.4% of those cited by other users (p < 0.001). The most common low-credibility websites among the no-vax communities are zerohedge.com, lifesitenews.com, dailymail.co.uk (considered right-biased and questionably sourced) and childrenshealthdefense.com (conspiracy/pseudoscience).

-

We find that, during the pandemic, no-vax communities became more central in the country-specific debates and their cross-border connections strengthened, revealing a global Twitter anti-vaccination network. U.S. users are central in this network, while Russian users also became net exporters of misinformation during the vaccination rollout. Interestingly, we find that Twitter’s content moderation efforts, and in particular the suspension of users following the January 6th U.S. Capitol attack, had a worldwide impact in reducing misinformation spread about vaccines. These findings may help public health institutions and social media platforms to mitigate the spread of health-related, low-credibility information by revealing vulnerable online communities.

-

-

arxiv.org

-

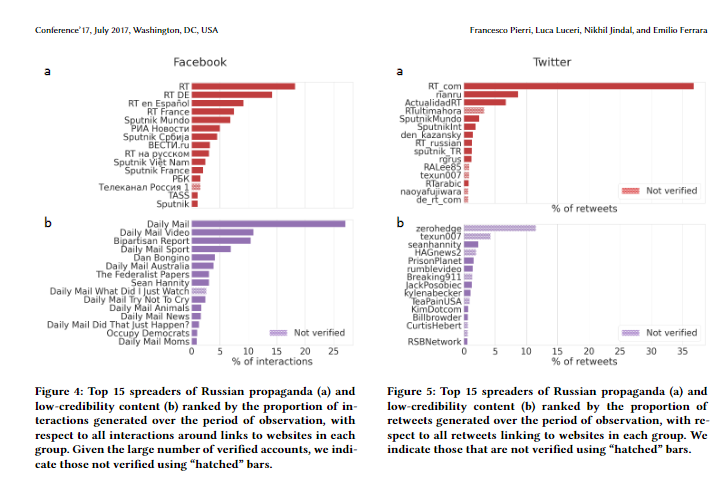

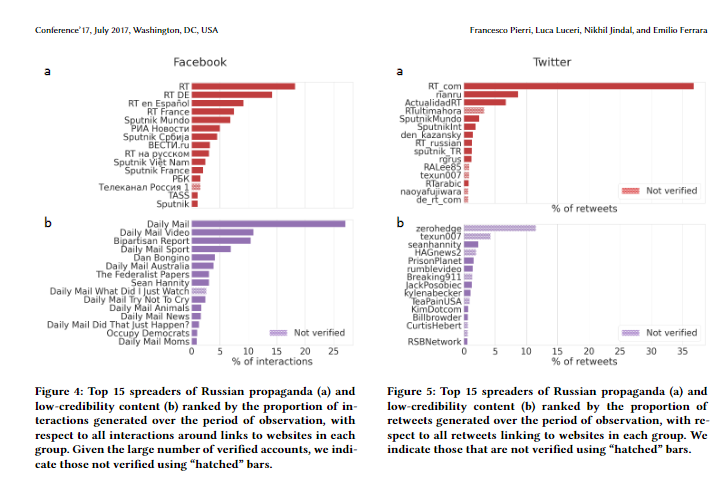

On Facebook, we identified 51,269 posts (0.25% of all posts) sharing links to Russian propaganda outlets, generating 5,065,983 interactions (0.17% of all interactions); 80,066 posts (0.4% of all posts) sharing links to low-credibility news websites, generating 28,334,900 interactions (0.95% of all interactions); and 147,841 posts (0.73% of all posts) sharing links to high-credibility news websites, generating 63,837,701 interactions (2.13% of all interactions). As shown in Figure 2, the number of posts sharing Russian propaganda and low-credibility news exhibits an increasing trend before the invasion (Mann-Kendall 𝑃 < .001), whereas after the invasion of Ukraine both time series show a significant decreasing trend (more prominent in the case of Russian propaganda). High-credibility content also exhibits an increasing trend in the pre-invasion period (Mann-Kendall 𝑃 < .001), which becomes stable (no trend) in the period afterward.
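The Mann-Kendall test cited here is a non-parametric test for a monotonic trend in a time series: it counts, over all pairs of time points, whether the later value is higher or lower than the earlier one. A minimal sketch of the classic statistic follows, assuming no tied values (so the variance needs no tie correction) and using the normal approximation for a two-sided p-value. The excerpt does not say which implementation or tie handling the authors used, and `mann_kendall` is a hypothetical helper name.

```python
import math

def mann_kendall(series):
    """Mann-Kendall trend test without tie correction.

    Returns (S, z, two-sided p). S > 0 suggests an increasing trend,
    S < 0 a decreasing one.
    """
    n = len(series)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            s += (diff > 0) - (diff < 0)  # sign of later-minus-earlier difference
    var_s = n * (n - 1) * (2 * n + 5) / 18  # variance of S when there are no ties
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)  # continuity correction
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p under the normal approximation
    return s, z, p
```

With integer series such as daily post counts, tied values are common, so a full analysis would use the tie-corrected variance of S rather than the simple formula above.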

-

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreader accounts that are not verified by the platform.

-

On Twitter, the picture is very similar in the case of Russian propaganda, where all accounts are verified (with a few exceptions), are mostly associated with news outlets, and generate over 68% of all retweets linking to these websites (see panel a of Figure 4). For low-credibility news, there are both verified (e.g., seanhannity) and unverified users, and only a few of them are directly associated with websites (e.g., zerohedge or Breaking911). Here the top 15 accounts generate roughly 30% of all retweets linking to low-credibility websites.

-

Figure 5: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of retweets generated over the period of observation, with respect to all retweets linking to websites in each group. We indicate those that are not verified using “hatched” bars.

-

Figure 4: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of interactions generated over the period of observation, with respect to all interactions around links to websites in each group. Given the large number of verified accounts, we indicate those not verified using “hatched” bars.

-

-

ieeexplore.ieee.org

-

We applied two scenarios, one with and one without malicious agents, to compare how regular agents behave in the Twitter network and to study how much influence malicious agents have on the general susceptibility of regular users. To achieve this, we implemented a belief value system that measures how impressionable an agent is when encountering misinformation and how its behavior is affected. The results were similar in the two scenarios: the belief values of regular agents changed as they came to believe the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.

-

-

www.mdpi.com

-

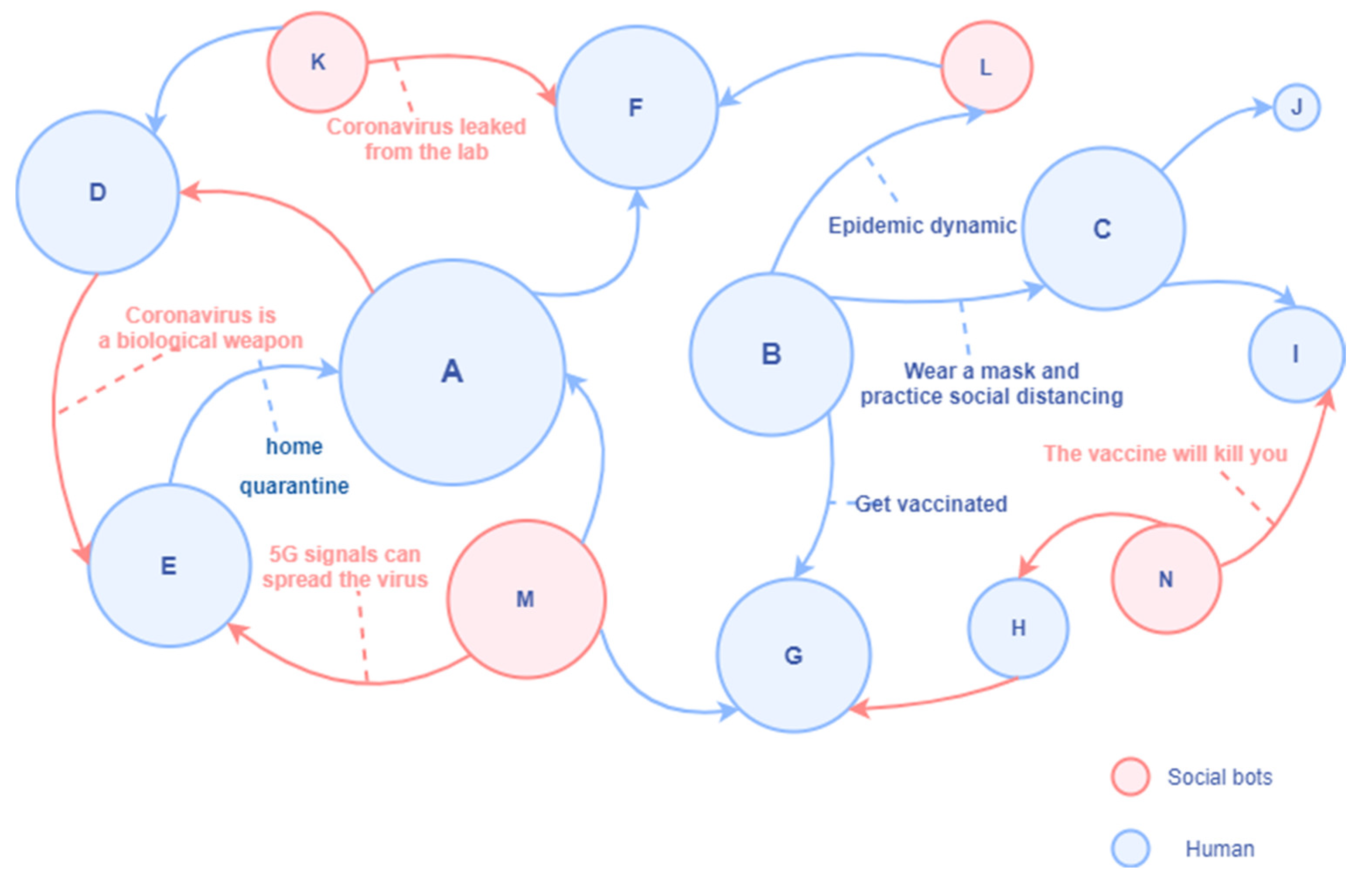

Therefore, although the social bot individual is “small”, it has become a “super spreader” with strategic significance. As an intelligent communication subject in the social platform, it conspired with the discourse framework in the mainstream media to form a hybrid strategy of public opinion manipulation.

-

We found that social bots played a bridging role in diffusion on apparently directional topics like “Wuhan Lab”. Previous research also found that social bots play an intermediary role between elites and everyday users in information flow [43]. In addition, verified Twitter accounts continue to be very influential and receive more retweets, whereas social bots retweet more tweets from other users. Studies have found that verified media accounts remain more central to disseminating information during controversial political events [75]. However, occasionally even verified accounts, including those of well-known public figures and elected officials, sent misleading tweets. This inspired us to investigate the validity of tweets from verified accounts in subsequent research. It is also essential to rely solely on science and evidence-based conclusions and avoid opinion-based narratives in a time of geopolitical conflict marked by hidden agendas, disinformation, and manipulation [76].

-

In Figure 6, the node represented by human A is a high-degree centrality account with poor discrimination ability for disinformation and rumors; it is easily affected by misinformation retweeted by social bots. At the same time, it will also refer to the opinions of other persuasive folk opinion leaders in the retweeting process. Human B represents the official institutional account, which has a high in-degree and often pushes the latest news, preventive measures, and suggestions related to COVID-19. Human C represents a human account with high media literacy, which mainly retweets information from information sources with high credibility. It has a solid ability to identify information quality and is not susceptible to the proliferation of social bots. Human D actively creates and spreads rumors and conspiracy theories and only retweets unverified messages that support his views in an attempt to expand the influence. Social bots K, M, and N also spread unverified information (rumors, conspiracy theories, and disinformation) in the communication network without fact-checking. Social bot L may be a social bot of an official agency.

-

There were 120,118 epidemic-related tweets in this study, and 34,935 Twitter accounts were detected as bot accounts by Botometer, accounting for 29%. In all, 82,688 Twitter accounts were human, accounting for 69%; 2495 accounts had no bot score detected.

In social network analysis, degree centrality is an index used to judge the importance of nodes in the network. The nodes in the social network graph represent users, and the edges between nodes represent the connections between users. Based on the network structure graph, we may determine which members of a group are more influential than others. In 1979, American professor Linton C. Freeman published an article titled “Centrality in Social Networks: Conceptual Clarification” in Social Networks, formally proposing the concept of degree centrality [69]. In this study, degree centrality denotes the number of times a node is retweeted by other nodes (other interactions could also be counted, but only retweets are considered here). The higher the degree centrality, the more influence a node has in its network. Degree centrality comprises in-degree and out-degree. Betweenness centrality is an index that describes the importance of a node by the number of shortest paths passing through it. Nodes with high betweenness centrality occupy the “structural hole” position in the network [69]. Such accounts connect groups that otherwise lack communication and can expand the space for dialogue between different people. American sociologist Ronald S. Burt put forward the theory of the “structural hole”: if the other actors connected to an actor in the network have no direct connections among themselves, that actor occupies a “structural hole” position and can obtain social capital through “intermediary opportunities”, giving it an advantage.
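The two centrality measures described above can be sketched on a toy retweet graph. This is an illustration under assumptions, not the study's pipeline: retweets are taken as hypothetical (retweeter, author) pairs, degree centrality is the number of times an author is retweeted (as the text defines it), and betweenness is computed unnormalized with Brandes' algorithm for unweighted directed graphs. Summed over all ordered pairs, betweenness does not depend on which way the edges point, so the retweeter-to-author convention is harmless here.

```python
from collections import defaultdict, deque

def retweet_in_degree(retweets):
    """Times each account was retweeted; retweets are (retweeter, author) pairs."""
    counts = defaultdict(int)
    for _retweeter, author in retweets:
        counts[author] += 1
    return dict(counts)

def betweenness(retweets):
    """Unnormalized betweenness centrality (Brandes' algorithm).

    Repeated retweets between the same pair collapse to a single edge.
    """
    graph = defaultdict(set)
    nodes = set()
    for u, v in retweets:
        graph[u].add(v)
        nodes.update((u, v))
    bc = dict.fromkeys(nodes, 0.0)
    for s in nodes:
        # Breadth-first search from s, counting shortest paths (sigma).
        order, preds = [], defaultdict(list)
        sigma = dict.fromkeys(nodes, 0)
        sigma[s] = 1
        dist = dict.fromkeys(nodes, -1)
        dist[s] = 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Accumulate each node's share of shortest paths, farthest nodes first.
        delta = dict.fromkeys(nodes, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    return bc
```

For example, in a hypothetical graph where `a1` and `a2` retweet `X` and `X` retweets `b1` and `b2`, every shortest path between the two groups passes through `X`, so `X` occupies the structural-hole position and has the highest betweenness even though its retweet count is modest. Graph libraries often normalize betweenness by default, so their values would be rescaled versions of these.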

-

We analyzed and visualized Twitter data during the prevalence of the Wuhan lab leak theory and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.

-

-

www.universiteitleiden.nl

-

Our study of QAnon messages found a high prevalence of linguistic identity fusion indicators along with external threat narratives, violence-condoning group norms, as well as demonizing, dehumanizing, and derogatory vocabulary applied to the out-group, especially when compared to the non-violent control group. The aim of this piece of research is twofold: (i) it seeks to evaluate the national security threat posed by the QAnon movement, and (ii) it aims to provide a test of a novel linguistic toolkit aimed at helping to assess the risk of violence in online communication channels.

-

-

www.nature.com

-

The style is one that is now widely recognized as a tool of sowing doubt: the author just asked ‘reasonable’ questions, without making any evidence-based conclusions. Who is the audience of this story and who could potentially be targeted by such content? As Bratich argued, 9/11 represents a prototypical case of ‘national dissensus’ among American individuals, and an apparently legitimate case for raising concerns about the transparency of the US authorities13. It is indicative that whoever designed the launch of RT US knew how polarizing it would be to ask questions about the most painful part of the recent past.

-

Conspiracy theories that provide names of the beneficiaries of political, social and economic disasters help people to navigate the complexities of the globalized world, and give simple answers as to who is right and who is wrong. If you add to this global communication technologies that help to rapidly develop and spread all sorts of conspiracy theories, these theories turn into a powerful tool to target subnational, national and international communities and to spread chaos and doubt. The smog of subjectivity created by user-generated content and the crisis of expertise have become a true gift to the Kremlin’s propaganda.

-

To begin with, the US output of RT tapped into the rich American culture of conspiracy theories by running a story entitled ‘911 questions to the US government about 9/11’.

-

Instead, to counter US hegemonic narratives, the Kremlin took to systematically presenting alternative narratives and dissenting voices. Russia’s public diplomacy tool, the international television channel Russia Today, was rebranded as RT in 2009, probably to hide its clear links to the Russian government11. After an aggressive campaign to expand in English-, Spanish-, German- and French-speaking countries throughout the 2010s, the channel became the most visible source of Russia’s disinformation campaigns abroad. Analysis of its broadcasts shows the adoption of KGB approaches, as well as the use of novel tools provided by the global online environment.

-

-

www.nature.com

-

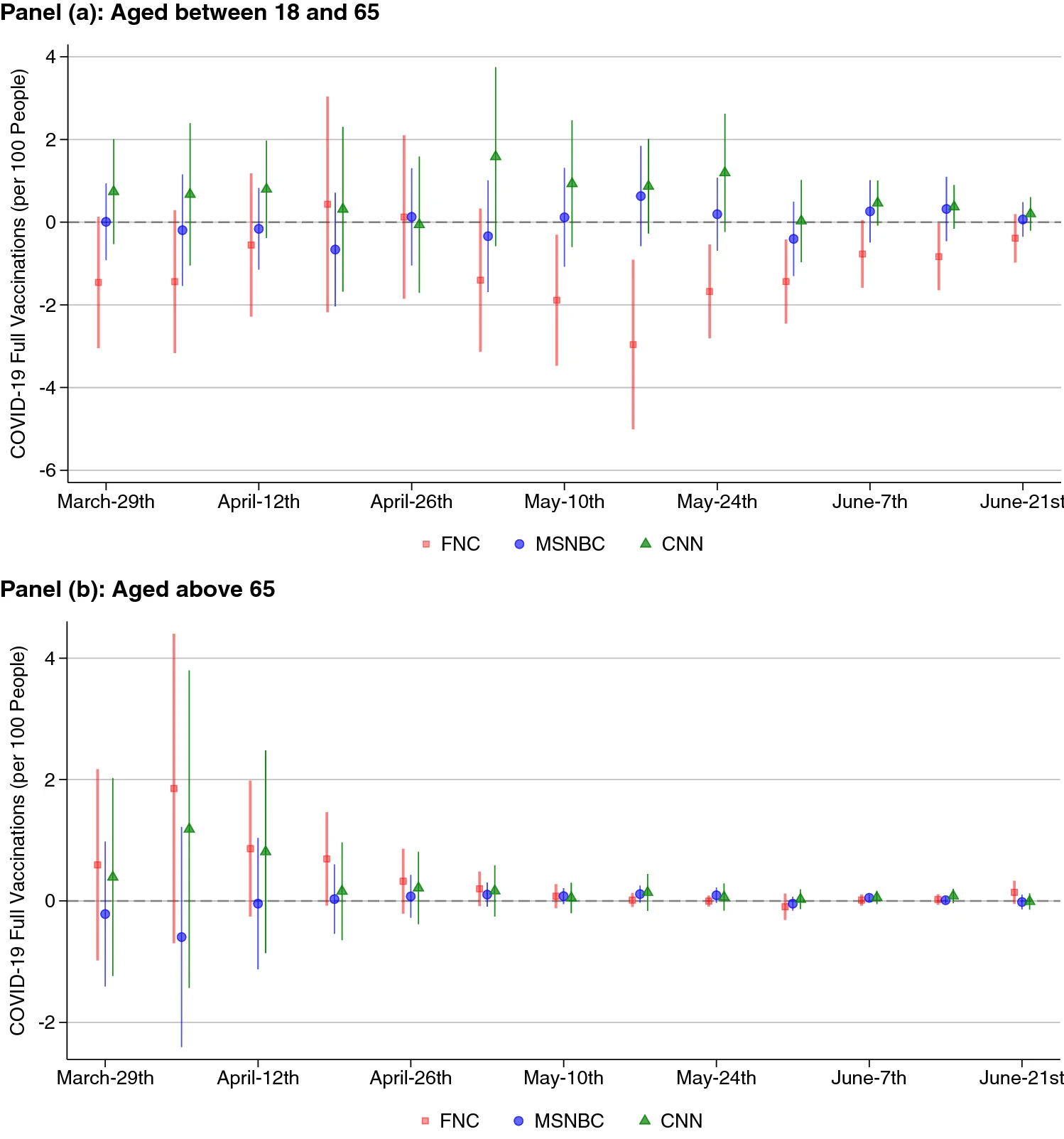

Effect of network viewership on weekly vaccination rates by age group, 2021 (2SLS). Coefficient plots with 95% CIs from 2SLS regressions showing the effect of one-standard-deviation changes in viewership on weekly vaccinations per 100 people, by age group. Viewership is instrumented using the lineup channel positions. Regressions include demographic and cable-system controls. Standard errors are clustered by state.

-

-

www.nature.com

-

Our results show that Fox News is reducing COVID-19 vaccination uptake in the United States, with no evidence of the other major networks having any effect. We first show that there is an association between areas with higher Fox News viewership and lower vaccinations, then provide an instrumental variable analysis to account for endogeneity, and help pin down the magnitude of the local average treatment effect.
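The instrumental-variable logic can be illustrated with its simplest case: one endogenous regressor, one instrument, and no controls, where 2SLS reduces to the ratio Cov(z, y) / Cov(z, x). The study itself instruments viewership with channel lineup positions and adds demographic and cable-system controls with state-clustered standard errors, none of which is reproduced here. The helper names are hypothetical and the data are synthetic, constructed only to show how an unobserved confounder biases the ordinary regression slope but not the IV estimate.

```python
def covariance(a, b):
    """Population covariance of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / n

def ols_slope(x, y):
    """Simple regression slope of y on x (biased when x is endogenous)."""
    return covariance(x, y) / covariance(x, x)

def iv_slope(z, x, y):
    """Just-identified IV/2SLS slope with a single instrument and no controls."""
    return covariance(z, y) / covariance(z, x)

# Synthetic data: u is an unobserved confounder that moves both x and y;
# z shifts x but is uncorrelated with u. The true effect of x on y is 2.
z = [0, 1, 0, 1]
u = [1, 1, -1, -1]
x = [zi + ui for zi, ui in zip(z, u)]
y = [2 * xi + ui for xi, ui in zip(x, u)]
print(ols_slope(x, y))    # 2.8: inflated by the confounder
print(iv_slope(z, x, y))  # 2.0: recovers the true effect
```

The design choice mirrors the paper's argument at a much smaller scale: the instrument is useful only because it affects the outcome solely through the regressor of interest.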

-

Overall, an additional weekly hour of Fox News viewership for the average household accounts for a reduction of 0.35–0.76 weekly full vaccinations per 100 people during May and June 2021. This result is driven not only by Fox News’ anti-science messaging but also by the network’s skeptical coverage of COVID-19 vaccinations.

-

-

www.nature.com

-

highlights the need for public health officials to disseminate information via multiple media channels to increase the chances of accessing vaccine resistant or hesitant individuals.

-

Engagement of religious leaders, for example, has been documented as an important approach to improve vaccine acceptance16,57. Key to the preparation of a COVID-19 vaccine is, therefore, the early and frequent engagement of religious and community leaders58, and for health authorities to work collaboratively with multiple societal stakeholders to avoid the feeling that they are only acting on behalf of government authorities59.

-

Interestingly, while vaccine hesitant and resistant individuals in Ireland and the UK varied in relation to their social, economic, cultural, political, and geographical characteristics, both populations shared similar psychological profiles. Specifically, COVID-19 vaccine hesitant or resistant persons were distinguished from their vaccine accepting counterparts by being more self-interested, more distrusting of experts and authority figures (i.e. scientists, health care professionals, the state), more likely to hold strong religious beliefs (possibly because these kinds of beliefs are associated with distrust of the scientific worldview) and also conspiratorial and paranoid beliefs (which reflect lack of trust in the intentions of others).

-

They were also more likely to believe that their lives are primarily under their own control, to have a preference for societies that are hierarchically structured and authoritarian, and to be more intolerant of migrants in society (attitudes that have been previously hypothesised to be consistent with, and understandable in the context of, evolved responses to the threat of pathogens)56. They were also more impulsive in their thinking style, and had a personality characterised by being more disagreeable, more emotionally unstable, and less conscientious.

-

Consistent with previous research39, vaccine resistance was associated with lower income in the UK and Ireland with all earning categories below the highest income bracket associated with COVID-19 vaccine resistance.

-

Across the Irish and UK samples, similarities and differences emerged regarding those in the population who were more likely to be hesitant about, or resistant to, a vaccine for COVID-19. Three demographic factors were significantly associated with vaccine hesitance or resistance in both countries: sex, age, and income level. Compared to respondents accepting of a COVID-19 vaccine, women were more likely to be vaccine hesitant, a finding consistent with a number of studies identifying sex and gender-related differences in vaccine uptake and acceptance37,38. Younger age was also related to vaccine hesitance and resistance.

-

Similar rates of vaccine hesitance (26% and 25%) and resistance (9% and 6%) were evident in the Irish and UK samples, with only 65% of the Irish population and 69% of the UK population fully willing to accept a COVID-19 vaccine. These findings align with other estimates across seven European nations where 26% of adults indicated hesitance or resistance to a COVID-19 vaccine7 and in the United States where 33% of the population indicated hesitance or resistance34. Rates of resistance to a COVID-19 vaccine also parallel those found for other types of vaccines. For example, in the United States 9% regarded the MMR vaccine as unsafe in a survey of over 1000 adults35, while 7% of respondents across the world said they “strongly disagree” or “somewhat disagree” with the statement ‘Vaccines are safe’36. Thus, upwards of approximately 10% of study populations appear to be opposed to vaccinations in whatever form they take. Importantly, however, the findings from the current study and those from around Europe and the United States may not be consistent with or reflective of vaccine acceptance, hesitancy, or resistance in non-Western countries or regions.

-

There were no significant differences in levels of consumption and trust between the vaccine accepting and vaccine hesitant groups in the Irish sample. Compared to vaccine hesitant responders, vaccine resistant individuals consumed significantly less information about the pandemic from television and radio, and had significantly less trust in information disseminated from newspapers, television broadcasts, radio broadcasts, their doctor, other health care professionals, and government agencies.

-

In the Irish sample, the combined vaccine hesitant and resistant group differed most pronouncedly from the vaccine acceptance group on the following psychological variables: lower levels of trust in scientists (d = 0.51), health care professionals (d = 0.45), and the state (d = 0.31); more negative attitudes toward migrants (d’s ranged from 0.27 to 0.29); lower cognitive reflection (d = 0.25); lower levels of altruism (d’s ranged from 0.17 to 0.24); higher levels of social dominance (d = 0.22) and authoritarianism (d = 0.14); higher levels of conspiratorial (d = 0.21) and religious (d = 0.20) beliefs; lower levels of the personality trait agreeableness (d = 0.15); and higher levels of internal locus of control (d = 0.14).
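The d values listed above are standardized mean differences (effect sizes). A common definition divides the difference between group means by the pooled standard deviation; the excerpt does not state which variant the authors used, so the sketch below shows only this pooled-SD form, with a hypothetical `cohens_d` helper. The reported values appear as magnitudes, so the sign simply reflects which group is listed first.

```python
import math
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Cohen's d with pooled standard deviation (sample variances, n - 1)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) +
                  (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)
```

Read this way, a value such as d = 0.51 for trust in scientists would mean the two groups' means differ by about half a pooled standard deviation.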

-

-

powerofus.substack.com

-

This new paper finds that people are less interested in reducing economic inequality through redistribution when they think about wealth disparities as hierarchies of individuals rather than of groups. This seems to be because, as the researchers put it, “people are more likely to believe that the wealth of individuals, rather than groups, at the top is well earned”.

-

-

onlinelibrary.wiley.com

-

The psychology of hate: Moral concerns differentiate hate from dislike

The effect of hate versus dislike on morality judgments (a) and moral emotions (b) in Study 2. Hated objects were rated as significantly more tied to core moral beliefs and moral emotions than disliked attitude objects.

The effect of hate versus dislike on ratings of (a) negativity, (b) morality and (c) attitude strength in Study 3. Hated objects were rated as significantly more negative and were more associated with attitude strength than disliked objects but received similar ratings in negativity and attitude strength as extremely disliked objects. Hated objects were rated as more related to morality than extremely disliked attitude objects and disliked attitude objects.

Results

Consistent with our three laboratory studies, this analysis of real-world hate groups lent further support to the morality hypothesis. The language of hate groups was different, in the moral domain, from that of complaint forums. These results reflect real, uncensored language used by groups and individuals known to espouse hate or dislike, and often known to take significant actions in support of these attitudes. Importantly, the expressions of these groups and individuals are less likely to involve their own lay theories about hate or dislike, and more likely to reflect their public positions on these issues. There are, admittedly, several differences between hate websites and complaint forums, and these data should be treated as preliminary. But taken together with the carefully controlled lab experiments, this overall pattern of findings suggests that morality helps differentiate expressions of hate from expressions of dislike.

-

-

malariajournal.biomedcentral.com

-

Rising greenhouse gas concentrations have resulted in detectable trends in average climate (particularly temperature), but also in changes in the timing of key seasons in some locations and in daily weather variability, including extreme weather and climate events like heat waves and droughts [10,11,12]. It is primarily through these changes in weather and seasonality, rather than through gradual, long-term trends, that climate change is likely to influence malaria risk. These impacts on malaria could occur both directly, as optimum climate ranges and critical thresholds for vector and parasite development are crossed, and indirectly, as society grapples with the disruptive effects of changes in weather patterns and seasonal cycles.

-

Climate can influence malaria directly, through transmission dynamics, or indirectly, through myriad pathways including the many socioeconomic factors that underpin malaria risk. These indirect effects are largely unpredictable and so are not included in climate-driven disease models. Such models have been effective at predicting transmission from weeks to months ahead. However, due to several well-documented limitations, climate projections cannot accurately predict the medium- or long-term effects of climate change on malaria, especially on local scales. Long-term climate trends are shifting disease patterns, but climate shocks (extreme weather and climate events) and variability from sub-seasonal to decadal timeframes have a much greater influence than trends and are also more easily integrated into control programmes.

-

-

-

Trends in some regions are clear, but insect biology, climate quirks, and public health preparedness will determine whether outbreaks occur.


-

-

link.springer.com

-

For effective vector control, the influence of climatic factors on vector-borne diseases should be studied, since mosquito vectors are sensitive to alterations in climatic conditions and existing vector control approaches are inadequate to combat the adverse effects of global warming.

-

-

www.tandfonline.com

-

The models show moderate areal increase and altitudinal shift in the malaria-endemic areas in Greece in the future. The length of the transmission season is predicted to increase by 1 to 2 months, mainly in the mid-elevation regions and the Aegean Archipelago.

-

-

tobaccotactics.org

-

Undermining the Concept of Environmental Risk

By the mid-1990s, the Institute of Economic Affairs had extended its work on Risk Assessment (RA). More specifically, the head of IEA’s Environment Unit, Roger Bate, was interested in undermining the concept of “environmental risk”, especially in relation to key themes such as climate change, pesticides, and second-hand smoke.

-

-

www.oxfordbibliographies.com

-

Motivated Reasoning

In broad terms, motivated reasoning theory suggests that reasoning processes (information selection and evaluation, memory encoding, attitude formation, judgment, and decision-making) are influenced by motivations or goals. Motivations are desired end-states that individuals want to achieve.

-

-

thedecisionlab.com

-

What is the Illusory Truth Effect?

The illusory truth effect, also known as the illusion of truth, describes how, when we hear the same false information repeated again and again, we often come to believe it is true. Troublingly, this even happens when people should know better—that is, when people initially know that the misinformation is false.

-