80% of food in the UK is imported. How about your country? Your town?

Food for thought

The South Korean government is delivering food parcels to those in quarantine. Our national and local governments need to quickly organise the capacity and resources required to do this. Japanese schools are scheduled to be closed for March.

Food delivery in South Korea and closing schools in Japan

The limited availability of beds in Wuhan raised their mortality rate from 0.16% to 4.9%. This is why the Chinese government built a hospital in a week. Are our governments capable of doing the same?

Case of Wuhan

The UK population is 67 million people; that’s 5.4 million infected. Current predictions are that 80% of the cases will be mild. What if 20% of those people require hospitalization for 3–6 weeks? That’s 1,086,176 people. Do you know how many beds the NHS has? 140,000.

There will be a great lack of beds
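The arithmetic behind the bed shortage can be checked with a short sketch. The infected count used here (5,430,880) is an assumption back-computed from the quoted 1,086,176 figure, since the note only gives the rounded "5.4 million".

```python
# Figures quoted in the note above. The exact infected count is an
# ASSUMPTION, back-computed from the 1,086,176 figure in the quote.
infected = 5_430_880
hospitalised_share_pct = 20   # 20% of cases are assumed to need a hospital bed
nhs_beds = 140_000

# Integer arithmetic keeps the result exact.
needing_beds = infected * hospitalised_share_pct // 100
patients_per_bed = needing_beds / nhs_beds

print(f"{needing_beds:,} people for {nhs_beds:,} beds "
      f"({patients_per_bed:.1f} patients per bed)")
```

Under these assumptions there would be several patients competing for every available bed.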

Evolving to be observant of direct dangers to ourselves seems to have left us terrible at predicting second and third-order effects of events. When worrying about earthquakes we think first of how many people will die from collapsing buildings and falling rubble. Do we think of how many will die due to destroyed hospitals?

Thinking of second and third-order effects of events

Can you guess the number of people that have contracted the flu this year that needed hospitalisation in the US? 0.9%

0.9% of flu cases that required hospitalisation vs 20% of COVID-19

The UK has 2.8 million people over the age of 85. The US has 12.6 million people over the age of 80. Trump told people not to worry because 60,000 people a year die of the flu. If just 25% of the US over-80s cohort get infected, given current mortality rates that’s 466,200 deaths in that age group alone, with the assumption that the healthcare system has the capacity to handle all of the infected.

Interesting estimate of likely deaths among people over 80. If at least 25% of people over 80 in the US are infected, we might see almost 8x as many deaths as from the flu
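A quick way to reproduce the estimate is sketched below. The 14.8% mortality rate is an assumption: the note only says "current mortality rates", and 14.8% is the value implied by the 466,200 figure.

```python
over_80_population = 12_600_000   # US residents over 80, from the note
infected_share_pct = 25           # scenario: 25% of that cohort gets infected
mortality_per_mille = 148         # 14.8%: ASSUMPTION implied by the 466,200 figure
flu_deaths_per_year = 60_000      # figure attributed to Trump in the note

# Integer arithmetic keeps the intermediate results exact.
infected = over_80_population * infected_share_pct // 100
deaths = infected * mortality_per_mille // 1000
ratio_to_flu = deaths / flu_deaths_per_year

print(f"{infected:,} infected, {deaths:,} deaths, "
      f"{ratio_to_flu:.1f}x the yearly flu deaths")
```

The ratio comes out just under 8, which matches the "almost x8" reading.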

in XML the ordering of elements is significant. Whereas in JSON the ordering of the key-value pairs inside objects is meaningless and undefined

XML vs JSON (ordering elements)
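The ordering difference can be illustrated with Python's standard library. This is a minimal sketch with made-up sample data: two JSON objects that differ only in key order compare equal once parsed, while two XML fragments that differ only in child order do not have the same structure.

```python
import json
import xml.etree.ElementTree as ET

# JSON: key order inside an object carries no meaning.
a = json.loads('{"name": "John", "city": "London"}')
b = json.loads('{"city": "London", "name": "John"}')
same_json = (a == b)

# XML: child order is part of the document.
x = ET.fromstring("<p><b>Hello</b><i>world</i></p>")
y = ET.fromstring("<p><i>world</i><b>Hello</b></p>")
same_xml = [child.tag for child in x] == [child.tag for child in y]

print(same_json, same_xml)
```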

XML is a document markup language; JSON is a structured data format, and to compare the two is to compare apples and oranges

XML vs JSON

dictionary (a piece of structured data) can be converted into n different possible documents (XML, PDF, paper or otherwise), where n is the number of possible permutations of the elements in the dictionary

Dictionary

The correct way to express a dictionary in XML is something like this

Correct dictionary in XML:

<root>
  <item>
    <key>Name</key>
    <value>John</value>
  </item>
  <item>
    <key>City</key>
    <value>London</value>
  </item>
</root>
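Reading such a document back into a dictionary could look like the sketch below. The helper name `xml_to_dict` is hypothetical, and the code assumes every `<item>` holds exactly one `<key>` and one `<value>`.

```python
import xml.etree.ElementTree as ET

XML = """
<root>
  <item><key>Name</key><value>John</value></item>
  <item><key>City</key><value>London</value></item>
</root>
"""

def xml_to_dict(text):
    """Turn <item><key>..</key><value>..</value></item> pairs into a dict."""
    root = ET.fromstring(text.strip())
    return {item.findtext("key"): item.findtext("value")
            for item in root.findall("item")}

person = xml_to_dict(XML)
print(person)
```

Note that every value comes back as a string, because the XML itself carries no type information.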

Broadly speaking, XML excels at annotating corpuses of text with structure and metadata

The right use of XML

XML has no notion of numbers (or booleans, or other data types), any numbers represented are just considered more text

Numbers in XML
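A small sketch with Python's standard parsers shows the difference. The element and key name `age` is only an example.

```python
import json
import xml.etree.ElementTree as ET

# The XML parser hands back the element content as text.
xml_age = ET.fromstring("<age>42</age>").text

# The JSON parser hands back a number.
json_age = json.loads('{"age": 42}')["age"]

print(type(xml_age).__name__, type(json_age).__name__)
```

Any numeric interpretation of the XML value has to be added by the application or by a schema layer on top.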

To date, the only XML schemas I have seen which I would actually consider a good use of XML are XHTML and DocBook

Good use of XML

A particular strength of JSON is its support for nested data structures

JSON can facilitate arrays, such as:

"favorite_movies": [ "Diehard", "Shrek" ]

JSON’s origins as a subset of JavaScript can be seen with how easily it represents key/value object data. XML, on the other hand, optimizes for document tree structures, by cleanly separating node data (attributes) from child data (elements)

JSON for key/value object data

XML for document tree structures (clearly separating node data (attributes) from child data (elements))

The advantages of XML over JSON for trees become more pronounced when we introduce different node types. Assume we wanted to introduce departments into the org chart above. In XML, we can just use an element with a new tag name

JSON is well-suited for representing lists of objects with complex properties. JSON’s key/value object syntax makes it easy. By contrast, XML’s attribute syntax only works for simple data types. Using child elements to represent complex properties can lead to inconsistencies or unnecessary verbosity.

JSON works well for lists of objects with complex properties. XML not so much

UI layouts are represented as component trees. And XML is ideal for representing tree structures. It’s a match made in heaven! In fact, the most popular UI frameworks in the world (HTML and Android) use XML syntax to define layouts.

XML works great for displaying UI layouts

XML may not be ideal to represent generic data structures, but it excels at representing one particular structure: the tree. By separating node data (attributes) from parent/child relationships, the tree structure of the data shines through, and the code to process the data can be quite elegant.

XML is good for representing tree structured data

Here is a high level comparison of the tools we reviewed above:

Comparison of Delta Lake, Apache Iceberg and Apache Hive:

To address Hadoop’s complications and scaling challenges, Industry is now moving towards a disaggregated architecture, with Storage and Analytics layers very loosely coupled using REST APIs.

Approaches used to address Hadoop's shortcomings

Hive is now trying to address consistency and usability. It facilitates reading, writing, and managing large datasets residing in distributed storage using SQL. Structure can be projected onto data already in storage.

Apache Hive offers:

Delta Lake is an open-source platform that brings ACID transactions to Apache Spark™. Delta Lake is developed by Spark experts, Databricks. It runs on top of your existing storage platform (S3, HDFS, Azure) and is fully compatible with Apache Spark APIs.

Delta Lake offers:

Apache Iceberg is an open table format for huge analytic data sets. Iceberg adds tables to Presto and Spark that use a high-performance format that works just like a SQL table. Iceberg is focussed towards avoiding unpleasant surprises, helping evolve schema and avoid inadvertent data deletion.

Apache Iceberg offers:

in this disaggregated model, users can choose to use Spark for batch workloads for analytics, while Presto for SQL heavy workloads, with both Spark and Presto using the same backend storage platform.

Disaggregated model allows more flexible choice of tools

These projects sit between the storage and analytical platforms and offer strong ACID guarantees to the end user while dealing with the object storage platforms in a native manner.

Solutions to the disaggregated models:

rise of Hadoop as the de facto Big Data platform and its subsequent downfall. Initially, HDFS served as the storage layer, and Hive as the analytics layer. When pushed really hard, Hadoop was able to go up to a few hundred TBs, allowed SQL-like querying on semi-structured data and was fast enough for its time.

Hadoop's HDFS and Hive became unprepared for even larger sets of data

Disaggregated model means the storage system sees data as a collection of objects or files. But end users are not interested in the physical arrangement of data, they instead want to see a more logical view of their data.

File or Tables problem of disaggregated models

ACID stands for Atomicity (an operation either succeeds completely or fails, it does not leave partial data), Consistency (once an application performs an operation the results of that operation are visible to it in every subsequent operation), Isolation (an incomplete operation by one user does not cause unexpected side effects for other users), and Durability (once an operation is complete it will be preserved even in the face of machine or system failure).

ACID definition

Currently this may be possible using version management of object store, but that as we saw earlier is at a lower layer of physical detail which may not be useful at higher, logical level.

Change management issue of disaggregated models

Traditionally Data Warehouse tools were used to drive business intelligence from data. Industry then recognized that Data Warehouses limit the potential of intelligence by enforcing schema on write. It was clear that all the dimensions of data-set being collected could not be thought of at the time of data collection.

Data Warehouses were later replaced by Data Lakes to cope with the volume of big data

As explained above, users are no longer willing to consider inefficiencies of underlying platforms. For example, data lakes are now also expected to be ACID compliant, so that the end user doesn’t have the additional overhead of ensuring data related guarantees.

SQL Interface issue of disaggregated models

Commonly used storage platforms are object storage platforms like AWS S3, Azure Blob Storage, GCS, Ceph and MinIO, among others. Analytics platforms, meanwhile, vary from simple Python & R based notebooks to TensorFlow, Spark, Presto, Splunk, Vertica and others.

Commonly used storage platforms:

Commonly used analytics platforms:

Data Lakes are optimized for unstructured and semi-structured data, can scale to petabytes easily, and allow better integration of a wide range of tools to help businesses get the most out of their data.

Data Lake definition / what they offer us:

dplyr in R also lets you use a different syntax for querying SQL databases like Postgres, MySQL and SQLite, which is also in a more logical order

It’s just that it often makes sense to write code in the order JOIN / WHERE / GROUP BY / HAVING. (I’ll often put a WHERE first to improve performance though, and I think most database engines will also do a WHERE first in practice)

Pandas usually writes code in this syntax:

- JOIN
- WHERE
- GROUP BY
- HAVING

Example:

    df = thing1.join(thing2)  # like a JOIN
    df = df[df.created_at > 1000]  # like a WHERE
    df = df.groupby('something', as_index=False).agg(num_yes=('yes', 'sum'))  # like a GROUP BY
    df = df[df.num_yes > 2]  # like a HAVING, filtering on the result of a GROUP BY
    df = df[['num_yes', 'something']]  # pick the columns I want to display, like a SELECT
    df.sort_values('something', ascending=True)[:30]  # ORDER BY and LIMIT

We save all of this code, the ui object, the server function, and the call to the shinyApp function, in an R script called app.R

The same basic structure for all Shiny apps:

- ui object
- server function
- shinyApp function

---> examples <---

ui

UI example of a Shiny app (check the code below)

server

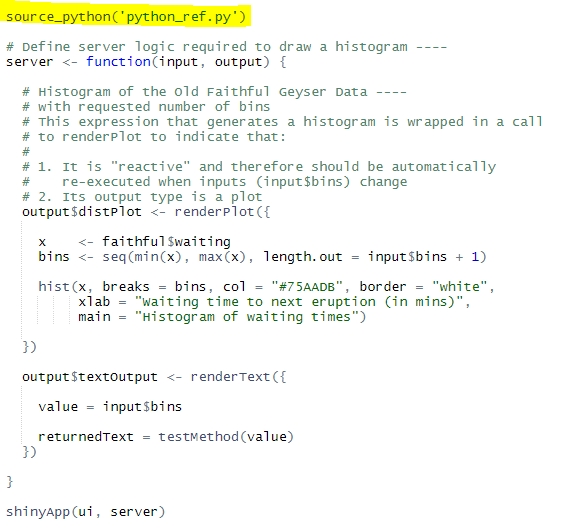

Server example of a Shiny app (check the code below):

renderPlot

I want to get the selected number of bins from the slider and pass that number into a python method and do some calculation/manipulation (return: “You have selected 30 bins and I came from a Python Function”) inside of it then return some value back to my R Shiny dashboard and view that result in a text field.

Using Python scripts inside R Shiny (in 6 steps):

- textOutput("textOutput") (after plotOutput()).
- output$textOutput <- renderText({ }).
- library(reticulate).
- source_python() function will make Python available in R.
- reticulate package to R Environment and sourced the script inside your R code.
- Hit run.
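The Python side of this setup can be very small. The sketch below shows a function that could live in the script passed to `source_python()`; the function name `describe_bins` is hypothetical and not taken from the original tutorial.

```python
def describe_bins(bins):
    """Build the message that the Shiny text field would display.

    R numerics usually arrive in Python as floats, so the value is
    converted to int before formatting.
    """
    return f"You have selected {int(bins)} bins and I came from a Python Function"

# Simulates the call R would make with the slider value.
message = describe_bins(30.0)
print(message)
```

Once the script is sourced, the function should be callable from R by its name, so the `renderText` expression can return its result directly.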

Currently Shiny is far more mature than Dash. Dash doesn’t have a proper layout tool yet, nor built-in themes, so if you are not familiar with HTML and CSS, your application will not look good (you must have some level of web development knowledge). Also, developing new components requires ReactJS knowledge, which has a steep learning curve.

Shiny > Dash:

You can host standalone apps on a webpage or embed them in R Markdown documents or build dashboards. You can also extend your Shiny apps with CSS themes, HTML widgets, and JavaScript actions.

Typical tools used for working with Shiny

You can either create a single R file named app.R containing two separate components (ui and server), or create two R files named ui.R and server.R

Vaex supports Just-In-Time compilation via Numba (using LLVM) or Pythran (acceleration via C++), giving better performance. If you happen to have an NVIDIA graphics card, you can use CUDA via the jit_cuda method to get even faster performance.

Tools supported by Vaex

virtual columns. These columns just house the mathematical expressions, and are evaluated only when required

Virtual columns
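The idea of a column that stores only its expression can be sketched in plain Python. This is a toy illustration, not Vaex's implementation; the class `LazyTable` and its methods are hypothetical.

```python
import math

class LazyTable:
    """Toy table: real columns hold data, virtual columns hold only a formula."""

    def __init__(self, **columns):
        self.columns = columns
        self.virtual = {}
        self.evaluations = 0   # counts how often a virtual column is computed

    def add_virtual(self, name, func):
        # Only the expression is stored; nothing is computed here.
        self.virtual[name] = func

    def get(self, name):
        if name in self.virtual:
            self.evaluations += 1
            return self.virtual[name](self)
        return self.columns[name]

table = LazyTable(x=[3.0, 6.0], y=[4.0, 8.0])
table.add_virtual(
    "r",
    lambda t: [math.sqrt(a * a + b * b) for a, b in zip(t.get("x"), t.get("y"))],
)
evaluations_before = table.evaluations   # still 0: defining "r" cost nothing
r = table.get("r")                       # computed only now, on request
print(evaluations_before, r)
```

Defining the column costs no memory for results and no computation; the work happens only when the values are requested.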

displaying a Vaex DataFrame or column requires only the first and last 5 rows to be read from disk

Vaex tries to go over the entire dataset with as few passes as possible

Why is it so fast? When you open a memory mapped file with Vaex, there is actually no data reading going on. Vaex only reads the file metadata

Vaex only reads the file metadata:
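Memory mapping itself can be tried with the standard library alone. The sketch below is an illustration of the concept, not of Vaex internals: it writes 1,000 doubles to a temporary file, maps the file, and then touches only the first and last five values, much like displaying the head and tail of a DataFrame.

```python
import mmap
import os
import tempfile
from array import array

# Write a small binary "column" of doubles to disk.
values = array("d", (float(i) for i in range(1000)))
fd, path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    values.tofile(f)

# Map the file; nothing is copied into Python objects until it is sliced.
with open(path, "rb") as f:
    mm = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
    with memoryview(mm) as raw, raw.cast("d") as view:
        head = list(view[:5])    # only these elements are converted
        tail = list(view[-5:])
    mm.close()
os.remove(path)

print(head, tail)
```

The operating system pages in only the parts of the file that are actually accessed, which is why opening a very large file can feel instant.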

When filtering a Vaex DataFrame no copies of the data are made. Instead only a reference to the original object is created, on which a binary mask is applied

Filtering Vaex DataFrame works on reference to the original data, saving lots of RAM

If you are interested in exploring the dataset used in this article, it can be used straight from S3 with Vaex. See the full Jupyter notebook to find out how to do this.

Example of EDA in Vaex ---> Jupyter Notebook

Vaex is an open-source DataFrame library which enables the visualisation, exploration, analysis and even machine learning on tabular datasets that are as large as your hard-drive. To do this, Vaex employs concepts such as memory mapping, efficient out-of-core algorithms and lazy evaluations.

Vaex - a library for managing datasets as large as your HDD, thanks to:

- memory mapping
- efficient out-of-core algorithms
- lazy evaluations

All wrapped in a Pandas-like API

The first step is to convert the data into a memory mappable file format, such as Apache Arrow, Apache Parquet, or HDF5

Before opening data with Vaex, we need to convert it into a memory mappable file format (e.g. Apache Arrow, Apache Parquet or HDF5). This way, 100 GB of data can be loaded in Vaex in 0.052 seconds!

Example of converting CSV ---> HDF5.

The describe method nicely illustrates the power and efficiency of Vaex: all of these statistics were computed in under 3 minutes on my MacBook Pro (15", 2018, 2.6GHz Intel Core i7, 32GB RAM). Other libraries or methods would require either distributed computing or a cloud instance with over 100GB to perform the same computations.

Possibilities of Vaex

AWS offers instances with Terabytes of RAM. In this case you still have to manage cloud data buckets, wait for data transfer from bucket to instance every time the instance starts, handle compliance issues that come with putting data on the cloud, and deal with all the inconveniences that come with working on a remote machine. Not to mention the costs, which, although they start low, tend to pile up as time goes on.

AWS as a solution to analyse data too big for RAM (like 30-50 GB range). In this case, it's still uncomfortable:

git config --global alias.s status

Replace git status with git s:

git config --global alias.s status

This modifies the configuration in the .gitconfig file.

Another set of useful aliases:

[alias]

s = status

d = diff

co = checkout

br = branch

last = log -1 HEAD

cane = commit --amend --no-edit

lo = log --oneline -n 10

pr = pull --rebase

You can apply them (^) with:

git config --global alias.s status

git config --global alias.d diff

git config --global alias.co checkout

git config --global alias.br branch

git config --global alias.last "log -1 HEAD"

git config --global alias.cane "commit --amend --no-edit"

git config --global alias.pr "pull --rebase"

git config --global alias.lo "log --oneline -n 10"

alias g=git

alias g=git

This command will let you type g s in your shell to check git status

The best commit messages I’ve seen don’t just explain what they’ve changed: they explain why

Proper commits:

If you use practices like pair or mob programming, don't forget to add your coworkers' names in your commit messages

It's good to give a shout-out to developers who collaborated on the commit. For example:

$ git commit -m "Refactor usability tests.

>

>

Co-authored-by: name <name@example.com>

Co-authored-by: another-name <another-name@example.com>"

I'm fond of the gitmoji commit convention. It relies on categorizing commits using emojis. I'm a visual person so it suits me well, but I understand this convention is not made for everyone.

You can add gitmojis (emojis) in your commits, such as:

:recycle: Make core independent from the git client (#171)

:whale: Upgrade Docker image version (#167)

which GitHub/GitLab will render as:

♻️ Make core independent from the git client (#171)

🐳 Upgrade Docker image version (#167)

1. Separate subject from body with a blank line
2. Limit the subject line to 50 characters
3. Capitalize the subject line
4. Do not end the subject line with a period
5. Use the imperative mood in the subject line
6. Wrap the body at 72 characters
7. Use the body to explain what and why vs. how

7 rules of good commit messages.
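The mechanical rules (blank line, length limits, capitalization, trailing period) can be checked automatically. Below is a minimal sketch; the function name `check_commit_message` is hypothetical. The imperative mood and the "what and why" rule need human judgement and are deliberately left out.

```python
def check_commit_message(message):
    """Return a list of rule violations (an empty list means the message passes)."""
    lines = message.splitlines()
    subject = lines[0] if lines else ""
    problems = []
    if len(lines) > 1 and lines[1] != "":
        problems.append("separate subject from body with a blank line")
    if len(subject) > 50:
        problems.append("limit the subject line to 50 characters")
    if subject and not subject[0].isupper():
        problems.append("capitalize the subject line")
    if subject.endswith("."):
        problems.append("do not end the subject line with a period")
    if any(len(line) > 72 for line in lines[2:]):
        problems.append("wrap the body at 72 characters")
    return problems

good = "Refactor usability tests\n\nExplain why the old fixtures were flaky."
bad = "fixed stuff.\nmore details"

print(check_commit_message(good))
print(check_commit_message(bad))
```

A check like this could be wired into a `commit-msg` Git hook, though that wiring is outside the scope of this sketch.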

- Don’t commit directly to the master or development branches.
- Don’t hold up work by not committing local branch changes to remote branches.
- Never commit application secrets in public repositories.
- Don’t commit large files in the repository. This will increase the size of the repository. Use Git LFS for large files. Learn more about what Git LFS is and how to utilize it in this advanced Learning Git with GitKraken tutorial.
- Don’t create one pull request addressing multiple issues.
- Don’t work on multiple issues in the same branch. If a feature is dropped, it will be difficult to revert changes.
- Don’t reset a branch without committing/stashing your changes. If you do so, your changes will be lost.
- Don’t do a force push until you’re extremely comfortable performing this action.
- Don’t modify or delete public history.

Git Don'ts

- Create a Git repository for every new project. Learn more about what a Git repo is in this beginner Learning Git with GitKraken tutorial.
- Always create a new branch for every new feature and bug.
- Regularly commit and push changes to the remote branch to avoid loss of work.
- Include a gitignore file in your project to avoid unwanted files being committed.
- Always commit changes with a concise and useful commit message.
- Utilize git-submodule for large projects.
- Keep your branch up to date with development branches.
- Follow a workflow like Gitflow. There are many workflows available, so choose the one that best suits your needs.
- Always create a pull request for merging changes from one branch to another. Learn more about what a pull request is and how to create them in this intermediate Learning Git with GitKraken tutorial.
- Always create one pull request addressing one issue.
- Always review your code once by yourself before creating a pull request.
- Have more than one person review a pull request. It’s not necessary, but is a best practice.
- Enforce standards by using pull request templates and adding continuous integrations. Learn more about enhancing the pull request process with templates.
- Merge changes from the release branch to master after each release.
- Tag the master sources after every release.
- Delete branches if a feature or bug fix is merged to its intended branches and the branch is no longer required.
- Automate general workflow checks using Git hooks. Learn more about how to trigger Git hooks in this intermediate Learning Git with GitKraken tutorial.
- Include read/write permission access control to repositories to prevent unauthorized access.
- Add protection for special branches like master and development to safeguard against accidental deletion.

Git Dos

To add .gitattributes to the repo, first create a file called .gitattributes in the root folder of the repo.

With .gitattributes content such as:

*.js eol=lf

*.jsx eol=lf

*.json eol=lf

the end of line will be the same for everyone

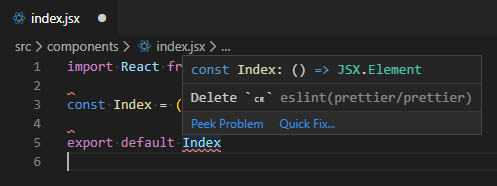

On the Windows machine the default for the line ending is a Carriage Return Line Feed (CRLF), whereas on Linux/MacOS it's a Line Feed (LF).

That is why you might want to use .gitattributes to prevent such differences.

On Windows Machine if endOfLine property is set to lf

{

"endOfLine": "lf"

}

On the Windows machine the developer will encounter linting issues from prettier:

The above commands will now update the files for the repo using the newly defined line ending as specified in the .gitattributes.

Use these lines to update the current repo files:

git rm --cached -r .

git reset --hard

I feel great that all of my posts are now safely saved in version control and markdown. It’s a relief for me to know that they’re no longer an HTML mess inside of a MySQL database, but markdown files which are easy to read, write, edit, share, and backup.

Good feeling of switching to GatsbyJS

However, I realized that a static site generator like Gatsby utilizes the power of code/data splitting, pre-loading, pre-caching, image optimization, and all sorts of performance enhancements that would be difficult or impossible to do with straight HTML.

Benefits of mixing HTML/CSS with some JavaScript (GatsbyJS):

I’ll give you the basics of what I did in case you also want to make the switch.

(check the text below this highlight for a great guide of migrating from WordPress to GatsbyJS)

A few things I really like about Gatsby

Main benefits of GatsbyJS:

I had over 100 guides and tutorials to migrate, and in the end I was able to move everything in 10 days, so it was far from the end of the world.

If you're smart, you can move from WordPress to GatsbyJS in ~ 10 days

There are a lot of static site generators to choose from. Jekyll, Hugo, Next, and Hexo are some of the big ones, and I’ve heard of and looked into some interesting up-and-coming SSGs like Eleventy as well.

Other static site generators to consider, apart from GatsbyJS:

There is a good amount of prerequisite knowledge required to set up a Gatsby site - HTML, CSS, JavaScript, ES6, Node.js development environment, React, and GraphQL are the major ones.

There are a few technologies to be familiar with before setting up a GatsbyJS blog:

but you can be fine with the Gatsby Getting Started Tutorial

Gatsby is SEO friendly – it is part of the JAMStack after all!

With Gatsby you don't have to worry about SEO

Gatsby is a React based framework which utilises the powers of Webpack and GraphQL to bundle real React components into static HTML, CSS and JS files. Gatsby can be plugged into and used straight away with any data source you have available, whether that is your own API, Database or CMS backend (Spoiler Alert!).

Good GatsbyJS explanation in a single paragraph

The combination of WordPress, React, Gatsby and GraphQL is just that - fun

Intriguing combination of technologies.

Keep an eye on the post author, who is going to discuss the technologies in the next writings

A: Read an article from start to finish. ONLY THEN do you import parts into Anki for remembering

B: Incremental Reading: interleaving between reading and remembering

Two algorithms (A and B) for studying

“I think SM is only good for a small minority of learners. But they will probably value it very much.”

I totally agree with it

In Anki, you are only doing the remembering part. You are not reading anything new in Anki

Anki is for remembering

Using either SRS has already given you a huge edge over not using any SRS:

- No SRS: 70 hours
- Anki: 10 hours
- SuperMemo: 6 hours

The difference between using any SRS (whether it’s Anki or SM) and not using one is huge, but the difference between Anki and SM is not

It doesn't matter as much which SRS you're using. It's most important to use one of them at least

“Anki is a tool and SuperMemo is a lifestyle.”

Anki vs SuperMemo

The Cornell Note-taking System

The Cornell Note-taking System resembles the combination of active learning and spaced repetition, just like Anki

And for the last three years, I've added EVERYTHING to Anki. Bash aliases, IDE Shortcuts, programming APIs, documentation, design patterns, etc. Having done that, I wouldn't recommend adding EVERYTHING

Put just the relevant information into Anki

Habit: Whenever I search StackOverflow, I'll immediately create a flashcard of my question and the answer(s) into Anki.

Example habit to make a flashcard

The confidence of knowing that once something is added to Anki it won't be forgotten is intoxicating

Intoxicating

Kyle had a super hero ability. Photographic memory in API syntax and documentation. I wanted that and I was jealous. My career was stuck and something needed to change. And so I began a dedicated journey into spaced repetition. Every day for three years, I spent one to three hours in spaced repetition

Spaced repetition as a tool for photographic memory in API syntax and documentation

First up, regular citizens who download copyrighted content from illegal sources will not be criminalized. This means that those who obtain copies of the latest movies from the Internet, for example, will be able to continue doing so without fear of reprisals. Uploading has always been outlawed and that aspect has not changed.

In Switzerland you will be able to download, but not upload pirate content

The point of nbdev is to bring the key benefits of IDE/editor development into the notebook system, so you can work in notebooks without compromise for the entire lifecycle

'Directed' means that the edges of the graph only move in one direction, where future edges are dependent on previous ones.

Meaning of "directed" in Directed Acyclic Graph

Several cryptocurrencies use DAGs rather than blockchain data structures in order to process and validate transactions.

DAG vs Blockchain:

graph data structure that uses topological ordering, meaning that the graph flows in only one direction, and it never goes in circles.

Simple definition of Directed Acyclic Graph (DAG)

'Acyclic' means that it is impossible to start at one point of the graph and come back to it by following the edges.

Meaning of "acyclic" in Directed Acyclic Graph
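Both properties can be seen with Python's standard `graphlib` module (Python 3.9+). The sketch below uses made-up node names: a valid DAG yields a topological order, while a graph with a cycle is rejected.

```python
from graphlib import CycleError, TopologicalSorter

# Each key maps to the set of nodes it depends on.
dag = {"b": {"a"}, "c": {"a"}, "d": {"b", "c"}}
order = list(TopologicalSorter(dag).static_order())

# a -> b -> c -> a forms a cycle, so no valid ordering exists.
cyclic = {"a": {"c"}, "b": {"a"}, "c": {"b"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True

print(order, has_cycle)
```

In the valid graph, "a" always comes first and "d" last, while "b" and "c" may appear in either order between them.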

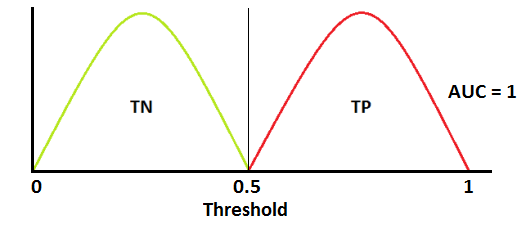

When AUC is 0.5, the model has no class separation capacity whatsoever.

If AUC = 0.5

ROC is a probability curve and AUC represents the degree or measure of separability. It tells how capable the model is of distinguishing between classes.

ROC & AUC

In a multi-class model, we can plot N AUC ROC curves for N classes using the One vs All methodology. For example, if you have three classes named X, Y and Z, you will have one ROC for X classified against Y and Z, another ROC for Y classified against X and Z, and a third one for Z classified against Y and X.

Using AUC ROC curve for multi-class model

When AUC is approximately 0, the model is actually reversing the classes: it predicts the negative class as positive and vice versa

If AUC = 0

An AUC near 1 means the model has a good measure of separability.

If AUC = 1
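The three cases (AUC of 1, 0 and 0.5) can be reproduced with a small sketch. It uses the pairwise-ranking view of AUC: the fraction of (positive, negative) pairs in which the positive example gets the higher score, with ties counted as half. The labels and scores are made up for illustration.

```python
def auc(labels, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly."""
    pos = [s for label, s in zip(labels, scores) if label == 1]
    neg = [s for label, s in zip(labels, scores) if label == 0]
    # A correctly ordered pair counts 1, a tie counts 0.5.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

labels = [0, 0, 1, 1]
perfect = auc(labels, [0.1, 0.2, 0.8, 0.9])        # positives always score higher
inverted = auc(labels, [0.9, 0.8, 0.2, 0.1])       # classes are swapped
uninformative = auc(labels, [0.5, 0.5, 0.5, 0.5])  # scores carry no information

print(perfect, inverted, uninformative)
```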

LR is nothing but the binomial regression with logit link (or probit), one of the numerous GLM cases. As a regression - itself it doesn't classify anything, it models the conditional (to linear predictor) expected value of the Bernoulli/binomially distributed DV.

Logistic Regression - the ultimate definition (it's not a classification algorithm!)

It's used for classification when we specify a 50% threshold.
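The split between the regression step and the classification step can be sketched as follows. The weight and bias are made-up values, not fitted to any data.

```python
import math

def predict_probability(x, weight, bias):
    """Regression step: inverse logit link applied to the linear predictor."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def classify(probability, threshold=0.5):
    """Classification step: only here is a threshold applied."""
    return int(probability >= threshold)

p = predict_probability(2.0, weight=1.5, bias=-1.0)   # linear predictor = 2.0
label_default = classify(p)                   # uses the 50% threshold
label_strict = classify(p, threshold=0.9)     # same probability, stricter rule

print(round(p, 3), label_default, label_strict)
```

The model output is the same probability in both calls; only the chosen threshold turns it into a class label.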

BOW is often used for Natural Language Processing (NLP) tasks like Text Classification. Its strengths lie in its simplicity: it’s inexpensive to compute, and sometimes simpler is better when positioning or contextual info aren’t relevant

Usefulness of BOW:

Notice that we lose contextual information, e.g. where in the document the word appeared, when we use BOW. It’s like a literal bag-of-words: it only tells you what words occur in the document, not where they occurred

The analogy behind the term "bag" in the bag-of-words (BOW) model.

Here’s my preferred way of doing it, which uses Keras’s Tokenizer class

Keras's Tokenizer Class - Victor's preferred way of implementing BOW in Python

vectors here have length 7 instead of 6 because of the extra 0 element at the beginning. This is an inconsequential detail - Keras reserves index 0 and never assigns it to any word.

Keras always reserves 0 and never assigns any word to it; therefore, even when we have 6 words, we end up with the length of 7:

[0. 1. 1. 1. 0. 0. 0.]
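The reserved index 0 can be mimicked in plain Python. This is a sketch of the convention, not Keras's code; the three-sentence corpus is an assumption chosen so that the vocabulary has 6 words, and the helper names are hypothetical.

```python
from collections import Counter

def fit_word_index(texts):
    """Assign indices starting at 1, most frequent word first; 0 stays unused."""
    counts = Counter(word for text in texts for word in text.lower().split())
    return {word: i for i, (word, _) in enumerate(counts.most_common(), start=1)}

def to_binary_vector(text, word_index):
    """1.0 where a word occurs, 0.0 elsewhere; slot 0 is never set."""
    vector = [0.0] * (len(word_index) + 1)
    for word in text.lower().split():
        if word in word_index:
            vector[word_index[word]] = 1.0
    return vector

# ASSUMED corpus with a 6-word vocabulary.
texts = ["the cat sat", "the cat sat in the hat", "the cat with the hat"]
word_index = fit_word_index(texts)
vector = to_binary_vector(texts[0], word_index)

print(word_index)
print(vector)
```

With 6 distinct words the vector has 7 slots, and the first slot stays 0 for every document.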

process that takes input data through a series of transformation stages, producing data as output

Data pipeline

Softmax turns arbitrary real values into probabilities

Softmax function -
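A minimal softmax sketch in plain Python is shown below. Subtracting the maximum before exponentiating is a common numerical-stability trick and does not change the result; the input values are arbitrary.

```python
import math

def softmax(values):
    """Map arbitrary real values to positive numbers that sum to 1."""
    shift = max(values)                        # guards against overflow in exp
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([-1.0, 0.0, 3.0, 5.0])
print(probs)
```

Larger inputs get larger probabilities, and the order of the inputs is preserved in the outputs.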

1. Logistic regression IS a binomial regression (with logit link), a special case of the Generalized Linear Model. It doesn't classify anything *unless a threshold for the probability is set*. Classification is just its application.
2. Stepwise regression is by no means a regression. It's a (flawed) method of variable selection.
3. OLS is a method of estimation (among others: GLS, TLS, (RE)ML, PQL, etc.), NOT a regression.
4. Ridge, LASSO - it's a method of regularization, NOT a regression.
5. There are tens of models for the regression analysis. You mention mainly linear and logistic - it's just the GLM! Learn the others too (link in a comment).

STOP with the "17 types of regression every DS should know". BTW, there're 270+ statistical tests. Not just t, chi2 & Wilcoxon

5 clarifications to common misconceptions shared over data science cheatsheets on LinkedIn

400 sized probability sample (a small random sample from the whole population) is often better than a millions sized administrative sample (of the kind you can download from gov sites). The reason is that an arbitrary sample (as opposed to a random one) is very likely to be biased, and, if large enough, a confidence interval (which actually doesn't really make sense except for probability samples) will be so narrow that, because of the bias, it will actually rarely, if ever, include the true value we are trying to estimate. On the other hand, the small, random sample will be very likely to include the true value in its (wider) confidence interval

Summary of Lecture 01 (Data Science Lifecycle, Study Design) - Data 100 Su19

An exploratory plot is all about you getting to know the data. An explanatory graphic, on the other hand, is about telling a story using that data to a specific audience.

Exploratory vs Explanatory plot

Here’s a very simple example of how a VQA system might answer the question “what color is the triangle?”

Visual Question Answering (VQA): answering open-ended questions about images. VQA is interesting because it requires combining visual and language understanding.

Visual Question Answering (VQA) = visual + language understanding

Most VQA models would use some kind of Recurrent Neural Network (RNN) to process the question input

The standard approach to performing VQA looks something like this:

1. Process the image.
2. Process the question.
3. Combine features from steps 1/2.
4. Assign probabilities to each possible answer.

Approach to handle VQA problems:

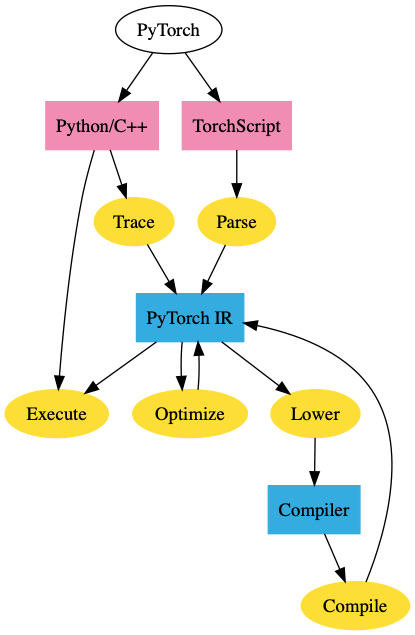

Script mode takes a function/class, reinterprets the Python code and directly outputs the TorchScript IR. This allows it to support arbitrary code, however it essentially needs to reinterpret Python

Script mode in PyTorch

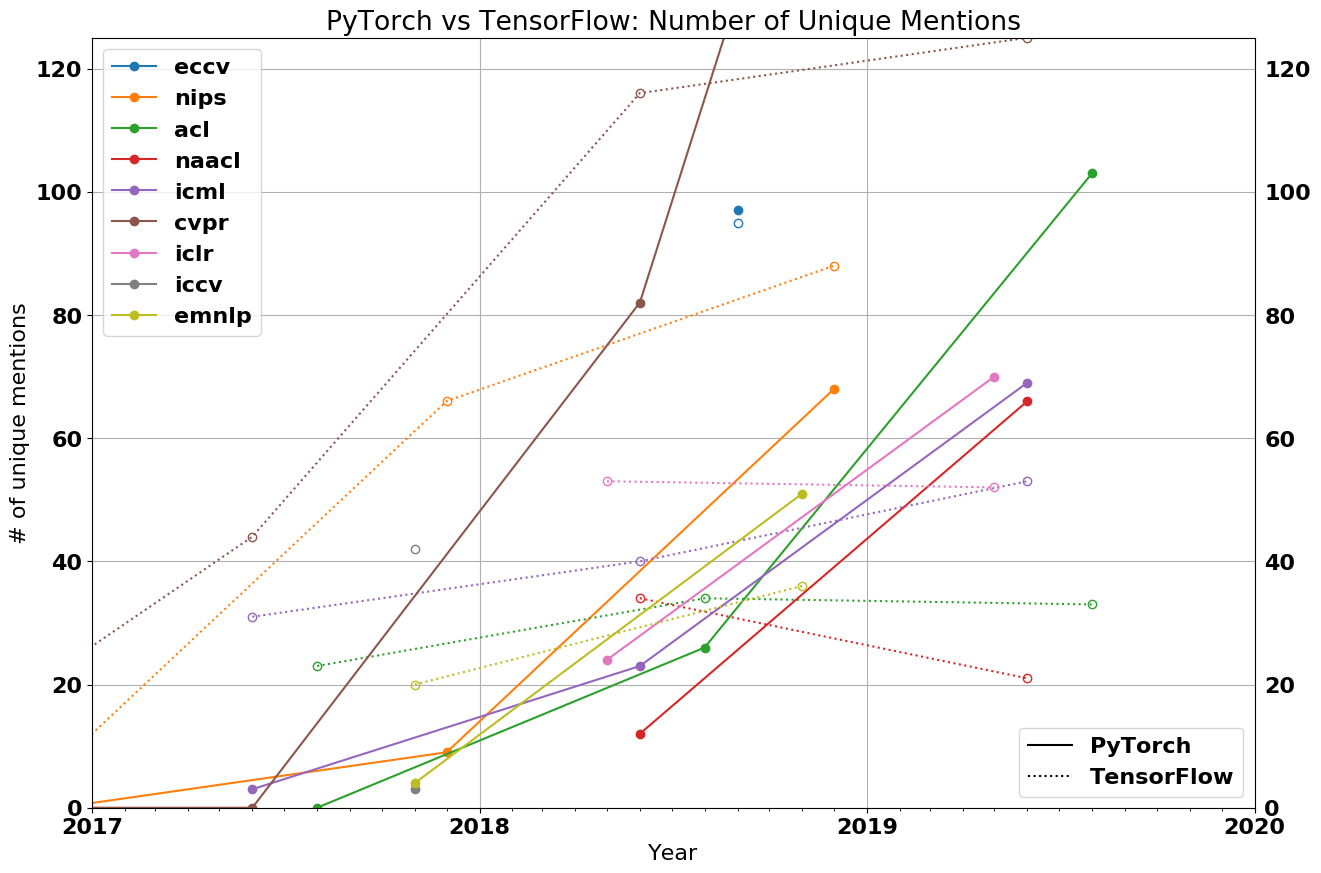

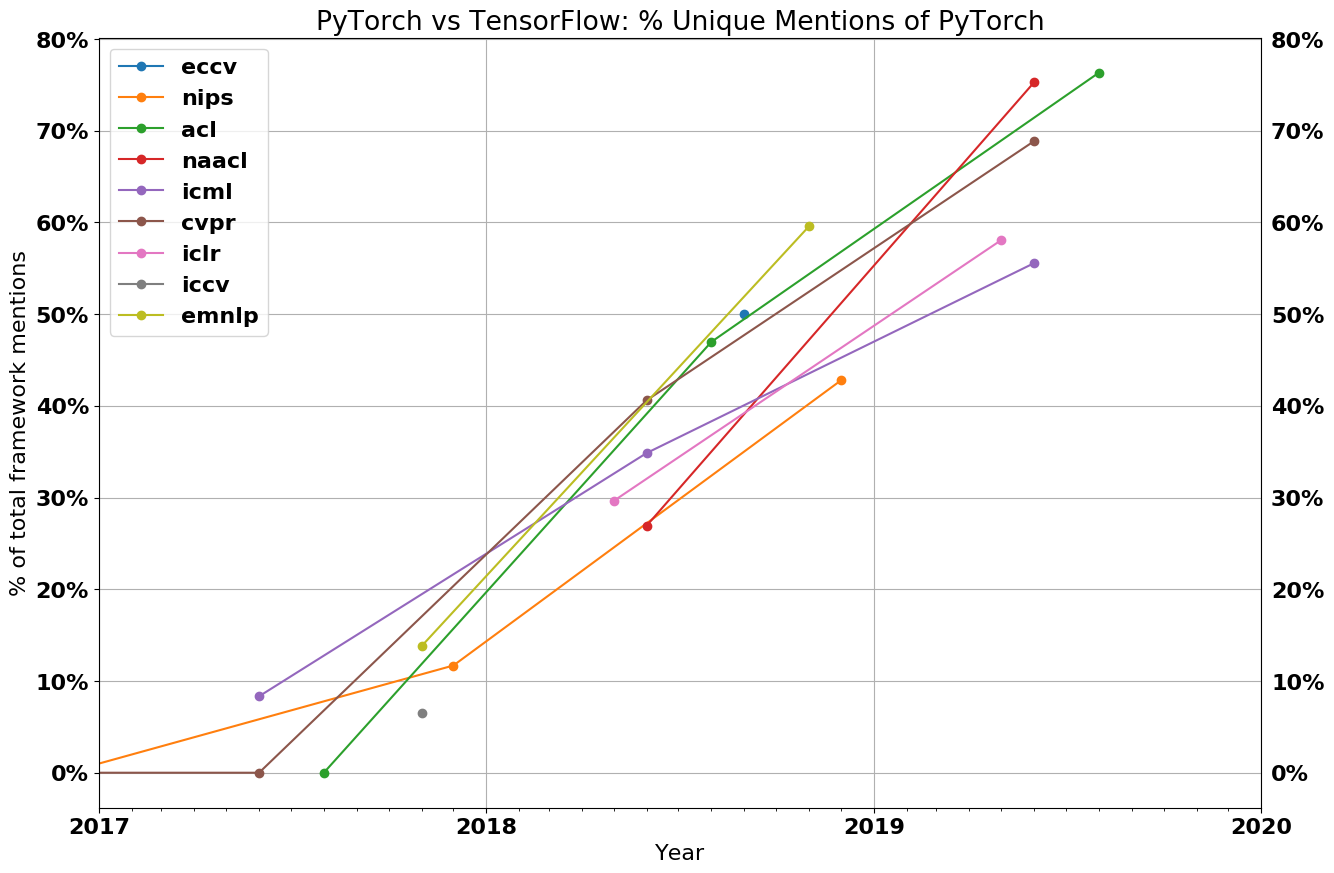

In 2019, the war for ML frameworks has two remaining main contenders: PyTorch and TensorFlow. My analysis suggests that researchers are abandoning TensorFlow and flocking to PyTorch in droves. Meanwhile in industry, TensorFlow is currently the platform of choice, but that may not be true for long

Why do researchers love PyTorch?

Researchers care about how fast they can iterate on their research, which is typically on relatively small datasets (datasets that can fit on one machine) and run on <8 GPUs. This is not typically gated heavily by performance considerations, but by their ability to quickly implement new ideas. On the other hand, industry considers performance to be of the utmost priority. While 10% faster runtime means nothing to a researcher, that could directly translate to millions of savings for a company

Researchers value how fast they can implement tools on their research.

Industry values performance because it translates directly into money.

From the early academic outputs Caffe and Theano to the massive industry-backed PyTorch and TensorFlow

It's not easy to track all the ML frameworks

Caffe, Theano ---> PyTorch, TensorFlow

When you run a PyTorch/TensorFlow model, most of the work isn’t actually being done in the framework itself, but rather by third party kernels. These kernels are often provided by the hardware vendor, and consist of operator libraries that higher-level frameworks can take advantage of. These are things like MKLDNN (for CPU) or cuDNN (for Nvidia GPUs). Higher-level frameworks break their computational graphs into chunks, which can then call these computational libraries. These libraries represent thousands of man hours of effort, and are often optimized for the architecture and application to yield the best performance

What happens behind when you run ML frameworks

Jax is built by the same people who built the original Autograd, and features both forward- and reverse-mode auto-differentiation. This allows computation of higher order derivatives orders of magnitude faster than what PyTorch/TensorFlow can offer

Jax

TensorFlow will always have a captive audience within Google/DeepMind, but I wonder whether Google will eventually relax this

PyTorch may become so favored that one day it could replace TensorFlow even at Google's offices

At their core, PyTorch and Tensorflow are auto-differentiation frameworks

auto-differentiation = takes the derivative of some function. It can be implemented in many ways; most ML frameworks choose "reverse-mode auto-differentiation" (known as "backpropagation")
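
To make the idea concrete, here is a minimal sketch of reverse-mode auto-differentiation for scalars in plain Python. The names `Var` and `backward` are hypothetical helpers invented for this note; this is not how PyTorch or TensorFlow implement it, only the same principle at toy scale: record local derivatives on the way forward, then push gradients from the output back to the inputs.

```python
class Var:
    """Scalar that remembers how it was computed (hypothetical helper)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuples of (parent Var, local derivative)
        self.grad = 0.0

    def __add__(self, other):
        # d(a + b)/da = 1 and d(a + b)/db = 1
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # d(a * b)/da = b and d(a * b)/db = a
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(output):
    """Propagate gradients from the output to every input (chain rule)."""
    order, seen = [], set()

    def visit(node):
        # Depth-first walk that yields a topological order of the graph.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    for node in reversed(order):
        for parent, local_derivative in node.parents:
            parent.grad += local_derivative * node.grad


x = Var(3.0)
y = Var(4.0)
z = x * y + x          # dz/dx = y + 1, dz/dy = x
backward(z)
print(x.grad, y.grad)  # 5.0 3.0
```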

Once your PyTorch model is in this IR, we gain all the benefits of graph mode. We can deploy PyTorch models in C++ without a Python dependency, or optimize them.

At the API level, TensorFlow eager mode is essentially identical to PyTorch’s eager mode, originally made popular by Chainer. This gives TensorFlow most of the advantages of PyTorch’s eager mode (ease of use, debuggability, and etc.) However, this also gives TensorFlow the same disadvantages. TensorFlow eager models can’t be exported to a non-Python environment, they can’t be optimized, they can’t run on mobile, etc. This puts TensorFlow in the same position as PyTorch, and they resolve it in essentially the same way - you can trace your code (tf.function) or reinterpret the Python code (Autograph).

TensorFlow Eager

TensorFlow came out years before PyTorch, and industry is slower to adopt new technologies than researchers

Reason why PyTorch wasn't previously more popular than TensorFlow

The PyTorch JIT is an intermediate representation (IR) for PyTorch called TorchScript. TorchScript is the “graph” representation of PyTorch. You can turn a regular PyTorch model into TorchScript by using either tracing or script mode.

PyTorch JIT

In 2018, PyTorch was a minority. Now, it is an overwhelming majority, with 69% of CVPR using PyTorch, 75+% of both NAACL and ACL, and 50+% of ICLR and ICML

Tracing takes a function and an input, records the operations that were executed with that input, and constructs the IR. Although straightforward, tracing has its downsides. For example, it can’t capture control flow that didn’t execute. For example, it can’t capture the false block of a conditional if it executed the true block

Tracing mode in PyTorch
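
The control-flow limitation is easy to reproduce without PyTorch. The sketch below is a toy tracer written for this note (`Traced`, `trace`, `replay` and `model` are all hypothetical names, not the PyTorch API): it records the operations executed for one example input and then replays them, which silently drops the branch that did not run.

```python
class Traced:
    """Value wrapper that logs every operation onto a shared tape."""

    def __init__(self, value, tape):
        self.value = value
        self.tape = tape

    def __mul__(self, constant):
        self.tape.append(("mul", constant))
        return Traced(self.value * constant, self.tape)

    def __add__(self, constant):
        self.tape.append(("add", constant))
        return Traced(self.value + constant, self.tape)


def model(x):
    # This `if` runs in plain Python, so the tracer never sees it.
    if x.value > 0:
        return x * 2
    return x + 100


def trace(fn, example):
    """Run fn once on an example input and return the recorded operations."""
    tape = []
    fn(Traced(example, tape))
    return tape


def replay(tape, value):
    """Re-execute the recorded operations on a new input."""
    for op, constant in tape:
        value = value * constant if op == "mul" else value + constant
    return value


tape = trace(model, 3)
print(tape)               # [('mul', 2)]
print(replay(tape, -5))   # -10, although model() itself would return 95
```

Script mode avoids this by reading the source code instead of a single execution, so both branches end up in the IR.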

On the other hand, industry has a litany of restrictions/requirements

Industry's requirements:

TensorFlow is still the dominant framework. For example, based on data [2] [3] from 2018 to 2019, TensorFlow had 1541 new job listings vs. 1437 job listings for PyTorch on public job boards, 3230 new TensorFlow Medium articles vs. 1200 PyTorch, 13.7k new GitHub stars for TensorFlow vs 7.2k for PyTorch, etc

Nowadays, the numbers still play against PyTorch

every major conference in 2019 has had a majority of papers implemented in PyTorch

Legend:

the transition from TensorFlow 1.0 to 2.0 will be difficult and provides a natural point for companies to evaluate PyTorch

Chance of faster transition to PyTorch in industry

For the application of machine learning in finance, it’s still very early days. Some of the stuff people have been doing in finance for a long time is simple machine learning, and some people were using neural networks back in the 80s and 90s. But now we have a lot more data and a lot more computing power, so with our creativity in machine learning research, “We are so much in the beginning that we can’t even picture where we’re going to be 20 years from now”

We are just in time to apply modern ML techniques to financial industry

ability to learn from data e.g. OpenAI and the Rubik’s Cube and DeepMind with AlphaGo required the equivalent of thousands of years of gameplay to achieve those milestones

Even while making the perfect algorithm, we have to expect long hours of learning

Pedro’s book “The Master Algorithm” takes readers on a journey through the five dominant paradigms of machine learning research on a quest for the master algorithm. Along the way, Pedro wanted to abstract away from the mechanics so that a broad audience, from the CXO to the consumer, can understand how machine learning is shaping our lives

"The Master Algorithm" book seems to be too abstract in such a case; however, it covers the following 5 paradigms:

We’ve always lived in a world which we didn’t completely understand but now we’re living in a world designed by us – for Pedro, that’s actually an improvement

We never fully understood our surroundings, but now we have great power to modify them

But at the end of the day, what we know about neuroscience today is not enough to determine what we do in AI, it’s only enough to give us ideas. In fact it’s a two way street – AI can help us to learn how the brain works and this loop between the two disciplines is a very important one and is growing very rapidly

Neuroscience can help us understand AI and the opposite

Pedro believes that success will come from unifying the different major types of learning and their master algorithms –not just combining, but unifying them such that “it feels like using one thing”

Interesting point of view on designing the master algorithm

if you look at the number of connections that the state of the art machine learning systems for some of these problems have, they’re more than many animals – they have many hundreds of millions or billions of connections

State of the art ML systems are composed of millions or billions of connections (close to humans)

There was this period of a couple of hundred years where we understood our technology. Now we just have to learn to live in a world where we don’t understand the machines that work for us, we just have to be confident they are working for us and doing their best

Should we just accept the fact that machines will rule the world with a mysterious intelligence?

team began its analysis on YouTube 8M, a publicly available dataset of YouTube videos

YouTube 8M - public dataset of YouTube videos. With this, we can analyse video features such as color

The trailer release for a new movie is a highly anticipated event that can help predict future success, so it behooves the business to ensure the trailer is hitting the right notes with moviegoers. To achieve this goal, the 20th Century Fox data science team partnered with Google’s Advanced Solutions Lab to create Merlin Video, a computer vision tool that learns dense representations of movie trailers to help predict a specific trailer’s future moviegoing audience

Merlin Video - computer vision tool to help predict a specific trailer's moviegoing audience

pipeline also includes a distance-based “collaborative filtering” (CF) model and a logistic regression layer that combines all the model outputs together to produce the movie attendance probability

other elements of pipeline:

Merlin returns the following labels: facial_hair, beard, screenshot, chin, human, film

Types of features Merlin Video can generate from a single trailer frame.

Final result of feature collecting and ordering:

The obvious choice was Cloud Machine Learning Engine (Cloud ML Engine), in conjunction with the TensorFlow deep learning framework

Merlin Video is powered by:

custom model learns the temporal sequencing of labels in the movie trailer

Temporal sequencing - times of different shots (e.g. long or short).

Temporal sequencing can convey information on:

When combined with historical customer data, sequencing analysis can be used to create predictions of customer behavior.

The elasticity of Cloud ML Engine allowed the data science team to iterate and test quickly, without compromising the integrity of the deep learning model

Cloud ML Engine reduced the deployment time from months to days

Architecture flow diagram for Merlin

The first challenge is the temporal position of the labels in the trailer: it matters when the labels occur in the trailer. The second challenge is the high dimensionality of this data

2 challenges that we find in labelling video clips: occurrence and volume of labels

20th Century Fox has been using this tool since the release of The Greatest Showman in 2017, and continues to use it to inform their latest releases

The Merlin Video tool is used nowadays by 20th Century Fox

When it comes to movies, analyzing text taken from a script is limiting because it only provides a skeleton of the story, without any of the additional dynamism that can entice an audience to see a movie

Analysing movie script isn't enough to predict the overall movie's attractiveness to the audience

model is trained end-to-end, and the loss of the logistic regression is back-propagated to all the trainable components (weights). Merlin’s data pipeline is refreshed weekly to account for new trailer releases

Way the model is trained and located in the pipeline

After a movie’s release, we are able to process the data on which movies were previously seen by that audience. The table below shows the top 20 actual moviegoer audiences (Comp ACT) compared to the top 20 predicted audiences (Comp PRED)

Way of validating the Merlin model

It’s possible to check whether a variable refers to None with the comparison operators == and !=

Checking against None with == and !=

>>> x, y = 2, None

>>> x == None

False

>>> y == None

True

>>> x != None

True

>>> y != None

False

More Pythonic way by using is and is not:

>>> x is None

False

>>> y is None

True

>>> x is not None

True

>>> y is not None

False
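
One reason `is` is preferred: `==` can be overridden by a class, while identity cannot. A small sketch, where `AlwaysEqual` is a hypothetical class made up for illustration:

```python
class AlwaysEqual:
    """Hypothetical class whose __eq__ claims equality with everything."""

    def __eq__(self, other):
        return True


obj = AlwaysEqual()
equal_to_none = (obj == None)  # True: == delegates to the overridden __eq__
is_none = (obj is None)        # False: identity checks cannot be overridden
print(equal_to_none, is_none)  # True False
```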

Python allows defining getter and setter methods similar to those in C++ and Java

Getters and Setters in Python:

>>> class C:

...     def get_x(self):

...         return self.__x

...     def set_x(self, value):

...         self.__x = value

Get and set the state of the object:

>>> c = C()

>>> c.set_x(2)

>>> c.get_x()

2

In almost all cases, you can use the range to get an iterator that yields integers

Iterating over Sequences and Mappings

>>> x = [1, 2, 4, 8, 16]

>>> for i in range(len(x)):

...     print(x[i])

...

1

2

4

8

16

better way of iterating over a sequence:

>>> for item in x:

...     print(item)

...

1

2

4

8

16

Sometimes you need both the items from a sequence and the corresponding indices

Iterating with indices:

>>> for i in range(len(x)):

...     print(i, x[i])

...

0 1

1 2

2 4

3 8

4 16

Better way by using enumerate:

>>> for i, item in enumerate(x):

...     print(i, item)

...

0 1

1 2

2 4

3 8

4 16

But what if you want to iterate in the reversed order? Of course, the range is an option again

Iterating over a reversed order:

>>> for i in range(len(x)-1, -1, -1):

...     print(x[i])

...

16

8

4

2

1

More elegant way:

>>> for item in x[::-1]:

...     print(item)

...

16

8

4

2

1

Pythonic way of reversing an order:

>>> for item in reversed(x):

...     print(item)

...

16

8

4

2

1

it’s often more elegant to define and use properties, especially in simple cases

Defining some properties (considered to be more Pythonic):

>>> class C:

...     @property

...     def x(self):

...         return self.__x

...     @x.setter

...     def x(self, value):

...         self.__x = value

Result:

>>> c = C()

>>> c.x = 2

>>> c.x

2
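
Properties pay off once a setter needs to do more than store a value, because callers keep the plain attribute syntax. A minimal sketch, assuming a hypothetical `Temperature` class that validates its input:

```python
class Temperature:
    """Hypothetical example: attribute syntax with validation behind it."""

    def __init__(self, celsius):
        self.celsius = celsius  # routed through the setter below

    @property
    def celsius(self):
        return self._celsius

    @celsius.setter
    def celsius(self, value):
        if value < -273.15:
            raise ValueError("temperature below absolute zero")
        self._celsius = value


t = Temperature(20.0)
t.celsius = 25.0
print(t.celsius)  # 25.0

try:
    t.celsius = -300.0
    rejected = False
except ValueError:
    rejected = True
print(rejected)   # True
```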

Python has a very flexible system of providing arguments to functions and methods. Optional arguments are a part of this offer. But be careful: you usually don’t want to use mutable optional arguments

Avoiding mutable optional arguments:

>>> def f(value, seq=[]):

...     seq.append(value)

...     return seq

If you don't provide seq, f() appends a value to an empty list and returns something like [value]:

>>> f(value=2)

[2]

Don't be fooled. This option isn't fine...

>>> f(value=4)

[2, 4]

>>> f(value=8)

[2, 4, 8]

>>> f(value=16)

[2, 4, 8, 16]
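
The list keeps growing because the default value is created once, when the `def` statement runs, and is then stored on the function object. A short sketch that makes this visible through `f.__defaults__`:

```python
def f(value, seq=[]):
    seq.append(value)
    return seq


defaults_before = list(f.__defaults__[0])  # copy of the stored default list
first = f(2)
second = f(4)
defaults_after = list(f.__defaults__[0])

print(defaults_before)   # []
print(defaults_after)    # [2, 4]
print(first is second)   # True: both calls returned the same list object
```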

Iterating over a dictionary yields its keys

Iterating over a dictionary:

>>> z = {'a': 0, 'b': 1}

>>> for k in z:

...     print(k, z[k])

...

a 0

b 1

Applying method .items():

>>> for k, v in z.items():

...     print(k, v)

...

a 0

b 1

You can also use the methods .keys() and .values()
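
A quick sketch of those two methods on the same dictionary; both return views that can be iterated directly or passed to functions such as `list` or `sum`:

```python
z = {'a': 0, 'b': 1}

keys = list(z.keys())      # keys in insertion order
values = list(z.values())  # matching values in the same order
total = sum(z.values())    # views work with any function that takes an iterable

print(keys)    # ['a', 'b']
print(values)  # [0, 1]
print(total)   # 1
```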

following the rules called The Zen of Python or PEP 20

The Zen of Python or PEP 20 <--- rules followed by Python

You can use unpacking to assign values to your variables

Unpacking <--- assign values

>>> a, b = 2, 'my-string'

>>> a

2

>>> b

'my-string'

what if you want to iterate over two or more sequences? Of course, you can use the range again

Iterating over two or more sequences:

>>> y = 'abcde'

>>> for i in range(len(x)):

...     print(x[i], y[i])

...

1 a

2 b

4 c

8 d

16 e

Better solution by applying zip:

>>> for item in zip(x, y):

...     print(item)

...

(1, 'a')

(2, 'b')

(4, 'c')

(8, 'd')

(16, 'e')

Combining it with unpacking:

>>> for x_item, y_item in zip(x, y):

...     print(x_item, y_item)

...

1 a

2 b

4 c

8 d

16 e
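
One thing to keep in mind: `zip` stops at the shortest input. When the sequences may differ in length, `itertools.zip_longest` from the standard library pads the shorter one. A small sketch (the shortened `y` is an assumption made for the example):

```python
from itertools import zip_longest

x = [1, 2, 4, 8, 16]
y = 'abc'  # deliberately shorter than x

truncated = list(zip(x, y))
padded = list(zip_longest(x, y, fillvalue='-'))

print(truncated)  # [(1, 'a'), (2, 'b'), (4, 'c')]
print(padded)     # [(1, 'a'), (2, 'b'), (4, 'c'), (8, '-'), (16, '-')]
```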

None is a special and unique object in Python. It serves a purpose similar to null in C-like languages

None (Python) ==similar== Null (C)

Python allows you to chain the comparison operations. So, you don’t have to use and to check if two or more comparisons are True

Chaining <--- checking if two or more operations are True

>>> x = 4

>>> x >= 2 and x <= 8

True

More compact (mathematical) form:

>>> 2 <= x <= 8

True

>>> 2 <= x <= 3

False

Unpacking can be used for the assignment to multiple variables in more complex cases

Unpacking <--- assign even more variables

>>> x = (1, 2, 4, 8, 16)

>>> a = x[0]

>>> b = x[1]

>>> c = x[2]

>>> d = x[3]

>>> e = x[4]

>>> a, b, c, d, e

(1, 2, 4, 8, 16)

more readable approach:

>>> a, b, c, d, e = x

>>> a, b, c, d, e

(1, 2, 4, 8, 16)

even cooler (* collects values not assigned to others):

>>> a, *y, e = x

>>> a, e, y

(1, 16, [2, 4, 8])

the most concise and elegant variables swap

Unpacking <--- swap values

>>> a, b = b, a

>>> a

'my-string'

>>> b

2

Python doesn’t have real private class members. However, there’s a convention that says that you shouldn’t access or modify the members beginning with the underscore (_) outside their instances. They are not guaranteed to preserve the existing behavior.

Avoiding accessing protected class members. Consider the code:

>>> class C:

...     def __init__(self, *args):

...         self.x, self._y, self.__z = args

...

>>> c = C(1, 2, 4)

The instances of class C have three data members: .x, ._y, and ._C__z (because z has two underscores, unlike y). If a member’s name begins with a double underscore (dunder), it becomes mangled, that is modified. That’s why you have ._C__z instead of .__z.

Now, it's quite OK to access/modify .x directly:

>>> c.x # OK

1

You can also access ._y, from outside its instance, but it's considered a bad practice:

>>> c._y # Possible, but a bad practice!

2

You can’t access .__z because it’s mangled, but you can access or modify ._C__z:

>>> c.__z # Error!

Traceback (most recent call last):

  File "<stdin>", line 1, in <module>

AttributeError: 'C' object has no attribute '__z'

>>> c._C__z # Possible, but even worse!

4
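
The mangled name can be seen directly by listing the instance attributes with `vars`. A short sketch using the same class:

```python
class C:
    def __init__(self, *args):
        self.x, self._y, self.__z = args


c = C(1, 2, 4)
attributes = vars(c)  # the instance __dict__, with the names as actually stored
print(attributes)     # {'x': 1, '_y': 2, '_C__z': 4}
```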

what if an exception occurs while processing your file? Then my_file.close() is never executed. You can handle this with exception-handling syntax or with context managers

A `with` block to handle exceptions:

>>> with open('filename.csv', 'w') as my_file:

...     pass  # do something with `my_file`

Using the with block means that the special methods .__enter__() and .__exit__() are called, even in the cases of exceptions
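
To see that behaviour, here is a minimal sketch with a hand-written context manager. `Resource` is a hypothetical class that only logs when its special methods run:

```python
class Resource:
    """Hypothetical context manager that records when its hooks are called."""

    def __init__(self):
        self.events = []

    def __enter__(self):
        self.events.append("enter")
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.events.append("exit")
        return False  # False means: do not swallow the exception


resource = Resource()
try:
    with resource:
        raise RuntimeError("something went wrong inside the block")
except RuntimeError:
    resource.events.append("caught")

print(resource.events)  # ['enter', 'exit', 'caught']
```

The cleanup in `__exit__` runs before the exception reaches the `except` clause, which is exactly what a file object does when it closes itself.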

Python code should be elegant, concise, and readable. It should be beautiful. The ultimate resource on how to write beautiful Python code is Style Guide for Python Code or PEP 8

Write beautiful Python code with

PEP 8 provides the style guide for Python code, and PEP 20 represents the principles of Python language

Python also supports chained assignments. So, if you want to assign the same value to multiple variables, you can do it in a straightforward way

Chained assignments <--- assign the same value to multiple variables:

>>> x = 2

>>> y = 2

>>> z = 2

More elegant way:

>>> x, y, z = 2, 2, 2

Chained assignments:

>>> x = y = z = 2

>>> x, y, z

(2, 2, 2)

open a file and process it

Open a file:

>>> my_file = open('filename.csv', 'w')

>>> # do something with `my_file`

Close the file to release the file handle and flush buffered writes:

>>> my_file = open('filename.csv', 'w')

>>> # do something with `my_file`

>>> my_file.close()
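
If a context manager is not an option, the exception-handling route is a `try`/`finally` block, which guarantees that `close()` runs. A sketch under the assumption that writing to a temporary directory is acceptable (the path is made up for the example):

```python
import os
import tempfile

# Hypothetical location: a fresh temporary directory instead of the working dir.
path = os.path.join(tempfile.mkdtemp(), "filename.csv")

my_file = open(path, "w")
try:
    my_file.write("a,b\n1,2\n")  # any processing that might raise
finally:
    my_file.close()              # runs whether or not an exception occurred

print(my_file.closed)  # True
```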

The author of the class probably begins the names with the underscore(s) to tell you, “don’t use it”

__ <--- don't touch!

You can keep away from that with some additional logic. One of the ways is this:

You can keep away from the problem:

>>> def f(value, seq=[]):

...     seq.append(value)

...     return seq

with some additional logic:

>>> def f(value, seq=None):

...     if seq is None:

...         seq = []

...     seq.append(value)

...     return seq

Shorter version (note that it also replaces an explicitly passed empty list):

>>> def f(value, seq=None):

...     if not seq:

...         seq = []

...     seq.append(value)

...     return seq

The result:

>>> f(value=2)

[2]

>>> f(value=4)

[4]

>>> f(value=8)

[8]

>>> f(value=16)

[16]
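
The two variants are not fully equivalent. With `if not seq`, an empty list passed in by the caller is also falsy, so it gets replaced by a new list and the caller's list is left untouched. A sketch with both variants side by side (the function names are made up for the comparison):

```python
def f_is_none(value, seq=None):
    if seq is None:
        seq = []
    seq.append(value)
    return seq


def f_not(value, seq=None):
    if not seq:  # also true for an explicitly passed empty list
        seq = []
    seq.append(value)
    return seq


mine = []
f_is_none(1, mine)
print(mine)    # [1]: the caller's list was extended

other = []
f_not(1, other)
print(other)   # []: the caller's empty list was silently replaced
```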

The Pythonic way is to exploit the fact that zero is interpreted as False in a Boolean context, while all other numbers are considered as True

Comparing to zero - Pythonic way:

>>> bool(0)

False

>>> bool(-1), bool(1), bool(20), bool(28.4)

(True, True, True, True)

Using if item instead of if item != 0 (with x = (1, 2, 0, 3, 0, 4)):

>>> for item in x:

...     if item:

...         print(item)

...

1

2

3

4

You can also use if not item instead of if item == 0

When you have numeric data, and you need to check if the numbers are equal to zero, you can but don’t have to use the comparison operators == and !=

Comparing to zero:

>>> x = (1, 2, 0, 3, 0, 4)

>>> for item in x:

...     if item != 0:

...         print(item)

...

1

2

3

4

The reason that Julia is fast (ten to 30 times faster than Python) is because it is compiled and not interpreted

Julia seems to be even faster than Scala when comparing to the speed of Python

Scala is ten times faster than Python

Interesting estimation

First of all, write a script that carries out the task in a sequential fashion. Secondly, transform the script so that it carries out the task using the map function. Lastly, replace map with a neat function from the concurrent.futures module

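Concurrent Python programs in 3 steps:

1. Write a script that carries out the task sequentially.

2. Transform the script so that it carries out the task using the map function.

3. Replace map with a neat function from the concurrent.futures module.

Python standard library makes it fairly easy to create threads and processes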
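
The three steps can be sketched as follows. The `work` function and its inputs are placeholders invented for the example; a real task would typically be I/O-bound, such as downloading files.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def work(n):
    """Placeholder task: pretend to wait on I/O, then return a result."""
    time.sleep(0.01)
    return n * n


inputs = [1, 2, 3, 4]

# Step 1: sequential version.
sequential = [work(n) for n in inputs]

# Step 2: the same task expressed with map.
mapped = list(map(work, inputs))

# Step 3: swap the built-in map for Executor.map to run in a thread pool.
with ThreadPoolExecutor(max_workers=4) as executor:
    threaded = list(executor.map(work, inputs))

print(sequential == mapped == threaded)  # True
```

`Executor.map` returns results in input order, so the rest of the script does not need to change.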

Fortunately, there is a workaround for concurrent programming in Python

Python is a poor choice for concurrent programming. A principal reason for this is the ‘Global Interpreter Lock’ or GIL. The GIL ensures that only one thread accesses Python objects at a time, effectively preventing Python from being able to distribute threads onto several CPUs by default

Python isn't the best choice for concurrent programming

Introducing multiprocessing now is a cinch; I just replace ThreadPoolExecutor with ProcessPoolExecutor in the previous listing

Replacing multithreading with multiprocessing:

replace ThreadPoolExecutor with ProcessPoolExecutor

this article merely scratches the surface. If you want to dig deeper into concurrency in Python, there is an excellent talk titled Thinking about Concurrency by Raymond Hettinger on the subject. Make sure to also check out the slides whilst you’re at it

Learn more about concurrency:

As the name suggests, multiprocessing spawns processes, while multithreading spawns threads. In Python, one process can run several threads. Each process has its own Python interpreter and its own GIL. As a result, starting a process is a heftier and more time-consuming undertaking than starting a thread.

Reason for multiprocessing being slower than multithreading:

Multiprocessing is more time-consuming to use because of its architecture

its purpose is to dump Python tracebacks explicitly on a fault, after a timeout, or on a user signal

Faulthandler in contrast to tracing tracks specific events and has slightly better documentation

what parts of the software do we profile (measure its performance metrics)

Most profiled parts of the software:

Line profiling, as the name suggests, means to profile your Python code line by line

Line profiling

The profile module gives similar results with similar commands. Typically, you switch to profile if cProfile isn’t available

cProfile > profile. Use profile only when cProfile isn't available

Another common component to profile is the memory usage. The purpose is to find memory leaks and optimize the memory usage in your Python programs

Memory usage can be tracked with pympler or objgraph libraries
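
Besides those third-party libraries, the standard library ships `tracemalloc`, which is enough for a first look. This is a swapped-in alternative rather than what the quoted article uses; a minimal sketch, with a throwaway `payload` list as the thing being measured:

```python
import tracemalloc

tracemalloc.start()

# Allocate roughly 1 MB so there is something to measure.
payload = [bytes(1000) for _ in range(1000)]

current, peak = tracemalloc.get_traced_memory()  # both values are in bytes
tracemalloc.stop()

print(f"current={current} bytes, peak={peak} bytes")
```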

The purpose of the trace module is to “monitor which statements and functions are executed as a program runs to produce coverage and call-graph information”

Purpose of trace module

method profiling tool like cProfile (which is available in the Python language), the timing metrics for methods can show you statistics, such as the number of calls (shown as ncalls), total time spent in the function (tottime), time per call (tottime/ncalls and shown as percall), cumulative time spent in a function (cumtime), and cumulative time per call (quotient of cumtime over the number of primitive calls and shown as percall after cumtime)

cProfile is one of the Python tools to measure method execution time. Specifically:

tracing is a special use case of logging in order to record information about a program’s execution

Tracing (more for software devs) is very similar to event logging (more for system administrators)

If a method has an acceptable speed but is so frequently called that it becomes a huge time sink, you would want to know this from your profiler

We also want to measure the frequency of method calls. cProfile can highlight the number of function calls and how many of those are primitive (non-recursive) calls
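
A minimal sketch of reading those numbers from cProfile. The recursive `fib` function is a made-up workload chosen because recursion makes total calls and primitive calls differ; the report is written to a string so it can be inspected or logged.

```python
import cProfile
import io
import pstats


def fib(n):
    """Deliberately naive recursion so the call counts are interesting."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


profiler = cProfile.Profile()
result = profiler.runcall(fib, 10)  # profile a single call

stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream)
stats.sort_stats("cumulative").print_stats(5)  # five most expensive entries

report = stream.getvalue()
print(report)  # columns: ncalls, tottime, percall, cumtime, percall
```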

trace and faulthandler modules cover basic tracing

Basic Python libraries for tracing

Nested for loop using list comprehension to come up with 5x5 matrix:

arr = [[i for i in range(5)] for j in range(5)]

arr

>>> [[0, 1, 2, 3, 4],

[0, 1, 2, 3, 4],

[0, 1, 2, 3, 4],

[0, 1, 2, 3, 4],

[0, 1, 2, 3, 4]]

Dictionary comprehension in Python to create a simple dictionary:

x = [2,45,21,45]

y = {i:v for i,v in enumerate(x)}

print(y)

>>> {0: 2, 1: 45, 2: 21, 3: 45}

Flattening a multi-dimensional matrix into a 1-D array using list comprehension:

x = [[0, 1, 2, 3, 4],

[5, 6, 7, 8, 9]]

arr = [i for j in x for i in j]

print(arr)

>>> [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

2 examples of conditional statements in list comprehension:

arr = [i for i in range(10) if i % 2 == 0]

print(arr)

>>> [0, 2, 4, 6, 8]

and:

arr = ["Even" if i % 2 == 0 else "Odd" for i in range(10)]

print(arr)

>>> ['Even', 'Odd', 'Even', 'Odd', 'Even', 'Odd', 'Even', 'Odd', 'Even', 'Odd']

use pyenv. With it, you can have any version you want at your disposal, very easily.

pyenv allows you to easily switch between Python versions

the __ methods allow us to interact with core concepts of the python language. You can see them also as a mechanism of implementing behaviours, interface methods.

__ methods

Dunder or magic methods are methods that start and end with a double underscore, like __init__ or __str__. These methods are the mechanism we use to interact directly with Python's data model

Dunder methods
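
A small sketch of what that looks like in practice. `Playlist` is a hypothetical class; by defining a few dunder methods it starts working with `len()`, indexing, `in`, and `str()`:

```python
class Playlist:
    """Hypothetical container that plugs into built-in Python behaviour."""

    def __init__(self, songs):
        self._songs = list(songs)

    def __len__(self):            # used by len(playlist)
        return len(self._songs)

    def __getitem__(self, index): # used by playlist[i], iteration, and `in`
        return self._songs[index]

    def __str__(self):            # used by str(playlist) and print(playlist)
        return f"Playlist of {len(self)} songs"


playlist = Playlist(["intro", "verse", "outro"])
print(len(playlist))        # 3
print(playlist[0])          # intro
print("verse" in playlist)  # True
print(playlist)             # Playlist of 3 songs
```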

Dutch programmer Guido van Rossum designed Python in 1991, naming it after the British television comedy Monty Python's Flying Circus because he was reading the show's scripts at the time.

Origins of Python name