AWS is 10x slower than a dedicated server for the same price

- Video Title: AWS is 10x slower than a dedicated server for the same price

- Core Argument: Cloud providers, particularly AWS, charge significantly more for base-level compute instances than traditional Virtual Private Server (VPS) providers while delivering substantially less performance. The video also argues that horizontal scaling is unnecessary for roughly 95% of businesses.

- Comparison Setup: The video benchmarked entry-level AWS compute (an EC2 instance and an ECS Fargate task) against a similarly specced VPS (1 vCPU, 2 GB RAM) from a popular German provider (Hetzner, referred to as HTNA in the video) using the Sysbench tool.

- AWS EC2 Results: The base EC2 instance cost almost 3 times as much as the VPS but performed far worse:

  - CPU: Approximately 20% of the VPS's performance.

  - Memory: Only 7.74% of the VPS's performance.

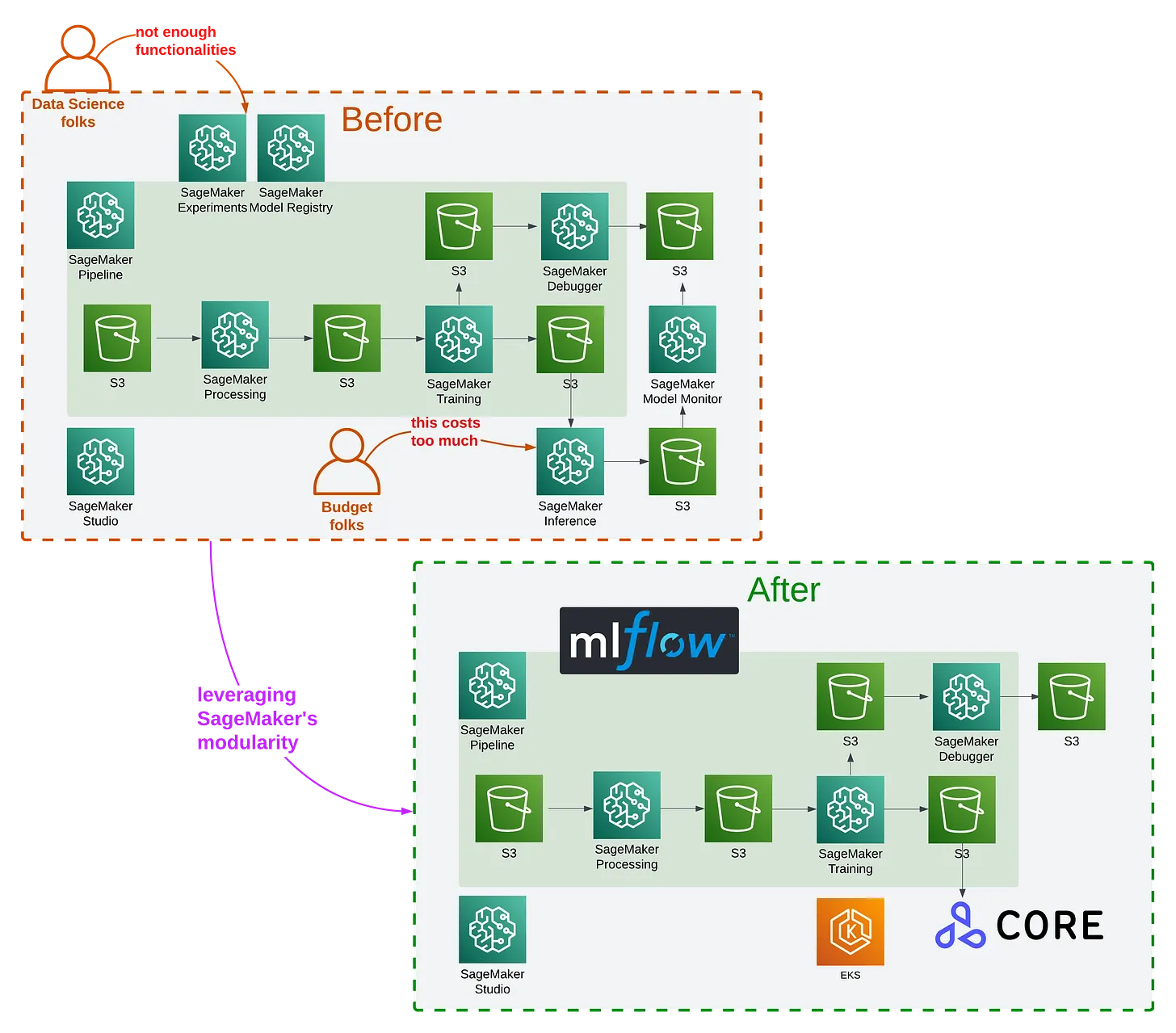
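
The comparison setup described above could be reproduced along the following lines. This is a minimal sketch, not the video's actual script: the summary only states that Sysbench was used, so the flags chosen here (`--threads=1`, `--time=10`, a 1 GB file set, the `rndrw` mode) and the helper names `sysbench_commands` and `run_benchmarks` are assumptions for illustration.

```python
import shutil
import subprocess


def sysbench_commands(threads: int = 1, seconds: int = 10) -> list[list[str]]:
    """Build sysbench 1.x command lines for CPU, memory, and file I/O tests.

    The parameter values are assumptions; the video's settings are not known.
    """
    common = [f"--threads={threads}", f"--time={seconds}"]
    fileio = ["sysbench", "fileio", "--file-total-size=1G", "--file-test-mode=rndrw"]
    return [
        ["sysbench", "cpu", *common, "run"],
        ["sysbench", "memory", *common, "run"],
        [*fileio, "prepare"],        # create the test files
        [*fileio, *common, "run"],   # random read/write test
        [*fileio, "cleanup"],        # delete the test files
    ]


def run_benchmarks() -> list[str]:
    """Run every command in order and return the raw sysbench reports."""
    if shutil.which("sysbench") is None:
        raise RuntimeError("sysbench is not installed or not on PATH")
    return [
        subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
        for cmd in sysbench_commands()
    ]
```

Running `run_benchmarks()` on each machine with identical arguments keeps the comparison like-for-like; repeating it several times would also expose the kind of run-to-run variation discussed for shared instances.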

- AWS ECS Fargate Results: Setting up the "serverless" Fargate option was complex and involved multiple AWS services (ECS, ECR, IAM).

  - Cost: The Fargate task cost 6 times as much as the VPS.

  - Performance: Better than EC2, but still slower and less consistent than the VPS: 23% (CPU), 80% (memory), and 84% (file I/O) of the VPS's performance, with fluctuations of up to 18%.

- Cost Efficiency: A dedicated VPS with 4 vCPUs and 16 GB of RAM was found to be cheaper than the 1 vCPU ECS Fargate task used in the test.

- Conclusion: For a similar price point, a dedicated server is about 10 times faster than an equivalent AWS cloud instance. The video concludes that AWS's dominance is due to its large marketing spend, not superior technical or cost efficiency. A real-world example cited is Lichess, which supports 5.2 million chess games per day on a single dedicated server [00:12:06].
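
To see how the individual results relate to the headline figure, the reported numbers can be combined into a performance-per-cost ratio. The sketch below is illustrative only: it uses the percentages and price multiples quoted in this summary, rounds "almost 3 times" to 3.0, and assumes performance scales linearly with price, which the video does not claim. The names `RESULTS`, `vps_advantage`, and `advantage_table` are hypothetical.

```python
# (performance as a fraction of the VPS, price as a multiple of the VPS).
# Values come from the summary; "almost 3 times" is rounded to 3.0 (assumption).
RESULTS = {
    ("EC2", "cpu"): (0.20, 3.0),
    ("EC2", "memory"): (0.0774, 3.0),
    ("Fargate", "cpu"): (0.23, 6.0),
    ("Fargate", "memory"): (0.80, 6.0),
    ("Fargate", "file_io"): (0.84, 6.0),
}


def vps_advantage(perf_fraction: float, cost_multiple: float) -> float:
    """How many times more performance per unit of cost the VPS delivers."""
    return cost_multiple / perf_fraction


def advantage_table() -> dict:
    """Compute the VPS advantage for every (service, test) pair, rounded to 0.1."""
    return {
        key: round(vps_advantage(perf, cost), 1)
        for key, (perf, cost) in RESULTS.items()
    }


if __name__ == "__main__":
    for (service, test), factor in advantage_table().items():
        print(f"{service:8s} {test:8s} VPS is ~{factor}x better per unit of cost")
```

Under these assumptions the VPS comes out roughly 7x to 39x ahead per unit of cost depending on the test (about 15x for EC2 CPU), which is broadly in line with the order of magnitude of the video's "about 10 times" claim.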

Hacker News Discussion

The discussion was split between criticizing the video's methodology and debating the fundamental value proposition of hyperscale cloud providers versus traditional hosting.

- Criticism of Methodology: Several top comments argued the video was a "low effort 'ha ha AWS sucks' video" with an "AWFUL analysis." Critics suggested that the author did not properly configure or understand ECS/Fargate, and that benchmarking the lowest-end shared instances is not a "proper comparison"; in their view, a fair test would use mid-range hardware and careful configuration.

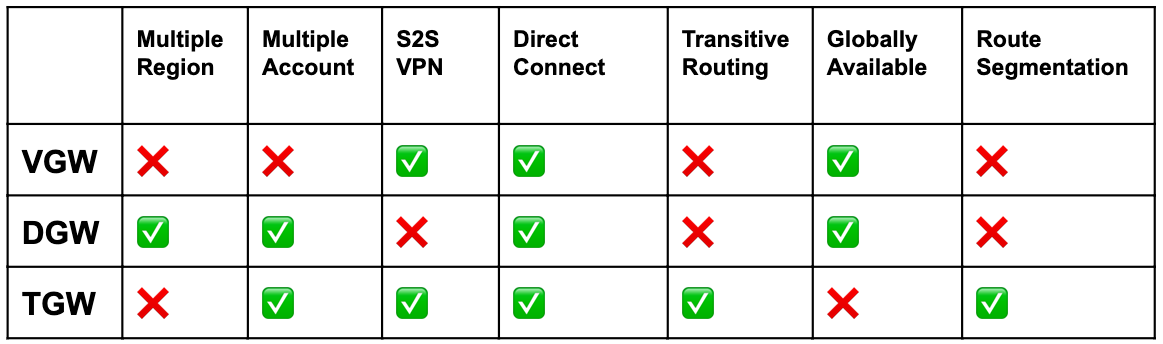

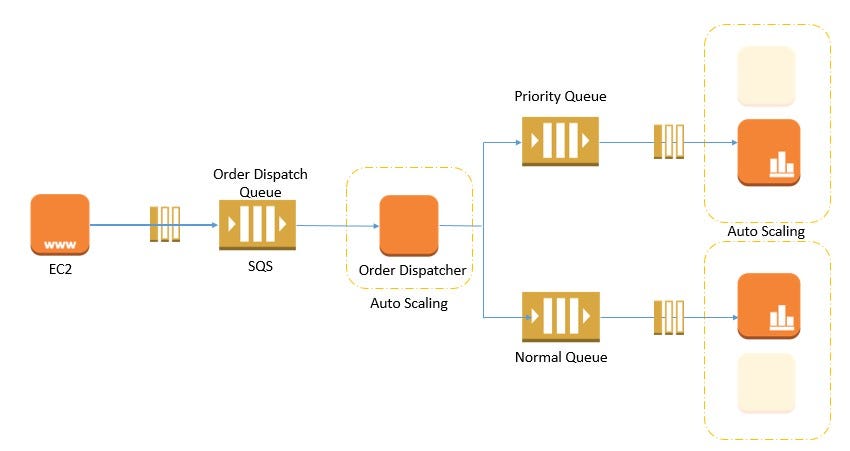

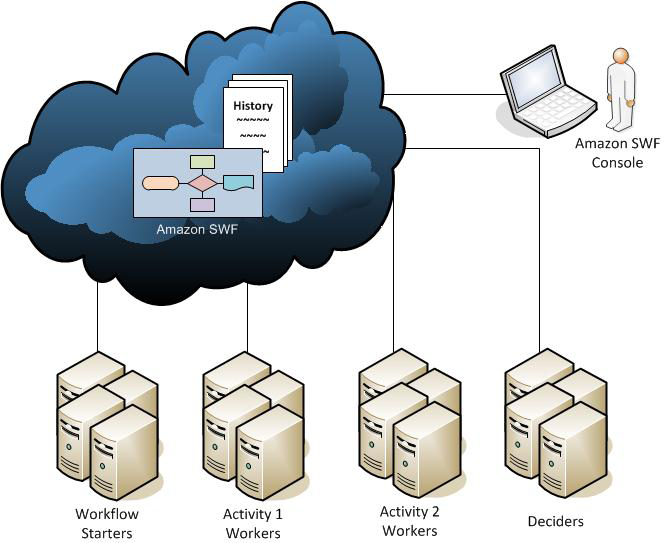

- The Value of AWS Services: Many users defended AWS by stating that customers rarely choose it just for the base EC2 instance price. In their view, the true value lies in the managed ecosystem of services like RDS, S3, EKS, ELB, and Cognito, which abstract away operational complexity. They also noted that large customers can negotiate off-list pricing.

- Complexity and Cost Rebuttals: Counter-arguments highlighted that managing AWS complexity often requires hiring expensive "cloud wizards" (Solutions Architects or specialized DevOps staff), so the cost of a SysAdmin team is not eliminated but replaced by equally high cloud management costs. Anecdotes about sudden huge AWS bills and complex debugging were common.

- The "Nobody Gets Fired" Factor: The most common justification for choosing AWS, even at a higher cost, was risk aversion and the avoidance of personal liability. If a core AWS region (like us-east-1) goes down, it is a shared industry failure; if a self-hosted server fails, the admin is solely responsible for fixing it at 3 a.m.

- Alternative Recommendations: The discussion frequently validated the use of non-hyperscale providers like Hetzner and OVH for significant cost savings and comparable reliability for many non-"cloud native" workloads.