We ran high-level US civil war simulations. Minnesota is exactly how they start
by [[Claire Finkelstein]] in The Guardian
accessed on 2026-01-21T10:07:26

- Jan 2026

-

www.theguardian.com

Tags

- Trump Administration

- Center for Ethics and the Rule of Law (CERL)

- read

- Renee Nicole Good

- Kristi Noem

- presidential immunity

- U.S. Immigration and Customs Enforcement (ICE)

- United States Department of Defense

- use of force

- constitutional crisis

- Department of Defense’s Rules for the Use of Force


-

- Dec 2025

-

www.americanscientist.org

-

Instead, such perturbed ecosystems may settle on a new composition that includes different species, many of them resistant to antibiotic treatment.

for - progress trap - long term antibiotic use - can create new composition of microbiome with species resistant to antibiotic treatment

-

- Oct 2025

-

www.berkley-fishing.com

-

https://www.berkley-fishing.com/collections/line-tools/products/line-counter

Off-label use of a line counter with typewriter ribbon length?

-

-

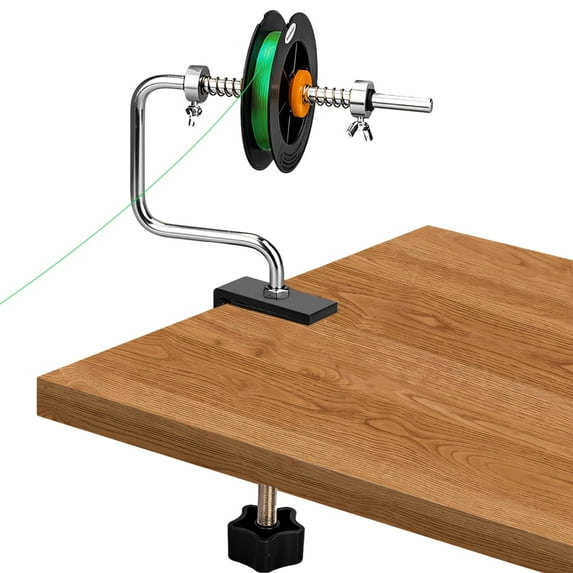

www.walmart.com

-

Off-label uses for linewinders as typewriter ribbon winders?

- https://www.walmart.com/ip/Fishing-Line-Winder-Spooler-Aluminum-Alloy-Spooling-Station-Adjustable-Rotating-Axis-Steel-Frame-Clamp-Workbench-Fishing-Line-Spooler-Machine-Multipl/17084510284?classType=REGULAR

- https://www.walmart.com/ip/Fishing-Line-Spoolers-Adjustable-Fishing-Line-Winders-Spoolers-Machine-Spinnings-Baitcasting-Reel-Spooling/17886252799?classType=VARIANT

- https://www.walmart.com/ip/Apooke-Spinning-Reel-Fishing-Line-Fishingline-Winder-Equipment-Winder-Spooler-Machine-with-Suction-Cup-Fishing-Line-Spooler/5648376830?classType=REGULAR

Tags

- typewriter ribbon winder

- linewinders

- fishing line

- typewriter spools

- off-label use cases

- fishing

- photos


-

-

www.trianglesport.com

-

https://www.trianglesport.com/

Potential off-label use cases for their line winders with respect to spooling typewriter ribbon?

-

-

docs.google.com

-

Introduction: AI is now recently everywhere but we still need humans


-

- Sep 2025

-

www.reddit.com

-

Reddit search for typewriter uses: https://www.reddit.com/r/typewriters/search/?q=typewriter+uses


-

-

www.reddit.com

-

reply to u/todddiskin at https://www.reddit.com/r/typewriters/comments/1nlodr0/how_do_you_use_your_machines/

Some various recent uses:

- I've got writing projects sitting in two different machines.

- I use one on my primary desk for typing up notes on index cards, recipes, my commonplace "book", letters, and other personal correspondence.

- I use a few of my portables on the porch in the mornings/evenings for journaling.

- One machine in the hallway is for impromptu ideas and poetry and an occasional bit of typewriter art.

- One machine near the kitchen is always gamed up for adding to the ever-growing shopping list.

- I'll often get one out for scoring baseball games.

- Participating in One Typed Page and One Typed Quote

- Typing up notes in Zoom calls - I've got a camera mount over a Royal KMG that has its own Zoom account so people can watch the notes typed in real time.

- Labels for folders, index card dividers, and sticky labels.

- Addressing envelopes.

- Writing out checks.

- Typecasting

- Hiding a flask or two of bourbon (the Fold-A-Matic Remingtons are great for this)

- Supplementing the nose of my bourbon and whisky collection.

At the end of the day though, unless you're Paul Sheldon, typewriters are unitaskers and are designed to do one thing well: put text on paper. All the rest are just variations on the theme. 😁🤪☠️

see also: https://www.reddit.com/r/typewriters/search/?q=typewriter+uses

-

- Aug 2025

-

writingslowly.com

-

Use case for the Zettelkasten | Writing Slowly
by [[Richard Griffiths]]
accessed on 2025-08-30T23:59:42

-

-

www.youtube.com

-

Ray Bradbury's Rules to Writing: Don't Think!
accessed on 2025-08-12T09:45:59

"...you must never think at the typewriter, you must feel." —Ray Bradbury

This also cleverly goes against the idea that "writing is thinking". Bradbury frames it as "writing is feeling" or "writing is being."

-

-

www.reddit.com

-

Use and maintenance

The use and maintenance details you might be looking for: https://boffosocko.com/2025/06/06/typewriter-use-and-maintenance-for-beginning-to-intermediate-typists/

Typewriter Manuals

In case you need a manual: https://site.xavier.edu/polt/typewriters/tw-manuals.html Your specific manual may be helpful for the tiny specifics like where the carriage lock is or how to properly thread your ribbon, but all the "good" stuff may be in much older manuals for other machines, especially in the 1920s by which time most typewriter technology and features were roughly standardized. Later manuals became less dense as it was assumed that newer users had friends/family/teachers to show them the "missing manual" portions for how to use them.

Typewriter repair

If you need to or decide to (for fun) go down the repair rabbit hole: https://boffosocko.com/2024/10/24/learning-typewriter-maintenance-and-repair/

from reply to u/FarInsect3003 at https://reddit.com/r/typewriters/comments/1mggp8g/olivetti_lettera_32_uppercase_smudge/

-

- Jul 2025

-

archive.org

-

www.reddit.com

-

in olden timey days, they taught more or less like this: hyphenate between syllables, in general, more than half the word should be on the first line. If the bell has gone off, then generally don’t start a long word. After the bell, there is room for 7, or 6 plus a hyphen. If your word is longer than that, save it for the next line. Use the margin release sparingly. For example, you might need a comma after the last word. ,

via u/LycO-145b2 at https://reddit.com/r/typewriters/comments/1m2vc8m/rules_for_endofline_hyphens/n3soefg/

-

Rules for end-of-line hyphens? by u/Heavyduty35

Back in the heyday of typewriters in the office, books like Dougherty's Instant Spelling Dictionary were kept on the desks of most typists and secretaries for looking up where to hyphenate words. Standard dictionaries also provide this functionality, but they tend to be 10x the length and size and take longer to search, so these faster spelling books were common tools in the office.

See: https://archive.org/details/texts?tab=collection&query=instant+spelling+dictionary

-

-

www.reddit.com

-

reply to u/MarkC64 at https://reddit.com/r/typewriters/comments/1lyq6o8/smith_premier_model_50_60_help_needed_plz/ on typewriter manuals

While it's nice to have the exact manual for your typewriter or even something close enough, there isn't a huge amount of variability in typewriter functionality by the time your machine was built, so pick almost any manual you like and you're probably good to go: https://site.xavier.edu/polt/typewriters/tw-manuals.html

Because of the disparity in general knowledge as typewriters became more ubiquitous in society, manuals from the 1930s are going to have lots more detail in them than the manuals from the 1960s.

If you need more help on general usage and functionality try some of the films at: https://boffosocko.com/2025/06/06/typewriter-use-and-maintenance-for-beginning-to-intermediate-typists/

-

-

www.reddit.com

-

it probably needs new feet too. I snagged a rubber urethane jeweler's pad off Amazon for $9 and it's enough for 4+ machines. Better than the $35 I saw online. The feet are just 1x1x1/2 in blocks. I cut them a hair big with a utility knife and many passes and sanded them to fit. Kinda messy.

-

-

www.reddit.com

-

That's an important question with several answers. Give it to someone as a gift. Give it to someone as a punishment. Store it in a safe place. Send it to a type pal. Give it to recycling. Rub yourself down with (mud? molasses? butter? beer? blood? snow?) and burn it in a bonfire. Throw it in the sea. Throw it in a volcano. Throw it in a hallway. Throw it in a drawer. Take a picture of it and submit it on one typed page. Type over it in another colour. Type over it in the same colour. Eat it. (The last should only be considered for very little amounts. Please use common sense.)

reply from u/andrebartels1977 to u/Electrical_Raise_345's question: "Hey what should I do with my type writing." at https://reddit.com/r/typewriters/comments/1lru709/hey_what_should_i_do_with_my_type_writing/

-

- Jun 2025

-

www.theguardian.com

-

it is bananas

Means 'it is insane/extremely silly'.

-

wealth as a shield

Metaphor. It implies using wealth to protect oneself or others from harm

-

-

www.reddit.com

-

https://www.reddit.com/r/typewriters/comments/1l8m84q/putting_the_imperial_back_into_service_again/

Use of a typewriter in inhospitable environments (cold, no cell service, remote)

-

-

academic.oup.com

-

Stefan Dercon

-

-

incowrimo.org

-

InCoWriMo is the short name for International Correspondence Writing Month, otherwise known as February. With an obvious nod to NaNoWriMo for the inspiration, InCoWriMo challenges you to hand-write and mail/deliver one letter, card, note or postcard every day during the month of February.

-

- May 2025

-

www.reddit.com

-

https://www.reddit.com/r/typewriters/comments/1kzw0fk/your_typewriter_collection/?sort=old

-

reply to u/Back2Analog at https://old.reddit.com/r/typewriters/comments/1kzw0fk/your_typewriter_collection/?sort=old

-

Total: I currently have 53 with 2 incoming and 1 outbound. About 12 are standards, 7 ultra-portables, and the remainder are portables. Maybe a dozen non-standard typefaces including 2 Vogues and a Clarion Gothic. You can find most of the specifics at https://typewriterdatabase.com/typewriters.php?hunter_search=7248 or on my site at https://boffosocko.com/research/typewriter-collection/#My%20Typewriter%20Collection

-

Display: I've usually got eight displayed in various places around the house including three on desks, but ready to actively type on. The remainder are in cases either behind our living room couch or a closet for easy access and rotation. I'm debating a large credenza or cabinet for additional display/storage space. There are two machines out in the garage, and one currently disassembled on our dining room table (my wife isn't a fan of this one right now).

-

About 25 have been cleaned and mostly restored, most are functional/usable, but need to be cleaned, repaired, or restored to some level. One is a parts machine. I always have a Royal KMG, a Royal FP, and two other standards out ready to go and rotate the others on a semi-weekly basis. There's usually at least one portable in my car for typing out in the wild.

-

Use cases: I spend a few hours a day writing on one or more machines and use them for nearly every conceivable case from quick notes (zettels), letters, essays, lists, snide remarks, poetry, etc., etc. I should spend more time typing for the typosphere. Because I enjoy restoring machines maybe even more than collecting them, I've recently started taking mechanic/restoration commissions.

-

At 50 machines, I'm about at the upper limit of my collecting space. I've given away a few to interested parties, and sold a small handful that I didn't use as frequently. I'm currently trying to balance incoming versus outgoing and might like to get my collection down to a tighter 35-40 machines in excellent condition.

-

Next typewriters: I'm currently looking for an Olympia SG1, a Royal Ten, a Hermes Ambassador, and a Hermes 3000. I'm also passively looking for either very large (6 or 8 CPI) or very small typefaces (>12CPI). I'm definitely spending less time actively hunting these days and more time restoring. I'm tending towards being far more selective in acquisitions compared to my earlier "acquisition campaign".

-

Miscellaneous: I enjoy writing about typewriter collecting and repair to help out others: https://boffosocko.com/research/typewriter-collection/

-

-

Writing letters, mostly to people from InCoWriMo or (more frequently) TypePals.com. I often will write short stories for my kids or journaling entries, though I have been writing more journal entries with fountain pens these days.

via u/brianlpowers at https://www.reddit.com/r/typewriters/comments/1kzw0fk/your_typewriter_collection/?sort=old

-

-

www.theguardian.com

-

On 14 May 2025, a French study with 15,000 participants showed that men emit 26% more greenhouse gases than women, mainly because of higher meat consumption and car use. After controlling for socioeconomic factors, the difference is 18%. Consumption of red meat and driving explain almost all of the remaining difference of 6.5-9.5%. Traditional gender norms that link masculinity with meat consumption and driving play a significant role. Women show more concern about the climate crisis, which could lead to more climate-friendly behaviour. [Summary generated with Mistral] https://www.theguardian.com/environment/2025/may/14/car-use-and-meat-consumption-drive-emissions-gender-gap-research-suggests

-

-

essd.copernicus.org

-

Total global GHG emissions were 55.1 ± 5.1 GtCO2e in 2023. Of this total, CO2-FFI contributed 37.8 ± 3.0 GtCO2, CO2-LULUCF contributed 3.6 ± 2.5 GtCO2, CH4 contributed 9.2 ± 2.7 GtCO2e, N2O contributed 2.9 ± 1.7 GtCO2e and F-gas emissions contributed 1.6 ± 0.5 GtCO2e.
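As a quick arithmetic check on the figures quoted above, the five component central estimates should add up to the reported total. A minimal Python sketch follows; the dictionary keys and variable names are illustrative rather than taken from the paper, and the uncertainty ranges are deliberately ignored.

```python
# Central estimates for 2023 as quoted in the excerpt (GtCO2e).
# Keys and variable names are illustrative assumptions, not the paper's notation.
components = {
    "CO2-FFI": 37.8,
    "CO2-LULUCF": 3.6,
    "CH4": 9.2,
    "N2O": 2.9,
    "F-gases": 1.6,
}
reported_total = 55.1

# Round to one decimal place to match the precision of the quoted figures.
total = round(sum(components.values()), 1)

# Share of the reported total contributed by each component, in percent.
shares = {
    gas: round(100 * value / reported_total, 1)
    for gas, value in components.items()
}

print(f"sum of components: {total} GtCO2e (reported: {reported_total})")
for gas, pct in shares.items():
    print(f"{gas}: {pct}% of the reported total")
```

The central estimates are consistent with the stated total; the quoted uncertainties would need separate treatment and are not combined here.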

-

-

ipfs.indy0.net

-

to what its purpose might have been. By looking at TPM and Gebser together, we can begin to get out of our linguistic ruts and use the ladder (ordinary language) to see beyond the ladder.

for - meme - use the ladder to see beyond the ladder

-

- Apr 2025

-

www.reddit.com

-

I’m so excited by the wide carriage, I use my typewriter to edition prints and drawings, and I’m so excited to be able to edition larger ones now.

https://www.reddit.com/r/typewriters/comments/1k6zfvl/help_with_id_i_cant_find_anything_online/

via u/Pinkbumblebee-666

-

-

-

Maine Republican Sen. Susan Collins, who previously said she "was shocked" by Gaetz's nomination, said by withdrawing, "he put country first," and noted, "certainly there were a lot of red flags."

Collins was frequently quoted by other sources as the main Republican voice in this space.

-

Asked about who might replace Gaetz as Trump's pick for the attorney general, Sen. Chuck Grassley, the incoming chair of the Senate Judiciary Committee, said he did not "have the slightest idea who they might be."

This article claims that even people on the Hill have no clue who could be the next nominee, yet other articles speculated more than 10 names.

-

Sen. Mike Rounds, R-S.D., said he would not second-guess Trump's decision to tap Gaetz, but that the president needed an attorney general that both he and the senate "can have confidence in."

Rounds was also quoted by other news outlets frequently.

-

"There is no time to waste on a needlessly protracted Washington scuffle, thus I'll be withdrawing my name from consideration to serve as Attorney General," he continued.

This quote appears again, as it has in every other article.

-

-

nypost.com

-

pulled publicly by the president-elect.

First article to suggest that Trump would have pulled his name himself if he did not resign. Other sources seemed to think that Gaetz would just not get confirmed.

-

-

www.foxnews.com

-

Those on the short list included former White House attorney Mark Paoletta, who served during Trump’s first term as counsel to then-Vice President Mike Pence and to the Office of Management and Budget; Missouri Attorney General Andrew Bailey, who was tapped in 2022 to be the state’s top prosecutor after then-state Attorney General Eric Schmitt was elected to the U.S. Senate.

This is more speculation based on some evidence. Interestingly, it is much different than the list of possible nominees from the BBC article.

-

-

campaignlegal.org

-

The individuals nominated and confirmed to executive branch positions hold some of the most powerful positions in government and make decisions that affect the daily lives of the entire American public as they implement and enforce the laws Congress passes.

This article continues to stress the power that Gaetz would have had if he was confirmed. This is a narrative of escaping or dodging possible terrible consequences.

-

After public outcry from elected officials and voters, both liberal and conservative, former Rep. Matt Gaetz withdrew his name from consideration to be nominated attorney general of the United States.

This paints a different narrative from other news sources. It claims that Gaetz withdrew due to public outcry rather than his own decision.

-

-

-

Will Matt Gaetz return to Congress?

Like the CNN article, this one has a section of opinion masked as prediction.

-

reportedly prompting a significant closed-door effort by him and Trump to secure the necessary support.

This closed-door effort to get votes is a secondary story that has been touched on by multiple outlets. This story says it was him and Trump while CNN said it was him and Vance.

-

"needlessly protracted Washington scuffle."

A quote that has shown up often. It tries to defuse the seriousness of the report.

-

after days of debate over whether to release a congressional report on sexual misconduct allegations against him.

This suggests binary of whether to release or not to release. If it was released he would look guilty and not get votes but if it was not then he would keep skeletons hidden. Obviously there is much more than this and we do not know the contents of the report.


-

-

thirty81press.com

-

stackoverflow.com

-

Your design should strongly depend on your purpose.

-

Ask yourself what is the main purpose of storing this data? Do you intend to actually send mail to the person at the address? Track demographics, populations? Be able to ask callers for their correct address as part of some basic authentication/verification? All of the above? None of the above? Depending on your actual need, you will determine either a) it doesn't really matter, and you can go for a free-text approach, or b) structured/specific fields for all countries, or c) country specific architecture.
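The three options named in the quote can be sketched side by side. This is a minimal Python illustration under stated assumptions, not a recommended schema: every class, field, and function name here is hypothetical, and the single US postal-code pattern stands in for whatever country-specific rules a real system would need.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FreeTextAddress:
    """Option (a): keep whatever was typed; enough when the address is only displayed."""
    text: str


@dataclass
class StructuredAddress:
    """Option (b): one generic set of fields shared by all countries (hypothetical layout)."""
    lines: List[str]
    locality: str
    postal_code: Optional[str]
    country_code: str  # assumed to be a two-letter country code

    def mailing_label(self) -> str:
        # Join locality and postal code, skipping a missing postal code.
        last = " ".join(p for p in (self.locality, self.postal_code) if p)
        return "\n".join([*self.lines, last, self.country_code])


# Option (c): country-specific rules layered on top of the structured form.
# Only one illustrative entry is given; countries without an entry are not checked.
POSTAL_CODE_PATTERNS = {"US": re.compile(r"\d{5}(-\d{4})?")}


def postal_code_ok(addr: StructuredAddress) -> bool:
    pattern = POSTAL_CODE_PATTERNS.get(addr.country_code)
    if pattern is None:
        return True  # no rule known for this country
    return bool(addr.postal_code) and pattern.fullmatch(addr.postal_code) is not None


example = StructuredAddress(
    lines=["1600 Pennsylvania Ave NW"],
    locality="Washington, DC",
    postal_code="20500",
    country_code="US",
)
print(example.mailing_label())
print(postal_code_ok(example))
```

If the data is only ever shown back to the user, the free-text form is the least work; the structured and country-specific forms earn their cost only when something downstream (mailing, verification, reporting) actually reads the fields.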

-

-

www.reddit.com

-

Mathematics with Typewriters

What you're suggesting is certainly doable, and was frequently done in its day, but it isn't the sort of thing you want to subject yourself to while you're doing your Ph.D. (and probably not even if you're doing it as your stress-relieving hobby on the side.)

I have several decades of heavy math and engineering experience and really love typewriters. I even have a couple with Greek letters and other basic math glyphs available, but I wouldn't ever bother with typing out any sort of mathematical paper using a typewriter these days.

Unless you're in a VERY specific area that doesn't require more than about 10 symbols, you're highly unlikely to be pleased with the result and it's going to require a huge amount of hand drawn symbols and be a pain to add in the graphs and illustrations. Even if you had a 60's+ Smith-Corona with a full set of math fonts using their Changeable Type functionality, you'd spend far more time trying to typeset your finished product than it would be worth.

You can still find some typewritten textbooks from the 30s and 40s in math and even some typed lecture notes collections into the 1980s and they are all a miserable experience to read. As an example, there's a downloadable copy of Claude Shannon's master's thesis at MIT from 1940, arguably one of the most influential and consequential master's theses ever written, that only uses basic Boolean Algebra and it's just dreadful to read this way: https://dspace.mit.edu/handle/1721.1/11173 (Incidentally, a reasonable high schooler should be able to read and appreciate this thesis today, which shows you just how far things have come since the 1940s.)

If you're heavily enough into math to be doing a Ph.D. you not only should be using TeX/LaTeX, but you'll be much, much, much happier with the output in the long run. It's also a professional skill any mathematician should have.

As a professional aside, while typewritten mathematical texts may seem like a fun and quirky thing to do, there probably isn't an awful lot of audience that would appreciate them. Worse, most professional mathematicians would automatically take a typescript version as the product of a quack and dismiss it out of hand.

tl;dr in terms of The Godfather: Buy the typewriter, leave the thesis in LaTeX.

a reply to u/Quaternion253 RE: Typing a maths PhD thesis using a typewriter at https://reddit.com/r/typewriters/comments/1js3cs5/typing_a_maths_phd_thesis_using_a_typewriter/

-

-

www.reddit.com

- Mar 2025

-

web.cvent.com

-

for - event - Skoll World Forum 2025 - program page - inspiration - new idea - Indyweb dev - curate desilo'd global commons of events - that are topic-mapped in mindplex - link to a global, desilo'd schedule - new idea - use annotation to select events to attend - new Indyweb affordance - hypothesis annotation for program event selection - event program selection - 2025 - April 1 - 4 - Skoll World Forum

new idea - use annotation to select events to attend - demonstrate first use of this affordance on the annotation of this online event program

summary - A good resource rich with many ideas relevant to bottom-up, rapid whole system change

-

-

chem.libretexts.org

-

Figure 4: Titration of a Weak Polyprotic Acid. Another 10 mL, or a total of 20 mL, of the titrant is added to the weak polyprotic acid to reach the second equivalence point. (CC BY; Heather Yee via LibreTexts)

This figure is misleading. The equivalence point is where the moles of titrant added equal the moles of acid originally present. The midpoint of each ionization (the half-equivalence point) is not an equivalence point; it is where the pKa is determined, by sliding left from the midpoint dot to the Y axis and reading the pH. That is, pKa = pH at the midpoint, which is found by taking half the titrant volume (on the X axis) required to reach the equivalence point for that ionization and moving up to the graphed line. Presumably the next diagram has the same error.

-

-

stackoverflow.com

-

When to use an init

-

- Feb 2025

-

www.youtube.com

-

These rehab facilities, these addiction treatment centers: 85% of them in the US are based on the disease model, and an almost overlapping 85% use 12-step methods as their primary intervention method. Well, you know, that's hard to actually figure out, because medicine is this, and 12 steps has very little to do with medicine; it's kind of based on a religious orientation.

> for - stats - addiction - rehab centers - 85% are based on disease model - and 85% use a religious oriented 12 step program

-

losing synaptic density; they're losing synapses, they're not [losing] brain cells

> for - addiction - graph - years of use vs loss of synaptic connections

-

-

superuser.com

-

but that simply just launches a headless browser and downloads the requested URL. This approach is useless in my case since I want to save the Reddit page with the modifications that I've personally and manually made (i.e., with the desired comment threads manually expanded).

-

-

elixir.bootlin.com

-

#define NCHUNKS_ORDER 6

Used for bit-shift operations: 2^6 = 64 (bytes or chunks, depending on your problem).
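A minimal sketch of the arithmetic in the note above, written in Python for brevity. Only `NCHUNKS_ORDER` comes from the quoted line; `PAGE_SHIFT = 12` (4 KiB pages), the derived `chunk_shift`, and the helper `bytes_to_chunks` are assumptions added for illustration and may not match how the kernel source actually uses the constant.

```python
NCHUNKS_ORDER = 6   # from the quoted #define
PAGE_SHIFT = 12     # assumption: 4 KiB pages

# Shifting 1 left by the order gives the count: 2**6 = 64.
nchunks = 1 << NCHUNKS_ORDER

# Hypothetical derivation: split one page into 2**NCHUNKS_ORDER equal chunks.
chunk_shift = PAGE_SHIFT - NCHUNKS_ORDER
chunk_size = 1 << chunk_shift     # bytes per chunk under the assumption above
page_size = 1 << PAGE_SHIFT


def bytes_to_chunks(size: int) -> int:
    """Round a byte count up to whole chunks using a shift instead of a division."""
    return (size + chunk_size - 1) >> chunk_shift


print(nchunks, chunk_size, bytes_to_chunks(65))
```

With a 4 KiB page the two readings in the note coincide: 64 chunks of 64 bytes each. With a different page size the chunk count stays at 64 while the chunk size changes.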

-

-

gcgh.grandchallenges.org

-

webuild.envato.com

-

A use case is a written description of how users will perform tasks on your website. It outlines, from a user’s point of view, a system’s behavior as it responds to a request. Each use case is represented as a sequence of simple steps, beginning with a user’s goal and ending when that goal is fulfilled.

-

Another problem is that now your business logic is obfuscated inside the ORM layer. If you look at the structure of the source code of a typical Rails application, all you see are these nice MVC buckets. They may reveal the domain models of the application, but you can’t see the Use Cases of the system, what it’s actually meant to do.

-

- Jan 2025

-

blog.mozilla.org

-

We believe the bill unduly dictates one particular technical approach, and does so without considering the privacy, security, and equity risks it poses.

unduly dictates one particular technical approach

-

-

clpccd.instructure.com

-

These can be helpful for you, but there are also serious concerns.

- AI can change the authenticity of your writing, turning it into a “voice” that is not your own. For example, Grammarly often changes my word choices so they don’t sound like something I’d actually say. That goes beyond just checking grammar.

- It can definitely lead to plagiarism, basically creating something that is not from you.

- The information is often incorrect or made up, for example citing resources that don’t actually exist.

This resonates with me, so I think after I use grammar correction, I still need to go back and check my writing to express my ideas in a way that suits my style and tone.

-

-

-

What's missing, and that's what I try to work on is, because at the same time we have this exponential growth of millions of people doing regenerative local work, but they're underfunded, they're undercapitalized. Usually, it's like two people getting half a wage from an NGO, and they work 16 hours a day. After five years, they totally burn out. How can we fund that? I think that Web3 can be the vehicle for capital to be invested in regeneration.

for - work to find way to use web 3 / crypto to fund currently underfunded regenerative work done by millions of people - the missing link - SOURCE - Youtube Ma Earth channel interview - Devcon 2024 - Cosmo Local Commoning with Web 3 - Michel Bauwens - 2025, Jan 2


-

-

www.yanisvaroufakis.eu

-

Aneurin Bevan

for - further research - Aneurin Bevan - 1952 - liberal democracy's greatest paradox - How does wealth manage to persuade poverty to use its political freedom to keep wealth in power? - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4 - inequality - elites - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4

-

How does wealth manage to persuade poverty to use its political freedom to keep wealth in power?

for - key insight - inequality - elites - How does wealth manage to persuade poverty to use its political freedom to keep wealth in power? - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4

Tags

- inequality - elites - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4

- further research - Aneurin Bevan - 1952 - liberal democracy's greatest paradox - How does wealth manage to persuade poverty to use its political freedom to keep wealth in power? - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4

- key insight - inequality - elites - How does wealth manage to persuade poverty to use its political freedom to keep wealth in power? - source - article - Le Monde - Musk, Trump and the Broligarch's novel hyper-weapon - Yanis Varoufakis - 2025, Jan 4


-

-

www.theguardian.com

-

In an editorial, the Guardian criticises the abandonment of core elements of the Green Deal in European agricultural policy, including the failure to adopt the Nature Restoration Law. It points to a survey showing that a majority in Europe supports a more consistent climate policy.

Tags

- 2024-04-08

- event: renouncement to halve the use of pesticides

- process: lowering climate ambition

- project: European Green Deal

- actor: agribusiness

- actor: European Commission

- law: nature restoration law

- event: farmers' protests

- event: renouncement to reduce agricultural emissions

- country: Europe

- event: scrapping of changes to the agricultural policy

- mode: comment

- topic: surveys


-

-

www.sfu.ca

-

for - Dr. Julian Somers - SFU Health Sciences - substance use & mental health, primary health care reform, homelessness, clinical psychology - home page - from - Youtube - Tyler Oliveira Vancouver - Disneyland for Drug Addicts - 2024, Dec - https://hyp.is/6va49soNEe-OwyvA2lPThw/www.youtube.com/watch?v=ggfZwBdaiYk

-

- Dec 2024

-

www.youtube.com

-

there were a group of scientists that were trying to understand how the brain processes language, and they found something very interesting. They found that when you learn a language as a child, as a two-year-old, you learn it with a certain part of your brain, and when you learn a language as an adult -- for example, if I wanted to learn Japanese

for - research study - language - children learning mother tongue use a different part of the brain than adults learning another language - from TED Talk - YouTube - A word game to convey any language - Ajit Narayanan

-

-

4thgenerationcivilization.substack.com

-

The current global system of production and trade is reported to use three times more of its resource use for transport, not for making. This creates a profound ‘ecological’, i.e. biophysical and thermodynamic, rationale for relocalizing production

for - stats - motivation for cosmolocal - high inefficiency of resource and energy use - 3x as much for transport as for production - from Substack article - The Cosmo-Local Plan for our Next Civilization - Michel Bauwens - 2024, Dec 20


-

-

cloud.pocketbook.digital

-

The result: in just six decades, almost a third of the global land area was altered in some way. According to the researchers in Karlsruhe, that figure was about four times higher than long-term analyses had previously assumed.

-

Between 1963 and 2005, the global area under cultivation for food increased by about 270 million hectares. That corresponds to about eight times the area of Germany.

-

- Nov 2024

-

jgvw2024.peergos.me

-

Fig. 3

for - paper - Translating Earth system boundaries for cities and businesses - Fig. 3 - Ten principles of translation - Bai et al. 2024 - from - paper - Cross-scale translation of Earth system boundaries should use methods that are more science-based - citation of Fig.3 - Xue & Bakshi

from - paper - citation - Cross-scale translation of Earth system boundaries should use methods that are more science-based - citation of Fig.3 - Xue & Bakshi - https://hyp.is/xf3MxqveEe-pGZeWkHHcLA/jgvw2024.peergos.me/StopResetGo/2024/11/PDFs/MattersArisingBaietal.pdf

Tags

- paper - Translating Earth system boundaries for cities and businesses - Fig. 3 - Ten principles of translation - Bai et al. 2024

- from - paper - citation - Cross-scale translation of Earth system boundaries should use methods that are more science-based - citation of Fig.3 - Xue & Bakshi

Annotators

URL

-

-

blogs.baruch.cuny.edu blogs.baruch.cuny.edu

-

To generate text that I've edited to include in my own writing

I see this as collaborative writing with AI; no longer just the student's work.

-

Grammarly

I personally use Grammarly and see it differently from platforms such as ChatGPT. I wonder what other folks think of this. I see one as a tool to clean up writing and the other as a tool to generate content and ideas.

-

-

www.youtube.com www.youtube.com

-

something like Gangnam Style has been viewed to the tune of like almost or over two billion times and when you just when you count just the data coming out of Google servers let alone all the links we're dealing with something close to 500 petabytes of data that's a lot for a video right I mean this is clearly an issue there's no reason we should be moving around all of this data constantly through the network

for - internet limitations - inefficient bandwidth use - example - music video - Gangnam Style

-

-

www.gida-global.org www.gida-global.org

-

TRSP Desirable Characteristics Data governance should take into account the potential future use and future harm based on ethical frameworks grounded in the values and principles of the relevant Indigenous community. Metadata should acknowledge the provenance and purpose and any limitations or obligations in secondary use inclusive of issues of consent.

-

-

www.nature.com www.nature.com

-

Terms of use, both for the repository and for the data holdings.

TRSP Desirable Characteristics


-

-

-

TRSP Desirable Characteristics

The terms of reuse of datasets that are provided by a repository


-

-

elixir.bootlin.com elixir.bootlin.com

-

#ifdef CONFIG_ARCH_HAS_HUGEPD static unsigned long hugepte_addr_end(unsigned long addr, unsigned long end, unsigned long sz) { unsigned long __boundary = (addr + sz) & ~(sz-1); return (__boundary - 1 < end - 1) ? __boundary : end; } static int gup_hugepte(pte_t *ptep, unsigned long sz, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { unsigned long pte_end; struct page *page; struct folio *folio; pte_t pte; int refs; pte_end = (addr + sz) & ~(sz-1); if (pte_end < end) end = pte_end; pte = huge_ptep_get(ptep); if (!pte_access_permitted(pte, flags & FOLL_WRITE)) return 0; /* hugepages are never "special" */ VM_BUG_ON(!pfn_valid(pte_pfn(pte))); page = nth_page(pte_page(pte), (addr & (sz - 1)) >> PAGE_SHIFT); refs = record_subpages(page, addr, end, pages + *nr); folio = try_grab_folio(page, refs, flags); if (!folio) return 0; if (unlikely(pte_val(pte) != pte_val(ptep_get(ptep)))) { gup_put_folio(folio, refs, flags); return 0; } if (!folio_fast_pin_allowed(folio, flags)) { gup_put_folio(folio, refs, flags); return 0; } if (!pte_write(pte) && gup_must_unshare(NULL, flags, &folio->page)) { gup_put_folio(folio, refs, flags); return 0; } *nr += refs; folio_set_referenced(folio); return 1; } static int gup_huge_pd(hugepd_t hugepd, unsigned long addr, unsigned int pdshift, unsigned long end, unsigned int flags, struct page **pages, int *nr) { pte_t *ptep; unsigned long sz = 1UL << hugepd_shift(hugepd); unsigned long next; ptep = hugepte_offset(hugepd, addr, pdshift); do { next = hugepte_addr_end(addr, end, sz); if (!gup_hugepte(ptep, sz, addr, end, flags, pages, nr)) return 0; } while (ptep++, addr = next, addr != end); return 1; } #else static inline int gup_huge_pd(hugepd_t hugepd, unsigned long addr, unsigned int pdshift, unsigned long end, unsigned int flags, struct page **pages, int *nr) { return 0; } #endif /* CONFIG_ARCH_HAS_HUGEPD */ static int gup_huge_pmd(pmd_t orig, pmd_t *pmdp, unsigned long addr, unsigned long end, 
unsigned int flags, struct page **pages, int *nr) { struct page *page; struct folio *folio; int refs; if (!pmd_access_permitted(orig, flags & FOLL_WRITE)) return 0; if (pmd_devmap(orig)) { if (unlikely(flags & FOLL_LONGTERM)) return 0; return __gup_device_huge_pmd(orig, pmdp, addr, end, flags, pages, nr); } page = nth_page(pmd_page(orig), (addr & ~PMD_MASK) >> PAGE_SHIFT); refs = record_subpages(page, addr, end, pages + *nr); folio = try_grab_folio(page, refs, flags); if (!folio) return 0; if (unlikely(pmd_val(orig) != pmd_val(*pmdp))) { gup_put_folio(folio, refs, flags); return 0; } if (!folio_fast_pin_allowed(folio, flags)) { gup_put_folio(folio, refs, flags); return 0; } if (!pmd_write(orig) && gup_must_unshare(NULL, flags, &folio->page)) { gup_put_folio(folio, refs, flags); return 0; } *nr += refs; folio_set_referenced(folio); return 1; } static int gup_huge_pud(pud_t orig, pud_t *pudp, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { struct page *page; struct folio *folio; int refs; if (!pud_access_permitted(orig, flags & FOLL_WRITE)) return 0; if (pud_devmap(orig)) { if (unlikely(flags & FOLL_LONGTERM)) return 0; return __gup_device_huge_pud(orig, pudp, addr, end, flags, pages, nr); } page = nth_page(pud_page(orig), (addr & ~PUD_MASK) >> PAGE_SHIFT); refs = record_subpages(page, addr, end, pages + *nr); folio = try_grab_folio(page, refs, flags); if (!folio) return 0; if (unlikely(pud_val(orig) != pud_val(*pudp))) { gup_put_folio(folio, refs, flags); return 0; } if (!folio_fast_pin_allowed(folio, flags)) { gup_put_folio(folio, refs, flags); return 0; } if (!pud_write(orig) && gup_must_unshare(NULL, flags, &folio->page)) { gup_put_folio(folio, refs, flags); return 0; } *nr += refs; folio_set_referenced(folio); return 1; } static int gup_huge_pgd(pgd_t orig, pgd_t *pgdp, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { int refs; struct page *page; struct folio *folio; if 
(!pgd_access_permitted(orig, flags & FOLL_WRITE)) return 0; BUILD_BUG_ON(pgd_devmap(orig)); page = nth_page(pgd_page(orig), (addr & ~PGDIR_MASK) >> PAGE_SHIFT); refs = record_subpages(page, addr, end, pages + *nr); folio = try_grab_folio(page, refs, flags); if (!folio) return 0; if (unlikely(pgd_val(orig) != pgd_val(*pgdp))) { gup_put_folio(folio, refs, flags); return 0; } if (!pgd_write(orig) && gup_must_unshare(NULL, flags, &folio->page)) { gup_put_folio(folio, refs, flags); return 0; } if (!folio_fast_pin_allowed(folio, flags)) { gup_put_folio(folio, refs, flags); return 0; } *nr += refs; folio_set_referenced(folio); return 1; } static int gup_pmd_range(pud_t *pudp, pud_t pud, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { unsigned long next; pmd_t *pmdp; pmdp = pmd_offset_lockless(pudp, pud, addr); do { pmd_t pmd = pmdp_get_lockless(pmdp); next = pmd_addr_end(addr, end); if (!pmd_present(pmd)) return 0; if (unlikely(pmd_trans_huge(pmd) || pmd_huge(pmd) || pmd_devmap(pmd))) { /* See gup_pte_range() */ if (pmd_protnone(pmd)) return 0; if (!gup_huge_pmd(pmd, pmdp, addr, next, flags, pages, nr)) return 0; } else if (unlikely(is_hugepd(__hugepd(pmd_val(pmd))))) { /* * architecture have different format for hugetlbfs * pmd format and THP pmd format */ if (!gup_huge_pd(__hugepd(pmd_val(pmd)), addr, PMD_SHIFT, next, flags, pages, nr)) return 0; } else if (!gup_pte_range(pmd, pmdp, addr, next, flags, pages, nr)) return 0; } while (pmdp++, addr = next, addr != end); return 1; } static int gup_pud_range(p4d_t *p4dp, p4d_t p4d, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { unsigned long next; pud_t *pudp; pudp = pud_offset_lockless(p4dp, p4d, addr); do { pud_t pud = READ_ONCE(*pudp); next = pud_addr_end(addr, end); if (unlikely(!pud_present(pud))) return 0; if (unlikely(pud_huge(pud) || pud_devmap(pud))) { if (!gup_huge_pud(pud, pudp, addr, next, flags, pages, nr)) return 0; } else if 
(unlikely(is_hugepd(__hugepd(pud_val(pud))))) { if (!gup_huge_pd(__hugepd(pud_val(pud)), addr, PUD_SHIFT, next, flags, pages, nr)) return 0; } else if (!gup_pmd_range(pudp, pud, addr, next, flags, pages, nr)) return 0; } while (pudp++, addr = next, addr != end); return 1; } static int gup_p4d_range(pgd_t *pgdp, pgd_t pgd, unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { unsigned long next; p4d_t *p4dp; p4dp = p4d_offset_lockless(pgdp, pgd, addr); do { p4d_t p4d = READ_ONCE(*p4dp); next = p4d_addr_end(addr, end); if (p4d_none(p4d)) return 0; BUILD_BUG_ON(p4d_huge(p4d)); if (unlikely(is_hugepd(__hugepd(p4d_val(p4d))))) { if (!gup_huge_pd(__hugepd(p4d_val(p4d)), addr, P4D_SHIFT, next, flags, pages, nr)) return 0; } else if (!gup_pud_range(p4dp, p4d, addr, next, flags, pages, nr)) return 0; } while (p4dp++, addr = next, addr != end); return 1; } static void gup_pgd_range(unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { unsigned long next; pgd_t *pgdp; pgdp = pgd_offset(current->mm, addr); do { pgd_t pgd = READ_ONCE(*pgdp); next = pgd_addr_end(addr, end); if (pgd_none(pgd)) return; if (unlikely(pgd_huge(pgd))) { if (!gup_huge_pgd(pgd, pgdp, addr, next, flags, pages, nr)) return; } else if (unlikely(is_hugepd(__hugepd(pgd_val(pgd))))) { if (!gup_huge_pd(__hugepd(pgd_val(pgd)), addr, PGDIR_SHIFT, next, flags, pages, nr)) return; } else if (!gup_p4d_range(pgdp, pgd, addr, next, flags, pages, nr)) return; } while (pgdp++, addr = next, addr != end); } #else static inline void gup_pgd_range(unsigned long addr, unsigned long end, unsigned int flags, struct page **pages, int *nr) { }

Policy-use functions for the gup_hugepte policy code above (not directly above; you will probably have to scroll up to find it).
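The boundary arithmetic in `hugepte_addr_end()` from the excerpt above can be tried in user space. This is a minimal sketch that assumes `sz` is a power of two; `addr_end` is a hypothetical name for a standalone copy, not a kernel symbol.

```c
/*
 * Standalone copy of the computation in hugepte_addr_end().
 * Assumes sz is a power of two. Returns the next sz-aligned boundary
 * above addr, clamped to end. Comparing (x - 1) values rather than x
 * keeps the result correct when the boundary wraps around to 0.
 */
static unsigned long addr_end(unsigned long addr, unsigned long end,
                              unsigned long sz)
{
    unsigned long boundary = (addr + sz) & ~(sz - 1);

    return (boundary - 1 < end - 1) ? boundary : end;
}
```

With `sz = 0x2000`, an address of `0x1000` advances to `0x2000` when the range extends past it, and is clamped to `end` when the range stops earlier.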

-

static int internal_get_user_pages_fast(unsigned long start, unsigned long nr_pages, unsigned int gup_flags, struct page **pages) { unsigned long len, end; unsigned long nr_pinned; int locked = 0; int ret; if (WARN_ON_ONCE(gup_flags & ~(FOLL_WRITE | FOLL_LONGTERM | FOLL_FORCE | FOLL_PIN | FOLL_GET | FOLL_FAST_ONLY | FOLL_NOFAULT | FOLL_PCI_P2PDMA | FOLL_HONOR_NUMA_FAULT))) return -EINVAL; if (gup_flags & FOLL_PIN) mm_set_has_pinned_flag(&current->mm->flags); if (!(gup_flags & FOLL_FAST_ONLY)) might_lock_read(&current->mm->mmap_lock); start = untagged_addr(start) & PAGE_MASK; len = nr_pages << PAGE_SHIFT; if (check_add_overflow(start, len, &end)) return -EOVERFLOW; if (end > TASK_SIZE_MAX) return -EFAULT; if (unlikely(!access_ok((void __user *)start, len))) return -EFAULT; nr_pinned = lockless_pages_from_mm(start, end, gup_flags, pages); if (nr_pinned == nr_pages || gup_flags & FOLL_FAST_ONLY) return nr_pinned; /* Slow path: try to get the remaining pages with get_user_pages */ start += nr_pinned << PAGE_SHIFT; pages += nr_pinned; ret = __gup_longterm_locked(current->mm, start, nr_pages - nr_pinned, pages, &locked, gup_flags | FOLL_TOUCH | FOLL_UNLOCKABLE); if (ret < 0) { /* * The caller has to unpin the pages we already pinned so * returning -errno is not an option */ if (nr_pinned) return nr_pinned; return ret; } return ret + nr_pinned; } /** * get_user_pages_fast_only() - pin user pages in memory * @start: starting user address * @nr_pages: number of pages from start to pin * @gup_flags: flags modifying pin behaviour * @pages: array that receives pointers to the pages pinned. * Should be at least nr_pages long. * * Like get_user_pages_fast() except it's IRQ-safe in that it won't fall back to * the regular GUP. * * If the architecture does not support this function, simply return with no * pages pinned. * * Careful, careful! COW breaking can go either way, so a non-write * access can get ambiguous page results.
If you call this function without * 'write' set, you'd better be sure that you're ok with that ambiguity. */ int get_user_pages_fast_only(unsigned long start, int nr_pages, unsigned int gup_flags, struct page **pages) { /* * Internally (within mm/gup.c), gup fast variants must set FOLL_GET, * because gup fast is always a "pin with a +1 page refcount" request. * * FOLL_FAST_ONLY is required in order to match the API description of * this routine: no fall back to regular ("slow") GUP. */ if (!is_valid_gup_args(pages, NULL, &gup_flags, FOLL_GET | FOLL_FAST_ONLY)) return -EINVAL; return internal_get_user_pages_fast(start, nr_pages, gup_flags, pages); } EXPORT_SYMBOL_GPL(get_user_pages_fast_only); /** * get_user_pages_fast() - pin user pages in memory * @start: starting user address * @nr_pages: number of pages from start to pin * @gup_flags: flags modifying pin behaviour * @pages: array that receives pointers to the pages pinned. * Should be at least nr_pages long. * * Attempt to pin user pages in memory without taking mm->mmap_lock. * If not successful, it will fall back to taking the lock and * calling get_user_pages(). * * Returns number of pages pinned. This may be fewer than the number requested. * If nr_pages is 0 or negative, returns 0. If no pages were pinned, returns * -errno. */ int get_user_pages_fast(unsigned long start, int nr_pages, unsigned int gup_flags, struct page **pages) { /* * The caller may or may not have explicitly set FOLL_GET; either way is * OK. However, internally (within mm/gup.c), gup fast variants must set * FOLL_GET, because gup fast is always a "pin with a +1 page refcount" * request. 
*/ if (!is_valid_gup_args(pages, NULL, &gup_flags, FOLL_GET)) return -EINVAL; return internal_get_user_pages_fast(start, nr_pages, gup_flags, pages); } EXPORT_SYMBOL_GPL(get_user_pages_fast); /** * pin_user_pages_fast() - pin user pages in memory without taking locks * * @start: starting user address * @nr_pages: number of pages from start to pin * @gup_flags: flags modifying pin behaviour * @pages: array that receives pointers to the pages pinned. * Should be at least nr_pages long. * * Nearly the same as get_user_pages_fast(), except that FOLL_PIN is set. See * get_user_pages_fast() for documentation on the function arguments, because * the arguments here are identical. * * FOLL_PIN means that the pages must be released via unpin_user_page(). Please * see Documentation/core-api/pin_user_pages.rst for further details. * * Note that if a zero_page is amongst the returned pages, it will not have * pins in it and unpin_user_page() will not remove pins from it. */ int pin_user_pages_fast(unsigned long start, int nr_pages, unsigned int gup_flags, struct page **pages) { if (!is_valid_gup_args(pages, NULL, &gup_flags, FOLL_PIN)) return -EINVAL; return internal_get_user_pages_fast(start, nr_pages, gup_flags, pages); } EXPORT_SYMBOL_GPL(pin_user_pages_fast); /** * pin_user_pages_remote() - pin pages of a remote process * * @mm: mm_struct of target mm * @start: starting user address * @nr_pages: number of pages from start to pin * @gup_flags: flags modifying lookup behaviour * @pages: array that receives pointers to the pages pinned. * Should be at least nr_pages long. * @locked: pointer to lock flag indicating whether lock is held and * subsequently whether VM_FAULT_RETRY functionality can be * utilised. Lock must initially be held. * * Nearly the same as get_user_pages_remote(), except that FOLL_PIN is set. See * get_user_pages_remote() for documentation on the function arguments, because * the arguments here are identical. 
* * FOLL_PIN means that the pages must be released via unpin_user_page(). Please * see Documentation/core-api/pin_user_pages.rst for details. * * Note that if a zero_page is amongst the returned pages, it will not have * pins in it and unpin_user_page*() will not remove pins from it. */ long pin_user_pages_remote(struct mm_struct *mm, unsigned long start, unsigned long nr_pages, unsigned int gup_flags, struct page **pages, int *locked) { int local_locked = 1; if (!is_valid_gup_args(pages, locked, &gup_flags, FOLL_PIN | FOLL_TOUCH | FOLL_REMOTE)) return 0; return __gup_longterm_locked(mm, start, nr_pages, pages, locked ? locked : &local_locked, gup_flags); } EXPORT_SYMBOL(pin_user_pages_remote); /** * pin_user_pages() - pin user pages in memory for use by other devices * * @start: starting user address * @nr_pages: number of pages from start to pin * @gup_flags: flags modifying lookup behaviour * @pages: array that receives pointers to the pages pinned. * Should be at least nr_pages long. * * Nearly the same as get_user_pages(), except that FOLL_TOUCH is not set, and * FOLL_PIN is set. * * FOLL_PIN means that the pages must be released via unpin_user_page(). Please * see Documentation/core-api/pin_user_pages.rst for details. * * Note that if a zero_page is amongst the returned pages, it will not have * pins in it and unpin_user_page*() will not remove pins from it. */ long pin_user_pages(unsigned long start, unsigned long nr_pages, unsigned int gup_flags, struct page **pages) { int locked = 1; if (!is_valid_gup_args(pages, NULL, &gup_flags, FOLL_PIN)) return 0; return __gup_longterm_locked(current->mm, start, nr_pages, pages, &locked, gup_flags); } EXPORT_SYMBOL(pin_user_pages); /* * pin_user_pages_unlocked() is the FOLL_PIN variant of * get_user_pages_unlocked(). Behavior is the same, except that this one sets * FOLL_PIN and rejects FOLL_GET. 
* * Note that if a zero_page is amongst the returned pages, it will not have * pins in it and unpin_user_page*() will not remove pins from it. */ long pin_user_pages_unlocked(unsigned long start, unsigned long nr_pages, struct page **pages, unsigned int gup_flags) { int locked = 0; if (!is_valid_gup_args(pages, NULL, &gup_flags, FOLL_PIN | FOLL_TOUCH | FOLL_UNLOCKABLE)) return 0; return __gup_longterm_locked(current->mm, start, nr_pages, pages, &locked, gup_flags); }

fast gup functions

-

/** * unpin_user_pages() - release an array of gup-pinned pages. * @pages: array of pages to be marked dirty and released. * @npages: number of pages in the @pages array. * * For each page in the @pages array, release the page using unpin_user_page(). * * Please see the unpin_user_page() documentation for details. */ void unpin_user_pages(struct page **pages, unsigned long npages) { unsigned long i; struct folio *folio; unsigned int nr; /* * If this WARN_ON() fires, then the system *might* be leaking pages (by * leaving them pinned), but probably not. More likely, gup/pup returned * a hard -ERRNO error to the caller, who erroneously passed it here. */ if (WARN_ON(IS_ERR_VALUE(npages))) return; sanity_check_pinned_pages(pages, npages); for (i = 0; i < npages; i += nr) { folio = gup_folio_next(pages, npages, i, &nr); gup_put_folio(folio, nr, FOLL_PIN); } }

gup unpin function, not actual logic

-

void unpin_user_page_range_dirty_lock(struct page *page, unsigned long npages, bool make_dirty) { unsigned long i; struct folio *folio; unsigned int nr; for (i = 0; i < npages; i += nr) { folio = gup_folio_range_next(page, npages, i, &nr); if (make_dirty && !folio_test_dirty(folio)) { folio_lock(folio); folio_mark_dirty(folio); folio_unlock(folio); } gup_put_folio(folio, nr, FOLL_PIN); } }

unpin logic but for dirty pages

-

static void __maybe_unused undo_dev_pagemap(int *nr, int nr_start, unsigned int flags, struct page **pages) { while ((*nr) - nr_start) { struct page *page = pages[--(*nr)]; ClearPageReferenced(page); if (flags & FOLL_PIN) unpin_user_page(page); else put_page(page); } }

policy use function that undoes mapping

-

#ifdef CONFIG_STACK_GROWSUP return vma_lookup(mm, addr); #else static volatile unsigned long next_warn; struct vm_area_struct *vma; unsigned long now, next; vma = find_vma(mm, addr); if (!vma || (addr >= vma->vm_start)) return vma; /* Only warn for half-way relevant accesses */ if (!(vma->vm_flags & VM_GROWSDOWN)) return NULL; if (vma->vm_start - addr > 65536) return NULL; /* Let's not warn more than once an hour.. */ now = jiffies; next = next_warn; if (next && time_before(now, next)) return NULL; next_warn = now + 60*60*HZ; /* Let people know things may have changed. */ pr_warn("GUP no longer grows the stack in %s (%d): %lx-%lx (%lx)\n", current->comm, task_pid_nr(current), vma->vm_start, vma->vm_end, addr); dump_stack(); return NULL;

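Helper function that looks up the VMA (virtual memory area) for an address. At most once per hour it warns about "half-way relevant" accesses, i.e. addresses no more than 64 KiB below a VM_GROWSDOWN VMA, to point out that GUP no longer grows the stack.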

-

void unpin_user_pages_dirty_lock(struct page **pages, unsigned long npages, bool make_dirty) { unsigned long i; struct folio *folio; unsigned int nr; if (!make_dirty) { unpin_user_pages(pages, npages); return; } sanity_check_pinned_pages(pages, npages); for (i = 0; i < npages; i += nr) { folio = gup_folio_next(pages, npages, i, &nr); /* * Checking PageDirty at this point may race with * clear_page_dirty_for_io(), but that's OK. Two key * cases: * * 1) This code sees the page as already dirty, so it * skips the call to set_page_dirty(). That could happen * because clear_page_dirty_for_io() called * page_mkclean(), followed by set_page_dirty(). * However, now the page is going to get written back, * which meets the original intention of setting it * dirty, so all is well: clear_page_dirty_for_io() goes * on to call TestClearPageDirty(), and write the page * back. * * 2) This code sees the page as clean, so it calls * set_page_dirty(). The page stays dirty, despite being * written back, so it gets written back again in the * next writeback cycle. This is harmless. */ if (!folio_test_dirty(folio)) { folio_lock(folio); folio_mark_dirty(folio); folio_unlock(folio); } gup_put_folio(folio, nr, FOLL_PIN); } }

unpins and dirties page

-

static inline struct folio *gup_folio_next(struct page **list, unsigned long npages, unsigned long i, unsigned int *ntails) { struct folio *folio = page_folio(list[i]); unsigned int nr; for (nr = i + 1; nr < npages; nr++) { if (page_folio(list[nr]) != folio) break; } *ntails = nr - i; return folio; }

Returns the folio of the page at index i and, through ntails, the number of consecutive list entries starting at i that belong to that same folio.
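The run-length grouping in `gup_folio_next()` can be sketched without kernel types. In this hypothetical user-space version each array entry is simply the ID of the folio that owns the page; `run_next` and `folio_of` are illustrative names, not kernel symbols.

```c
#include <stddef.h>

/*
 * folio_of[k] is the (made-up) ID of the folio owning page k.
 * Returns the folio ID at index i and stores in *ntails how many
 * consecutive entries starting at i share that folio.
 */
static int run_next(const int *folio_of, size_t npages, size_t i,
                    size_t *ntails)
{
    size_t nr;

    for (nr = i + 1; nr < npages; nr++) {
        if (folio_of[nr] != folio_of[i])
            break;
    }
    *ntails = nr - i;
    return folio_of[i];
}
```

A caller advances by `*ntails` each time, which is how the unpin loops above release a whole folio's worth of pages with a single `gup_put_folio()` call.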

-

static inline struct folio *gup_folio_range_next(struct page *start, unsigned long npages, unsigned long i, unsigned int *ntails) { struct page *next = nth_page(start, i); struct folio *folio = page_folio(next); unsigned int nr = 1; if (folio_test_large(folio)) nr = min_t(unsigned int, npages - i, folio_nr_pages(folio) - folio_page_idx(folio, next)); *ntails = nr; return folio; }

Returns the folio containing the i-th page after start and, through ntails, how many pages of the remaining range fall inside that folio (1 for a small folio).

-

void unpin_user_page(struct page *page) { sanity_check_pinned_pages(&page, 1); gup_put_folio(page_folio(page), 1, FOLL_PIN); } EXPORT_SYMBOL(unpin_user_page);

actual policy use logic

-

if (!put_devmap_managed_page_refs(&folio->page, refs)) folio_put_refs(folio, refs);

Definitely a vital and straightforward policy-use section of gup that simply drops the references held on the folio.

-

if (flags & FOLL_PIN) { if (is_zero_folio(folio)) return; node_stat_mod_folio(folio, NR_FOLL_PIN_RELEASED, refs); if (folio_test_large(folio)) atomic_sub(refs, &folio->_pincount); else refs *= GUP_PIN_COUNTING_BIAS; }

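Under FOLL_PIN: returns early for the zero folio; for a large folio it subtracts refs from _pincount, otherwise it scales refs by GUP_PIN_COUNTING_BIAS.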

-

if (folio_test_large(folio)) atomic_add(refs, &folio->_pincount); else folio_ref_add(folio, refs * (GUP_PIN_COUNTING_BIAS - 1))

maintaining reference counts. Part of policy logic most likely
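The two fragments above (release and grab) suggest a biased reference count: small folios record a pin as a large increment of the ordinary refcount, while large folios keep a separate pin counter. A toy model, assuming the bias is 1024 and that the caller has already taken `refs` ordinary references (which is what the `- 1` in the grab fragment implies); all `toy_` names are hypothetical.

```c
#define TOY_PIN_BIAS 1024L /* assumption standing in for GUP_PIN_COUNTING_BIAS */

struct toy_folio {
    int large;      /* nonzero for a multi-page folio */
    long refcount;  /* ordinary reference count */
    long pincount;  /* separate pin counter, used only when large */
};

static void toy_pin(struct toy_folio *f, long refs)
{
    f->refcount += refs; /* assumed: plain references are taken first */
    if (f->large)
        f->pincount += refs;
    else
        f->refcount += refs * (TOY_PIN_BIAS - 1);
}

static void toy_unpin(struct toy_folio *f, long refs)
{
    if (f->large)
        f->pincount -= refs;
    else
        refs *= TOY_PIN_BIAS; /* undo the biased increment */
    f->refcount -= refs;
}

/* Heuristic check: a small folio looks pinned once refcount reaches the bias. */
static int toy_maybe_pinned(const struct toy_folio *f)
{
    return f->large ? f->pincount > 0 : f->refcount >= TOY_PIN_BIAS;
}
```

The trade-off in this model is that a small folio with very many ordinary references could look pinned, so the check is a "maybe", not a guarantee.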

-

-

academic.oup.com academic.oup.com

-

although less-than-fluent exposure to sign language is still beneficial for DHH learners (Caselli et al., 2021)

Quote - for the use of ASL, even if it is not at a high level.

-

- Oct 2024

-

www.carbonbrief.org www.carbonbrief.org

-

For the first time, researchers have recorded precisely which share of the areas affected by wildfires can be attributed to human-caused heating. That share has been growing markedly for 20 years. Overall, the wildfires attributable to heating offset the decline in fires due to deforestation. The human-caused share of CO2 emissions, which is relevant for calculating damage claims, is therefore considerably higher than previously assumed. https://www.carbonbrief.org/climate-change-almost-wipes-out-decline-in-global-area-burned-by-wildfires/

Tags

- World Weather Attribution

- CO2 emissions from wildfires

- global

- attribution

- land use change

- David Bowman

- increasing risk of wildfires

- Matthew W. Jones

- Global rise in forest fire emissions linked to climate change in the extratropics

- Inter-Sectoral Impact Model Intercomparison Project

- Global Carbon Budget

- Seppe Lampe

- Natural Environment Research Council

- Global burned area increasingly explained by climate change

- Transdisciplinary Fire Centre at the University of Tasmania.

- Maria Barbosa

Annotators

URL

-

-

www.youtube.com www.youtube.com

-

for - from - Fair Share Commons discussion thread - Marie - discussing the use of the word "capital" in "spiritual capital" - webcast - Great Simplification - On the Origins of Energy Blindness - Steve Keen - Energy Blindness

-

-

www.youtube.com www.youtube.com

-

1:25:22 First comes an AGREEMENT by members of the neighbourhood to co-operate and then they agree to use a mechanism called money to mobilise resources

-

1:00:18 We should not even use the term borrowing

-

18:17 A government who creates a currency does not need to tax its citizens to get dollars. 18:25 Currency issuers spend first before they tax - they do not use tax to spend

Tags

- Selling Treasuries is NOT BORROWING

- 1:25:22 First comes an AGREEMENT by members of the neighbourhood to co-operate and then they agree to use a mechanism called money to mobilise resources

- We should not even use the term borrowing

- Currency issuers spend first before they tax - they do not use tax to spend

- A government who creates a currency does not need to tax its citizens to get dollars.

Annotators

URL

-

-

elixir.bootlin.com elixir.bootlin.com

-

if (dtc->wb_thresh < 2 * wb_stat_error()) { wb_reclaimable = wb_stat_sum(wb, WB_RECLAIMABLE); dtc->wb_dirty = wb_reclaimable + wb_stat_sum(wb, WB_WRITEBACK); } else { wb_reclaimable = wb_stat(wb, WB_RECLAIMABLE); dtc->wb_dirty = wb_reclaimable + wb_stat(wb, WB_WRITEBACK); }

This is a configuration policy that does a more accurate calculation on the number of reclaimable pages and dirty pages when the threshold for the dirty pages in the writeback context is lower than 2 times the maximal error of a stat counter.

-

static long wb_min_pause(struct bdi_writeback *wb, long max_pause, unsigned long task_ratelimit, unsigned long dirty_ratelimit, int *nr_dirtied_pause

This function is an algorithmic policy that determines the minimum throttle time for a process between consecutive writeback operations for dirty pages based on heuristics. It is used for balancing the load of the I/O subsystems so that there will not be excessive I/O operations that impact the performance of the system.

-

if (!laptop_mode && nr_reclaimable > gdtc->bg_thresh && !writeback_in_progress(wb)) wb_start_background_writeback(wb);

This is a configuration policy that determines whether to start background writeout. The code here indicates that if laptop_mode, which will reduce disk activity for power saving, is not set, then when the number of dirty pages reaches the bg_thresh threshold, the system starts writing back pages.

-

if (thresh > dirty) return 1UL << (ilog2(thresh - dirty) >> 1);

This implements a configuration policy that determines the interval for the kernel to wake up and check for dirty pages that need to be written back to disk.
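The expression above yields roughly the square root of the gap between `thresh` and `dirty`, rounded down to a power of two. A small user-space sketch: `toy_ilog2` is a hypothetical stand-in for the kernel's `ilog2()`, and returning 1 once the threshold is reached is an assumption about the surrounding function. In the `free_running` excerpt further down, this value is stored in `current->nr_dirtied_pause`.

```c
/* floor(log2(x)) for x > 0; user-space stand-in for the kernel's ilog2(). */
static int toy_ilog2(unsigned long x)
{
    int n = -1;

    while (x) {
        x >>= 1;
        n++;
    }
    return n;
}

/* Halving the exponent approximates a square root of the remaining gap. */
static unsigned long poll_interval(unsigned long dirty, unsigned long thresh)
{
    if (thresh > dirty)
        return 1UL << (toy_ilog2(thresh - dirty) >> 1);
    return 1; /* assumed fallback when dirty has reached thresh */
}
```

The further a task is from the threshold, the longer it can go before the next check; close to the threshold the interval shrinks to 1.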

-

limit -= (limit - thresh) >> 5;

This is a configuration policy that determines by how much the limit should be updated. The limit controls the amount of dirty memory allowed in the system.
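The update above moves `limit` 1/32 of the way toward `thresh` on each call. A minimal sketch of that decay; the `limit > thresh` guard and the name `decay_limit` are assumptions added so the function is safe to call on its own.

```c
/*
 * One decay step: close 1/32 of the gap between limit and thresh.
 * The guard is an assumption; the quoted line only makes sense when
 * limit is above thresh.
 */
static unsigned long decay_limit(unsigned long limit, unsigned long thresh)
{
    if (limit > thresh)
        limit -= (limit - thresh) >> 5;
    return limit;
}
```

Because of the integer shift, the value stops moving once the gap is smaller than 32, so it approaches `thresh` smoothly but never overshoots it.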

-

if (dirty <= dirty_freerun_ceiling(thresh, bg_thresh) && (!mdtc || m_dirty <= dirty_freerun_ceiling(m_thresh, m_bg_thresh))) { unsigned long intv; unsigned long m_intv; free_running: intv = dirty_poll_interval(dirty, thresh); m_intv = ULONG_MAX; current->dirty_paused_when = now; current->nr_dirtied = 0; if (mdtc) m_intv = dirty_poll_interval(m_dirty, m_thresh); current->nr_dirtied_pause = min(intv, m_intv); break; } /* Start writeback even when in laptop mode */ if (unlikely(!writeback_in_progress(wb))) wb_start_background_writeback(wb); mem_cgroup_flush_foreign(wb); /* * Calculate global domain's pos_ratio and select the * global dtc by default. */ if (!strictlimit) { wb_dirty_limits(gdtc); if ((current->flags & PF_LOCAL_THROTTLE) && gdtc->wb_dirty < dirty_freerun_ceiling(gdtc->wb_thresh, gdtc->wb_bg_thresh)) /* * LOCAL_THROTTLE tasks must not be throttled * when below the per-wb freerun ceiling. */ goto free_running; } dirty_exceeded = (gdtc->wb_dirty > gdtc->wb_thresh) && ((gdtc->dirty > gdtc->thresh) || strictlimit); wb_position_ratio(gdtc); sdtc = gdtc; if (mdtc) { /* * If memcg domain is in effect, calculate its * pos_ratio. @wb should satisfy constraints from * both global and memcg domains. Choose the one * w/ lower pos_ratio. */ if (!strictlimit) { wb_dirty_limits(mdtc); if ((current->flags & PF_LOCAL_THROTTLE) && mdtc->wb_dirty < dirty_freerun_ceiling(mdtc->wb_thresh, mdtc->wb_bg_thresh)) /* * LOCAL_THROTTLE tasks must not be * throttled when below the per-wb * freerun ceiling. */ goto free_running; } dirty_exceeded |= (mdtc->wb_dirty > mdtc->wb_thresh) && ((mdtc->dirty > mdtc->thresh) || strictlimit); wb_position_ratio(mdtc); if (mdtc->pos_ratio < gdtc->pos_ratio) sdtc = mdtc; }

This is an algorithmic policy that determines whether the process can run freely or whether a throttle is needed to control the rate of writeback, by checking if the number of dirty pages exceeds the average of the global threshold and the background threshold.
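The freerun check described in the note can be written out directly. A sketch under the assumption, taken from the note, that the ceiling is the average of the two thresholds; the function names are illustrative.

```c
/* Ceiling below which a task is not throttled: midpoint of the two thresholds. */
static unsigned long freerun_ceiling(unsigned long thresh,
                                     unsigned long bg_thresh)
{
    return (thresh + bg_thresh) / 2;
}

/* Nonzero when the dirty page count is still at or below the ceiling. */
static int may_run_freely(unsigned long dirty, unsigned long thresh,
                          unsigned long bg_thresh)
{
    return dirty <= freerun_ceiling(thresh, bg_thresh);
}
```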

-

shift = dirty_ratelimit / (2 * step + 1); if (shift < BITS_PER_LONG) step = DIV_ROUND_UP(step >> shift, 8); else step = 0; if (dirty_ratelimit < balanced_dirty_ratelimit) dirty_ratelimit += step; else dirty_ratelimit -= step;

This is a configuration policy that determines by how much we should increase or decrease dirty_ratelimit, which controls the rate at which processes write dirty pages back to storage.
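The step damping above can be replayed in user space. This sketch copies the quoted arithmetic; `adjust_ratelimit` is a hypothetical wrapper, `DIV_ROUND_UP` is expanded by hand, and the caller is assumed to pass a `step` no larger than the gap to the balanced rate so the subtraction cannot underflow.

```c
#include <limits.h>

static unsigned long adjust_ratelimit(unsigned long dirty_ratelimit,
                                      unsigned long balanced_dirty_ratelimit,
                                      unsigned long step)
{
    /* A small step relative to the current rate gives a large shift. */
    unsigned long shift = dirty_ratelimit / (2 * step + 1);

    if (shift < sizeof(unsigned long) * CHAR_BIT)
        step = ((step >> shift) + 7) / 8; /* DIV_ROUND_UP(step >> shift, 8) */
    else
        step = 0;

    if (dirty_ratelimit < balanced_dirty_ratelimit)
        dirty_ratelimit += step;
    else
        dirty_ratelimit -= step;
    return dirty_ratelimit;
}
```

The effect is that each update applies at most one eighth of the requested step, and steps that are tiny relative to the current rate are ignored entirely.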

-

ratelimit_pages = dirty_thresh / (num_online_cpus() * 32); if (ratelimit_pages < 16) ratelimit_pages = 16;

This is a configuration policy that dynamically determines the rate at which the kernel can write dirty pages back to storage in a single writeback cycle.
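The scaling above divides the dirty threshold by 32 times the number of online CPUs and enforces a floor of 16. A sketch with the CPU count passed in as a parameter; `ratelimit_pages_for` is a hypothetical name.

```c
/* ncpus stands in for num_online_cpus(); it must be nonzero. */
static unsigned long ratelimit_pages_for(unsigned long dirty_thresh,
                                         unsigned int ncpus)
{
    unsigned long pages = dirty_thresh / (ncpus * 32UL);

    if (pages < 16)
        pages = 16;
    return pages;
}
```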

-

t = wb_dirty / (1 + bw / roundup_pow_of_two(1 + HZ / 8));

This implements a configuration policy that determines the maximum time that the kernel should wait between writeback operations for dirty pages. This ensures that dirty pages are flushed to disk within a reasonable time frame and limits the risk of data loss in case of a system crash.
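The expression above divides the per-writeback dirty count by a scaled-down bandwidth. A sketch assuming `HZ` is 1000 (it is configuration dependent); `TOY_HZ`, `roundup_pow2` and `pause_estimate` are hypothetical names, and only the quoted line is reproduced.

```c
#define TOY_HZ 1000UL /* assumption: the real HZ depends on kernel config */

/* Smallest power of two that is >= x (for x >= 1). */
static unsigned long roundup_pow2(unsigned long x)
{
    unsigned long p = 1;

    while (p < x)
        p <<= 1;
    return p;
}

/* More dirty pages lengthen the estimate; more bandwidth shortens it. */
static unsigned long pause_estimate(unsigned long wb_dirty, unsigned long bw)
{
    return wb_dirty / (1 + bw / roundup_pow2(1 + TOY_HZ / 8));
}
```

With `TOY_HZ` at 1000 the divisor inside is `roundup_pow2(126)`, i.e. 128, so the bandwidth is effectively measured in units of 128 pages.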

-

if (IS_ENABLED(CONFIG_CGROUP_WRITEBACK) && mdtc) {

This is a configuration policy that controls whether to update the limit in the control group. The config enables support for controlling the writeback of dirty pages on a per-cgroup basis in the Linux kernel. This allows for better resource management and improved performance.

-

-

elixir.bootlin.com elixir.bootlin.com

-

if (si->cluster_info) { if (!scan_swap_map_try_ssd_cluster(si, &offset, &scan_base)) goto scan; } else if (unlikely(!si->cluster_nr--)) {

Algorithmic policy decision: if the device is an SSD, use the SSD wear-leveling-friendly algorithm; otherwise, use the HDD algorithm, which minimizes seek times. Certain access patterns may make one of these algorithms less effective (e.g. for wear leveling, when the swap is constantly full).

-

scan_base = offset = si->lowest_bit; last_in_cluster = offset + SWAPFILE_CLUSTER - 1; /* Locate the first empty (unaligned) cluster */ for (; last_in_cluster <= si->highest_bit; offset++) { if (si->swap_map[offset]) last_in_cluster = offset + SWAPFILE_CLUSTER; else if (offset == last_in_cluster) { spin_lock(&si->lock); offset -= SWAPFILE_CLUSTER - 1; si->cluster_next = offset; si->cluster_nr = SWAPFILE_CLUSTER - 1; goto checks; } if (unlikely(--latency_ration < 0)) { cond_resched(); latency_ration = LATENCY_LIMIT; } }

Here (when using HDDs), a policy is implemented that places the swapped page in the first empty cluster, i.e. the first run of SWAPFILE_CLUSTER free slots. This is supposed to reduce seek time on spinning drives, as it encourages swap entries to sit near each other.
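The scan loop above can be reproduced on a plain byte array. A sketch with the same loop structure, minus locking and rescheduling; `find_free_run` and its parameters are hypothetical, with `cluster` standing in for `SWAPFILE_CLUSTER` and a nonzero byte marking a used slot.

```c
/*
 * Returns the first offset in [lowest, highest] that starts a run of
 * `cluster` consecutive free slots (map[k] == 0), or -1 if none exists.
 */
static long find_free_run(const unsigned char *map, long lowest,
                          long highest, long cluster)
{
    long offset = lowest;
    long last_in_cluster = offset + cluster - 1;

    for (; last_in_cluster <= highest; offset++) {
        if (map[offset])
            last_in_cluster = offset + cluster;  /* restart after a used slot */
        else if (offset == last_in_cluster)
            return offset - (cluster - 1);       /* run is complete */
    }
    return -1;
}
```

Each used slot pushes the candidate window forward, so the loop makes a single pass and never reads beyond `highest`.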

-

/* * Even if there's no free clusters available (fragmented), * try to scan a little more quickly with lock held unless we * have scanned too many slots already. */ if (!scanned_many) { unsigned long scan_limit; if (offset < scan_base) scan_limit = scan_base; else scan_limit = si->highest_bit; for (; offset <= scan_limit && --latency_ration > 0; offset++) { if (!si->swap_map[offset]) goto checks; } }

Here we have a configuration policy where we do another, smaller scan as long as we haven't exhausted our latency_ration. An alternative could be to yield early in anticipation that we aren't going to find a free slot.

-

/* * select a random position to start with to help wear leveling * SSD */ for_each_possible_cpu(cpu) { per_cpu(*p->cluster_next_cpu, cpu) = get_random_u32_inclusive(1, p->highest_bit); }

An algorithmic (random) policy is used here to spread swap pages around an SSD to help with wear leveling instead of writing to the same area of an SSD often.
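
A small user-space sketch of the same idea, assuming rand() as the random source in place of the kernel's get_random_u32_inclusive(). The function name pick_start_offsets and the plain array standing in for per-CPU storage are hypothetical.

```c
#include <stddef.h>
#include <stdlib.h>

/*
 * Fill next[0..ncpus) with a random start offset in [1, highest_bit] for
 * each CPU. Requires highest_bit >= 1. rand() has a small modulo bias,
 * which is tolerable for spreading writes but not for cryptography.
 */
void pick_start_offsets(unsigned long *next, size_t ncpus,
                        unsigned long highest_bit)
{
    for (size_t cpu = 0; cpu < ncpus; cpu++)
        next[cpu] = 1 + (unsigned long)rand() % highest_bit;
}
```

Each CPU then begins allocating from a different region, so writes are less likely to concentrate on the same part of the device.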

-

cluster_list_add_tail(&si->discard_clusters, si->cluster_info, idx);

A policy decision is made to add a cluster to the discard list on a first-come, first-served basis. However, this approach could be enhanced by prioritizing certain clusters higher on the list based on their 'importance.' This 'importance' could be defined by how closely a cluster is related to other pages in the swap. By doing so, the system can reduce seek time as mentioned on line 817.

-

if (unlikely(--latency_ration < 0)) { cond_resched(); latency_ration = LATENCY_LIMIT; scanned_many = true; } if (swap_offset_available_and_locked(si, offset)) goto checks; } offset = si->lowest_bit; while (offset < scan_base) { if (unlikely(--latency_ration < 0)) { cond_resched(); latency_ration = LATENCY_LIMIT; scanned_many = true; }

Here, a policy decision is made to fully replenish the latency_ration with the LATENCY_LIMIT and then yield back to the scheduler if we've exhausted it. This makes it so that when scheduled again, we have the full LATENCY_LIMIT to do a scan. Alternative policies could grow/shrink this to find a better heuristic instead of fully replenishing each time.

Marked config/value as we're replacing latency_ration with a compile-time-defined limit.
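
The replenish-and-yield pattern can be sketched in user space as follows. This is an illustration under assumptions: RATION_LIMIT is a hypothetical value standing in for LATENCY_LIMIT, and a counter stands in for the call to cond_resched().

```c
#include <stddef.h>

/* Hypothetical compile-time budget, standing in for LATENCY_LIMIT. */
#define RATION_LIMIT 256

/*
 * Scan map[0..n) for a free slot (value 0). Each time the budget runs
 * out, count one yield point and refill the budget to RATION_LIMIT.
 * Returns the index of the first free slot, or -1 if none was found.
 */
long scan_with_ration(const unsigned char *map, size_t n, unsigned *yields)
{
    int ration = RATION_LIMIT;

    *yields = 0;
    for (size_t i = 0; i < n; i++) {
        if (!map[i])
            return (long)i;
        if (--ration < 0) {
            (*yields)++;           /* the kernel calls cond_resched() here */
            ration = RATION_LIMIT; /* full replenish, as in the quoted code */
        }
    }
    return -1;
}
```

An adaptive variant would replace the fixed refill with a value that grows or shrinks based on how productive recent scans were.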

-

while (scan_swap_map_ssd_cluster_conflict(si, offset)) { /* take a break if we already got some slots */ if (n_ret) goto done; if (!scan_swap_map_try_ssd_cluster(si, &offset, &scan_base)) goto scan;

Here, a policy decision is made to stop scanning if some slots were already found. Other policy decisions could be made to keep scanning or take into account how long the scan took or how many pages were found.

-

else if (!cluster_list_empty(&si->discard_clusters)) { /* * we don't have free cluster but have some clusters in * discarding, do discard now and reclaim them, then * reread cluster_next_cpu since we dropped si->lock */ swap_do_scheduled_discard(si); *scan_base = this_cpu_read(*si->cluster_next_cpu); *offset = *scan_base; goto new_cluster;

This algorithmic policy discards + reclaims pages as-needed whenever there is no free cluster. Other policies could be explored that do this preemptively in order to avoid the cost of doing it here.

-

#ifdef CONFIG_THP_SWAP

This is a build-time flag that configures how huge pages are handled when swapped. When it is defined, the kernel swaps a huge page in one piece; without it, the kernel splits the huge page into smaller units and swaps those units.

-

if (swap_flags & SWAP_FLAG_DISCARD_ONCE) p->flags &= ~SWP_PAGE_DISCARD; else if (swap_flags & SWAP_FLAG_DISCARD_PAGES) p->flags &= ~SWP_AREA_DISCARD

This is a configuration policy decision where a sysadmin can pass flags to sys_swapon() to control how discards are handled. If DISCARD_ONCE is set, SWP_PAGE_DISCARD (the flag for discarding page clusters after use) is cleared, leaving only the one-time discard of the swap area at swapon time. If DISCARD_PAGES is set, SWP_AREA_DISCARD (the flag for that one-time discard of the whole area) is cleared instead.
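
A user-space sketch of this flag handling. The flag names come from the quoted code, but the numeric values below are illustrative and the kernel headers remain the authority. The step that first turns both discard modes on is my assumption about the surrounding code; the function name apply_discard_policy is hypothetical.

```c
/* Illustrative bit values; see the kernel's swap headers for the real ones. */
#define SWAP_FLAG_DISCARD        0x10000UL
#define SWAP_FLAG_DISCARD_ONCE   0x20000UL
#define SWAP_FLAG_DISCARD_PAGES  0x40000UL

#define SWP_DISCARDABLE   (1UL << 2)
#define SWP_AREA_DISCARD  (1UL << 8)
#define SWP_PAGE_DISCARD  (1UL << 9)

/*
 * Translate the flags passed by the administrator into internal state.
 * device_can_discard models whether the backing device supports discard.
 */
unsigned long apply_discard_policy(unsigned long swap_flags,
                                   int device_can_discard)
{
    unsigned long flags = 0;

    if ((swap_flags & SWAP_FLAG_DISCARD) && device_can_discard) {
        /* Assumed default: start with both discard modes enabled. */
        flags |= SWP_DISCARDABLE | SWP_AREA_DISCARD | SWP_PAGE_DISCARD;

        /* Narrow the choice, mirroring the quoted if / else-if. */
        if (swap_flags & SWAP_FLAG_DISCARD_ONCE)
            flags &= ~SWP_PAGE_DISCARD;
        else if (swap_flags & SWAP_FLAG_DISCARD_PAGES)
            flags &= ~SWP_AREA_DISCARD;
    }
    return flags;
}
```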

-

-

elixir.bootlin.com elixir.bootlin.com

-

randomize_stack_top

This function uses a configuration policy to enable Address Space Layout Randomization (ASLR) for a specific process if the PF_RANDOMIZE flag is set. It randomizes the position of the process's stack to help defend against certain attacks by making memory addresses unpredictable.

-

-

elixir.bootlin.com elixir.bootlin.com

-

if (prev_class) { if (can_merge(prev_class, pages_per_zspage, objs_per_zspage)) { pool->size_class[i] = prev_class; continue; } }

This is an algorithmic policy. A zs_pool maintains zspages of different size_class. However, some size_classes share exactly the same characteristics, namely pages_per_zspage and objs_per_zspage. Recall my other annotation, where free zspages are searched by size_class: zspage = find_get_zspage(class);. Thus, grouping different classes improves memory utilization.

-

zspage = find_get_zspage(class); if (likely(zspage)) { obj = obj_malloc(pool, zspage, handle); /* Now move the zspage to another fullness group, if required */ fix_fullness_group(class, zspage); record_obj(handle, obj); class_stat_inc(class, ZS_OBJS_INUSE, 1); goto out; }

This is an algorithmic policy. Instead of immediately allocating new zspages for each memory request, the algorithm first attempts to find and reuse existing partially filled zspages from a given size class by invoking the find_get_zspage(class) function. It also updates the corresponding fullness groups.
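
A toy sketch of the reuse-before-allocate policy, assuming a fixed-size pool in which each page holds a fixed number of objects. All names (toy_class, toy_alloc, MAX_PAGES, OBJS_PER_PAGE) are hypothetical, and the real allocator's fullness groups are not modeled.

```c
/* Hypothetical sizes for illustration only. */
#define MAX_PAGES      8
#define OBJS_PER_PAGE  4

struct toy_class {
    int inuse[MAX_PAGES]; /* objects in use per page; -1 = page not allocated */
};

void toy_class_init(struct toy_class *c)
{
    for (int i = 0; i < MAX_PAGES; i++)
        c->inuse[i] = -1;
}

/* Returns the index of the page that received the object, or -1 if full. */
int toy_alloc(struct toy_class *c)
{
    /* First choice: a page that already exists and still has room. */
    for (int i = 0; i < MAX_PAGES; i++) {
        if (c->inuse[i] >= 0 && c->inuse[i] < OBJS_PER_PAGE) {
            c->inuse[i]++;
            return i;
        }
    }
    /* Fallback: bring a new page into use. */
    for (int i = 0; i < MAX_PAGES; i++) {
        if (c->inuse[i] < 0) {
            c->inuse[i] = 1;
            return i;
        }
    }
    return -1;
}
```

Filling existing pages first keeps the number of partially used pages low, which is the utilization benefit the annotation describes.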

-

-

elixir.bootlin.com elixir.bootlin.com

-

1

This is a configuration policy that sets the timeout between retries if vmap_pages_range() fails. This could be a tunable variable.

-

if (!(flags & VM_ALLOC)) area->addr = kasan_unpoison_vmalloc(area->addr, requested_size, KASAN_VMALLOC_PROT_NORMAL);

This is an algorithmic policy: an optimization that avoids marking memory accessible twice. Only areas allocated without VM_ALLOC (e.g. ioremap) have not yet been marked accessible (unpoisoned), so they must be unpoisoned explicitly here.

-

100U

This is a configuration policy that sets 100 pages as the upper limit for the bulk allocator. However, the implementation of alloc_pages_bulk_array_mempolicy does not itself impose such a limit, so I believe it is an algorithmic policy related to some sort of optimization.

-

if (!order) {

This is an algorithmic policy that uses the bulk allocator only for order-0 pages (non-super pages). Maybe the bulk allocator could also be applied to super pages to speed up allocation. So far I have not seen a reason why it cannot be.

-

if (likely(count <= VMAP_MAX_ALLOC)) { mem = vb_alloc(size, GFP_KERNEL); if (IS_ERR(mem)) return NULL; addr = (unsigned long)mem; } else { struct vmap_area *va; va = alloc_vmap_area(size, PAGE_SIZE, VMALLOC_START, VMALLOC_END, node, GFP_KERNEL, VMAP_RAM); if (IS_ERR(va)) return NULL; addr = va->va_start; mem = (void *)addr; }

This is an algorithmic policy that determines whether to use the more efficient vb_alloc, which does not involve complex virtual-to-physical mappings. Unlike alloc_vmap_area, vb_alloc does not need to traverse the rb-tree of free vmap areas. It simply finds a large enough block from vmap_block_queue.

-

VMAP_PURGE_THRESHOLD

The threshold VMAP_PURGE_THRESHOLD is a configuration policy that could be tuned by machine learning. Setting this threshold lower reduces purging activity, while setting it higher reduces fragmentation.

-

if (!(force_purge

This is an algorithmic policy that prevents purging blocks with a considerable amount of "usable memory" unless the purge is requested with force_purge.
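
A sketch of the decision, assuming that "usable memory" is measured as the block's free-page count compared against the purge threshold. Only the start of the condition is quoted above, so that comparison is my assumption. BLOCK_BITS, PURGE_THRESHOLD and should_purge are hypothetical names and values.

```c
#include <stdbool.h>

/* Hypothetical sizes; the kernel derives its own from the block layout. */
#define BLOCK_BITS       1024
#define PURGE_THRESHOLD  (BLOCK_BITS / 4)

/*
 * Decide whether a block may be purged. Blocks that still have plenty of
 * free pages are kept unless the caller forces the purge.
 */
bool should_purge(unsigned free_pages, bool force_purge)
{
    if (!(force_purge || free_pages < PURGE_THRESHOLD))
        return false;
    return true;
}
```

With these numbers, a block with 100 free pages is purged, while a block with 500 free pages is kept unless force_purge is set.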

-

resched_threshold = lazy_max_pages() << 1;

The assignment of resched_threshold and lines 1776-1777 are configuration policies that set the number of lazily freed pages below which the kernel temporarily yields the CPU to higher-priority tasks.

-

log = fls(num_online_cpus());

This heuristic scales lazy_max_pages logarithmically with the number of online CPUs, which is a configuration policy. Alternatively, machine learning could determine the optimal scaling function, whether linear, logarithmic, square-root, or another approach.

-

32UL * 1024 * 1024

This is a configuration policy that always returns a multiple of 32 MB worth of pages. This could be a configurable variable rather than a fixed magic number.
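
Putting the logarithmic scaling and the 32 MB unit together, the computation can be sketched in user space as below. It assumes a 4 KiB page size and a hand-written find-last-set helper. The toy_ names are hypothetical and the page size is an assumption about the target machine.

```c
/* Assumed page size for the illustration. */
#define TOY_PAGE_SIZE 4096UL

/* 1-based index of the highest set bit; 0 when x is 0. */
int toy_fls(unsigned int x)
{
    int r = 0;

    while (x) {
        r++;
        x >>= 1;
    }
    return r;
}

/* Lazy-free limit in pages: 32 MB worth of pages times fls(cpus). */
unsigned long toy_lazy_max_pages(unsigned int online_cpus)
{
    unsigned long log = (unsigned long)toy_fls(online_cpus);

    return log * (32UL * 1024 * 1024 / TOY_PAGE_SIZE);
}
```

Under these assumptions one CPU gives 8192 pages (32 MB), four CPUs give 24576 pages (96 MB), and 64 CPUs give 57344 pages (224 MB), so the limit grows far more slowly than the CPU count.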

-

- Sep 2024

-

elixir.bootlin.com elixir.bootlin.com

-

if (lruvec->file_cost + lruvec->anon_cost > lrusize / 4) { lruvec->file_cost /= 2; lruvec->anon_cost /= 2; }

It is a configuration policy. The policy here is to adjust the recorded cost of pages. The cost means the overhead for the kernel to operate on a page if the page is swapped out. The kernel adopts a decay policy: if the combined file and anonymous cost is greater than lrusize/4, both costs are divided by 2. Without this policy, the costs would become very high after a long run, and the kernel might mistakenly retain pages that were visited frequently long ago but are inactive now, which could degrade performance. The value lrusize/4 is a trade-off between performance and sensitivity. For example, if the value is too small, the kernel adjusts page priorities too frequently, degrading process performance. If the value is too large, the kernel might be misled by historical data, wrongly swapping out currently popular pages and degrading performance further.
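
The decay rule can be sketched as follows. The halving condition mirrors the quoted code; the step that first adds the new cost to one of the two counters is my assumption about the surrounding function, and the toy_ names are hypothetical.

```c
struct toy_lruvec {
    unsigned long file_cost;
    unsigned long anon_cost;
};

/*
 * Record nr_pages of reclaim cost against the file or anon counter, then
 * halve both counters once their sum exceeds a quarter of the LRU size.
 */
void toy_note_cost(struct toy_lruvec *v, int file,
                   unsigned long nr_pages, unsigned long lrusize)
{
    if (file)
        v->file_cost += nr_pages;
    else
        v->anon_cost += nr_pages;

    if (v->file_cost + v->anon_cost > lrusize / 4) {
        v->file_cost /= 2;
        v->anon_cost /= 2;
    }
}
```

Halving both counters keeps their ratio while shrinking their magnitude, so recent costs weigh more than old ones.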

-

if (megs < 16) page_cluster = 2; else page_cluster = 3;

It is a configuration policy. The policy here is to determine the size of the page cluster. The values "2" and "3" are determined externally to the kernel. The page cluster is the actual unit of execution when swapping. On a small-memory machine, the kernel swaps 4 (2^2) pages at a time. Otherwise, it swaps 8 (2^3) pages at a time. The rationale is to avoid excessive memory pressure on small-memory systems and to improve performance on large-memory systems.
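
The arithmetic can be sketched in a few lines. The function names are hypothetical, and megs is taken as an input here instead of being derived from the machine's memory size.

```c
/* Cluster exponent chosen from total memory in megabytes. */
int toy_page_cluster(unsigned long megs)
{
    return megs < 16 ? 2 : 3;
}

/* Number of pages handled per swap operation: 2^page_cluster. */
unsigned long toy_pages_per_swap_op(unsigned long megs)
{
    return 1UL << toy_page_cluster(megs);
}
```

A machine with 8 MB therefore swaps 4 pages at a time, while any machine with 16 MB or more swaps 8.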

-

-

elixir.bootlin.com elixir.bootlin.com

-

mod_memcg_page_state(area->pages[i], MEMCG_VMALLOC, 1);

It is a configuration policy. It is used to count physical pages for the memory control group. The policy here is that one physical page corresponds to one count in the memory control group. With huge pages, the value here might not be 1.

-

schedule_timeout_uninterruptible(1);

It is a configuration policy. If the kernel cannot allocate a large enough aligned virtual address range while nofail is specified to disallow failure, it puts the process to sleep for 1 jiffy. During this period, the process cannot be interrupted and leaves the CPU's run queue. The "1" here is a configuration parameter, made externally to the kernel. It is not so large that it hurts latency-sensitive processes and not so small that retries become too frequent.

-

if (array_size > PAGE_SIZE) { area->pages = __vmalloc_node(array_size, 1, nested_gfp, node, area->caller); } else { area->pages = kmalloc_node(array_size, nested_gfp, node); }

It is an algorithmic policy and also a configuration policy. The algorithmic policy here is to maximize the data locality of virtual memory addresses by keeping the space in contiguous virtual pages. If the demanded size is larger than one page, the kernel allocates multiple contiguous pages for it using __vmalloc_node(). Otherwise, the kernel calls kmalloc_node() to place it in a single page. On the other hand, to allocate contiguous pages, __vmalloc_node() is invoked with align = 1, which is a configuration policy.

-

if (!counters) return; if (v->flags & VM_UNINITIALIZED) return;

It is an algorithmic policy. show_numa_info() prints how the virtual memory area v (a vm_struct) distributes its pages across NUMA nodes. The two if conditions filter out an invalid v. The first if means the virtual memory has not been associated with physical pages. The second if means the virtual memory has not been initialized yet.

-

if (page) copied = copy_page_to_iter_nofault(page, offset, length, iter); else copied = zero_iter(iter, length);