Tacit knowledge is knowledge that cannot be captured through words alone.

Tacit knowledge

Artifactory/Nexus/Docker repo was unavailable for a tiny fraction of a second when downloading/uploading packages. The Jenkins builder randomly got stuck.

Typical random issues when deploying microservices

Microservices can really bring value to the table, but the question is: at what cost? Even though the promises sound really good, you have more moving pieces within your architecture, which naturally leads to more points of failure. What if your messaging system breaks? What if there’s an issue with your K8S cluster? What if Jaeger is down and you can’t trace errors? What if metrics are not coming into Prometheus?

Microservices have quite a lot of moving parts

If you’re going with a microservice:

9 things needed for deploying a microservice (listed below)

Let’s take a simple online store app as an example.

5 things needed for deploying a monolith (listed below)

Some of the pros of going with microservices

Pros of microservices (not always all are applicable):

I think one of the ways that remote work changes this is that I can do other things while I think through a tricky problem; I can do dishes or walk my dog or something instead of trying to look busy in a room with 6-12 other people who are furiously typing because that's how the manager and project manager understand that work gets done.

What work often looks like during remote dev work

docker scan elastic/logstash:7.13.3 | grep 'Arbitrary Code Execution'

Example of scanning a Docker image for a Log4j vulnerability

AAX is Pro Tools' exclusive format for virtual synths. So you really only need it if you use Pro Tools (which doesn't support the VST format).

Only install VST plugin format (not AAX) for FL Studio

When you usually try to download an image, your browser opens a connection to the server and sends a GET request asking for the image. The server responds with the image and closes the connection. Here however, the server sends the image, but doesn't close the connection! It keeps the connection open and sends bits of data periodically to make sure it stays open. So your browser thinks that the image is still being sent over, which is why the download seems to be going on infinitely.

How to prevent the user from downloading an image
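The trick described above can be sketched with Python's standard `http.server` module. This is a minimal illustration under assumptions: the handler name `NeverEndingImage`, the `trickle` helper, the one-byte padding and the one-second interval are hypothetical choices, not taken from the original site. The key detail is that the response carries no `Content-Length`, so on an HTTP/1.0 response the body only ends when the server closes the socket.

```python
import itertools
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Iterator


def trickle(image: bytes, interval: float = 1.0) -> Iterator[bytes]:
    """Yield the full image once, then a padding byte every `interval` seconds, forever."""
    yield image
    while True:
        time.sleep(interval)
        yield b"\0"  # keeps the connection busy so the download never looks finished


class NeverEndingImage(BaseHTTPRequestHandler):
    image: bytes = b""  # hypothetical: load real PNG bytes here before serving

    def do_GET(self) -> None:
        self.send_response(200)
        self.send_header("Content-Type", "image/png")
        # No Content-Length header: the client cannot tell where the body ends.
        self.end_headers()
        try:
            for chunk in trickle(self.image):
                self.wfile.write(chunk)
                self.wfile.flush()
        except (BrokenPipeError, ConnectionResetError):
            pass  # the browser eventually gave up


def serve(port: int = 8000) -> None:
    """Blocks forever; call it explicitly, e.g. serve(8000)."""
    ThreadingHTTPServer(("", port), NeverEndingImage).serve_forever()


# The generator can be inspected without starting a server:
first_chunks = list(itertools.islice(trickle(b"PNG-BYTES", interval=0), 3))
print(first_chunks)  # [b'PNG-BYTES', b'\x00', b'\x00']
```

The image is fully delivered in the first chunk, so it can still render; only the "download in progress" state never ends.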

be microfamous. Microfame is the best kind of fame, because it combines an easier task (be famous to fewer people) with a better outcome (be famous to the right people).

Idea of being microfamous over famous

:w !sudo tee %

Save a file in Vim/Vi with sudo when you opened it without root permissions

Windows 10 Enterprise LTSC 2021 (that is the full, correct name of version 21H2 LTSC) will receive updates until January 2032.

Windows 10 LTSC

Experienced users, however, knew that it was best to wait for Server. In the case of Windows 11, it seems one should wait for the LTSC version.

You may want to wait for Windows 11 LTSC before upgrading from Windows 10, which already has an LTSC release

if it's important enough it will surface somewhere somehow.

A way to deal with FOMO

The Feynman technique goes as follows: write down what you know about a subject. Explain it in words simple enough for a 6th grader to understand. Identify any gaps in your knowledge and read up on that again until all of the explanation is dead simple and short.

The Feynman technique for learning

Similar to frequency lists in a natural language there are concepts in any software product that the rest of the software builds upon. I'll call them core concepts. Let's use git as an example. Three core concepts are commits, branches and conflicts. If you know these three core concepts you can proceed with further learning.

To speed up learning, start with the core concepts like frequency lists for learning languages

We can also see that converting the original non-dithered image to WebP gets a much smaller file size, and the original aesthetic of the image is preserved.

Favor converting images to WebP over dithering them

A lot of us are going to die of unpredictable diseases, some of us young. Really, don't spend your life getting fitter, healthier, more productive. We are all going to die, and Earth will explode in the Sun in a few billion years: please, enjoy some now.

:)

I’d probably choose the official Docker Python image (python:3.9-slim-bullseye) just to ensure the latest bugfixes are always available.

python:3.9-slim-bullseye may be the sweet spot for a Python Docker image

So which should you use? If you’re a RedHat shop, you’ll want to use their image. If you want the absolute latest bugfix version of Python, or a wide variety of versions, the official Docker Python image is your best bet. If you care about performance, Debian 11 or Ubuntu 20.04 will give you one of the fastest builds of Python; Ubuntu does better on point releases, but will have slightly larger images (see above). The difference is at most 10% though, and many applications are not bottlenecked on Python performance.

Choosing the best Python base Docker image depends on different factors.

There are three major operating systems that roughly meet the above criteria: Debian “Bullseye” 11, Ubuntu 20.04 LTS, and RedHat Enterprise Linux 8.

3 candidates for the best Python base Docker image

While people who are both trustworthy and competent are the most sought after when it comes to team assembly, friendliness and trustworthiness are often more important factors than competency.

The researchers found that people who exhibited both competence, through the use of challenging voice, and trustworthiness, through the use of supportive voice, were the most in-demand people when it came to assembling teams.

Feature GraphQL REST

GraphQL vs REST (table)

There are advantages and disadvantages to both systems, and both have their use in modern API development. However, GraphQL was developed to combat some perceived weaknesses with the REST system, and to create a more efficient, client-driven API.

List of differences between REST and GraphQL (below this annotation)

special permission bit at the end here t, this means everyone can add files, write files, modify files in the /tmp directory, but only root can delete the /tmp directory

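t permission bit (the sticky bit): in a sticky directory such as /tmp everyone can create files, but only a file's owner, the directory's owner or root can delete or rename that file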
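To see where the trailing `t` comes from, Python's standard `stat` module can render a mode as an `ls -l` style string. A minimal sketch; the helper name `has_sticky_bit` is hypothetical, and the chmod on a scratch directory assumes a Linux-like system where a directory's owner may set the sticky bit.

```python
import os
import stat
import tempfile


def has_sticky_bit(path: str) -> bool:
    """True if the directory at `path` has the sticky bit (S_ISVTX, the trailing t) set."""
    return bool(os.stat(path).st_mode & stat.S_ISVTX)


# /tmp is normally mode 1777: rwx for everyone plus the sticky bit.
print(stat.filemode(stat.S_IFDIR | 0o1777))  # drwxrwxrwt
# Without execute permission for "others", the bit is shown as an uppercase T.
print(stat.filemode(stat.S_IFDIR | 0o1776))  # drwxrwxrwT

# Setting it on a scratch directory you own (no root needed for your own directory on Linux).
with tempfile.TemporaryDirectory() as scratch:
    os.chmod(scratch, 0o1777)
    print(has_sticky_bit(scratch))
```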

We implemented a bash script to be installed in the master node of the EMR cluster, and the script is scheduled to run every 5 minutes. The script monitors the clusters and sends a CUSTOM metric EMR-INUSE (0=inactive; 1=active) to CloudWatch every 5 minutes. If CloudWatch receives 0 (inactive) for some predefined set of data points, it triggers an alarm, which in turn executes an AWS Lambda function that terminates the cluster.

Solution to terminate an idle EMR cluster; however, EMR now supports an auto-termination policy out of the box
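The alarm condition in the quoted setup boils down to "terminate after N consecutive inactive data points". Below is a pure-Python sketch of that decision only, assuming a hypothetical list of `EMR-INUSE` samples (oldest first, one every 5 minutes) and a hypothetical threshold. It does not call CloudWatch or Lambda and does not reproduce CloudWatch's exact alarm semantics.

```python
from typing import Sequence

SAMPLE_PERIOD_MIN = 5  # the script reports EMR-INUSE every 5 minutes


def should_terminate(samples: Sequence[int], idle_points: int) -> bool:
    """Return True when the last `idle_points` EMR-INUSE samples are all 0 (inactive).

    `samples` is ordered oldest to newest; 1 = active, 0 = inactive.
    """
    if idle_points <= 0 or len(samples) < idle_points:
        return False  # not enough data yet to decide
    return all(value == 0 for value in samples[-idle_points:])


history = [1, 1, 0, 0, 0, 0, 0, 0]
threshold = 6  # hypothetical: 6 points x 5 min = 30 idle minutes
print(threshold * SAMPLE_PERIOD_MIN, "idle minutes required")
print(should_terminate(history, threshold))       # True
print(should_terminate(history[:-1], threshold))  # False: the window still contains a 1
```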

git ls-files is more than 5 times faster than both fd --no-ignore and find

git ls-files is the fastest command for listing the files tracked in a Git repository

If we call this using Bash, it never gets further than the exec line, and when called using Python it will print lol as that's the only effective Python statement in that file.

```bash
#!/bin/bash
"exec" "python" "myscript.py" "$@"
print("lol")
```

For Python the variable assignment is just a var with a weird string, for Bash it gets executed and we store the result.

__PYTHON="$(command -v python3 || command -v python)"

There are a handful of tools that I used to use and now it’s narrowed down to just one or two: pandas-profiling and Dataiku for columnar or numeric data - here’s some getting started tips. I used to also load the data into bamboolib but the purpose of such a tool is different. For text data I have written my own profiler called nlp-profiler.

Tools to help with data exploration:

If for some reason you don’t see a running pod from this command, then using kubectl describe po a is your next-best option. Look at the events to find errors for what might have gone wrong.

kubectl run a --image alpine --command -- /bin/sleep 1d

As with listing nodes, you should first look at the status column and look for errors. The ready column will show how many pods are desired and how many are running.

kubectl get pods -A -o wide

The -o wide option will show additional details like the operating system (OS), IP address and container runtime. The first thing you should look for is the status. If the node doesn’t say “Ready” you might have a problem, but not always.

kubectl get nodes -o wide

This command will be the easiest way to discover if your scheduler, controller-manager and etcd node(s) are healthy.

kubectl get componentstatus

If something broke recently, you can look at the cluster events to see what was happening before and after things broke.

kubectl get events -A

this command will tell you what CRDs (custom resource definitions) have been installed in your cluster and what API version each resource is at. This could give you some insights into looking at logs on controllers or workload definitions.

kubectl api-resources -o wide --sort-by name

kubectl get --raw '/healthz?verbose'

Alternative to kubectl get componentstatus. It does not show scheduler or controller-manager output, but it adds a lot of additional checks that might be valuable if things are broken

Here are the eight commands to run

8 commands to debug a Kubernetes cluster:

kubectl version --short

kubectl cluster-info

kubectl get componentstatus

kubectl api-resources -o wide --sort-by name

kubectl get events -A

kubectl get nodes -o wide

kubectl get pods -A -o wide

kubectl run a --image alpine --command -- /bin/sleep 1d
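If you run this checklist often, a small wrapper can execute the commands in order and keep going when one fails. A minimal sketch using only the standard library; `DEBUG_COMMANDS` and `run_all` are hypothetical names. Note that `componentstatus` has been deprecated since Kubernetes v1.19 and recent kubectl releases may reject `version --short`, so expect some commands to error on newer clusters. The last command creates a pod named `a`, which is why nothing runs until you call `run_all()` yourself.

```python
import shutil
import subprocess

# The eight commands above, as argument lists (no shell quoting needed).
DEBUG_COMMANDS = [
    ["kubectl", "version", "--short"],
    ["kubectl", "cluster-info"],
    ["kubectl", "get", "componentstatus"],
    ["kubectl", "api-resources", "-o", "wide", "--sort-by", "name"],
    ["kubectl", "get", "events", "-A"],
    ["kubectl", "get", "nodes", "-o", "wide"],
    ["kubectl", "get", "pods", "-A", "-o", "wide"],
    ["kubectl", "run", "a", "--image", "alpine", "--command", "--", "/bin/sleep", "1d"],
]


def run_all(commands=DEBUG_COMMANDS) -> None:
    """Run each command in order and print its output; a failing command does not stop the rest."""
    if shutil.which("kubectl") is None:
        print("kubectl not found on PATH")
        return
    for cmd in commands:
        print("$", " ".join(cmd))
        result = subprocess.run(cmd, capture_output=True, text=True)
        print(result.stdout or result.stderr)
```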

80% of developers are "dark": they don't write, speak or participate in public tech discourse.

After working in tech, I would estimate the same

They'll teach you for free. Most people don't see what's right in front of them. But not you. "With so many junior devs out there, why will they help me?", you ask. Because you learn in public. By teaching you, they teach many. You amplify them.

Senior engineers can teach you for free if you learn in public online

Try your best to be right, but don't worry when you're wrong. Repeatedly. If you feel uncomfortable, or like an impostor, good. You're pushing yourself. Don't assume you know everything, but try your best anyway, and let the internet correct you when you are inevitably wrong. Wear your noobyness on your sleeve.

Truly inspiring! I need to save this as one of my favorite quotes (and share on my blog, of course)!

start building a persistent knowledge base that grows over time. Open Source your Knowledge! At every step of the way: Document what you did and the problems you solved.

That is why I am trying to be even more present on my social media and on my personal blog. Maybe one day I will try to open-source my OneNote notes as a wiki-like page.

Whatever your thing is, make the thing you wish you had found when you were learning. Don't judge your results by "claps" or retweets or stars or upvotes - just talk to yourself from 3 months ago.

This is the exact mindset I have been following for some time, and it is awesome!

For example, instead of "the team were incredibly frustrating to work with and ignored all my suggestions", say "the culture made it very hard to do quality engineering, which over time was demoralising"

A tactful way to explain why you left your previous job

Given all that, I simply do not understand why people keep recommending the {} syntax at all. It's a rare case where you'd want all the associated issues. Essentially, the only "advantage" of not running your functions in a subshell is that you can write to global variables. I'm willing to believe there are cases where that is useful, but it should definitely not be the default.

According to the author, strangely, {} syntax is more popular than ().

However, running functions in a subshell has various disadvantages, as listed by the Hacker News user

All we've done is replace the {} with (). It may look like a benign change, but now, whenever that function is invoked, it will be run within a subshell.

Running Bash functions in a subshell with () brings some advantages
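The difference between `{}` and `()` function bodies is easiest to see by running both in Bash and checking whether a global variable changed. A minimal sketch that drives Bash from Python via `subprocess`; it assumes `bash` is on your PATH, and `run_bash`, `BRACE` and `SUBSHELL` are hypothetical names.

```python
import subprocess

BRACE = 'x=0; f() { x=1; }; f; echo "$x"'   # body runs in the current shell
SUBSHELL = 'x=0; f() ( x=1 ); f; echo "$x"'  # body runs in a child (sub)shell


def run_bash(script: str) -> str:
    """Run a snippet with bash -c and return its trimmed stdout."""
    result = subprocess.run(["bash", "-c", script], capture_output=True, text=True, check=True)
    return result.stdout.strip()


print(run_bash(BRACE))     # 1: the function modified the global x
print(run_bash(SUBSHELL))  # 0: the assignment died with the subshell
```

The same isolation covers `cd`, `set -e` and other shell state changed inside the function, which is the main argument for `()`.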

Coaching is external guidance and feedback on your performance.

Coaching - external guidance and feedback on performance

Mentoring - subset of coaching primarily focused on the creation of knowledge

Make deals with companies from a similar industry and display photos of their products on your site, with no code involved. Occasionally write a (properly labeled) sponsored article. Add a link for direct payments to your account. There are plenty of ideas. Unfortunately, many have chosen the easiest option: plugging into global ad networks. They don't necessarily gain from this "partnership". Ad elements collect information about users even if we don't click them (so we don't generate any earnings for the site owners anyway).

Why it's worth using ad blockers and how site owners could replace this business model

I’ll recap the steps in case you got lost. I start with the assumption that I’ve already downloaded the invite.ics file.

5 simple steps to spoof invite.ics files

border collies—could recall and retrieve at least 10 toys they had been taught the names of. One overachiever named Whisky correctly retrieved 54 out of 59 toys he had learned to identify.

Gifted border collies can recall roughly 10 to 54 toy names, whereas most dogs can recall around 1-2

those capable of quickly memorizing multiple toy names—shows they often tilt their heads before correctly retrieving a specific toy. That suggests the behavior might be a sign of concentration and recall in our canine pals, the team suggests.

Dogs may tilt their head to recall a toy name

x() is the same as doing x.__call__()

How do you even begin to check if you can try and “call” a function, class, and whatnot? The answer is actually quite simple: You just see if the object implements the __call__ special method.

Use of __call__
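Here is a small illustration of the `__call__` idea. The class `Multiplier` is a hypothetical example; `callable()` is the built-in that performs the "can I call this?" check for you.

```python
class Multiplier:
    """Instances are callable because the class defines __call__."""

    def __init__(self, factor: int) -> None:
        self.factor = factor

    def __call__(self, value: int) -> int:
        return value * self.factor


triple = Multiplier(3)
print(triple(5))                    # 15
print(triple.__call__(5))           # 15: the explicit form of the same call
print(callable(triple))             # True
print(callable(42))                 # False: int instances define no __call__
print(hasattr(triple, "__call__"))  # True
```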

Python is referred to as a “duck-typed” language. What it means is that instead of caring about the exact class an object comes from, Python code generally tends to check instead if the object can satisfy certain behaviours that we are looking for.

everything is stored inside dictionaries. And the vars method exposes the variables stored inside objects and classes.

Python stores objects, their variables, methods and such inside dictionaries, which can be checked using vars()
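A quick way to see those dictionaries with `vars()`. The `Point` and `Slotted` classes are hypothetical examples; the last part shows the one common exception, classes that use `__slots__` and therefore have no per-instance `__dict__`.

```python
class Point:
    dims = 2                     # class attribute: stored in the class's dict

    def __init__(self, x: int, y: int) -> None:
        self.x = x               # instance attributes: stored in the instance's dict
        self.y = y


p = Point(1, 2)
print(vars(p))                   # {'x': 1, 'y': 2}
print(vars(p) is p.__dict__)     # True: vars() returns the very same dict
print("dims" in vars(Point), "__init__" in vars(Point))  # True True

p.z = 3                          # adding an attribute just inserts a key
print(vars(p))                   # {'x': 1, 'y': 2, 'z': 3}


class Slotted:
    __slots__ = ("x",)           # no per-instance __dict__

    def __init__(self, x: int) -> None:
        self.x = x


try:
    vars(Slotted(1))
except TypeError:
    print("vars() fails on instances that use __slots__")
```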

The overall flow of Gitflow is: A develop branch is created from main. A release branch is created from develop. Feature branches are created from develop. When a feature is complete, it is merged into the develop branch. When the release branch is done, it is merged into develop and main. If an issue in main is detected, a hotfix branch is created from main. Once the hotfix is complete, it is merged into both develop and main.

The overall flow of Gitflow

Hotfix branches are a lot like release branches and feature branches except they're based on main instead of develop. This is the only branch that should fork directly off of main. As soon as the fix is complete, it should be merged into both main and develop (or the current release branch), and main should be tagged with an updated version number.

Hotfix branches

Once develop has acquired enough features for a release (or a predetermined release date is approaching), you fork a release branch off of develop. Creating this branch starts the next release cycle, so no new features can be added after this point—only bug fixes, documentation generation, and other release-oriented tasks should go in this branch. Once it's ready to ship, the release branch gets merged into main and tagged with a version number. In addition, it should be merged back into develop, which may have progressed since the release was initiated.

Release branch

feature branches use develop as their parent branch. When a feature is complete, it gets merged back into develop. Features should never interact directly with main.

Feature branches should only interact with a develop branch

When using the git-flow extension library, executing git flow init on an existing repo will create the develop branch

git flow init will create:

The main condition that needs to be satisfied in order to use OneFlow is that every new production release is based on the previous release. The main difference between OneFlow and Git Flow is that OneFlow has no develop branch.

Main difference between OneFlow and Git Flow

The main difference between GitLab Flow and GitHub Flow is the environment branches in GitLab Flow (e.g. staging and production), which exist because some projects aren’t able to deploy to production every time a feature branch is merged

Main difference between GitLab Flow and GitHub Flow

4 branching workflows for Git

release-* — release branches support preparation of a new production release. They allow many minor bugs to be fixed and metadata for a release to be prepared. May branch off from develop and must merge into master and develop.

release branches

hotfix-* — hotfix branches are necessary to act immediately upon an undesired status of master. May branch off from master and must merge into master and develop.

hotfix branches

feature-* — feature branches are used to develop new features for the upcoming releases. May branch off from develop and must merge into develop.

feature branches
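The three prefix rules quoted above are regular enough to encode as data, for example in a pre-merge check. A minimal sketch; `BranchRule`, `RULES`, `rule_for` and `is_valid_merge` are hypothetical names, and this is not part of the git-flow extension itself.

```python
from typing import Dict, NamedTuple, Tuple


class BranchRule(NamedTuple):
    branch_off_from: str
    merge_into: Tuple[str, ...]


# Encodes the feature-*/release-*/hotfix-* rules quoted above.
RULES: Dict[str, BranchRule] = {
    "feature-": BranchRule("develop", ("develop",)),
    "release-": BranchRule("develop", ("master", "develop")),
    "hotfix-": BranchRule("master", ("master", "develop")),
}


def rule_for(branch: str) -> BranchRule:
    """Look up the rule by branch-name prefix, e.g. 'hotfix-1.2.1' -> hotfix rule."""
    for prefix, rule in RULES.items():
        if branch.startswith(prefix):
            return rule
    raise ValueError(f"{branch!r} is not a feature-*, release-* or hotfix-* branch")


def is_valid_merge(source: str, target: str) -> bool:
    return target in rule_for(source).merge_into


print(rule_for("hotfix-1.2.1"))  # BranchRule(branch_off_from='master', merge_into=('master', 'develop'))
print(is_valid_merge("feature-login", "master"))  # False: features never touch master
```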

The main idea behind the space-based pattern is the distributed shared memory to mitigate issues that frequently occur at the database level. The assumption is that by processing most operations using in-memory data we can avoid extra operations in the database, and thus any future problems that may evolve from there (for example, if your user activity data entity has changed, you don’t need to change a bunch of code persisting to & retrieving that data from the DB). The basic approach is to separate the application into processing units (that can automatically scale up and down based on demand), where the data will be replicated and processed between those units without any persistence to the central database (though there will be local storage in case of system failures).

Space-based architecture

Microservices architecture consists of separately deployed services, where each service would have ideally single responsibility. Those services are independent of each other and if one service fails others will not stop running.

Microservices architecture

First of all, if you know the basics of architecture patterns, then it is easier for you to follow the requirements of your architect. Secondly, knowing those patterns will help you to make decisions in your code

2 main advantages of using design patterns:

Microkernel Architecture, also known as Plugin architecture, is the design pattern with two main components: a core system and plug-in modules (or extensions). A great example would be a Web browser (core system) where you can install endless extensions (or plugins).

Microkernel (plugin) architecture
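Following the browser analogy, here is a minimal sketch of a core system with a plug-in registry. The `Browser` class, its `install`/`uninstall`/`render` methods and the string-transforming plug-in contract are hypothetical simplifications, not how real browsers load extensions.

```python
from typing import Callable, Dict

Plugin = Callable[[str], str]


class Browser:
    """Core system: does the essential work and delegates extras to plug-ins."""

    def __init__(self) -> None:
        self._plugins: Dict[str, Plugin] = {}

    def install(self, name: str, plugin: Plugin) -> None:
        self._plugins[name] = plugin

    def uninstall(self, name: str) -> None:
        self._plugins.pop(name, None)

    def render(self, html: str) -> str:
        page = html.strip()                   # core behaviour, always present
        for plugin in self._plugins.values():
            page = plugin(page)               # each extension transforms the result, in install order
        return page


browser = Browser()
browser.install("ad-blocker", lambda page: page.replace("<ad/>", ""))
browser.install("shout", str.upper)
print(browser.render("  <p>hi</p><ad/>  "))  # <P>HI</P>
browser.uninstall("shout")
print(browser.render("<p>hi</p><ad/>"))      # <p>hi</p>
```

The core never imports a specific plug-in; it only knows the contract (`str -> str`), which is what lets extensions be added or removed independently.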

The idea behind this pattern is to decouple the application logic into single-purpose event processing components that asynchronously receive and process events. This pattern is one of the popular distributed asynchronous architecture patterns known for high scalability and adaptability.

Event-driven architecture: high scalability and adaptability
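A minimal sketch of the event-driven idea with `asyncio`: producers publish events to a channel without knowing who consumes them, and single-purpose processors subscribe to the event types they care about. `EventBus`, `dispatch_until_empty`, the `order_placed` event and both handlers are hypothetical; a production system would use a broker such as a message queue rather than an in-process queue.

```python
from __future__ import annotations

import asyncio
from collections import defaultdict
from typing import Awaitable, Callable

Handler = Callable[[dict], Awaitable[None]]


class EventBus:
    """In-process event channel: publishers enqueue, subscribed processors consume asynchronously."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)
        self._queue: asyncio.Queue[tuple[str, dict]] = asyncio.Queue()

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    async def publish(self, event_type: str, payload: dict) -> None:
        await self._queue.put((event_type, payload))  # the producer does not know the consumers

    async def dispatch_until_empty(self) -> None:
        while not self._queue.empty():
            event_type, payload = await self._queue.get()
            await asyncio.gather(*(h(payload) for h in self._handlers[event_type]))
            self._queue.task_done()


async def main() -> list[str]:
    log: list[str] = []
    bus = EventBus()  # created inside the running event loop

    async def reserve_stock(order: dict) -> None:
        log.append(f"stock reserved for {order['id']}")

    async def send_email(order: dict) -> None:
        log.append(f"email sent for {order['id']}")

    bus.subscribe("order_placed", reserve_stock)
    bus.subscribe("order_placed", send_email)
    await bus.publish("order_placed", {"id": 1})
    await bus.dispatch_until_empty()
    return log


print(asyncio.run(main()))
```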

It is the most common architecture for monolithic applications. The basic idea behind the pattern is to divide the app logic into several layers, each encapsulating a specific role. For example, the Persistence layer would be responsible for the communication of your app with the database engine.

Layered architecture

My favourite tactic is to ask a yes/no question. What I love about this is that there’s a much lower chance that the person answering will go off on an irrelevant tangent – they’ll almost always say something useful to me.

Asking yes/no questions can be more powerful than I thought

instead of finding someone who can easily give a clear explanation, I just need to find someone who has the information I want and then ask them specific questions until I’ve learned what I want to know. And I’ve found that most people really do want to be helpful, so they’re very happy to answer questions. And if you get good at asking questions, you can often find a set of questions that will get you the answers you want pretty quickly, so it’s a good use of everyone’s time!

Explaining things is extremely hard, especially for some people. Therefore, you need to be ready to ask more specific questions

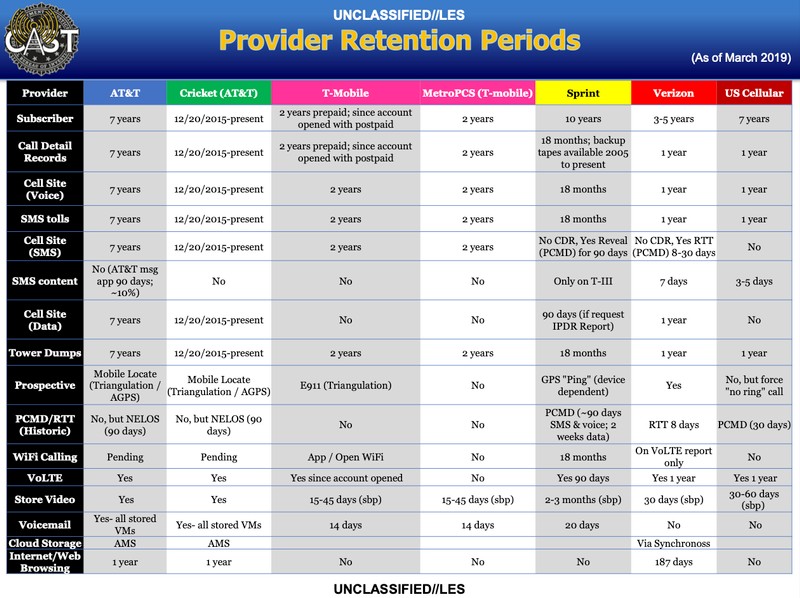

A screenshot from the document providing an overview of different data retention periods. Image: Motherboard.

Is it possible that the FBI stores this data about us?

$@ is all of the parameters passed to the script. For instance, if you call ./someScript.sh foo bar then $@ will be equal to foo bar.

Meaning of $@ in Bash
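The detail that usually matters in practice is quoting: `"$@"` keeps each argument intact, while `"$*"` joins them and an unquoted `$@` word-splits them again. A minimal sketch that calls Bash from Python, assuming `bash` is on your PATH; `expand` is a hypothetical helper name.

```python
import subprocess
from typing import List


def expand(expansion: str, *args: str) -> List[str]:
    """Run printf "[%s]\\n" <expansion> in bash with `args` as the positional parameters.

    printf repeats its format once per argument, so each resulting word gets its own brackets.
    With bash -c, the first argument after the script becomes $0, hence the "_" placeholder.
    """
    script = 'printf "[%s]\\n" ' + expansion
    result = subprocess.run(["bash", "-c", script, "_", *args], capture_output=True, text=True, check=True)
    return result.stdout.splitlines()


print(expand('"$@"', "foo", "bar baz"))  # ['[foo]', '[bar baz]']: each argument kept intact
print(expand('"$*"', "foo", "bar baz"))  # ['[foo bar baz]']: all arguments joined into one word
print(expand("$@", "foo", "bar baz"))    # ['[foo]', '[bar]', '[baz]']: unquoted, so split again
```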

Go ahead and Clear Data so you can start OneNote. Before you close OneNote, click on the Sticky Notes button, then close OneNote. OneNote will now open normally. If you forget to click on the Sticky Notes button, OneNote will break and it won't start again. When this happens you'll need to Clear Data to get OneNote started. I use OneNote every day and something changed last night.

I am having the same problem with OneNote and this is the solution

indent=True here is treated as indent=1, so it works, but I’m pretty sure nobody would intend that to mean an indent of 1 space

bool is actually not a primitive data type — it’s actually a subclass of int!

Python has only 5 primitives

complex is a supertype of float, which, in turn, is a supertype of int.

On some of Python's primitives
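The claims above are easy to check in a REPL. One caveat worth making explicit: the "complex is a supertype of float, which is a supertype of int" relation holds in the numeric-tower sense of the standard `numbers` module, not in the class hierarchy of the built-in types (`int` is not a subclass of `float`). A short sketch using only the standard library:

```python
import json
import numbers

print(issubclass(bool, int))          # True: bool really is a subclass of int
print(True + True)                    # 2
print(json.dumps([1], indent=True))   # indent=True behaves like indent=1 (one space)

# The int <: float <: complex relation lives in the numeric tower, not in the built-in classes.
print(issubclass(int, float))         # False
print(isinstance(3, numbers.Real))    # True
print(isinstance(3.0, numbers.Complex))  # True
```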

Now since the “compiling to bytecode” step above takes a noticeable amount of time when you import a module, Python stores (marshalls) the bytecode into a .pyc file, and stores it in a folder called __pycache__. The __cached__ parameter of the imported module then points to this .pyc file. When the same module is imported again at a later time, Python checks if a .pyc version of the module exists, and then directly imports the already-compiled version instead, saving a bunch of time and computation.

Python takes advantage of caching compiled imports
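To see where a module's cached bytecode goes, you can import a throwaway module and compare its `__cached__` attribute with `importlib.util.cache_from_source`. A minimal sketch; the module name `cache_demo_mod` is hypothetical, and the exact file name (for example the `cpython-3XX` tag) depends on your interpreter version.

```python
import importlib
import importlib.util
import pathlib
import sys
import tempfile

with tempfile.TemporaryDirectory() as tmp:
    source = pathlib.Path(tmp) / "cache_demo_mod.py"  # hypothetical throwaway module
    source.write_text("ANSWER = 42\n")
    sys.path.insert(0, tmp)
    importlib.invalidate_caches()
    try:
        module = importlib.import_module("cache_demo_mod")
        cached = module.__cached__
        expected = importlib.util.cache_from_source(str(source))
    finally:
        sys.path.remove(tmp)
        sys.modules.pop("cache_demo_mod", None)

print(cached)              # .../__pycache__/cache_demo_mod.<interpreter tag>.pyc
print(cached == expected)  # True: __cached__ names the compiled-bytecode file
```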

Bytecode is a set of micro-instructions for Python’s virtual machine. This “virtual machine” is where Python’s interpreter logic resides. It essentially emulates a very simple stack-based computer on your machine, in order to execute the Python code written by you.

What bytecode does

Python is compiled. In fact, all Python code is compiled, but not to machine code — to bytecode

Python is compiled to bytecode
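The standard `dis` module shows this compilation step directly: a function's bytecode is already sitting on its code object by the time the `def` statement has run. A minimal sketch; the exact opcode names in the listing vary between Python versions.

```python
import dis


def double(n: int) -> int:
    return n * 2


# The function was compiled to a code object when the def statement ran.
print(type(double.__code__).__name__)  # code
print(type(double.__code__.co_code))   # <class 'bytes'>: the raw bytecode
dis.dis(double)                        # human-readable listing of the VM instructions
```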

Python always runs in debug mode by default. The other mode that Python can run in is “optimized mode”. To run Python in “optimized mode”, you can invoke it by passing the -O flag. And all it does is prevent assert statements from doing anything (at least so far), which, in all honesty, isn’t really useful at all.

Python debug vs optimized mode
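The effect of `-O` can be demonstrated by running the same one-liner in two fresh interpreters. A minimal sketch using `sys.executable`; it assumes the `PYTHONOPTIMIZE` environment variable is not already set, since that would make the default run optimized too. Besides skipping asserts, `-O` also sets `__debug__` to `False`.

```python
import subprocess
import sys

SNIPPET = "assert False, 'boom'; print('asserts skipped, __debug__ =', __debug__)"


def run(*flags: str) -> subprocess.CompletedProcess:
    """Run SNIPPET in a fresh interpreter with the given flags."""
    return subprocess.run([sys.executable, *flags, "-c", SNIPPET], capture_output=True, text=True)


default = run()
optimized = run("-O")
print(default.returncode)  # 1: AssertionError in the default (debug) mode
print(optimized.stdout)    # asserts skipped, __debug__ = False
```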

np = __import__('numpy') # Same as doing 'import numpy as np'

This refers to the module spec. It contains metadata such as the module name, what kind of module it is, as well as how it was created and loaded.

__spec__
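Both ideas can be tried with a standard-library module so nothing needs installing. The Python documentation recommends `importlib.import_module()` over calling `__import__()` directly, but the returned module object is the same. A minimal sketch; the loader's class name is shown as an example only, since some distributions ship bytecode-only stdlib modules.

```python
import sys

json_mod = __import__("json")  # same effect as "import json"
print(json_mod is sys.modules["json"])  # True: the already-imported module object is returned

spec = json_mod.__spec__
print(spec.name)                        # json
print(spec.origin)                      # path to json/__init__.py
print(type(spec.loader).__name__)       # e.g. SourceFileLoader
print(spec.submodule_search_locations is not None)  # True: json is a package
```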

let’s say you only want to support integer addition with this class, and not floats. This is where you’d use NotImplemented

Example use case of NotImplemented:

```python
class MyNumber:
    def __add__(self, other):
        if isinstance(other, float):
            return NotImplemented
        return other + 42
```

__radd__ operator, which adds support for right-addition

```python
class MyNumber:
    def __add__(self, other):
        return other + 42

    def __radd__(self, other):
        return other + 42
```

Now I should mention that all objects in Python can add support for all Python operators, such as +, -, +=, etc., by defining special methods inside their class, such as __add__ for +, __iadd__ for +=, and so on.

For example:

```python
class MyNumber:
    def __add__(self, other):
        return other + 42
```

and then:

```python
>>> num = MyNumber()
>>> num + 3
45
```

NotImplemented is used inside a class’ operator definitions, when you want to tell Python that a certain operator isn’t defined for this class.

NotImplemented constant in Python
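Putting the snippets above together shows what `NotImplemented` actually triggers: Python then tries the other operand's reflected method (`__radd__`), and raises `TypeError` only if that also gives up. This sketch is an illustrative variant: it accepts only `int` (rather than rejecting only `float`, as in the snippet above) and reuses `__add__` for `__radd__`.

```python
class MyNumber:
    def __add__(self, other):
        if not isinstance(other, int):
            return NotImplemented  # Python will try other.__radd__, then raise TypeError
        return other + 42

    def __radd__(self, other):
        return self.__add__(other)  # 3 + MyNumber() lands here after int.__add__ gives up


num = MyNumber()
print(num + 3)  # 45
print(3 + num)  # 45

error = None
try:
    num + 1.5
except TypeError as exc:
    error = exc
    print("TypeError:", exc)
```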

Doing that would even catch KeyboardInterrupt, which would make you unable to close your program by pressing Ctrl+C.

except BaseException: ...

every exception is a subclass of BaseException, and nearly all of them are subclasses of Exception, other than a few that aren’t supposed to be normally caught.

on Python's exceptions
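The "few that aren't supposed to be normally caught" are visible in the hierarchy, and they slip past `except Exception`, which is why that is the safer catch-all. A minimal sketch; `swallow` is a hypothetical helper name.

```python
for exc_type in (ValueError, KeyboardInterrupt, SystemExit, GeneratorExit):
    print(f"{exc_type.__name__:17} Exception subclass: {issubclass(exc_type, Exception)}")


def swallow(exc: BaseException) -> str:
    """Raise `exc` and report which handler caught it."""
    try:
        try:
            raise exc
        except Exception:       # the usual, safe catch-all
            return "caught by except Exception"
    except BaseException:       # reached only by KeyboardInterrupt, SystemExit, ...
        return "escaped except Exception"


print(swallow(ValueError()))         # caught by except Exception
print(swallow(KeyboardInterrupt()))  # escaped except Exception
```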

print(dir(__builtins__))

command to get all the builtins

builtin scope in Python: It’s the scope where essentially all of Python’s top level functions are defined, such as len, range and print. When a variable is not found in the local, enclosing or global scope, Python looks for it in the builtins.

builtin scope (part of LEGB rule)

Global scope (or module scope) simply refers to the scope where all the module’s top-level variables, functions and classes are defined.

Global scope (part of LEGB rule)

you can use the nonlocal keyword in Python to tell the interpreter that you don’t mean to define a new variable in the local scope, but you want to modify the one in the enclosing scope.

nonlocal

The enclosing scope (or nonlocal scope) refers to the scope of the classes or functions inside which the current function/class lives.

Enclosing scope (part of LEGB rule)

The local scope refers to the scope that comes with the current function or class you are in.

Local scope (part of LEGB rule)

A builtin in Python is everything that lives in the builtins module.

Python's builtins
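The four LEGB levels and `nonlocal` in one small example. The function names are hypothetical. Note that `__builtins__` is a CPython implementation detail that can be a plain dict outside `__main__`, so `import builtins` with `dir(builtins)` is the more portable way to list builtins.

```python
import builtins

x = "global"                 # G: module-level (global) scope


def outer():
    x = "enclosing"          # E: enclosing scope, as seen from the nested functions

    def read_local():
        x = "local"          # L: a new name, local to read_local()
        return x

    def rebind_enclosing():
        nonlocal x           # rebind outer()'s x instead of creating a local
        x = "changed by nonlocal"

    seen = read_local()
    rebind_enclosing()
    return seen, x


print(outer())                   # ('local', 'changed by nonlocal')
print(x)                         # global: untouched by both nested functions
print(len is builtins.len)       # True: not found in L, E or G, so B (builtins) supplies it
print("print" in dir(builtins))  # True
```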

in Python 3.0 (alongside 2.6), a new method was added to the str data type: str.format. Not only was it more obvious in what it was doing, it added a bunch of new features, like dynamic data types, center alignment, index-based formatting, and specifying padding characters.

History of str.format in Python
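A few of the `str.format` features listed above, side by side. The values are arbitrary examples.

```python
print("{1} {0}".format("world", "hello"))   # hello world   (index-based)
print("[{:^9}]".format("hi"))               # [   hi    ]   (center alignment)
print("[{:*^9}]".format("hi"))              # [***hi****]   (custom padding character)
print("{:0>5}".format(7))                   # 00007
print("[{:{width}}]".format("a", width=4))  # [a   ]        (width supplied at runtime)
print("{:,.2f}".format(1234567.891))        # 1,234,567.89
```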

So, while DELETE operations are free, LIST operations (to get a list of objects) are not free (~$.005 per 1000 requests, varying a bit by region).

Deleting buckets on S3 is not free: whether you use the web console or the AWS CLI, a LIST call is executed for every 1000 objects
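The cost works out as (objects / 1000) LIST calls, billed per 1000 requests. A back-of-the-envelope sketch using the approximate ~$0.005 per 1000 requests from the quote; the real price varies by region, and `list_cost` is a hypothetical helper that ignores every other charge.

```python
import math

LIST_PRICE_PER_1000_REQUESTS = 0.005  # USD, approximate figure from the quote; varies by region
OBJECTS_PER_LIST_CALL = 1000          # one LIST call returns at most 1000 keys


def list_cost(object_count: int) -> float:
    """Estimated LIST cost (USD) of enumerating every object before deleting a bucket."""
    list_calls = math.ceil(object_count / OBJECTS_PER_LIST_CALL)
    return list_calls / 1000 * LIST_PRICE_PER_1000_REQUESTS


for n in (1_000_000, 100_000_000, 10_000_000_000):
    calls = math.ceil(n / OBJECTS_PER_LIST_CALL)
    print(f"{n:>14,} objects -> {calls:>10,} LIST calls -> ${list_cost(n):.2f}")
```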

TypedDict is a dictionary whose keys are always strings, and values are of the specified type. At runtime, it behaves exactly like a normal dictionary.

TypedDict
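A minimal `TypedDict` example. The `Movie` type is hypothetical; the key/value types are only enforced by a type checker such as mypy, while at runtime the value is an ordinary `dict`.

```python
from typing import TypedDict


class Movie(TypedDict):
    title: str  # fixed string keys...
    year: int   # ...each with its own value type


m: Movie = {"title": "Blade Runner", "year": 1982}
print(type(m) is dict)  # True: at runtime it is an ordinary dict
print(m["year"] + 1)    # 1983
# mypy would reject {"title": "Blade Runner", "year": "1982"} for this type.
```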

you should only use reveal_type to debug your code, and remove it when you’re done debugging.

Because it's only used by mypy

What this says is: “function double takes an argument n which is an int, and the function returns an int.”

```python
def double(n: int) -> int:
    return n * 2
```

This tells mypy that nums should be a list of integers (List[int]), and that average returns a float.

```python
from typing import List


def average(nums: List[int]) -> float:
    return sum(nums) / len(nums)
```

for starters, use mypy --strict filename.py

If you're starting your journey with mypy, use the --strict flag

few battle-hardened options, for instance: Airflow, a popular open-source workflow orchestrator; Argo, a newer orchestrator that runs natively on Kubernetes, and managed solutions such as Google Cloud Composer and AWS Step Functions.

Current top orchestrators:

To make ML applications production-ready from the beginning, developers must adhere to the same set of standards as all other production-grade software. This introduces further requirements:

Requirements specific to MLOps systems:

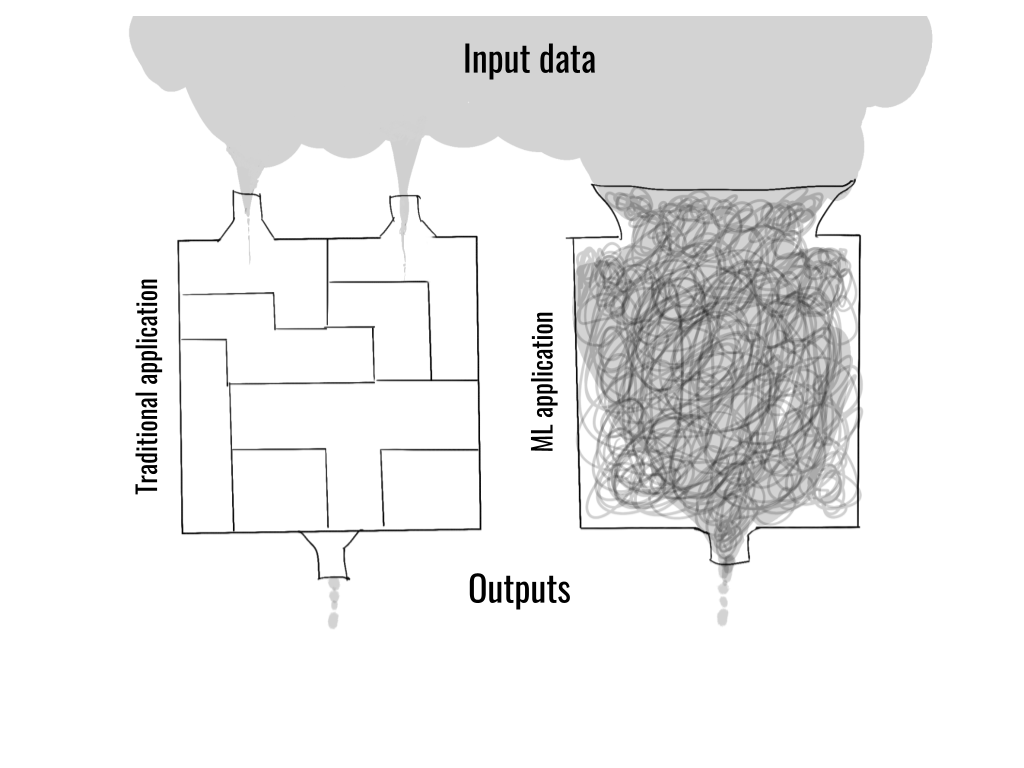

In contrast, a defining feature of ML-powered applications is that they are directly exposed to a large amount of messy, real-world data which is too complex to be understood and modeled by hand.

One of the best ways to picture a difference between DevOps and MLOps

As a character, I believe that I am quite a responsible and hardworking person. The combination of the two becomes dangerous when you lose the measure of how much you should work to get a job done right.

That sounds like me, and I completely agree with the author that it can be truly dangerous at times

UPDATE--SHA-1, the 25-year-old hash function designed by the NSA and considered unsafe for most uses for the last 15 years, has now been “fully and practically broken” by a team that has developed a chosen-prefix collision for it.

SHA-1 has been broken, so make sure not to use it in production environments

So, what’s a better way to illustrate JOIN operations? JOIN diagrams!

Apparently, SQL should be taught using JOIN diagrams not Venn diagrams?

State of Data Science and Machine Learning 2021

iOS 15.0 introduces a new feature: an iPhone can be located with Find My even while the iPhone is turned "off"

Argo Workflow is part of the Argo project, which offers a range of, as they like to call it, Kubernetes-native get-stuff-done tools (Workflow, CD, Events, Rollouts).

High level definition of Argo Workflow

Argo is designed to run on top of k8s. Not a VM, not AWS ECS, not Container Instances on Azure, not Google Cloud Run or App Engine. This means you get all the good of k8s, but also the bad.

Pros of Argo Workflow:

Cons of Argo Workflow:

If you are already heavily invested in Kubernetes, then yes look into Argo Workflow (and its brothers and sisters from the parent project). The broader and harder question you should ask yourself is: to go full k8s-native or not? Look at your team’s cloud and k8s experience, size, growth targets. Most probably you will land somewhere in the middle first, as there is no free lunch.

Should you go into Argo, or not?

In order to reduce the number of lines of text in Workflow YAML files, use WorkflowTemplate. This allows for reuse of common components.

kind: WorkflowTemplate

Wet behind the ears: the ones that have just started working as professional programmers and need lots of guidance. Sort of knows what they are doing: professional programmers who have sort of figured out what is going on and can mostly finish their tasks on their own. Experienced: the ones who have been around the block for a while and are most likely the brains behind all the major design decisions of a large software project. Coworkers usually turn to them when they need advice for a hard technical problem. Some programmers will never reach this level, despite their official title stating otherwise (but more on that later).

3 levels of programming experience:

The problem with the first approach is that it considers software development to be a deterministic process when, in fact, it’s stochastic. In other words, you can’t accurately determine how long it will take to write a particular piece of code unless you have already written it.

Software development is a stochastic process, which makes estimating it hard

Reading books, being aware of the curiosity gap, and asking a lot of questions:

Ways to boost creativity

The difference between smart and curious people and only smart people is that curiosity helps you move forward in life. If you shut the door to curiosity, you shut the door to learning. And when you don’t learn, you don’t move forward. You must be curious to learn. Otherwise, you won’t even consider learning.

Curiosity is the core drive of learning

Smart people become even smarter because they are smart enough to understand that they don’t have all the answers.

Smartness is driven by curiosity

You probably shouldn't use Alpine for Python projects, instead use the slim Docker image versions.

(have a look below this highlight for the full reasoning)

Cost — it’s by far the most affordable headset in its class, and while I have a tendency to be lavish with my gadgetry I’m still a cheapskate: I love a good deal and a favorably skewed cost/benefit ratio even more.

Oculus seems to be offering a good quality/cost ratio

Adapting to the new environment is immediate, like moving between rooms, and since the focal length in the headset matches regular human vision there’s no acclimating or adjustment.

Adaptation to VR work should be seamless

I do highly contextual work, with multiple work orders and their histories open, supporting reference documentation, API specifications, several areas of code (and calls in the stack), tests, logs, databases, and GUIs — plus Slack, Spotify, clock, calendar, and camera feeds. I tend to only look at 25% of that at once, but everything is within a comfortable glance without tabbing between windows. Protecting that context and augmenting my working memory maintains my flow.

Application types to look at during work:

With all that, we may look at around 25% of the stuff at once

Realism will increase (perhaps to hyperrealism) and our ability to perceive and interact with simulated objects and settings will be indistinguishable to our senses. Acting in simulated contexts will have physical consequences as systems interpret and project actions into the world — telepresence will take a quantum leap, removing limitations of time and distance. Transcending today’s drone piloting, remote surgery, etc., we will see through remote eyes and work through remote hands anywhere.

On the increase of realism in the future

It has a ton of promise, but… I don’t really care for the promise it’s making. 100% of what you can do in Workrooms is feasible in a physical setting, although it would be really expensive (lots of smart hardware all over the place). But that’s the thing: it’s imitating life within a tool that doesn’t share the same limitations, so as a VR veteran I find it bland and claustrophobic. That’s going to be really good for newcomers or casual users because the skeuomorphism is familiar, making it easy to immediately orient oneself and begin working together — and that illustrates a challenge in design vocabulary. While the familiar can provide a safe and comfortable starting point, the real power of VR requires training users for potentially unfamiliar use cases. Also, if you can be anywhere, why would you want to be in a meeting room, virtual-Lake Tahoe notwithstanding?

Author's feedback on why Workrooms does not use its full potential

For meeting with those not in VR, or if I have a video call that needs input rather than passive attendance, I’ll frequently use a virtual webcam to attend by avatar. It’s sufficiently demonstrative for most team meetings, and the crew has gotten used to me showing up as a digital facsimile. I’ll surface from VR and use a physical webcam for anything sensitive or personal, however.

On VR meetings with other people while they are using normal webcams

Meetings are best in person, in VR, in MURAL, and in Zoom — in that order. As a remote worker of several years, “in person” is a rarity for me — so I use VR to preserve the feeling of shared presence, of inhabiting a place with other people, especially when good spatial audio is used. Hand tracking enables meaningful gestures and animated expression, despite the avatars’ cartoonish appearance — somehow it all “just works”: your brain accepts that these people you know are embodied through these virtual puppets, and you get on with communicating instead of quibbling about missing realism (which will be a welcome improvement as it becomes available but doesn’t stop this from working right now).

Author's shared feeling over working remotely

What’s it like to actually use? In a word: comfortable. Given a few more words, I’d choose productive and effective. I can resize, reposition, add, or remove as much screen space as I need. I never have to squint or lean forward, crane my neck, hunt for an application window I just had open, or struggle to find a place for something. Many trade-offs and compromises from the past no longer apply — I put my apps in convenient locations I can see at a glance, and without getting in my way. I move myself and my gaze enough throughout the day that I’m not stiff at the end of it and experience less eye strain than I ever did with a bunch of desk-bound LCDs.

Author's reflections on working in VR. It seems like he highly values the comfort and the space for multiple windows

Since all I need is a keyboard, mouse, and a place to park myself, I’ve completely ditched the traditional desk. I can use a floor setup for part of the day and mix it up with a standing arrangement for the rest.

Working in VR, you don't need the screens in front of your eyes

Supporting 4×4 MIMO takes a lot more power, and for battery powered devices, runtime is FAR more important.

Reason why client devices still use 2x2 MIMO, not 4x4 MIMO

How did your router even get a 'rating' of 5300 Mbps in the first place? Router manufacturers combine/add the maximum physical network speeds for ALL wifi bands (usually 2 or 3 bands) in the router to produce a single aggregate (grossly inflated) Mbps number. But your client device only connects to ONE band (not all bands) on the router at once. So, '5300 Mbps' is all marketing hype.

Why routers get such a high rating

The only thing that really matters to you is the maximum speed of a single 5 GHz band (using all MIMO antennas).

What to focus on when choosing a router
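A quick arithmetic sketch of the two notes above. The per-band figures are an assumption: they are the numbers commonly quoted for tri-band "AC5300" routers (one 2.4 GHz band at 1000 Mbps and two 5 GHz bands at 2167 Mbps each), so check your own router's spec sheet. The advertised rating is the sum over all bands, while a single client only ever sees one band, and the per-band figure assumes all MIMO antennas are in use.

```python
# Assumed per-band maximum PHY rates (Mbps) for a typical tri-band "AC5300" router.
# Illustrative figures; check your router's spec sheet.
BANDS_MBPS = {
    "2.4 GHz": 1000,
    "5 GHz-1": 2167,
    "5 GHz-2": 2167,
}


def marketing_rating(bands):
    """The aggregate number on the box: the sum over all bands."""
    return sum(bands.values())


def best_single_band(bands, prefix="5 GHz"):
    """What one client can use at most: the fastest single 5 GHz band."""
    return max(rate for name, rate in bands.items() if name.startswith(prefix))


if __name__ == "__main__":
    print(marketing_rating(BANDS_MBPS))  # 5334, rounded to "5300" in marketing
    print(best_single_band(BANDS_MBPS))  # 2167
```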

You have 1 Gbps Internet, and just bought a very expensive AX11000 class router with advertised speeds of up to 11 Gbps, but when you run a speed test from your iPhone XS Max (at a distance of around 32 feet), you only get around 450 Mbps (±45 Mbps). Same for iPad Pro. Same for Samsung Galaxy S8. Same for a laptop computer. Same for most wireless clients. Why? Because that is the speed expected from these (2×2 MIMO) devices!

Reason why you may be getting slow internet speed on your client device (2x2 MIMO one)

Before we dive into the details, here's a brief summary of the most important changes:

List of the most important upcoming Python 3.10 features (see below)

It’s been a hot, hot year in the world of data, machine learning and AI.

Summary of data tools in October 2021: http://46eybw2v1nh52oe80d3bi91u-wpengine.netdna-ssl.com/wp-content/uploads/2021/09/ML-AI-Data-Landscape-2021.pdf

we will be releasing KServe 0.7 outside of the Kubeflow Project and will provide more details on how to migrate from KFServing to KServe with minimal disruptions

KFServing is now KServe

You can attach Visual Studio Code to this container by right-clicking on it and choosing the Attach Visual Studio Code option. It will open a new window and ask you which folder to open.

It seems like VS Code offers a better way to manage Docker containers

You don’t have to download them manually, as a docker-compose.yml will do that for you. Here’s the code, so you can copy it to your machine:

Sample docker-compose.yml file to download both: Kafka and Zookeeper containers

Kafka version 2.8.0 introduced early access to a Kafka version without Zookeeper, but it’s not ready yet for production environments.

In the future, Zookeeper might no longer be needed to operate Kafka

Kafka consumer — A program you write to get data out of Kafka. Sometimes a consumer is also a producer, as it puts data elsewhere in Kafka.

Simple Kafka consumer terminology

Kafka producer — An application (a piece of code) you write to get data to Kafka.

Simple producer terminology

Kafka topic — A category to which records are published. Imagine you had a large news site — each news category could be a single Kafka topic.

Simple Kafka topic terminology

Kafka broker — A single Kafka Cluster is made of Brokers. They handle producers and consumers and keep data replicated in the cluster.

Simple Kafka broker terminology

Kafka — Basically an event streaming platform. It enables users to collect, store, and process data to build real-time event-driven applications. It’s written in Java and Scala, but you don’t have to know these to work with Kafka. There’s also a Python API.

Simple Kafka terminology
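To see how these pieces relate, here is a deliberately tiny in-memory model in Python. It is not Kafka and not its Python client: the class names ToyBroker, ToyProducer and ToyConsumer are hypothetical, and real Kafka adds partitions, replication across brokers, consumer groups and networking. It only illustrates the core idea that a topic is an append-only log, a producer appends records to it, and a consumer reads from its own position (offset), so two consumers can read the same records independently.

```python
from collections import defaultdict


class ToyBroker:
    """Hypothetical stand-in for a broker: each topic is an append-only list.
    No partitions, replication, or networking; illustration only."""

    def __init__(self):
        self.topics = defaultdict(list)

    def append(self, topic, record):
        log = self.topics[topic]
        log.append(record)
        return len(log) - 1  # offset of the newly written record


class ToyProducer:
    """Writes records into a topic."""

    def __init__(self, broker):
        self.broker = broker

    def send(self, topic, record):
        return self.broker.append(topic, record)


class ToyConsumer:
    """Reads a topic from its own offset, so records are not removed when read."""

    def __init__(self, broker, topic):
        self.broker = broker
        self.topic = topic
        self.offset = 0

    def poll(self):
        log = self.broker.topics[self.topic]
        records = log[self.offset:]
        self.offset = len(log)  # remember how far we have read
        return records


if __name__ == "__main__":
    # The news-site example: one topic per category.
    broker = ToyBroker()
    producer = ToyProducer(broker)
    producer.send("sports", "match report")
    producer.send("politics", "election results")
    reader = ToyConsumer(broker, "sports")
    print(reader.poll())  # ['match report']
```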

Then I noticed in the extension details that the "Source" was listed as the location of the "bypass-paywalls-chrome-master" file. I reasoned that the extension would load from there, and I think it does at every browser start-up. So before I re-installed it, I moved the source folder to a permanent location and then dragged it over the extensions page to install it. It has never disappeared since. You need to leave the source folder in place after installation.

Solution to "Chrome automatically deleting unwanted extensions" :)

Some problems with the feature

Chapter with list of problems pattern matching brings to Python 3.10

One thing to note is that match and case are not real keywords but “soft keywords”, meaning they only operate as keywords in a match ... case block.

match and case are soft keywords

- Use variable names that are set if a case matches
- Match sequences using list or tuple syntax (like Python’s existing iterable unpacking feature)
- Match mappings using dict syntax
- Use * to match the rest of a list
- Use ** to match other keys in a dict
- Match objects and their attributes using class syntax
- Include “or” patterns with |
- Capture sub-patterns with as
- Include an if “guard” clause

pattern matching in Python 3.10 is like a switch statement + all these features

It’s tempting to think of pattern matching as a switch statement on steroids. However, as the rationale PEP points out, it’s better thought of as a “generalized concept of iterable unpacking”.

High-level description of pattern matching coming in Python 3.10

We have decided to spend some extra time refactoring the Goose Protocol. The impact of this is that we will have to push the release out a week, but it will save us time when we implement the Duck and Chicken protocols next quarter.

Good example of communicating impact

Often, when you ask someone to perform a task, you have an expectation of what the output of that task will look like. Making sure that you communicate that expectation clearly means that they are set up for success and that you get the outcome you anticipated.

As a team leader make sure to be clear in your communication

I am finding that it is often more important to bring wider context (“Ah, that would align with the work that Team Scooby are working on.") and ask the right questions (“How are we going to roll back if this fails?"), allowing the engineers (more experienced than you or not) to do their best work.

How to think as a team lead

Machine Unlearning is a new area of research that aims to make models selectively “forget” specific data points they were trained on, along with the “learning” derived from them, i.e. the influence of these training instances on model parameters. The goal is to remove all traces of a particular data point from a machine learning system without affecting the aggregate model performance.

Why is it important? Work in this area is motivated in part by growing concerns around privacy and regulations like GDPR and the “Right to be Forgotten”. While multiple companies today allow users to request that their private data be deleted, there is no way to request that all context learned by algorithms from this data be deleted as well. Furthermore, as we have covered previously, ML models suffer from information leakage, and machine unlearning can be an important lever to combat this. In our view, there is another important reason why machine unlearning is important: it can help make recurring model training more efficient by making models forget training examples that are outdated or no longer matter.

What is Machine UNlearning and why is it important
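For very simple models, exact unlearning is easy, which helps show what the research is aiming for. The sketch below is not from the source and is far simpler than the deep models this research targets: a hypothetical 1-D least-squares regression stored as running sums, where forgetting a point means subtracting its contribution. The result is exactly the model you would get by retraining from scratch without that point, which is the expensive baseline unlearning methods try to avoid.

```python
class UnlearnableLinearRegression:
    """Hypothetical helper: 1-D least-squares fit y = a*x + b kept as running sums
    (sufficient statistics), so a training point's contribution can be removed exactly."""

    def __init__(self):
        self.n = 0
        self.sx = self.sy = self.sxx = self.sxy = 0.0

    def learn(self, x, y):
        self._update(x, y, sign=+1)

    def unlearn(self, x, y):
        # Subtract exactly what learn() added for this point.
        self._update(x, y, sign=-1)

    def _update(self, x, y, sign):
        self.n += sign
        self.sx += sign * x
        self.sy += sign * y
        self.sxx += sign * x * x
        self.sxy += sign * x * y

    def params(self):
        """Return (slope, intercept) from the closed-form least-squares solution."""
        denom = self.n * self.sxx - self.sx ** 2
        if self.n < 2 or denom == 0:
            raise ValueError("need at least two distinct x values")
        a = (self.n * self.sxy - self.sx * self.sy) / denom
        b = (self.sy - a * self.sx) / self.n
        return a, b


if __name__ == "__main__":
    data = [(0, 1.0), (1, 3.0), (2, 5.2), (3, 6.9), (4, 20.0)]  # last point is to be forgotten
    model = UnlearnableLinearRegression()
    for x, y in data:
        model.learn(x, y)
    model.unlearn(4, 20.0)
    print(model.params())
```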

The difference appears to be what the primary causes of that burnout are. The top complaint was “being asked to take on more work” after layoffs, consolidations or changing priorities. Other top beefs included toxic workplaces, being asked to work faster and being micromanaged.

The real reason behind burnout

What’s left are two options, but only one for the WWW. To capture the most internet users, the best option is to use a .is TLD; however, for true anonymity and control, a .onion is superior.

The 2 most liberal domains: .is (Iceland) and .onion (world)

When you notice someone feeling anxious at work, try grabbing a notebook and helping them to get their concerns and questions onto paper. Often this takes the form of a list of to-dos or scenarios.

Fight anxiety by organizing your mind

Anxiety comes from not seeing the full picture.

Where anxiety comes from

Overreaction is often a sign that something else might be going on that you aren’t aware of. Perhaps they didn’t get enough sleep or recently had a fight with a friend. Maybe something about the situation is triggering an unresolved trauma from their childhood — a phenomenon called transference.

Possible reason for emotional overreaction

In 2020, 35% of respondents said they used Docker. In 2021, 48.85% said they used Docker. If you look at estimates for the total number of developers, they range from 10 to 25 million. That's roughly 1.4 to 3.5 million new users this year.

Rapidly growing popularity of Docker (2020 - 2021)
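The estimate is simply the change in survey share multiplied by the population estimate. A quick check in Python, assuming (as the quote implicitly does) that the survey share holds for all developers:

```python
def implied_new_users(share_before, share_after, total_developers):
    """Growth in users implied by a change in survey share.
    Assumes the survey share applies to the whole developer population."""
    return (share_after - share_before) * total_developers


# Shares from the quote; 10-25 million is the quoted range of developer-population estimates.
low = implied_new_users(0.35, 0.4885, 10_000_000)
high = implied_new_users(0.35, 0.4885, 25_000_000)
print(f"about {low / 1e6:.1f} to {high / 1e6:.1f} million new users")
# about 1.4 to 3.5 million new users
```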

kind, microk8s, or k3s are replacements for Docker Desktop. False. Minikube is the only drop-in replacement. The other tools require a Linux distribution, which makes them a non-starter on macOS or Windows. Running any of these in a VM misses the point – you don't want to be managing the Kubernetes lifecycle and a virtual machine lifecycle. Minikube abstracts all of this.

At the current moment the best approach is to use minikube with a preferred backend (Docker Engine and Podman are already there), and you can simply run one command to configure Docker CLI to use the engine from the cluster.

The best practice is this:
- #!/usr/bin/env bash
- #!/usr/bin/env sh
- #!/usr/bin/env python

The best shebang convention: #!/usr/bin/env bash.

However, at the same time it might be a security risk if the bash found first on $PATH is actually malware. In that case it may be better to point to it directly with #!/bin/bash

As Python supports virtual environments, using /usr/bin/env python will make sure that your script runs inside the virtual environment if you are inside one, whereas /usr/bin/python will run outside the virtual environment.

Important difference between /usr/bin/env python and /usr/bin/python
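One way to see this difference for yourself: save the sketch below as an executable script and run it once with a virtual environment activated and once without. With #!/usr/bin/env python, the interpreter it reports follows whichever python comes first on $PATH; a hard-coded #!/usr/bin/python would always report the system one. (On systems that only ship python3, the shebang would need to be #!/usr/bin/env python3.) The check relies on the standard venv module's behaviour of keeping sys.base_prefix pointed at the base installation.

```python
#!/usr/bin/env python
"""Report which interpreter was resolved and whether it belongs to a virtual environment."""
import sys


def in_virtualenv():
    # Inside a venv, sys.prefix points at the venv directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print(f"interpreter: {sys.executable}")
    print(f"inside a virtual environment: {in_virtualenv()}")
```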

Here's my bash boilerplate with some sane options explained in the comments

Clearly explained use of the typical bash script commands: set -euxo pipefail
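What each option changes is easiest to see by running tiny snippets. The harness below is a Python sketch that assumes bash is installed and on PATH; it runs each snippet with bash -c and reports the exit status and standard output. The -x option is left out because it only prints a trace of each command to stderr.

```python
import subprocess


def run_bash(script):
    """Run a snippet with `bash -c`; return (exit status, stdout). Assumes bash is on PATH."""
    result = subprocess.run(["bash", "-c", script], capture_output=True, text=True)
    return result.returncode, result.stdout


if __name__ == "__main__":
    # Without pipefail, a pipeline's status is its last command's, so the failure is hidden.
    print(run_bash("false | true"))  # (0, '')
    # With pipefail, the pipeline's status is non-zero if any command in it fails.
    print(run_bash("set -o pipefail; false | true"))  # (1, '')
    # -e: bash exits at the first failing command, so the echo never runs.
    print(run_bash("set -e; false; echo unreachable"))  # (1, '')
    # -u: expanding an unset variable is an error instead of silently giving "".
    print(run_bash('set -u; echo "$NOT_SET_ANYWHERE"; echo after'))
```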

This post is about all the major disadvantages of Julia. Some of it will just be rants about things I particularly don't like - hopefully they will be informative, too.

It seems like Julia is not just about pros:

And back in the day, everything was GitHub issues. The company internal blog was an issue-only repository. Blog posts were issues, you’d comment on them with comments on issues. Sales deals were tracked with issue threads. Recruiting was in issues - an issue per candidate. All internal project planning was tracked in issues.

Interesting how versatile GitHub Issues can be

set -euo pipefail

One simple line to improve the robustness of bash scripts:

- -e: Exit immediately if any command fails.
- -u: Exit if an unset variable is referenced.
- -o pipefail: Exit if a command in a piped series of commands fails.

The real world is the polar opposite. You’ll have some ultra-vague end goal, like “help people in sub-Saharan Africa solve their money problems,” based on which you’ll need to prioritize many different sub-problems. A solution’s performance has many different dimensions (speed, reliability, usability, repeatability, cost, …)—you probably don’t even know what all the dimensions are, let alone which are the most important.

How real world problems differ from the school ones

k3d is basically running k3s inside of Docker. It provides an instant benefit over using k3s on a local machine, that is, multi-node clusters. Running inside Docker, we can easily spawn multiple instances of our k3s Nodes.

k3d <--- k3s inside Docker, which allows running multi-node clusters on a local machine

Kubernetes in Docker (KinD) is similar to minikube, but it does not spawn VMs to run clusters and works only with Docker. KinD for the most part has the fewest bells and whistles and offers an intuitive developer experience for getting started with Kubernetes in no time.

KinD (Kubernetes in Docker) <--- sounds like the most recommended solution to learn k8s locally

Contrary to the name, it comes in a larger binary of 150 MB+. It can be run as a binary or in DinD mode. k0s takes security seriously and out of the box, it meets the FIPS compliance.

k0s <--- similar to k3s, but not as lightweight

k3s is a lightweight Kubernetes distribution from Rancher Labs. It is specifically targeted for running on IoT and Edge devices, meaning it is a perfect candidate for your Raspberry Pi or a virtual machine.

k3s <--- lightweight solution

All of the tools listed here offer more or less the same features, including but not limited to

7 tools for learning k8s locally:

There are multiple tools for running Kubernetes on your local machine, but it basically boils down to two approaches on how it is done

We can run Kubernetes locally as a:

Before we move on to talk about all the tools, it will be beneficial if you installed arkade on your machine.

With arkade, we can quickly set up different k8s tools, while using a single command:

e.g. arkade get k9s

The hard truth that many companies struggle to wrap their heads around is that they should be paying their long-tenured engineers above market rate. This is because an engineer that’s been working at a company for a long time will be more impactful specifically at that company than at any other company.

Engineer's market value over time:

This one implements the behavior of git checkout when running it only against a branch name. So you can use it to switch between branches or commits.

git switch <branch_name>

When you provide just a branch or commit as an argument for git checkout, then it will change all your files to their state in the corresponding revision, but if you also specify a filename, it will only change the state of that file to match the specified revision.

git checkout has a 2nd option that most of us skipped

if you are in the develop branch and want to change the test.txt file to be the version from the main branch, you can do it like this

git checkout main -- test.txt

CBL-Mariner is an internal Linux distribution for Microsoft’s cloud infrastructure and edge products and services.

CBL-Mariner <--- Microsoft's Linux distribution

Open source is not a good business model. If you want to make money, do literally anything else: try to sell software, do consulting, build a SaaS and charge monthly for it, rob a bank.

Open-source software is not money friendly

The only way for one person to even attempt a cross-platform app is to use a UI abstraction layer like Qt, WxWidgets or Gtk. The problem is that Gtk is ugly, Qt is extremely bloated and WxWidgets barely works.

Why releasing cross-platform apps can make them ugly

However, human babies are also able to immediately detect objects and identify motion, such as a finger moving across their field of vision, suggesting that their visual system was also primed before birth.

A new Yale study suggests that, in a sense, mammals dream about the world they are about to experience before they are even born.

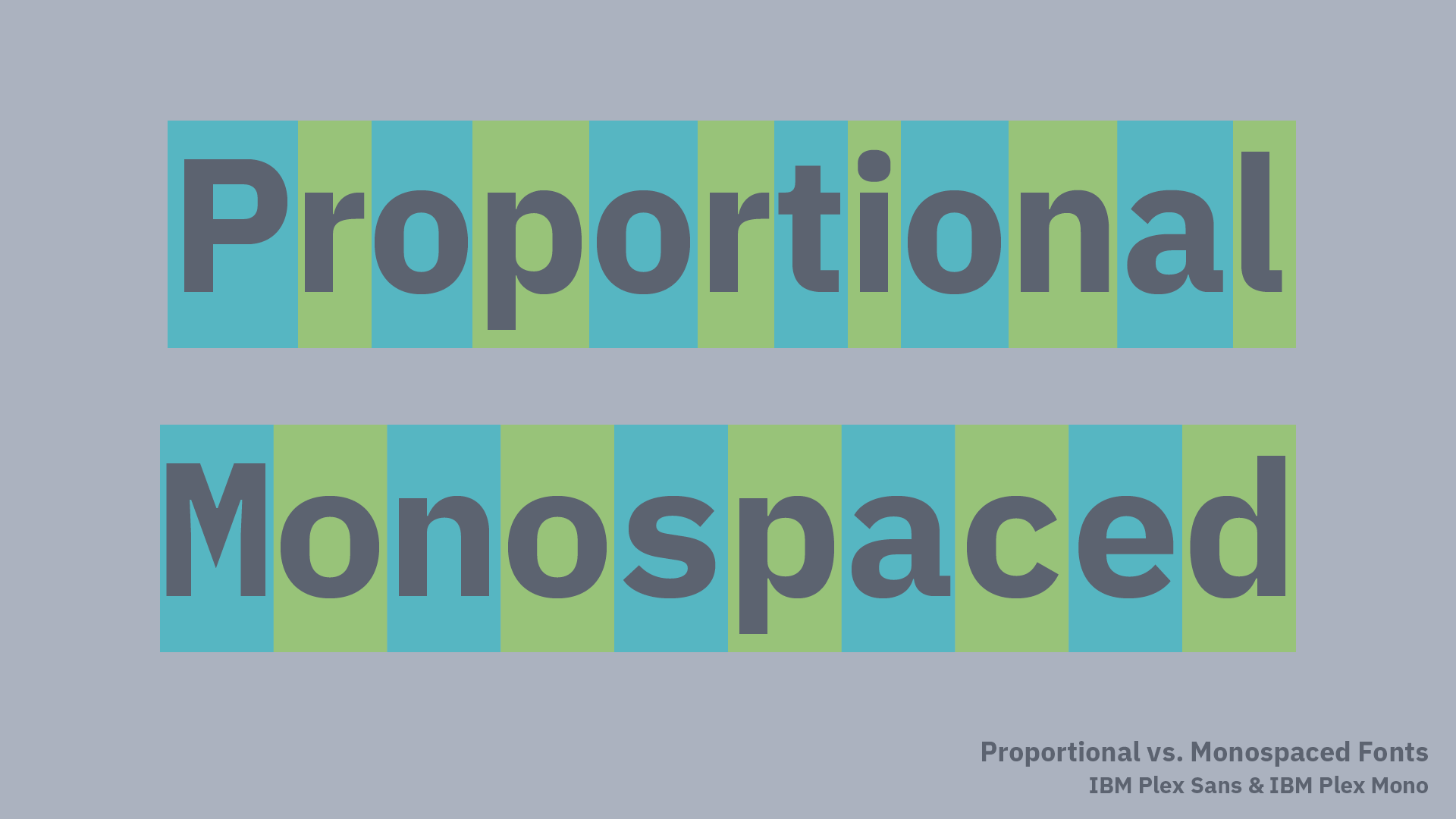

The visual and spacing differences between proportional and monospaced fonts

Proportional vs Monospaced fonts:

you don’t want to miss out on a great engineer just because they spent all of their energy making great products for prior employers rather than blogging, speaking and coding in public.

valuable HR tip

Why do 87% of data science projects never make it into production?

It turns out that this phrase doesn't trace back to any existing research. If one goes down the rabbit hole, it all ends in dead links.

Cats don't understand smiles, but they have an equivalent: slow blinking. Slow blinking means: we are cool, we are friends. Beyond that, you can just physically pet them and use a gentle voice.

Equivalent of smiling in cats language = slow blinking

PyPA still advertises pipenv all over the place and only mentions poetry a couple of times, although poetry seems to be the more mature product.

Sounds like PyPA does not like poetry as much for political/business reasons

The main selling point for Anaconda back then was that it provided pre-compiled binaries. This was especially useful for data-science-related packages, which depend on libatlas, liblapack, libopenblas, etc. and need to be compiled for the target system.

Reason why Anaconda got so popular

Many of Python’s standard tools allow already for configuration in pyproject.toml so it seems this file will slowly replace the setup.cfg and probably setup.py and requirements.txt as well. But we’re not there yet.

Potential future of pyproject.toml
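As an illustration of one file carrying both package metadata and tool settings, here is a hypothetical pyproject.toml (project name and values made up) parsed with the standard library's tomllib, available since Python 3.11; on older versions the third-party tomli package offers the same loads function. The [project] table is the standardized metadata table, and [tool.*] tables are where tools such as black and pytest read their settings.

```python
try:
    import tomllib  # standard library since Python 3.11
except ModuleNotFoundError:  # older Pythons: third-party "tomli" has the same API
    import tomli as tomllib

# Hypothetical pyproject.toml content: metadata, dependencies and tool settings in one file.
PYPROJECT = """
[project]
name = "example-app"
version = "0.1.0"
dependencies = ["requests>=2.28"]

[tool.black]
line-length = 100

[tool.pytest.ini_options]
addopts = "-q"
"""

config = tomllib.loads(PYPROJECT)
print(config["project"]["dependencies"])  # ['requests>=2.28']
print(config["tool"]["black"]["line-length"])  # 100
print(config["tool"]["pytest"]["ini_options"])  # {'addopts': '-q'}
```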

When I'm at the very beginning of a learning journey, I tend to focus primarily on guided learning. It's difficult to build anything in an unguided way when I'm still grappling with the syntax and the fundamentals! As I become more comfortable, though, the balance shifts.

Finding the right balance while learning. For example, start with guided and eventually move to unguided learning:

I try and act like a scientist. If I have a hypothesis about how this code is supposed to work, I test that hypothesis by changing the code, and seeing if it breaks in the way I expect. When I discover that my hypothesis is flawed, I might detour from the tutorial and do some research on Google. Or I might add it to a list of "things to explore later", if the rabbit hole seems to go too deep.

Sounds like shotgun debugging is not the worst method to learn programming

Things never go smoothly when it comes to software development. Inevitably, we'll hit a rough patch where the code doesn't do what we expect. This can either lead to a downward spiral—one full of frustration and self-doubt and impostor syndrome—or it can be seen as a fantastic learning opportunity. Nothing helps you learn faster than an inscrutable error message, if you have the right mindset. Honestly, we learn so much more from struggling and failing than we do from effortless success. With a growth mindset, the struggle might not be fun exactly, but it feels productive, like a good workout.

Cultivating a growth mindset while learning programming

I had a concrete goal, something I really wanted, I was able to push through the frustration and continue making progress. If I had been learning this stuff just for fun, or because I thought it would look good on my résumé, I would have probably given up pretty quickly.

To truly learn something, it is good to have a concrete GOAL; otherwise you might not push yourself as hard