Any import statement compiles to a series of bytecode instructions, one of which, called IMPORT_NAME, imports the module by calling the built-in __import__() function.

All the Python import statements come down to the __import__() function
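A minimal sketch of the point above, using only the standard library `dis` module: it checks that a compiled import statement contains an `IMPORT_NAME` instruction and then calls `__import__()` directly. The choice of `math` as the imported module is only illustrative.

```python
import dis

# Compile a one-line import statement and collect its opcode names.
code = compile("import math", "<example>", "exec")
opnames = [instruction.opname for instruction in dis.get_instructions(code)]

# IMPORT_NAME is the instruction that triggers the actual import.
has_import_name = "IMPORT_NAME" in opnames

# Calling the built-in directly is roughly what the statement does.
math_module = __import__("math")
root = math_module.sqrt(16)

if __name__ == "__main__":
    print(opnames)
    print(has_import_name, root)
```

The exact list of surrounding opcodes varies between Python versions, so the sketch only checks for the presence of `IMPORT_NAME`.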

Furthermore, in order to build a comprehensive pipeline, the code quality, unit test, automated test, infrastructure provisioning, artifact building, dependency management and deployment tools involved have to connect using APIs and extend the required capabilities using IaC.

Vital components of a pipeline

59% of organizations are following agile principles, but only 4% of these organizations are getting the full benefit.

databases is an async SQL query builder that works on top of the SQLAlchemy Core expression language.

databases Python package

The fact that FastAPI does not come with a development server is both a positive and a negative in my opinion. On the one hand, it does take a bit more to serve up the app in development mode. On the other, this helps to conceptually separate the web framework from the web server, which is often a source of confusion for beginners when one moves from development to production with a web framework that does have a built-in development server (like Django or Flask).

FastAPI does not include a web server like Flask. Therefore, it requires Uvicorn.

Not having a web server has pros and cons listed here

FastAPI makes it easy to deliver routes asynchronously. As long as you don't have any blocking I/O calls in the handler, you can simply declare the handler as asynchronous by adding the async keyword like so:

FastAPI makes it effortless to convert synchronous handlers to asynchronous ones

Fixtures are created when first requested by a test, and are destroyed based on their scope: function: the default scope, the fixture is destroyed at the end of the test.

Fixtures can be executed in 5 different scopes, where function is the default one:

When pytest goes to run a test, it looks at the parameters in that test function’s signature, and then searches for fixtures that have the same names as those parameters. Once pytest finds them, it runs those fixtures, captures what they returned (if anything), and passes those objects into the test function as arguments.

What happens when we include fixtures in our testing code
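The name-matching step described above can be imitated in a few lines. This is a toy sketch, not pytest's implementation: `FIXTURES`, `fixture` and `run_test` are hypothetical names invented here, and scopes and teardown are ignored.

```python
import inspect

# Hypothetical registry mapping fixture names to the functions that build them.
FIXTURES = {}


def fixture(func):
    """Register a function as a fixture under its own name."""
    FIXTURES[func.__name__] = func
    return func


def run_test(test_func):
    """Call test_func, filling each parameter from the fixture with the same name."""
    params = inspect.signature(test_func).parameters
    kwargs = {name: FIXTURES[name]() for name in params}
    return test_func(**kwargs)


@fixture
def user():
    return {"name": "alice"}


def check_user_has_name(user):
    # The parameter name "user" is what links this test to the fixture above.
    assert user["name"] == "alice"
    return "passed"


result = run_test(check_user_has_name)
```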

“Fixtures”, in the literal sense, are each of the arrange steps and data. They’re everything that test needs to do its thing.

As a reminder, a test consists of 4 steps:

(pytest) fixtures are generally the arrange (set up) operations that need to be performed before the act (running the tests). However, fixtures can also perform the act step.

Get the `curl-format.txt` from GitHub and then run this curl command in order to get the output: $ curl -L -w "@curl-format.txt" -o tmp -s $YOUR_URL

Testing server latency with curl:

1) Get this file from GitHub

2) Run the curl: curl -L -w "@curl-format.txt" -o tmp -s $YOUR_URL

There is a drawback: docker-compose runs on a single node, which makes scaling hard, manual and very limited. To be able to scale services across multiple hosts/nodes, orchestrators like docker-swarm or kubernetes come into play.

We had to build the image for our Python API every time we changed the code, we had to run each container separately, and we had to manually ensure that our database container was running first. Moreover, we had to create a network beforehand so that we could connect the containers, and we had to add these containers to that network, which we called mynet back then. With docker-compose we can forget about all of that.

Things resolved by docker-compose

A deep interest in a topic makes people work harder than any amount of discipline can.

deep interest = working harder

Whereas what drives me now, writing essays, is the flaws in them. Between essays I fuss for a few days, like a dog circling while it decides exactly where to lie down. But once I get started on one, I don't have to push myself to work, because there's always some error or omission already pushing me.

Potential drive to write essays

And if you think there's something admirable about working too hard, get that idea out of your head. You're not merely getting worse results, but getting them because you're showing off — if not to other people, then to yourself.

It is not right to work hard

That limit varies depending on the type of work and the person. I've done several different kinds of work, and the limits were different for each. My limit for the harder types of writing or programming is about five hours a day. Whereas when I was running a startup, I could work all the time. At least for the three years I did it; if I'd kept going much longer, I'd probably have needed to take occasional vacations.

The limits of work vary by the type of work you're doing

There are three ingredients in great work: natural ability, practice, and effort. You can do pretty well with just two, but to do the best work you need all three: you need great natural ability and to have practiced a lot and to be trying very hard.

3 ingredients of great work:

One thing I know is that if you want to do great things, you'll have to work very hard.

Obvious, yet good to remember

rebase is really nothing more than a merge (or a series of merges) that deliberately forgets one of the parents of each merge step.

What rebase really is

Here is how you can create a fully configured new project in just a couple of minutes (assuming you have pyenv and poetry installed already).

Fast track setup of a new Python project

After reading through PEP8, you may wonder if there is a way to automatically check and enforce these guidelines? Flake8 does exactly this, and a bit more. It works out of the box, and can be configured in case you want to change some specific settings.

Flake8 does PEP8 and a bit more

Pylint is a very strict and nit-picky linter. Google uses it for their Python projects internally according to their guidelines. Because of its nature, you’ll probably spend a lot of time fighting or configuring it. Which is maybe not bad, by the way. Such strictness can produce safer code, but at the cost of longer development time.

Pylint is a very strict linter embraced by Google

The goal of this tutorial is to describe the Python development ecosystem.

tl;dr:

INSTALLATION:

TESTING:

REFACTORING:

For Windows, there is pyenv for Windows - https://github.com/pyenv-win/pyenv-win. But you’d probably be better off with Windows Subsystem for Linux (WSL), then installing it the Linux way.

You can install pyenv for Windows, but maybe it's better to go the WSL way

There are often multiple versions of python interpreters and pip versions present. Using python -m pip install <library-name> instead of pip install <library-name> will ensure that the library gets installed into the default python interpreter.

Potential solution for Python's ImportError after a successful pip installation
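When the install is triggered from a script, the same idea can be applied by running pip through `sys.executable`, so the package lands in the interpreter that is running the script. A minimal sketch; `pip_install_command` and `install` are hypothetical helper names, and `requests` is only a placeholder package.

```python
import subprocess
import sys


def pip_install_command(package):
    """Build a pip command bound to the interpreter running this script."""
    return [sys.executable, "-m", "pip", "install", package]


def install(package):
    """Run the install; raises CalledProcessError if pip fails."""
    subprocess.run(pip_install_command(package), check=True)


# Only build the command here; call install("requests") to actually run it.
command = pip_install_command("requests")
```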

In addition to SQLAlchemy core queries, you can also perform raw SQL queries

Instead of SQLAlchemy core query:

query = notes.insert()

values = [

{"text": "example2", "completed": False},

{"text": "example3", "completed": True},

]

await database.execute_many(query=query, values=values)

One can write a raw SQL query:

query = "INSERT INTO notes(text, completed) VALUES (:text, :completed)"

values = [

{"text": "example2", "completed": False},

{"text": "example3", "completed": True},

]

await database.execute_many(query=query, values=values)

=================================

The same goes with fetching in SQLAlchemy:

query = notes.select()

rows = await database.fetch_all(query=query)

And doing the same with raw SQL:

query = "SELECT * FROM notes WHERE completed = :completed"

rows = await database.fetch_all(query=query, values={"completed": True})
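The `:name` placeholder style used in the raw queries above can be tried without a running database server. The sketch below deliberately swaps in the synchronous standard-library `sqlite3` module instead of `databases`, so it shows only the parameter binding, not the async API; the `notes` table layout is assumed from the queries above.

```python
import sqlite3

# In-memory database so the sketch leaves nothing behind.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE notes (text TEXT, completed BOOLEAN)")

insert_query = "INSERT INTO notes(text, completed) VALUES (:text, :completed)"
values = [
    {"text": "example2", "completed": False},
    {"text": "example3", "completed": True},
]
# Each dict is bound to the named placeholders, one row per dict.
connection.executemany(insert_query, values)

select_query = "SELECT text FROM notes WHERE completed = :completed"
rows = connection.execute(select_query, {"completed": True}).fetchall()
connection.close()
```

Binding values through placeholders rather than string formatting is also what protects raw queries from SQL injection.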

This means that an event-driven system focuses on addressable event sources while a message-driven system concentrates on addressable recipients. A message can contain an encoded event as its payload.

Event-Driven vs Message-Driven

we want systems that are Responsive, Resilient, Elastic and Message Driven. We call these Reactive Systems.

Reactive Systems:

as a result, they are:

Resilience is achieved by replication, containment, isolation and delegation.

Components of resilience

Today applications are deployed on everything from mobile devices to cloud-based clusters running thousands of multi-core processors. Users expect millisecond response times and 100% uptime. Data is measured in Petabytes.

Today's demands from users

Even though Kubernetes is moving away from Docker, it will always support the OCI and Docker image formats. Kubernetes doesn’t pull and run images itself, instead the Kubelet relies on container engines like CRI-O and containerd to pull and run the images. These are the two main container engines used with Kubernetes and they both support the Docker and OCI image formats, so no worries on this one.

Reason why one should not be worried about k8s deprecating Docker

We comment out the failed line, and the Dockerfile now looks like this:

To test a failing Dockerfile step, it is best to comment it out, successfully build an image, and then run the failing command from inside a container started from that image

Some options (you will have to use your own judgment, based on your use case)

4 different options to install Poetry through a Dockerfile

When you have one layer that downloads a large temporary file and you delete it in another layer, that has the result of leaving the file in the first layer, where it gets sent over the network and stored on disk, even when it's not visible inside your container. Changing permissions on a file also results in the file being copied to the current layer with the new permissions, doubling the disk space and network bandwidth for that file.

Things to watch out for in Dockerfile operations

making sure the longest RUN commands come first and in their own layer (again, to be cached), instead of being chained with other RUN commands: if one of those fails, the long command will have to be re-executed. If that long command is isolated in its own (Dockerfile line)/layer, it will be cached.

Optimising Dockerfile is not always as simple as MIN(layers). Sometimes, it is worth keeping more than a single RUN layer

Docker has a default entrypoint which is /bin/sh -c but does not have a default command.

This StackOverflow answer is a good explanation of the purpose behind the ENTRYPOINT and CMD commands

To prevent this skew, companies like DoorDash and Etsy log a variety of data at online prediction time, like model input features, model outputs, and data points from relevant production systems.

Log inputs and outputs of your online models to prevent training-serving skew

idempotent jobs — you should be able to run the same job multiple times and get the same result.

Encourage idempotency
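One common way to get this property is to key a job's output by its input partition and overwrite rather than append. A minimal sketch under that assumption; the in-memory `store` dict stands in for a real table or bucket, and `run_daily_job` is a hypothetical name.

```python
def run_daily_job(store, day, records):
    """Write the total for `day`, replacing any earlier result for that day."""
    # Keying the output by day and overwriting (not appending) makes reruns safe.
    store[day] = sum(records)
    return store


store = {}
run_daily_job(store, "2021-06-01", [1, 2, 3])
first = dict(store)

run_daily_job(store, "2021-06-01", [1, 2, 3])  # rerun of the same job
second = dict(store)
```

Had the job appended to a list instead, the second run would have duplicated the result, which is the failure mode idempotency is meant to rule out.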

Uber and Booking.com’s ecosystem was originally JVM-based but they expanded to support Python models/scripts. Spotify made heavy use of Scala in the first iteration of their platform until they received feedback like: some ML engineers would never consider adding Scala to their Python-based workflow.

Python might be even more popular due to MLOps

Spotify has a CLI that helps users build Docker images for Kubeflow Pipelines components. Users rarely need to write Docker files.

Spotify approach towards writing Dockerfiles for Kubeflow Pipelines

Most serving systems are built in-house, I assume for similar reasons as a feature store — there weren’t many serving tools until recently and these companies have stringent production requirements.

The reason many feature stores and model serving tools are built in-house may be that there were not many open-source tools until recently

Models require a dedicated system because their behavior is determined not only by code, but also by the training data, and hyper-parameters. These three aspects should be linked to the artifact, along with metrics about performance on hold-out data.

Why model registry is a must in MLOps

five ML platform components stand out which are indicated by the green boxes in the diagram below

we employed a three-stage strategy for validating and deploying the latest binary of the Real-time Prediction Service: staging integration test, canary integration test, and production rollout. The staging integration test and canary integration tests are run against non-production environments. Staging integration tests are used to verify the basic functionalities. Once the staging integration tests have been passed, we run canary integration tests to ensure the serving performance across all production models. After ensuring that the behavior for production models will be unchanged, the release is deployed onto all Real-time Prediction Service production instances, in a rolling deployment fashion.

3-stage strategy for validating and deploying the latest binary of the Real-time Prediction Service:

We add auto-shadow configuration as part of the model deployment configurations. Real-time Prediction Service can check on the auto-shadow configurations, and distribute traffic accordingly. Users only need to configure shadow relations and shadow criteria (what to shadow and how long to shadow) through API endpoints, and make sure to add features that are needed for the shadow model but not for the primary model.

auto-shadow configuration

In a gradual rollout, clients fork traffic and gradually shift the traffic distribution among a group of models. In shadowing, clients duplicate traffic on an initial (primary) model to apply on another (shadow) model.

gradual rollout (model A,B,C) vs shadowing (model D,B):
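The difference between forking and duplicating traffic can be sketched as two small routing functions. This is an illustration only, not the service's actual code: the function names, the weights and the lambda "models" are all assumptions made up for the example.

```python
import random


def gradual_rollout(request, models, weights, rng):
    """Fork traffic: each request is served by exactly one model, picked by weight."""
    name = rng.choices(list(models), weights=weights, k=1)[0]
    return name, models[name](request)


def shadowing(request, primary, shadow):
    """Duplicate traffic: the primary answers, the shadow only sees a copy."""
    primary_result = primary(request)
    shadow_result = shadow(request)  # kept for comparison, never sent to the client
    return primary_result, shadow_result


models = {"A": lambda x: x + 1, "B": lambda x: x + 2, "C": lambda x: x + 3}
model_d = lambda x: x * 2

served_by, result = gradual_rollout(10, models, weights=[80, 15, 5], rng=random.Random(0))
primary_result, shadow_result = shadowing(10, primary=models["B"], shadow=model_d)
```

Shifting the rollout then amounts to changing `weights` over time, while shadowing leaves the client-visible result untouched.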

we built a model auto-retirement process, wherein owners can set an expiration period for the models. If a model has not been used beyond the expiration period, the Auto-Retirement workflow, in Figure 1 above, will trigger a warning notification to the relevant users and retire the model.

Model Auto-Retirement - without it, we may observe unnecessary storage costs and an increased memory footprint
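The expiration check at the heart of such a workflow could look roughly like the sketch below. The model names, the 90-day period and the `models_to_retire` helper are hypothetical; the warning notification and the retirement itself are left out.

```python
from datetime import datetime, timedelta


def models_to_retire(last_used, expiration, now):
    """Return names of models that have gone unused for longer than `expiration`."""
    return sorted(
        name for name, used_at in last_used.items() if now - used_at > expiration
    )


now = datetime(2021, 6, 30)
last_used = {
    "ranker_v1": datetime(2021, 1, 1),   # idle for about six months
    "ranker_v2": datetime(2021, 6, 29),  # used yesterday
}
expired = models_to_retire(last_used, expiration=timedelta(days=90), now=now)
```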

For helping machine learning engineers manage their production models, we provide tracking for deployed models, as shown above in Figure 2. It involves two parts:

Things to track in model deployment (listed below)

Model deployment does not simply push the trained model into Model Artifact & Config store; it goes through the steps to create a self-contained and validated model package

3 steps (listed below) are executed to validate the packaged model

we implemented dynamic model loading. The Model Artifact & Config store holds the target state of which models should be served in production. Realtime Prediction Service periodically checks that store, compares it with the local state, and triggers loading of new models and removal of retired models accordingly. Dynamic model loading decouples the model and server development cycles, enabling faster production model iteration.

Dynamic Model Loading technique
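The periodic check described above is essentially a reconciliation between a target state and a local state. A minimal sketch, assuming each model version is identified by a string such as `model_a:v2` (an invented convention) and that both states fit in plain sets:

```python
def reconcile(target_state, local_state):
    """Compare the store's target models with what is loaded locally."""
    to_load = sorted(target_state - local_state)    # in the store, not yet served
    to_remove = sorted(local_state - target_state)  # served, but retired in the store
    return to_load, to_remove


target_state = {"model_a:v2", "model_b:v1"}
local_state = {"model_a:v1", "model_b:v1"}
to_load, to_remove = reconcile(target_state, local_state)
```

Running this on a timer and acting on the two lists is what lets models change without redeploying the server.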

The first challenge was to support a large volume of model deployments on a daily basis, while keeping the Real-time Prediction Service highly available.

A typical MLOps use case

pip install "poetry==$POETRY_VERSION"

Install Poetry with pip to control its version

To address this problem, I offer git undo, part of the git-branchless suite of tools. To my knowledge, this is the most capable undo tool currently available for Git. For example, it can undo bad merges and rebases with ease, and there are even some rare operations that git undo can undo which can’t be undone with git reflog.

You can use git undo through git-branchless.

There's also GitUp, but only for macOS

the logical and physical page addresses are decoupled. A mapping table, which is stored on the SSD, translates logical (software) addresses to physical (flash) locations. This component is also called Flash Translation Layer (FTL).

Flash Translation Layer (FTL)

NAND flash pages cannot be overwritten. Page writes can only be performed sequentially within blocks that have been erased beforehand.

Overwriting data on SSDs
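The two notes above (a mapping table plus pages that cannot be overwritten) can be combined into a toy model. This is a deliberately simplified sketch: real FTLs also handle blocks, erasure and garbage collection, and `ToyFTL` is a made-up name.

```python
class ToyFTL:
    """Toy flash translation layer: logical pages map to append-only physical pages."""

    def __init__(self):
        self.flash = []     # physical pages, written sequentially, never overwritten
        self.mapping = {}   # logical page number -> physical page index
        self.stale = set()  # physical pages holding outdated data, awaiting erase

    def write(self, logical_page, data):
        # An "overwrite" goes to a fresh physical page; the old one is marked stale.
        if logical_page in self.mapping:
            self.stale.add(self.mapping[logical_page])
        self.flash.append(data)
        self.mapping[logical_page] = len(self.flash) - 1

    def read(self, logical_page):
        return self.flash[self.mapping[logical_page]]


ftl = ToyFTL()
ftl.write(0, "v1")
ftl.write(0, "v2")  # same logical address, new physical location
```

After the second write, logical page 0 points at physical page 1, while physical page 0 still holds the old data until it is erased.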

For example, if one looks at write latency, one may measure results as low as 10us – 10 times faster than a read. However, latency only appears so low because SSDs are caching writes on volatile RAM. The actual write latency of NAND flash is about 1ms – 10 times slower than a read.

SSDs writes aren't as fast as they seem to be

Another important difference between disks and SSDs is that disks have one disk head and perform well only for sequential accesses. SSDs, in contrast, consist of dozens or even hundreds of flash chips ("parallel units"), which can be accessed concurrently.

2nd difference between SSDs and HDDs

SSDs are often referred to as disks, but this is misleading as they store data on semiconductors instead of a mechanical disk.

1st difference between SSDs and HDDs

It basically takes any command line arguments passed to entrypoint.sh and execs them as a command. The intention is basically "Do everything in this .sh script, then in the same shell run the command the user passes in on the command line".

What is the use of this part in a Docker entry point:

#!/bin/bash

set -e

... code ...

exec "$@"

The alternative for curl is a credential file: A .netrc file can be used to store credentials for servers you need to connect to. And for mysql, you can create option files: a .my.cnf or an obfuscated .mylogin.cnf will be read on startup and can contain your passwords.

Linux keyring offers several scopes for storing keys safely in memory that will never be swapped to disk. A process or even a single thread can have its own keyring, or you can have a keyring that is inherited across all processes in a user’s session. To manage the keyrings and keys, use the keyctl command or keyctl system calls.

Linux keyring is a lightweight secrets manager in the Linux kernel that is worth considering

Docker container can call out to a secrets manager for its secrets. But, a secrets manager is an extra dependency. Often you need to run a secrets manager server and hit an API. And even with a secrets manager, you may still need Bash to shuttle the secret into your target application.

Secrets manager in Docker is not a bad option but adds more dependencies

Using environment variables for secrets is very convenient. And we don’t recommend it because it’s so easy to leak things

If possible, avoid using environment variables for passing secrets

As the sanitized example shows, a pipeline is generally an excellent way to pass secrets around, if the program you’re using will accept a secret via STDIN.

Piped secrets are generally an excellent way to pass secrets
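The same pattern works when the parent process is a script rather than a shell pipeline. In this sketch the child is a stand-in one-liner that reads STDIN and reports only the length of what it received; the secret value is a placeholder.

```python
import subprocess
import sys

# Stand-in for a program that accepts a secret on STDIN; it reports only the length.
child_code = "import sys; print(len(sys.stdin.read()))"

secret = "s3cr3t-value"  # placeholder; in practice this comes from a secret store
completed = subprocess.run(
    [sys.executable, "-c", child_code],
    input=secret,  # sent over the pipe, not via argv or the environment
    capture_output=True,
    text=True,
    check=True,
)
reported_length = int(completed.stdout.strip())
```

Because the secret never appears in the argument list or the environment, it does not show up in process listings or get inherited by unrelated child processes.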

A few notes about storing and retrieving file secrets

Credentials files are also a good way to pass secrets

vibrating bike (and rider) is absorbing energy that reduces the bike’s speed.

The narrower the tires, the more vibrations

And since we now have very similar tires in widths from 26 to 54 mm, we could do controlled testing of all these sizes. We found that they all perform the same. Even on very smooth asphalt, you don’t lose anything by going to wider tires (at least up to 54 mm). And on rough roads, wider tires are definitely faster.

Wider tires perform as well as the narrower ones on smooth roads, and they definitely perform better on rough roads

Tire width influences the feel of the bike, but not its speed. If you like the buzzy, connected-to-the-road feel of a racing bike, choose narrower tires. If you want superior cornering grip and the ability to go fast even when the roads get rough, choose wider tires.

Conclusion of a debunked myth: Wide tires are NOT slower than the narrower ones

This is where off-site backups come into play. For this purpose, I recommend Borg backup. It has sophisticated features for compression and encryption, and allows you to mount any version of your backups as a filesystem to recover the data from. Set this up on a cronjob as well for as frequently as you feel the need to make backups, and send them off-site to another location, which itself should have storage facilities following the rest of the recommendations from this article. Set up another cronjob to run borg check and send you the results on a schedule, so that their conspicuous absence may indicate that something fishy is going on. I also use Prometheus with Pushgateway to make a note every time that a backup is run, and set up an alarm which goes off if the backup age exceeds 48 hours. I also have periodic test alarms, so that the alert manager’s own failures are noticed.

Solution for human failures and existential threats:

RAID is complicated, and getting it right is difficult. You don’t want to wait until your drives are failing to learn about a gap in your understanding of RAID. For this reason, I recommend ZFS to most. It automatically makes good decisions for you with respect to mirroring and parity, and gracefully handles rebuilds, sudden power loss, and other failures. It also has features which are helpful for other failure modes, like snapshots. Set up Zed to email you reports from ZFS. Zed has a debug mode, which will send you emails even for working disks — I recommend leaving this on, so that their conspicuous absence might alert you to a problem with the monitoring mechanism. Set up a cronjob to do monthly scrubs and review the Zed reports when they arrive. ZFS snapshots are cheap - set up a cronjob to take one every 5 minutes, perhaps with zfs-auto-snapshot.

ZFS is recommended (not only for the beginners) over the complicated RAID

these days hardware RAID is almost always a mistake. Most operating systems have software RAID implementations which can achieve the same results without a dedicated RAID card.

According to the author software RAID is preferable over hardware RAID

Failing disks can show signs of it in advance — degraded performance, or via S.M.A.R.T reports. Learn the tools for monitoring your storage medium, such as smartmontools, and set it up to report failures to you (and test the mechanisms by which the failures are reported to you).

Preventive maintenance of disk failures

RAID gets more creative with three or more hard drives, utilizing parity, which allows it to reconstruct the contents of failed hard drives from still-online drives.

If you are using RAID and one of the 3 drives fails, you can still recover its content thanks to the XOR operation
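A minimal sketch of XOR parity with three drives, where two hold data and the third holds the parity block. The byte strings are arbitrary placeholders, and real RAID levels stripe and rotate parity across drives, which is omitted here.

```python
def xor_blocks(*blocks):
    """XOR equally sized byte blocks together."""
    result = bytearray(len(blocks[0]))
    for block in blocks:
        for i, byte in enumerate(block):
            result[i] ^= byte
    return bytes(result)


drive_a = b"hello"
drive_b = b"world"
parity = xor_blocks(drive_a, drive_b)  # stored on the third drive

# Drive A fails: XOR of the surviving drive and the parity reconstructs it.
recovered_a = xor_blocks(drive_b, parity)
```

This works because XOR is its own inverse: `a ^ b ^ b == a` for every byte.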

A more reliable solution is to store the data on a hard drive. However, hard drives are rated for a limited number of read/write cycles, and can be expected to fail eventually.

Hard drives are a better lifetime option than microSD cards but still not ideal

The worst way I can think of is to store it on a microSD card. These fail a lot. I couldn’t find any hard data, but anecdotally, 4 out of 5 microSD cards I’ve used have experienced failures resulting in permanent data loss.

microSD cards aren't recommended for storing lifetime data

As it stands, sudo -i is the most practical, clean way to gain a root environment. On the other hand, those using sudo -s will find they can gain a root shell without the ability to touch the root environment, something that has added security benefits.

Which sudo command to use:

sudo -i <--- most practical, clean way to gain a root environment

sudo -s <--- secure way that doesn't allow touching the root environment

Much like sudo su, the -i flag allows a user to get a root environment without having to know the root account password. sudo -i is also very similar to using sudo su in that it’ll read all of the environmental files (.profile, etc.) and set the environment inside the shell with it.

sudo -i vs sudo su. Simply, sudo -i is a much cleaner way of gaining root and a root environment without directly interacting with the root user

This means that unlike a command like sudo -i or sudo su, the system will not read any environmental files. This means that when a user tells the shell to run sudo -s, it gains root but will not change the user or the user environment. Your home will not be the root home, etc. This command is best used when the user doesn’t want to touch root at all and just wants a root shell for easy command execution.

sudo -s vs sudo -i and sudo su. Simply, sudo -s is good for security reasons

Though there isn’t very much difference from “su,” sudo su is still a very useful command for one important reason: When a user is running “su” to gain root access on a system, they must know the root password. The way root is given with sudo su is by requesting the current user’s password. This makes it possible to gain root without the root password which increases security.

Crucial difference between sudo su and su: the way password is provided

“su” is best used when a user wants direct access to the root account on the system. It doesn’t go through sudo or anything like that. Instead, the root user’s password has to be known and used to log in with.

The su command is used to get direct access to the root account

A good philosophy to live by at work is to “always be quitting”. No, don’t be constantly thinking of leaving your job 😱. But act as if you might leave on short notice 😎.

"always be quitting" = making yourself "replaceable"

An incomplete list of skills senior engineers need, beyond coding

23 social skills to look for in great engineers

if a module's name has no dots, it is not considered to be part of a package. It doesn't matter where the file actually is on disk.

what if Python module's name has no dots

if you imported moduleX (note: imported, not directly executed), its name would be package.subpackage1.moduleX. If you imported moduleA, its name would be package.moduleA. However, if you directly run moduleX from the command line, its name will instead be __main__, and if you directly run moduleA from the command line, its name will be __main__. When a module is run as the top-level script, it loses its normal name and its name is instead __main__.

When Python's module name is __main__ vs when it's a full name (preceded by the names of any packages/subpackages of which it is a part, separated by dots)

A file is loaded as the top-level script if you execute it directly, for instance by typing python myfile.py on the command line. It is loaded as a module if you do python -m myfile, or if it is loaded when an import statement is encountered inside some other file.

3 cases when a Python file is called as a top-level script vs module
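Two of these cases can be reproduced from a single script: running a file as the top-level script and importing it. The sketch below is only an approximation of `python myfile.py` and `import myfile`, using `runpy` and `importlib` on a temporary file; it does not cover the `python -m` case.

```python
import importlib.util
import runpy
import tempfile
from pathlib import Path

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "myfile.py"
    path.write_text("module_name = __name__\n")

    # Executed as the top-level script, similar to `python myfile.py`.
    as_script = runpy.run_path(str(path), run_name="__main__")["module_name"]

    # Loaded as a module, similar to what `import myfile` does.
    spec = importlib.util.spec_from_file_location("myfile", str(path))
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    as_module = module.module_name
```

The same file reports `__main__` in the first case and its own module name in the second.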

After working on the problem for a while, we boiled it down to a 4-turn (2 per player), 9 roll (including doubles) game. Detail on each move given below. If executed quickly enough, this theoretical game can be played in 21 seconds (see video below).

The shortest possible 2-player Monopoly game in 4 turns (2 per player). See the details below this annotation

The majority of Python packaging tools also act as virtualenv managers to gain the ability to isolate project environments. But things get tricky when it comes to nested venvs: One installs the virtualenv manager using a venv encapsulated Python, and creates more venvs using the tool which is based on an encapsulated Python. One day a minor release of Python is released and one has to check all those venvs and upgrade them if required. PEP 582, on the other hand, introduces a way to decouple the Python interpreter from project environments. It is a relatively new proposal and there are not many tools supporting it (one that does is pyflow), but it is written in Rust and thus can't get much help from the big Python community. For the same reason it can't act as a PEP 517 backend.

The reason why PDM - Python Development Master may replace poetry or pipenv

After suggestions from comments below, I read A Philosophy of Software Design (2018) by John Ousterhout and found it to be a much more positive experience. I would be happy to recommend it over Clean Code.

The author recommends A Philosophy of Software Design over Clean Code

If you insist on using Git and insist on tracking many large files in version control, you should definitely consider LFS. (Although, if you are a heavy user of large files in version control, I would consider Plastic SCM instead, as they seem to have the most mature solution for large files handling.)

When the usage of Git LFS makes sense

In Mercurial, use of LFS is a dynamic feature that server/repo operators can choose to enable or disable whenever they want. When the Mercurial server sends file content to a client, presence of external/LFS storage is a flag set on that file revision. Essentially, the flag says the data you are receiving is an LFS record, not the file content itself and the client knows how to resolve that record into content.

Generally, Mercurial handles LFS slightly better than Git

If you adopt LFS today, you are committing to a) running an LFS server forever b) incurring a history rewrite in the future in order to remove LFS from your repo, or c) ceasing to provide an LFS server and locking out people from using older Git commits.

Disadvantages of using Git LFS

:/<text>, e.g. :/fix nasty bug A colon, followed by a slash, followed by a text, names a commit whose commit message matches the specified regular expression.

:/<text> - searching git commits using their text instead of hash, e.g. :/fix nasty bug

git worktree add <path> automatically creates a new branch whose name is the final component of <path>, which is convenient if you plan to work on a new topic. For instance, git worktree add ../hotfix creates new branch hotfix and checks it out at path ../hotfix.

The simple idea of git-worktree to manage multiple working trees without stashing

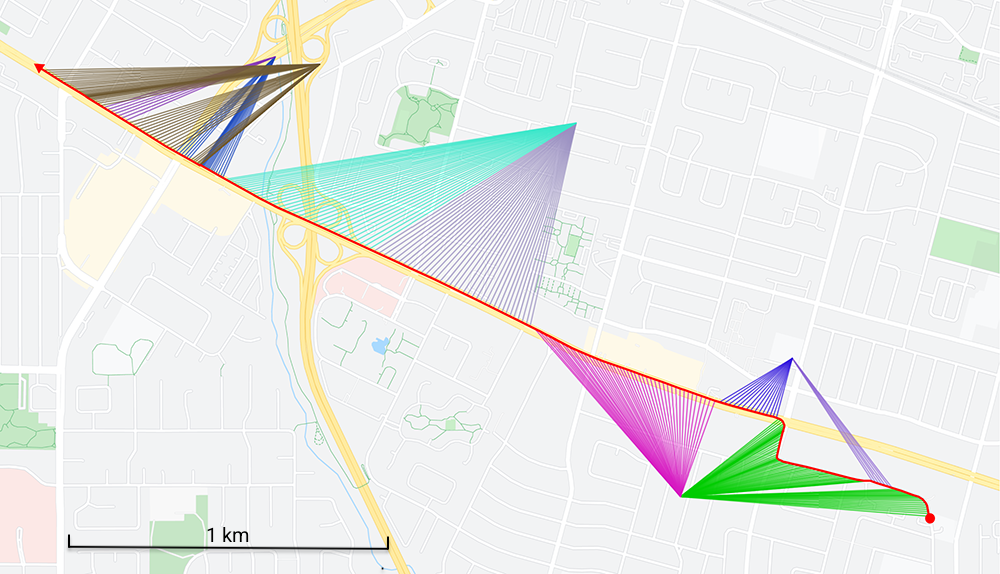

LocationManager can provide a GPS location (lat,long) every second. Meanwhile, TelephonyManager gives the cellID=(mnc,mcc,lac,cid) the radio is currently camping on. A cellID database[1] makes it possible to look up the (lat,long) of each CellID. What is left is to draw the itinerary (in red) and, for each second, a cellID-color-coded connection to the cell.

To design such a map, one needs to use these 2 Android components:

LocationManager, which provides a GPS location (lat,long) every second

TelephonyManager, which gives the cellID=(mnc,mcc,lac,cid) the radio is currently camping on

and a cellID database to know the (lat,long) of each cell ID.

For instance, when someone is not confident in what they are saying or know that they are lying, they may take a step backwards as if stepping back from the situation.

Example of micro expression to detect a lie

People with ADHD are highly sensitive to micro expressions.

There are seven expressions that are universal to all humans no matter which country they are born: anger, fear, disgust, sadness, happiness, surprise, and contempt.

7 universal expressions

A macro expression is the normal facial expression you notice that lasts between ½ a second and 4 seconds. These are fairly easy to notice and tend to match the tone and content of what is being said.

A micro expression is an involuntary facial expression that lasts less than half a second. These expressions are often missed altogether but they reveal someone's true feelings about what they are saying.

Macro vs micro expressions

From an employer's perspective, I believe there are many advantages:

List of advantages for working 4 days per week (instead of 5)

The research found that working 55 hours or more a week was associated with a 35% higher risk of stroke and a 17% higher risk of dying from heart disease, compared with a working week of 35 to 40 hours.

What I did have going for me was what might be considered hacker sensibilities - lack of fear of computers in general, and in trying unknown things. A love of exploring, learning by experimenting, putting stuff together on the fly.

Well described concept of hacker sensibilities

The only problem is that Kubeflow Pipelines must be deployed on a Kubernetes Cluster. If you are in a small company that uses sensitive data, you will struggle with permissions, VPC and lots of other problems to deploy and use it, which makes it a bit difficult to adopt.

Vertex AI solves this problem with a managed pipeline runner: you can define a Pipeline and it will execute it, taking responsibility for provisioning all resources, storing all the artifacts you want and passing them through each of the wanted steps.

How Vertex AI solves the problem/need of deploying on a Kubernetes Cluster

Kubeflow Pipelines comes to solve this problem. KFP, for short, is a toolkit dedicated to running ML workflows (such as experiments for model training) on Kubernetes, and it does it in a very clever way:

Along with other ways, Kubeflow lets us define a workflow as a series of Python functions that pass results and Artifacts to one another.

For each Python function, we can define dependencies (for libs used) and Kubeflow will create a container to run each function in an isolated way, passing any wanted object to the next step in the workflow. We can set the needed resources (such as memory or GPUs) and it will provision them for our workflow step. It feels like magic.

Once you’ve run your pipeline, you will be able to see it in a nice UI, like this:

Brief explanation of Kubeflow Pipelines

Vertex AI came from the skies to solve our MLOps problem with a managed — and reasonably priced—alternative. Vertex AI comes with all the AI Platform classic resources plus a ML metadata store, a fully managed feature store, and a fully managed Kubeflow Pipelines runner.

Vertex AI - Google Cloud’s new unified ML platform

Method 1: We can grab the PDF versions of Google’s TotT episodes, or create our own posters that are more relevant to the company, and put them in places where neither developers nor testers can ignore them.

Method 2: We can initiate something called a ‘Tip of the day’ mailing system from the Quality Engineering Department.

Ways to implement Google's Testing on the Toilet concept

They started to write flyers about everything from dependency injection to code coverage and then regularly plaster the bathrooms all over Google with each episode, almost 500 stalls worldwide.

Testing on the Toilet (TotT) concept

Dogfooding → Internal adoption of software that is not yet released. The phrase “eating your own dogfood” is meant to convey the idea that if you make a product to sell to someone else, you should be willing to use it yourself to find out if it is any good.

Dogfooding testing method at Google

It's Faster

Makefile is also faster than package.json script

It's even more discoverable if your shell has smart tab completion: for example, on my current project, if you enter the aws/ directory and type make<TAB>, you'll see a list that includes things like docker-login, deploy-dev and destroy-sandbox.

If your shell has smart TAB completion, you can easily discover relevant make commands

Variables. Multiple lines. No more escaped quotation marks. Comments.

What are Makefile's advantages over package.json scripts

People might think I’m not experiencing new things, but I think the secret to a good life is to enjoy your work. I could never stay indoors and watch TV. I hear London is a place best avoided. I think living in a city would be terrible – people living on top of one another in great tower blocks. I could never do it. Walking around the farm fills me with wonder. What makes my life is working outside, only going in if the weather is very bad.

How farmers perceive happiness in life

pronunciation cues in an individual’s speech communicate their social status more accurately than the content of their speech.

reciting seven random words is sufficient to allow people to discern the speaker’s social class with above-chance accuracy.

Cookiecutter takes a source directory tree and copies it into your new project. It replaces all the names that it finds surrounded by templating tags {{ and }} with names that it finds in the file cookiecutter.json. That’s basically it. [1]

The main idea behind cookiecutter
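The substitution step can be sketched in a few lines of Python. This is a simplified illustration, not Cookiecutter's actual implementation (the real tool renders templates with Jinja2); the `render` helper and the context values are hypothetical.

```python
import re

def render(template: str, context: dict) -> str:
    """Replace every {{ name }} tag with the matching value from context."""
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        # Leave unknown tags untouched rather than guessing a value.
        return str(context.get(key, match.group(0)))
    return re.sub(r"\{\{\s*([\w.]+)\s*\}\}", substitute, template)

# Hypothetical values, standing in for the contents of cookiecutter.json.
context = {"cookiecutter.project_name": "my_app"}
print(render("src/{{ cookiecutter.project_name }}/__init__.py", context))
```

The same function could be applied to both file paths and file contents while copying the source tree.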

In short, MLflow makes it far easier to promote models to API endpoints on various cloud vendors compared to Kubeflow, which can do this but only with more development effort.

MLflow seems to be much easier

Bon Appétit?

Quick comparison of MLflow and Kubeflow (check below the annotation)

MLflow is a single python package that covers some key steps in model management. Kubeflow is a combination of open-source libraries that depends on a Kubernetes cluster to provide a computing environment for ML model development and production tools.

Brief comparison of MLflow and Kubeflow

I decided to embrace imperfection and use it as the unifying theme of the album.

You might find a lot of imperfection on the way, but instead of fighting with it, maybe try to embrace it?

I start by sampling loops from songs I like, and then use the concatenative synthesis and source separations tools to extract interesting musical ideas (e.g., melodies, percussion, ambiance). These results can be used directly, with a little FX (Replica XT, Serum FX), or translated into MIDI and resynthesized (Serum, VPS Avenger). This gives me the building blocks of the song (the tracks), each a few bars long, which I carefully combine using Ableton Live's session view into something that sounds "good". Later, each track is expanded by sampling more loops from the original songs until I have plenty of content to create something with enough structure to be called a "song".

Author's music production process:

Another extremely interesting and underrated task in the audio domain is translating audio into MIDI notes. Melodyne is state of the art in the area, but Ableton's Convert Audio to Midi works really well too.

For converting audio to midi, try Melodyne or Ableton's Convert Audio to Midi

imperfect separations are sometimes the most interesting, especially the results from models trained to separate something that isn't in the source sound (e.g., extract vocals from an instrumental track)

Right, it might produce some unique sounds

Sound Source Separation

It seems like Spleeter and Open Unmix perform equally well in Sound Source Separation

concatenative sound synthesis. The main idea is simple: given a database of sound snippets, concatenate or mix these sounds in order to create new and original ones.

Concatenative sound synthesis

Calibri has been the default font for all things Microsoft since 2007, when it stepped in to replace Times New Roman across Microsoft Office. It has served us all well, but we believe it’s time to evolve. To help us set a new direction, we’ve commissioned five original, custom fonts to eventually replace Calibri as the default.

Microsoft will be moving away from Calibri as a default font

To summarize, implementing ML in a production environment doesn't only mean deploying your model as an API for prediction. Rather, it means deploying an ML pipeline that can automate the retraining and deployment of new models. Setting up a CI/CD system enables you to automatically test and deploy new pipeline implementations. This system lets you cope with rapid changes in your data and business environment. You don't have to immediately move all of your processes from one level to another. You can gradually implement these practices to help improve the automation of your ML system development and production.

The ideal state of MLOps in a project (2nd level)

🐛 (home, components): Resolve issue with modal collapses
🚚 (home): Move icons folder
✨ (newsletter): Add Newsletter component

With gitmojis, we can replace the <type> part of a git commit

feat(home, components): Add login modal
fix(home, components): Resolve issue with modal collapses
chore(home): Move icons folder

Examples of readable commits in the format:

<type> [scope]: "Message"
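A commit subject in this format is easy to check mechanically. The sketch below assumes the `type(scope, ...): Message` shape used in the examples above; the list of allowed types and the `parse_commit` helper are illustrative, not part of any standard tool.

```python
import re

# Assumed pattern for "type(scope, ...): Message"; the allowed types are illustrative.
COMMIT_RE = re.compile(
    r"^(?P<type>feat|fix|chore|docs|refactor|test)"
    r"(?:\((?P<scope>[^)]+)\))?"
    r": (?P<message>.+)$"
)

def parse_commit(subject: str):
    """Return (type, scopes, message), or None if the subject does not match."""
    match = COMMIT_RE.match(subject)
    if match is None:
        return None
    scopes = [s.strip() for s in (match["scope"] or "").split(",") if s.strip()]
    return match["type"], scopes, match["message"]

print(parse_commit("fix(home, components): Resolve issue with modal collapses"))
```

A check like this could run in a commit-msg hook to reject subjects that return `None`.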

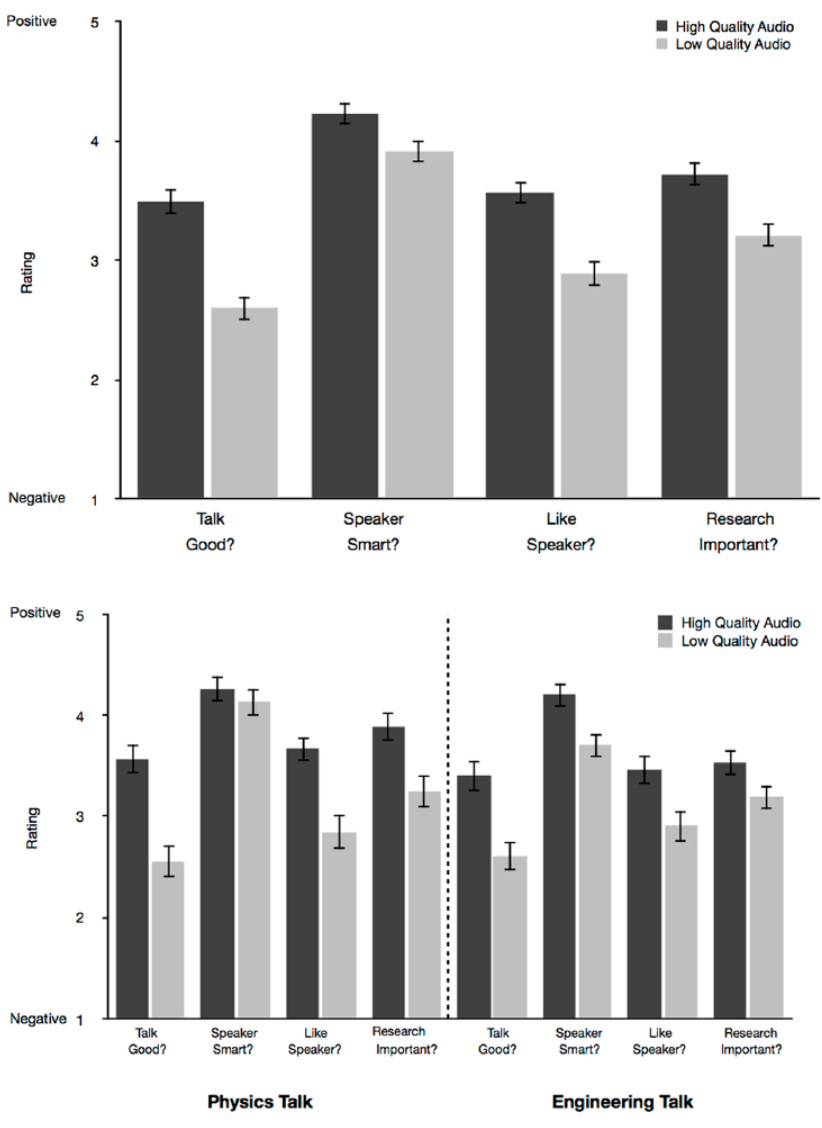

When audio quality is high (vs low), people judge the content as better and more important. They also judge the speaker as more intelligent, competent, and likable.

In an experiment, people rated a physicist’s talk at a scientific conference as 19.3% better when they listened to it in high quality audio vs slightly distorted, echo-prone audio.

High quality audio makes you sound smarter:

Just write your markdown as text/plain, email clients are broken.

E-mail clients still do not render Markdown in a convenient format

1. Bring up the board that shows the status of every item the team is working on.
2. Starting in the right-most column, get an update for each ticket in the column.
3. Be sure to ask: “What’s needed to move this ticket to the next stage of progress?”
4. Highlight blockers that people bring up and define what’s needed to unblock work.
5. Move to the next column to the left and get updates for each ticket in that column.
6. Continue until you get to the left-most column.

Format of "walk the board" daily standup

- More people bring more status updates.
- More updates mean more information that others won’t care about.
- The team may be working on multiple projects at once.
- If the team has customers, ad-hoc work will regularly come up.

Problems of dailies when more people show up

- I start thinking about my update to prove I should keep my job.
- People zone out when a teammate starts talking about how they worked on something that doesn’t affect them.
- It’s finally my turn to give an update. No one is listening except for the facilitator.
- We go over our time and end when the next team starts lurking outside the room.

That's what a typical daily usually looks like

- What did I complete yesterday that contributed to the team?
- What do I plan to complete today to contribute to the team?
- What impediments do I see that prevent me or the team from achieving its goals?

3 questions to be answered during a daily meeting

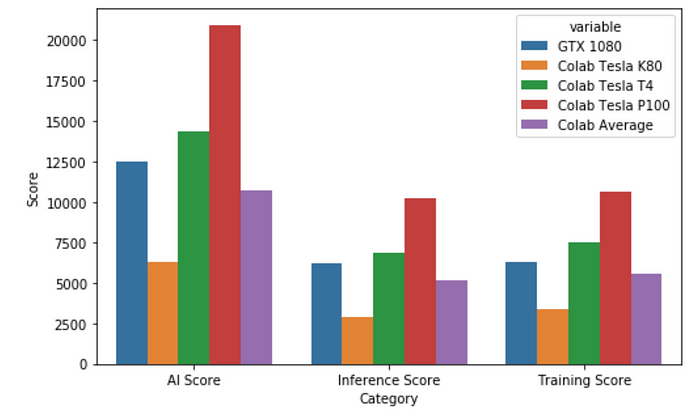

On the median case, Colab is going to assign users a K80, and the GTX 1080 is around double the speed, which does not stack up particularly well for Colab. However, on occasion, when a P100 is assigned, the P100 is an absolute killer GPU (again, for FREE).

Some of the GPUs from Google Colab are outstanding.

With Spark 3.1, the Spark-on-Kubernetes project is now considered Generally Available and Production-Ready.

With Spark 3.1 k8s becomes the right option to replace YARN

The key libraries of TFX are as follows

TensorFlow Extended (TFX) = TFDV + TFT + TF Estimators and Keras + TFMA + TFServing

The console is a killer SQLite feature for data analysis: more powerful than Excel and more simple than pandas. One can import CSV data with a single command, the table is created automatically

SQLite makes it fairly easy to import and analyse data. For example:

.import --csv city.csv city
select count(*) from city;

This is not a problem if your DBMS supports SQL recursion: lots of data can be generated with a single query. The WITH RECURSIVE clause comes to the rescue.

WITH RECURSIVE can help you quickly generate a series of random data.
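As a minimal sketch of the idea, the snippet below uses Python's built-in `sqlite3` module to fill a table with 1000 rows of random values in a single query. The table name `reading` and its columns are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reading (id INTEGER PRIMARY KEY, value INTEGER)")

# The recursive CTE counts from 1 to 1000; random() supplies a value per row.
conn.execute("""
    WITH RECURSIVE seq(n) AS (
        SELECT 1
        UNION ALL
        SELECT n + 1 FROM seq WHERE n < 1000
    )
    INSERT INTO reading (id, value)
    SELECT n, abs(random() % 100) FROM seq
""")

row_count, lowest, highest = conn.execute(
    "SELECT count(*), min(value), max(value) FROM reading"
).fetchone()
print(row_count)  # 1000
```

The `WHERE n < 1000` condition is what stops the recursion, so changing that bound changes the number of generated rows.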

Interrupt if: T3F > RTW (IO + T3E)

Formula for interruption: T3F = A1 RTW = A4 + A5 IO = A3 T3E = A2

A1 > (A4 + A5)(A3 + A2)

When you seek advice, first write down everything you’ve tried.

If you’re stuck for over an hour, seek help.

Rule of thumb for when to seek help

Simple … a single Linode VPS.

You might not need Kubernetes clusters at all; many projects run well on a single Linode VPS.

Twitter thread: https://twitter.com/levelsio/status/1101581928489078784

When peeking at your brain may help with mental illness

yyyy-mm-dd hh:mm:ss

ISO 8601 date format. The only right date format
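In Python, this layout can be produced with the standard `datetime` module. Note that ISO 8601 itself separates date and time with a `T`; the space-separated form shown above is a common variant. The timestamp values below are arbitrary.

```python
from datetime import datetime

moment = datetime(2021, 5, 3, 14, 7, 9)

# The "yyyy-mm-dd hh:mm:ss" layout.
print(moment.strftime("%Y-%m-%d %H:%M:%S"))   # 2021-05-03 14:07:09

# isoformat() uses the "T" separator by default; sep=" " switches to a space.
print(moment.isoformat())                      # 2021-05-03T14:07:09
print(moment.isoformat(sep=" "))               # 2021-05-03 14:07:09

# Strings in this layout sort chronologically as plain text.
stamps = ["2021-05-03 14:07:09", "2020-12-31 23:59:59", "2021-01-01 00:00:00"]
print(sorted(stamps)[0])                       # 2020-12-31 23:59:59
```

The last lines show a practical benefit of the big-endian order: plain string sorting matches chronological order.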

We use Prometheus to collect time-series metrics and Grafana for graphs, dashboards, and alerts.

How Prometheus and Grafana can be used to collect information from running ML on K8s

large machine learning job spans many nodes and runs most efficiently when it has access to all of the hardware resources on each node. This allows GPUs to cross-communicate directly using NVLink, or GPUs to directly communicate with the NIC using GPUDirect. So for many of our workloads, a single pod occupies the entire node.

The way OpenAI runs large ML jobs on K8s

We use Kubernetes mainly as a batch scheduling system and rely on our autoscaler to dynamically scale up and down our cluster — this lets us significantly reduce costs for idle nodes, while still providing low latency while iterating rapidly.

For high availability, we always have at least 2 masters, and set the --apiserver-count flag to the number of apiservers we’re running (otherwise Prometheus monitoring can get confused between instances).

Tip for high availability:

Set the --apiserver-count flag to the number of running apiservers

We’ve increased the max etcd size with the --quota-backend-bytes flag, and the autoscaler now has a sanity check not to take action if it would terminate more than 50% of the cluster.

With more than 1k nodes, etcd might hit its hard storage limit and stop accepting writes

Another helpful tweak was storing Kubernetes Events in a separate etcd cluster, so that spikes in Event creation wouldn’t affect performance of the main etcd instances.

Another trick apart from tweaking default settings of Fluentd & Datadog

The root cause: the default setting for Fluentd’s and Datadog’s monitoring processes was to query the apiservers from every node in the cluster (for example, this issue which is now fixed). We simply changed these processes to be less aggressive with their polling, and load on the apiservers became stable again:

Default settings of Fluentd and Datadog might not be suited for running many nodes

We then moved the etcd directory for each node to the local temp disk, which is an SSD connected directly to the instance rather than a network-attached one. Switching to the local disk brought write latency to 200us, and etcd became healthy!

One of the solutions for etcd using only about 10% of the available IOPS. It was working till about 1k nodes

When should you end a conversation? Probably sooner than you think

Why we’re so bad at daydreaming, and how to fix it

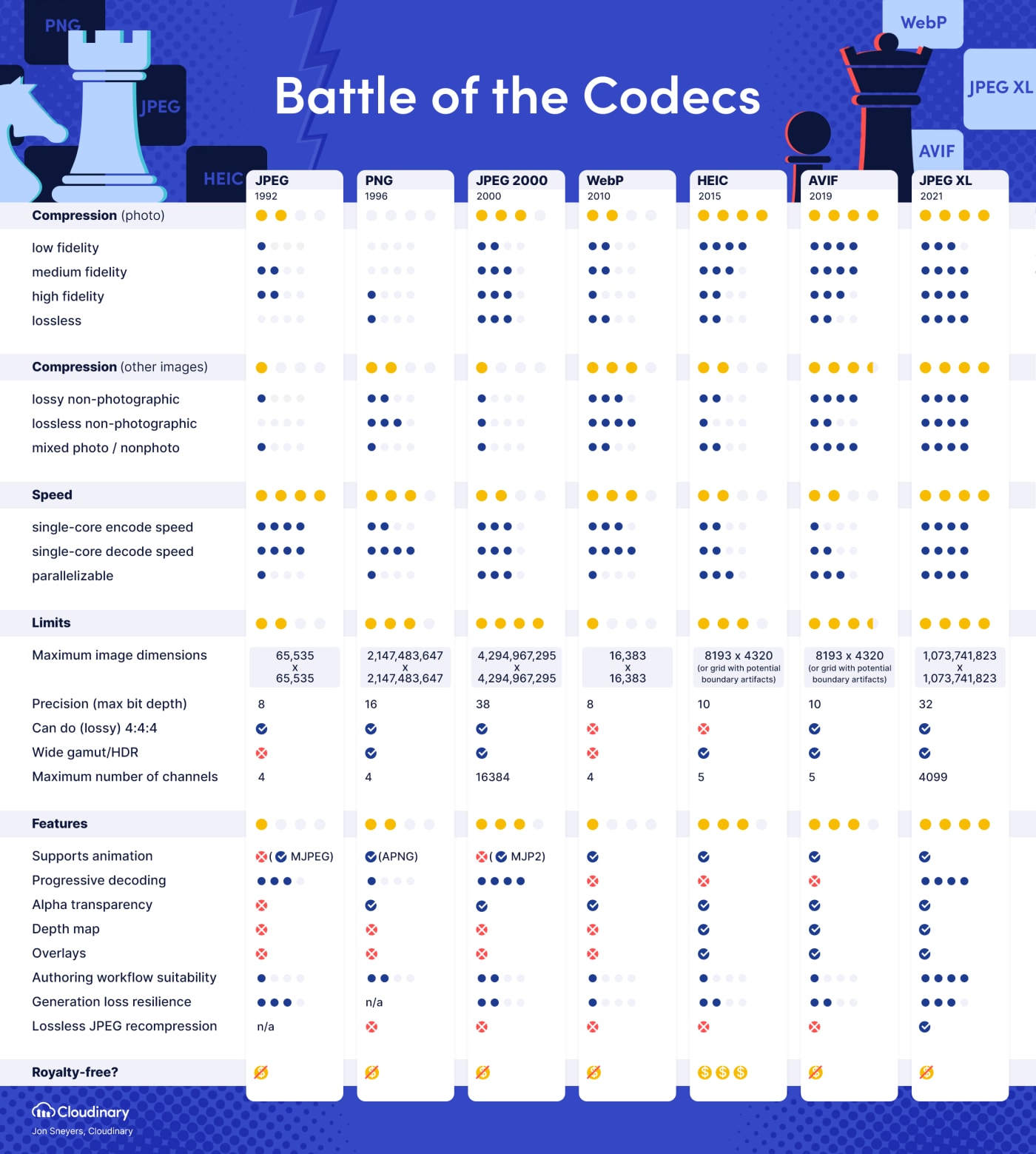

The newest generation of image codecs—in particular AVIF and JPEG XL—are a major improvement of the old JPEG and PNG codecs. To be sure, JPEG 2000 and WebP also compress more effectively and offer more features, yet the overall gain is not significant and consistent enough to warrant fast and widespread adoption. AVIF and JPEG XL will do much better—at least that’s what I hope.

Comparison of image codecs (JPEG, PNG, JPEG 2000, WebP, HEIC, AVIF, JPEG XL)

Scientists break through the wall of sleep to the untapped world of dreams

'Night owls' may be twice as likely as morning 'larks' to underperform at work

Consider the amount of data and the speed of the data: if low latency is your priority, use Akka Streams; if you have huge amounts of data, use Spark, Flink or GCP DataFlow.

For low latency = Akka Streams

For huge amounts of data = Spark, Flink or GCP DataFlow

As we mentioned before, the majority of machine learning implementations are based on running model serving as a REST service, which might not be appropriate for high volume data processing or for use with streaming systems that require recoding/restarting for a model update, for example TensorFlow or Flink. Model as Data is a great fit for big data pipelines. For online inference, it is quite easy to implement: you can store the model anywhere (S3, HDFS…), read it into memory and call it.

Model as Data <--- more appropriate approach than REST service for serving big data pipelines
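A minimal sketch of the "store it, read it into memory, call it" flow, using only the standard library. The linear model, its JSON layout and the `load_model` helper are hypothetical, and a local temporary file stands in for S3 or HDFS.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical linear model: the stored "data" is just its parameters.
model_params = {"weights": [0.5, -1.0], "bias": 2.0}

# Stand-in for S3 or HDFS: write the model to a local file.
model_path = Path(tempfile.mkdtemp()) / "model.json"
model_path.write_text(json.dumps(model_params))

def load_model(path):
    """Read the stored parameters and return a callable predictor."""
    params = json.loads(Path(path).read_text())
    def predict(features):
        return sum(w * x for w, x in zip(params["weights"], features)) + params["bias"]
    return predict

predict = load_model(model_path)
print(predict([2.0, 1.0]))  # 0.5*2 - 1.0*1 + 2.0 = 2.0
```

Because the model is plain data, updating it means writing a new file and calling `load_model` again; the pipeline code itself does not change or restart.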

The most common way to deploy a trained model is to save into the binary format of the tool of your choice, wrap it in a microservice (for example a Python Flask application) and use it for inference.

Model as Code <--- the most common way of deploying ML models

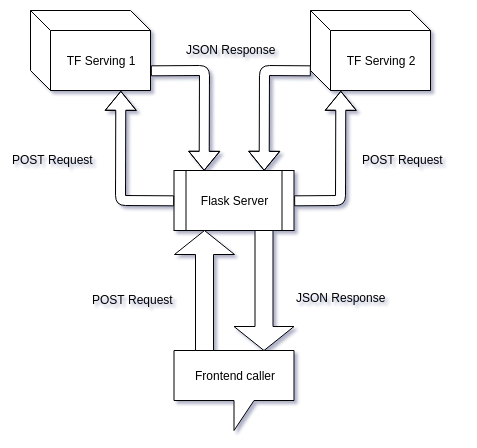

- When we are providing our API endpoint to the frontend team, we need to ensure that we don’t overwhelm them with preprocessing technicalities.
- We might not always have a Python backend server (e.g. a Node.js server), so using the numpy and keras libraries for preprocessing might be a pain.
- If we are planning to serve multiple models, then we will have to create multiple TensorFlow Serving servers and will have to add new URLs to our frontend code. But our Flask server would keep the domain URL the same, and we only need to add a new route (a function).
- Providing subscription-based access, exception handling and other tasks can be carried out in the Flask app.

4 reasons why we might need Flask apart from TensorFlow serving

Next, imagine you have more models to deploy. You have three options:

1. Load the models into the existing cluster: one cluster serves all models.
2. Spin up a new cluster to serve each model: multiple clusters, each serving one model.
3. Combine 1 and 2: multiple clusters, each serving a few models.

The first option would not scale, because it’s just not possible to load all models into one cluster as the cluster has limited resources.

The second option will definitely work but it doesn’t sound like an effective process, as you need to create a set of resources every time you have a new model to deploy. Additionally, how do you optimize the usage of resources? For example, there might be unutilized resources in your clusters that could potentially be shared by the rest.

The third option looks promising: you can manually choose the cluster to deploy each of your new models into so that all the clusters’ resource utilization is optimal. The problem is you have to manually manage it. Managing 100 models using 25 clusters can be a challenging task. Furthermore, running multiple models in a cluster can also cause a problem, as different models usually have different resource utilization patterns and can interfere with each other. For example, one model might use up all the CPU and the other model won’t be able to serve anymore.

Wouldn’t it be better if we had a system that automatically orchestrates model deployments based on resource utilization patterns and prevents them from interfering with each other? Fortunately, that is exactly what Kubernetes is meant to do!

Solution for deploying lots of ML models

If you’re running lots of deployments of models then it becomes important to record which versions were deployed and when. This is needed to be able to go back to specific versions. Model registries help with this problem by providing ways to store and version models.

Model Registries <--- way to handle multiple ML models in production

Here is a quick recap table of every technology we discussed in this blog post.

Quick comparison of Python web scraping tools (socket, urllib3, requests, scrapy, selenium) [below this highlight]

Can the Brain Resist the Group Opinion?

The benefits of applying GitOps best practices are far reaching and provide:

The 6 provided benefits also explain GitOps in simple terms

GitOps is a way to do Kubernetes cluster management and application delivery. It works by using Git as a single source of truth for declarative infrastructure and applications. With GitOps, the use of software agents can alert on any divergence between Git with what's running in a cluster, and if there's a difference, Kubernetes reconcilers automatically update or rollback the cluster depending on the case. With Git at the center of your delivery pipelines, developers use familiar tools to make pull requests to accelerate and simplify both application deployments and operations tasks to Kubernetes.

Other definition of GitOps (source):

GitOps is a way of implementing Continuous Deployment for cloud native applications. It focuses on a developer-centric experience when operating infrastructure, by using tools developers are already familiar with, including Git and Continuous Deployment tools.

It was actually very common to have one Dockerfile to use for development (which contained everything needed to build your application), and a slimmed-down one to use for production, which only contained your application and exactly what was needed to run it. This has been referred to as the “builder pattern”.

Builder pattern - maintaining two Dockerfiles: 1st for development, 2nd for production. It's not an ideal solution and we shall aim for multi-stage builds.

Multi-stage build - uses multiple FROM commands in the same Dockerfile. The end result is the same tiny production image as before, with a significant reduction in complexity. You don’t need to create any intermediate images and you don’t need to extract any artifacts to your local system at all

volumes are often a better choice than persisting data in a container’s writable layer, because a volume does not increase the size of the containers using it, and the volume’s contents exist outside the lifecycle of a given container.

Aim for using volumes instead of bind mounts in Docker. Also, if your container generates non-persistent data, consider using a tmpfs mount to avoid storing the data permanently

One case where it is appropriate to use bind mounts is during development, when you may want to mount your source directory or a binary you just built into your container. For production, use a volume instead, mounting it into the same location as you mounted a bind mount during development.

after 4 decades, we can observe that not much has changed besides learning how to measure the “figuring out” time.

Comparing 1979 to 2018 results, we spend nearly the same amount of time for maintenance/comprehension of the code:

1979 in a book by Zelkowitz, Shaw, and Gannon entitled Principles of software engineering and design. It said that most of the development time was spent on maintenance (67%).

Where software developers spent most of their time in 1979:

I can't recommend the Data Engineer career enough for junior developers. It's how I started and what I pursued for 6 years (and I would love doing it again), and I feel like it gave me such an incredible foundation for future roles:

- Actually big data (so, not something you could grep...) will trigger your code in every possible way. You quickly learn that with trillions of inputs, the probability of hitting a bug is either 0% or 100%. In turn, you quickly learn to write good tests.
- You will learn distributed processing at a macro level, which in turn enlightens your thinking at a micro level. For example, even though the orders of magnitude are different, hitting data over the network versus on disk is very much like hitting data on disk versus in cache. Except that when the difference ends up being in hours or days, you become much more sensitive to that, so it's good training for your thoughts.
- Data engineering is full of product decisions. What's often called data "cleaning" is in fact one of the important product decisions made in a company, and a data engineer will be consistently exposed to their company's product, which I think makes for great personal development.
- Data engineering is fascinating. In adtech for example, logs of where ads are displayed are an unfiltered window on the rest of humanity, for better or worse. But it definitely expands your views on what the "average" person actually does on their computer (spoiler: it's mainly watching porn...), and challenges quite a bit what you might think is "normal".
- You'll be plumbing technologies from all over the web, which might or might not be good news for you.

So yeah, data engineering is great! It's not harder than other specialties for developers, but imo, it's one of the fun ones!

Many reasons why Data Engineer is a great starting position for junior developers

We recommend the Alpine image as it is tightly controlled and small in size (currently under 5 MB), while still being a full Linux distribution. This is fine advice for Go, but bad advice for Python, leading to slower builds, larger images, and obscure bugs.

Alpine Linux isn't the most convenient OS for Python, but fine for Go

If a service can run without privileges, use USER to change to a non-root user. This is excellent advice. Running as root exposes you to much larger security risks, e.g. a CVE in February 2019 that allowed escalating to root on the host was preventable by running as a non-root user. Insecure: However, the official documentation also says: … you should use the common, traditional port for your application. For example, an image containing the Apache web server would use EXPOSE 80. In order to listen on port 80 you need to run as root. You don’t want to run as root, and given pretty much every Docker runtime can map ports, binding to ports <1024 is completely unnecessary: you can always map port 80 in your external port. So don’t bind to ports <1024, and do run as a non-privileged user.

Due to security reasons, if you don't need the root privileges, bind to ports >=1024

Multi-stage builds allow you to drastically reduce the size of your final image, without struggling to reduce the number of intermediate layers and files. This is true, and for all but the simplest of builds you will benefit from using them. Bad: However, in the most likely image build scenario, naively using multi-stage builds also breaks caching. That means you’ll have much slower builds.

Multi-stage builds claim to reduce image size but it can also break caching

layer caching is great: it allows for faster builds and in some cases for smaller images. Insecure: However, it also means you won’t get security updates for system packages. So you also need to regularly rebuild the image from scratch.

Layer caching is great for speeding up the processes, but it can bring some security issues

Different data sources are better suited for different types of data transformations and provide access to different data quantities at different freshnesses

Comparison of data sources

MLOps platforms like Sagemaker and Kubeflow are heading in the right direction of helping companies productionize ML. They require a fairly significant upfront investment to set up, but once properly integrated, can empower data scientists to train, manage, and deploy ML models.

Two popular MLOps platforms: Sagemaker and Kubeflow

…Well, deploying ML is still slow and painful

What the typical ML production pipeline may look like:

Unfortunately, it ties hands of Data Scientists and takes a lot of time to experiment and eventually ship the results to production

Music gives the brain a crucial connective advantage

Downloading a pretrained model off the Tensorflow website on the Iris dataset probably is no longer enough to get that data science job. It’s clear, however, with the large number of ML engineer openings that companies often want a hybrid data practitioner: someone that can build and deploy models. Or said more succinctly, someone that can use Tensorflow but can also build it from source.

Who the industry really needs

When machine learning became hot 🔥 5-8 years ago, companies decided they needed people that can make classifiers on data. But then frameworks like Tensorflow and PyTorch became really good, democratizing the ability to get started with deep learning and machine learning. This commoditized the data modelling skillset. Today, the bottleneck in helping companies get machine learning and modelling insights to production centers on data problems.

Why Data Engineering became more important

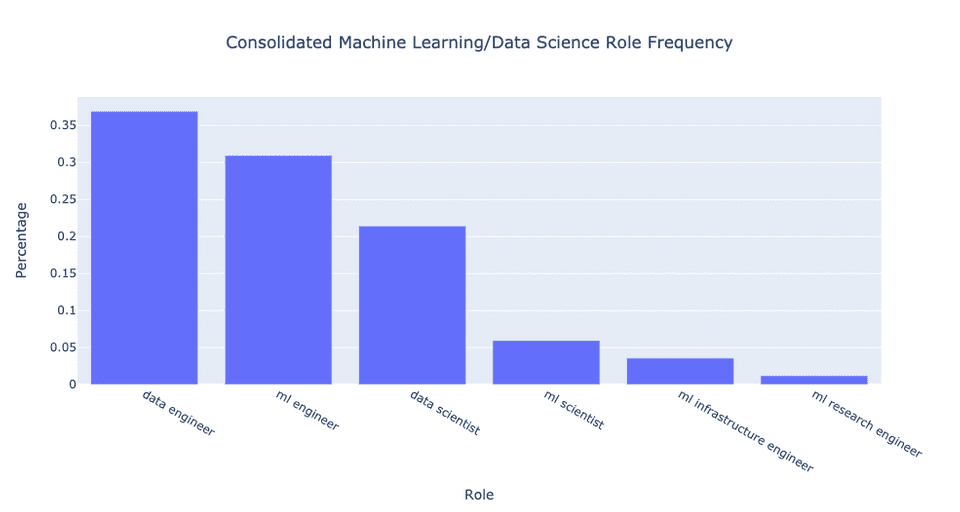

Overall the consolidation made the differences even more pronounced! There are ~70% more open data engineer than data scientist positions. In addition, there are ~40% more open ML engineer than data scientist positions. There are also only ~30% as many ML scientist as data scientist positions.

Takeaway from the analysis:

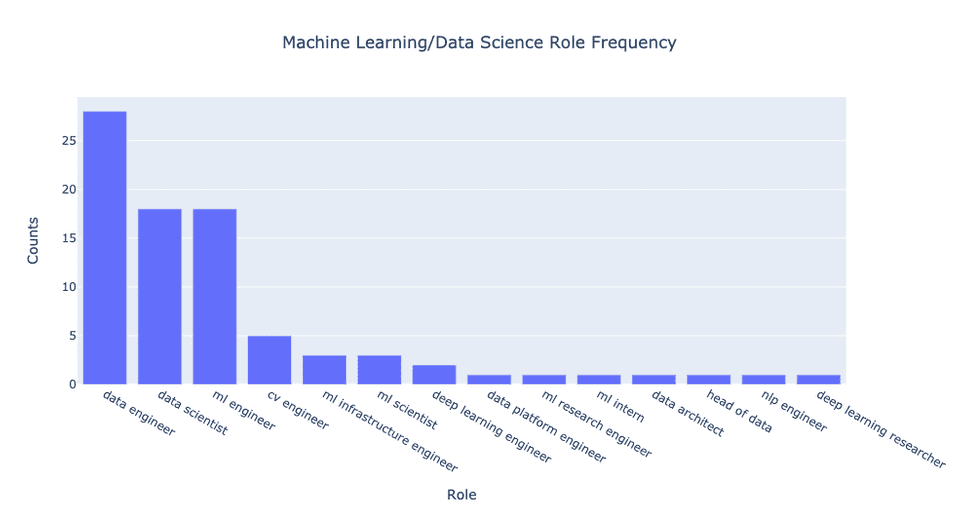

Data scientist: Use various techniques in statistics and machine learning to process and analyse data. Often responsible for building models to probe what can be learned from some data source, though often at a prototype rather than production level. Data engineer: Develops a robust and scalable set of data processing tools/platforms. Must be comfortable with SQL/NoSQL database wrangling and building/maintaining ETL pipelines. Machine Learning (ML) Engineer: Often responsible for both training models and productionizing them. Requires familiarity with some high-level ML framework and also must be comfortable building scalable training, inference, and deployment pipelines for models. Machine Learning (ML) Scientist: Works on cutting-edge research. Typically responsible for exploring new ideas that can be published at academic conferences. Often only needs to prototype new state-of-the-art models before handing off to ML engineers for productionization.

4 different data profiles (and more):

consolidated:

I scraped the homepage URLs of every YC company since 2012, producing an initial pool of ~1400 companies. Why stop at 2012? Well, 2012 was the year that AlexNet won the ImageNet competition, effectively kickstarting the machine learning and data-modelling wave we are now living through. It’s fair to say that this birthed some of the earliest generations of data-first companies. From this initial pool, I performed keyword filtering to reduce the number of relevant companies I would have to look through. In particular, I only considered companies whose websites included at least one of the following terms: AI, CV, NLP, natural language processing, computer vision, artificial intelligence, machine, ML, data. I also disregarded companies whose website links were broken.

How data was collected
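The keyword filtering step could look roughly like the sketch below. The keyword list comes from the quoted passage; the case-insensitive, whole-word matching and the `is_relevant` helper are assumptions, since the author does not say how the terms were matched.

```python
import re

KEYWORDS = ["AI", "CV", "NLP", "natural language processing", "computer vision",
            "artificial intelligence", "machine", "ML", "data"]

# Assumption: whole-word, case-insensitive matching.
# Word boundaries keep short terms like "AI" from matching inside "email".
KEYWORD_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(k) for k in KEYWORDS) + r")\b", re.IGNORECASE
)

def is_relevant(page_text: str) -> bool:
    """Return True if the page text mentions at least one keyword."""
    return KEYWORD_RE.search(page_text) is not None

print(is_relevant("We build computer vision tools for farms"))
print(is_relevant("Send us an email about plumbing"))
```

A stricter or looser matching rule would change which companies survive the filter, so the exact counts in the analysis depend on this choice.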

There are 70% more open roles at companies in data engineering as compared to data science. As we train the next generation of data and machine learning practitioners, let’s place more emphasis on engineering skills.

The resulting 70% is based on a real analysis

When you think about it, a data scientist can be responsible for any subset of the following: machine learning modelling, visualization, data cleaning and processing (i.e. SQL wrangling), engineering, and production deployment.

What tasks can Data Scientist be responsible for

A big reason for GPUs' popularity in Machine Learning is that they are essentially fast matrix multiplication machines. Deep Learning is essentially matrix multiplication.

Deep Learning is mostly about matrix multiplication

Unity ML agents is a way for you to turn a video game into a Reinforcement Learning environment.

Unity ML agents is a great way to practice RL (Reinforcement Learning)

Haskell is the best functional programming language in the world and Neural Networks are functions.

This is the main motivation behind Hasktorch, which lets you discover new kinds of Neural Network architectures by combining functional operators.

Haskell is a great solution for neural networks

I can’t think of a single large company where the NLP team hasn’t experimented with HuggingFace. They add new Transformer models within days of the papers being published, they maintain tokenizers, datasets, data loaders, NLP apps. HuggingFace has created multiple layers of platforms that each could be a compelling company in its own right.

HuggingFace company

Keras is a user centric library whereas Tensorflow, especially Tensorflow 1.0, is a machine centric library. ML researchers think in terms of layers, automatic differentiation engines think in terms of computational graphs.

As far as I’m concerned my time is more valuable than the cycles of a machine so I’d rather use something like Keras.

Why simplicity of Keras is important

A matrix is a linear map but linear maps are far more intuitive to think about than matrices

Graduate Student Descent

:)

BERT engineer is now a full time job. Qualifications include:

- Some bash scripting
- Deep knowledge of pip (starting a new environment is the suckier version of practicing scales)
- Waiting for new HuggingFace models to be released
- Watching Yannic Kilcher’s new Transformer paper the day it comes out
- Repeating what Yannic said at your team reading group

Structure of a BERT engineer job

“Useful” Machine Learning research on all datasets has essentially reduced to making Transformers faster, smaller and scale to longer sequence lengths.

Typical type of advancement we see in ML

The best people in empirical fields are typically those who have accumulated the biggest set of experiences and there’s essentially two ways to do this.

- Spend lots of time doing it
- Get really good at running many concurrent experiments

How to arrive at the best research

I often get asked by young students new to Machine Learning, what math do I need to know for Deep Learning and my answer is Matrix Multiplication and Derivatives of square functions.

Deep Neural Networks are a composition of matrix multiplications with the occasional non-linearity in between
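To make the "matrix multiplications with the occasional non-linearity" description concrete, here is a tiny two-layer forward pass in plain Python. The weights are arbitrary illustrative numbers, not taken from any trained model, and biases are left out to keep the sketch short.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def relu(matrix):
    """The non-linearity: clamp negative entries to zero."""
    return [[max(0.0, v) for v in row] for row in matrix]

# Illustrative weights, not taken from any trained model.
w1 = [[1.0, -1.0], [0.5, 2.0]]   # 2 inputs -> 2 hidden units
w2 = [[1.0], [-1.0]]             # 2 hidden units -> 1 output

def forward(x):
    hidden = relu(matmul(x, w1))  # matrix multiplication, then non-linearity
    return matmul(hidden, w2)     # another matrix multiplication

print(forward([[2.0, 1.0]]))  # [[2.5]]
```

Stacking more `matmul` and `relu` pairs gives a deeper network; the structure stays the same.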

Slack is the best digital watercooler in the world but it’s a terrible place to collaborate - long winded disagreements should happen over Zoom and collaborations should happen in a document instead.

What Slack is good for and what it is not

Even if you really love meetings, you can only attend about 10h of them per day but a single well written document will continue being read even while you’re sleeping.

It’s unlikely that Christianity would have garnered millions of followers if Jesus Christ had to get on a “quick call” with each new potential prospect.

Oral cultures don’t scale.

Oral cultures don't scale