Meta wants to charge businesses for sharing links on Facebook, with 2 free links each month.

Hopefully this means all EU and member-state public institutions will leave FB.

It’s not always necessary that the data be made absolutely unavailable; sometimes data can just be decontextualized enough to become less valuable. Facebook provides a fine example. If a great deal of personal creativity and life experience has been added to the site, it’s hard to give all that up. Even if you capture every little thing you had uploaded, you can’t save it in the context of interactions with other people. You have to lose a part of yourself to leave Facebook once you become an avid user. If you leave, it will become difficult for some people to contact you at all.

Meta/FB's decision to remove fact-checking likely puts it at odds with the EU DSA. That is likely why the announcement includes 'starting in the US'. Clashes in the EU seem certain.

Nobody told it what to do. That's the kind of really amazing and frightening thing about these situations. When Facebook gave the algorithm the aim of increasing user engagement, the managers of Facebook did not anticipate that it would do it by spreading hateful conspiracy theories. This is something the algorithm discovered by itself. The same with the CAPTCHA puzzle, and this is the big problem we are facing with AI.

for - AI - progress trap - example - Facebook AI algorithm - target - increase user engagement - by spreading hateful conspiracy theories - AI did this autonomously - no morality - Yuval Noah Harari story

Meta just rolled out a subscription. They're like, "Hey, if you pay a certain subscription, we will show your stuff to your followers on Instagram and Facebook." (00:03:14)

for - example - social media platforms bleeding content producers - Meta - Facebook - Instagram

Creepy, uncanny-valley stuff. Platform-sanctioned bots insinuate themselves into spots where the expected context is 100% human interaction. They are undisclosed, and they aim to mimic interaction to lock in engagement ('cause #adtech). This makes the people in the group a means to an end. One wonders whether this reaches the emotional-influencing threshold in the EU legal framework.

Choosing names from a list is a lot more user-friendly and less error-prone than asking people to blindly type in an e-mail address and hope that it is correct and matches an existing user (or at least a real e-mail account that can then be sent an invitation to register). In my opinion, this is a big reason why Facebook became so popular: it let you see your list of friends and send messages to people by their names instead of having to already know, remember, or ask for their e-mail address.

A study by Avaaz shows that Facebook contributes significantly to spreading misinformation about climate issues. Facebook's decision not to fact-check politicians' posts has a disastrous effect here. https://www.washingtonpost.com/politics/2021/04/23/technology-202-researchers-warn-misinformation-facebook-threatens-undermine-biden-climate-agenda/

[[Marisa Kabas]] in The Handbasket - Here's the column Meta doesn't want you to see

ᔥ[[Ben Werdmuller]] in Mastodon @ben@werd.social on Apr 06, 2024, 10:45 AM

“On Thursday I reported that Meta had blocked all links to the Kansas Reflector from approximately 8am to 4pm, citing cybersecurity concerns after the nonprofit published a column critical of Facebook’s climate change ad policy. By late afternoon, all links were once again able to be posted on Facebook, Threads and Instagram, except for the critical column.” Here it is. #Media
https://www.thehandbasket.co/p/kansas-reflector-meta-facebook-column-censored

Here's the column Meta doesn't want you to see by [[Marisa Kabas]]

repost with comment of:
When Facebook fails, local media matters even more for our planet’s future By [[Dave Kendall]]

Katharine Hayhoe, author of “Saving Us: A Climate Scientist’s Case for Hope and Healing in a Divided World,” serves as Chief Scientist for the Nature Conservancy and is a distinguished professor at Texas Tech. You might expect that she would be considered a legitimate authority on the subject.

But in the Meta-verse, where it seems virtually impossible to connect with a human being associated with the administration of the platform, rules are rules, and it appears they would prefer to suppress anything that might prove problematic for them.

Hayhoe expressed her personal frustration in a recent post on Facebook.

“Since August 2018, Facebook has limited the visibility of my page,” she writes, “labelling it as ‘political’ because I talk about climate change and clean energy. This change drastically reduced my post views from hundreds to just tens, and the page’s growth has been stagnant ever since.”

The implications of such policies for our democracy are alarming. Why should corporate entities be able to dictate what type of speech or content is acceptable?

Ongweso Jr., Edward. “The Miseducation of Kara Swisher: Soul-Searching with the Tech ‘Journalist.’” The Baffler, March 29, 2024. https://thebaffler.com/latest/the-miseducation-of-kara-swisher-ongweso.

ᔥ[[Pete Brown]] in Exploding Comma

Critics say this amounts to users having to pay for their privacy.

"If you aren't paying for it, you're the product."

"If you pay for it, you're paying for fundamental rights."

Following a policy change by WhatsApp, many people are looking at alternative messaging options. The new WhatsApp policy requires people to share data with Facebook and associated companies.

WhatsApp is doing the exact thing it said it wouldn't do when it was acquired by Facebook seven years ago.

Also, just by observing what they’re doing, it becomes pretty clear. For example, Facebook recently purchased full-page ads in major newspapers entirely dedicated to “denouncing” Apple. Why? Because Apple has built a system-level feature on iPhones that allows users to very easily disable every kind of advertising tracking and profiling. Facebook absolutely relies on being able to track you and profile your interests, so they immediately cooked up some cynical reasons why Apple shouldn’t be allowed to do this. But the truth is: if Facebook is fighting against someone on privacy matters, that someone is probably doing the right thing.

Completely get away from everything Facebook: FB, Messenger, WhatsApp, Instagram, Oculus. (Yes, I know it’s hard because people are on these platforms, but it is possible to explain your reasoning to those who care about you and establish contact with them on different apps. I moved a ton of people to Telegram for example.)

I use Messenger but not Facebook. I think the split was useful.

I share your frustration. This was how I felt when they split off Messenger as a separate mobile app from the main Facebook app. Messaging had been working just fine in the Facebook app, so there seemed to be no discernible reason other than pure greed. No attempt to make anything better or easier for the consumer, no innovation, nothing good for the people using the product. It was really just to inflate their download numbers and somehow make more money off of us. No thank you. I stopped using Facebook after that.

They say this is due to new EU policies about messenger apps. I'm not in the EU. I reckon it's really because there's a new Messenger desktop client for Windows 10, which does have these features. Downloading the app gives FB access to more data from your machine to sell to companies for personalized advertising purposes.

Untangling Threads by Erin Kissane

Token Merging: Your ViT But Faster

Meta is reported to be switching to payment as an alternative to tracking in the EU, as a way around GDPR issues with tracking. This is based on a CJEU verdict in which the option was mentioned as an aside. NOYB says this has previously been allowed on media sites. In my opinion it was already backward then, because it retains the fiction that advertising is only possible with tracking, which is false.

What if, early in the morning on Election Day in 2016, Mark Zuckerberg had used Facebook to broadcast “go-out-and-vote” reminders just to supporters of Hillary Clinton? Extrapolating from Facebook’s own published data, that might have given Mrs. Clinton a boost of 450,000 votes or more, with no one but Mr. Zuckerberg and a few cronies knowing about the manipulation.

That violate these Terms, the Community Standards, or other terms and policies that govern your

But only what Facebook, as owner, considers a violation, because they don't respect that someone may no longer want to keep sharing information.

We previously disabled your account for breaches of our Terms, the Community Standards, or other terms or policies that apply to your use of Facebook. If we disable your account due to a breach of our Terms, the Community Standards, or other terms or policies, you agree not to create another account without our permission. Permission to create a new account is granted at our sole discretion; this does not mean or imply that the disciplinary action was wrong or without cause.

This is a fallacy, since our experience with children has shown that accounts are not closed even when they break the law.

there’s the famous 2019 paper by Allcott et al. which found that having people deactivate Facebook for a while made them happier, while also making them socialize more and worry less about politics

we remove language that incites or facilitates serious violence

Believing in "government" REQUIRES hypocrisy, schizophrenia and delusion. One illustration of this is the bizarre and contradictory way in which social media platforms PRETEND to be against people advocating violence.

"We will use these licenses only to provide and improve our Products and services, as described in Section 1 above." FACEBOOK.

The discourse conceals an economic interest that not all users are aware of.

One can see a manipulation of the economic aspects that this type of platform conceals.

When you sign up for any of Meta's subsidiaries, you automatically grant rights shared across all the social networks belonging to this digital conglomerate. Although you grant permission to only one social network, once a person decides to leave, it is not easy to disentangle yourself from them. For example, when a person dies, if no one has access to their profiles, the profile stays active, so its content can still be used, and they can build a database and sell it to any company that decides to buy it. These legal terms are abusive in one way or another, because many of us don't read them in detail, simply because we belong to the digital age. Another clear example is cookies: we don't know what they are, but to gain access we have to accept them no matter what, without knowing the risk of personal data theft. In other cases, when a post is reported, even though it violates the community standards, it is not taken down, perhaps because the algorithms don't detect a violation. Yet the algorithms do flag posts with no bad intent: because of a single word that is on the prohibited list and detected as not allowed, posts are taken down and accounts are restricted. A recent case was the Zoom platform. Although it did a lot to bring people together during the pandemic, it was found that privacy was being violated, so many people migrated to other platforms such as Telegram, where in theory there was a bit more privacy and discretion.

https://www.facebook.com/legal/terms. Conditions are required to join Facebook, and they are clear; Facebook in turn commits to protecting the privacy of the data and information that users publish. But how does Facebook check the background of the people who join it?

For example, we may show your friends that you are interested in an advertised event or have liked a Facebook Page created by a brand that pays us to display its ads on Facebook. This type of ad and content is shown only to people who have your permission to see the actions you take on Meta Products.

These features can't even be customized, so there is no way to know what kind of content the platform is showing to our friends and to third parties.

certain content that you share or upload, such as photos or videos, may be protected by intellectual property laws. You own the intellectual property rights (such as copyright or trademarks) in all the content that you create and share on Facebook and the other Meta Company Products you use. Nothing in these Terms takes away the rights you have to your own content. You are free to share your

The license will end when your content is deleted from our systems.

How can you make sure that the content you uploaded has been deleted from Meta's systems? If they don't tell you that they will store it and use it deliberately, it isn't very likely that they'll tell you when they've deleted it either. Also, if someone has reposted or downloaded it in some way, there is no way to know that either.

This means that if, for example, you share a photo on Facebook, you give us permission to store, copy, and share it with others

In other words, as soon as a photo or any other content is uploaded to someone's profile, that content also becomes FB's. But what kind of use can Facebook make of it? How could it, for example, modify a photo of mine?

If you have been convicted of sexual offenses.

But how do they enforce this, if you can create an account under a different name?

If you are under 13 years old

The network is full of minors, not only FB but also the other apps that belong to Meta, and when you report these kinds of profiles, nothing happens. So what kind of filters do they use?

(without our permission).

Why would I have to ask FB for permission to use my account with third parties? Wouldn't they count as one themselves?

Use the same name on your account that you use in everyday life.

And then you go on Facebook and find thousands of fake profiles, even of inanimate objects

You can create only two Business Manager accounts

Check whether it's possible to have more than TWO ACCOUNTS in Business Manager

Create a Business Manager

Meta Business Help Center

In expressing disagreement with the proposed literal interpretation of Article 13(1)(c) GDPR set out in the Preliminary Draft Decision, Facebook submitted that “Facebook Ireland’s interpretation directly tracks the actual wording of the relevant GDPR provision which stipulates only that two items of information be provided about the processing (i.e. purposes and legal bases). It says nothing about processing operations.”[102] Facebook submitted that because, in its view, Article 13(1) GDPR applies “at the time data is collected”, and therefore refers only to “prospective processing”. It submits that, on this basis, Article 13(1)(c) GDPR does not relate to ongoing processing operations, but is concerned solely with information on “intended processing”.[103] Facebook’s position is therefore that Article 13(1)(c) GDPR is future-gazing or prospective only in its application and that such an interpretation is supported by a literal reading of the GDPR

This is both a ballsy, and utterly stupid argument. The kind of argument that well-paid lawyers will make in order to keep getting paid.

In light of this confirmation by the data controller that it does not seek to rely on consent in this context, there can be no dispute that, as a matter of fact, Facebook is not relying on consent as the lawful basis for the processing complained of. It has nonetheless been argued on the Complainant’s behalf that Facebook must rely on consent, and that Facebook led the Complainant to believe that it was relying on consent

Here Helen bitchslaps Max by noting that despite what they hope and wish for, FB is relying on contract, and not consent.

On this basis, the issues that I will address in this Draft Decision are as follows:

Issue 1 – Whether clicking on the “accept” button constitutes or must be considered consent for the purposes of the GDPR

Issue 2 – Reliance on Article 6(1)(b) as a lawful basis for personal data processing

Issue 3 – Whether Facebook provided the requisite information on the legal basis for processing on foot of Article 6(1)(b) GDPR and whether it did so in a transparent manner.

Key issues identified in the draft decision. Compare later to see whether the final decision differs.

Data Policy and related material sometimes, on the contrary, demonstrate an oversupply of very high level, generalised information at the expense of a more concise and meaningful delivery of the essential information necessary for the data subject to understand the processing being undertaken and to exercise his/her rights in a meaningful way. Furthermore, while Facebook has chosen to provide its transparency information by way of pieces of text, there are other options available, such as the possible incorporation of tables, which might enable Facebook to provide the information required in a clear and concise manner, particularly in the case of an information requirement comprising a number of linked elements. The importance of concision cannot be overstated nonetheless. Facebook is entitled to provide additional information to its user above and beyond that required by Article 13 and can provide whatever additional information it wishes. However, it must first comply with more specific obligations under the GDPR, and then secondly ensure that the additional information does not have the effect of creating information fatigue or otherwise diluting the effective delivery of the statutorily required information. That is simply what the GDPR requires.

DPC again schools Facebook in reality.

This is Facebook.

3. NO SELF PROMOTION, RECRUITING, OR DM SPAMMING
Members love our group because it's SAFE. We are very strict on banning members who blatantly self promote their product or services in the group OR secretly private message members to recruit them.

2. NO POST FROM FAN PAGES / ARTICLES / VIDEO LINKS
Our mission is to cultivate the highest quality content inside the group. If we allowed videos, fan page shares, & outside websites, our group would turn into a spam fest. Original written content only.

1. NO POSTING LINKS INSIDE OF POST - FOR ANY REASON
We've seen way too many groups become a glorified classified ad & members don't like that. We don't want the quality of our group negatively impacted because of endless links everywhere. NO LINKS

Using actual fake-news headlines presented as they were seen on Facebook, we show that even a single exposure increases subsequent perceptions of accuracy, both within the same session and after a week. Moreover, this “illusory truth effect” for fake-news headlines occurs despite a low level of overall believability and even when the stories are labeled as contested by fact checkers or are inconsistent with the reader’s political ideology. These results suggest that social media platforms help to incubate belief in blatantly false news stories and that tagging such stories as disputed is not an effective solution to this problem.

On Facebook, we identified 51,269 posts (0.25% of all posts) sharing links to Russian propaganda outlets, generating 5,065,983 interactions (0.17% of all interactions); 80,066 posts (0.4% of all posts) sharing links to low-credibility news websites, generating 28,334,900 interactions (0.95% of all interactions); and 147,841 posts sharing links to high-credibility news websites (0.73% of all posts), generating 63,837,701 interactions (2.13% of all interactions). As shown in Figure 2, we notice that the number of posts sharing Russian propaganda and low-credibility news exhibits an increasing trend (Mann-Kendall P < .001), whereas after the invasion of Ukraine both time series yield a significant decreasing trend (more prominent in the case of Russian propaganda); high-credibility content also exhibits an increasing trend in the Pre-invasion period (Mann-Kendall P < .001), which becomes stable (no trend) in the period afterward.

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreaders accounts that are not verified by the platform.
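The excerpt reports its trends with the Mann-Kendall test. The Python sketch below illustrates how that test works. It assumes a plain list of per-period post counts and uses the normal approximation without the tie correction that real count data usually needs. The function name `mann_kendall` is hypothetical, and this is not the authors' code.

```python
import math


def mann_kendall(series):
    """Two-sided Mann-Kendall trend test (sketch: no correction for ties).

    Returns (S, z, p). S > 0 suggests an increasing trend, S < 0 a decreasing one.
    """
    n = len(series)
    # S = number of increasing pairs minus number of decreasing pairs, over all i < j.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = series[j] - series[i]
            if diff > 0:
                s += 1
            elif diff < 0:
                s -= 1
    # Variance of S under the null hypothesis of no trend, assuming no tied values.
    var_s = n * (n - 1) * (2 * n + 5) / 18
    # Continuity-corrected z-score.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return s, z, p
```

For example, `mann_kendall(list(range(1, 21)))` returns S = 190, because every one of the 190 pairs increases, along with a p-value far below .001. A series that steadily falls gives S = -190 instead.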

I often think back to MySpace’s downfall. In 2007, I penned a controversial blog post noting a division that was forming as teenagers self-segregated based on race and class in the US, splitting themselves between Facebook and MySpace. A few years later, I noted the role of the news media in this division, highlighting how media coverage about MySpace as scary, dangerous, and full of pedophiles (regardless of empirical evidence) helped make this division possible. The news media played a role in delegitimizing MySpace (aided and abetted by a team at Facebook, which was directly benefiting from this delegitimization work).

danah boyd argued in two separate pieces that teenagers self-segregated between MySpace and Facebook based on race and class and that the news media coverage of social media created fear, uncertainty, and doubt which fueled the split.

What we want is to be able to leave Facebook and still talk to our friends, instead of having many Facebooks.

What about Matrix?

Some of the sensitive data collection analyzed by The Markup appears linked to default behaviors of the Meta Pixel, while some appears to arise from customizations made by the tax filing services, someone acting on their behalf, or other software installed on the site. For example, Meta Pixel collected health savings account and college expense information from H&R Block’s site because the information appeared in webpage titles and the standard configuration of the Meta Pixel automatically collects the title of a page the user is viewing, along with the web address of the page and other data. It was able to collect income information from Ramsey Solutions because the information appeared in a summary that expanded when clicked. The summary was detected by the pixel as a button, and in its default configuration the pixel collects text from inside a clicked button. The pixels embedded by TaxSlayer and TaxAct used a feature called “automatic advanced matching.” That feature scans forms looking for fields it thinks contain personally identifiable information like a phone number, first name, last name, or email address, then sends detected information to Meta. On TaxSlayer’s site this feature collected phone numbers and the names of filers and their dependents. On TaxAct it collected the names of dependents.

Wait, wait, wait... the software has a feature that scans for personally identifiable information and sends that detected info to Meta? And in other cases, the users of the Meta Pixel decided to send private information to Meta?
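The Python sketch below makes the reported "automatic advanced matching" behavior more concrete. It shows how a script could scan form fields heuristically for personal data. The field-name patterns, the `detect_pii` function and the sample form are all hypothetical illustrations, not Meta's actual detection rules or code.

```python
import re

# Hypothetical field-name patterns (illustrative only, NOT Meta's real rules).
FIELD_PATTERNS = {
    "email": re.compile(r"e-?mail", re.IGNORECASE),
    "phone": re.compile(r"phone|tel|mobile", re.IGNORECASE),
    "first_name": re.compile(r"first.?name|given.?name|fname", re.IGNORECASE),
    "last_name": re.compile(r"last.?name|surname|family.?name|lname", re.IGNORECASE),
}

# Rough check for a value that looks like an email address.
EMAIL_VALUE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def detect_pii(form_fields):
    """Guess which form fields hold personal data.

    form_fields: list of dicts with 'name', 'type' and 'value' keys, a stand-in
    for the inputs a script embedded in a web page could read.
    Returns a dict mapping a PII category to the first value detected for it.
    """
    detected = {}
    for field in form_fields:
        name = field.get("name", "")
        value = field.get("value", "").strip()
        if not value:
            continue  # nothing typed in yet
        # An input of type "email", or any email-shaped value, counts as an email.
        if field.get("type") == "email" or EMAIL_VALUE.match(value):
            detected.setdefault("email", value)
            continue
        # Otherwise, classify by what the field's name looks like.
        for category, pattern in FIELD_PATTERNS.items():
            if pattern.search(name):
                detected.setdefault(category, value)
                break
    return detected
```

Given `[{"name": "dependent_first_name", "type": "text", "value": "Sam"}]`, it returns `{"first_name": "Sam"}`. The field name alone is enough to flag a dependent's name, which is roughly the kind of collection The Markup describes on TaxSlayer and TaxAct.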

Trolls, in this context, are humans who hold accounts on social media platforms, more or less for one purpose: To generate comments that argue with people, insult and name-call other users and public figures, try to undermine the credibility of ideas they don’t like, and to intimidate individuals who post those ideas. And they support and advocate for fake news stories that they’re ideologically aligned with. They’re often pretty nasty in their comments. And that gets other, normal users, to be nasty, too.

Not only are programmed accounts created, but also troll accounts that propagate disinformation and spread fake news with the intent to wreak havoc on people. In short, once they post a malicious comment, some people engage with it, which leads to more rage comments and disagreements among users. That is what they do: they trigger people to engage with their comments so that the comments spread further and produce more fake news. These troll accounts are usually prominent during elections. In the Philippines, for example, some speculate that certain candidates set up troll farms just to spread fake news across social media, which some people then engage with.

So, bots are computer algorithms (set of logic steps to complete a specific task) that work in online social network sites to execute tasks autonomously and repetitively. They simulate the behavior of human beings in a social network, interacting with other users, and sharing information and messages [1]–[3]. Because of the algorithms behind bots’ logic, bots can learn from reaction patterns how to respond to certain situations. That is, they possess artificial intelligence (AI).

In all honesty, since I don't usually dwell on technology, coding, and stuff, I thought a "bot" was controlled by another user, like a real person. I never knew that bots were programmed to learn people's usual posting patterns, be it on Twitter, Facebook, or other social media platforms. I think it is important to properly understand how bots work in order to avoid misinformation and disinformation, especially during this time of heavy social media use.

Facebook users claim to hate the service, but they keep using it, leading many to describe Facebook as "addictive." But there's a simpler explanation: people keep using Facebook though they hate it because they don't want to lose their connections to the people they love.

De Block Golding, D. (2021, April 7). Viral video contains several false pandemic claims. Full Fact. https://fullfact.org/health/viral-video-contains-several-false-pandemic-claims/

Roth, E. (2021, October 30). Facebook puts tighter restrictions on vaccine misinformation targeted at children. The Verge. https://www.theverge.com/2021/10/30/22754046/facebook-tighter-restrictions-vaccine-misinformation-children

DemTech | COVID-19 Misinformation Newsletter 24 August 2021. (2021, August 24). https://demtech.oii.ox.ac.uk/covid-19-misinformation-newsletter-24-august-2021/#continue

Berliner Impfgegner wegen Volksverhetzung verurteilt [Berlin anti-vaxxer convicted of incitement to hatred]. (2021, September 30). rbb24. https://www.rbb24.de/panorama/beitrag/2021/09/berlin-verurteilung-volksverhetzung-gelber-davidstern-impfgegner-antisemitismus.html

Meet the media startups making big money on vaccine conspiracies. (n.d.). Fortune. Retrieved December 23, 2021, from https://fortune.com/2021/05/14/disinformation-media-vaccine-covid19/

The landscape of social media is ever-changing, especially among teens who often are on the leading edge of this space. A new Pew Research Center survey of American teenagers ages 13 to 17 finds TikTok has rocketed in popularity since its North American debut several years ago and now is a top social media platform for teens among the platforms covered in this survey. Some 67% of teens say they ever use TikTok, with 16% of all teens saying they use it almost constantly. Meanwhile, the share of teens who say they use Facebook, a dominant social media platform among teens in the Center’s 2014-15 survey, has plummeted from 71% then to 32% today.

This echoes Meta’s concerns that Facebook was losing ground in this age demographic, and it is likely also the reasoning behind making Instagram more TikTok-like. This may also dovetail with the recently announced change to the Facebook algorithm to make it even more sticky and TikTok-like.

"Facebook is fundamentally an advertising machine": it hasn't been about bringing people closer together in a long time (if that was ever its real mission). And as a better advertising machine comes along (TikTok), Facebook is forced to redesign its user interaction to be more addictive just to stand still. Will a more human-scale social network, or series of social networks, replace it?

Recommendation media is the new standard for content distribution. Here’s why friend graphs can’t compete in an algorithmic world.

Mark last week as the end of the social networking era, which began with the rise of Friendster in 2003, shaped two decades of internet growth, and now closes with Facebook's rollout of a sweeping TikTok-like redesign.

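This article leans negative, but it is fair. Once a product has been around for ten years, it faces too many internal and external challenges: old users are losing interest, while competitors are capturing the attention of new users. Facebook's product has therefore become an awkward example of how to add new features to an old product. Armstrong writes:

If you open your Facebook app, I think you'd be shocked by the number of products currently housed there... yet each of these products does a different job, so the value proposition of downloading Facebook becomes increasingly blurry.

Another accurate observation concerns Messenger. This app was split off from Facebook's private messaging feature and is now being merged back into the main app. One ambitious project even aims to merge the instant-messaging parts of three otherwise unrelated products, Facebook, Instagram, and WhatsApp, into one.

The news feed and private messages are the two extremes of a social product. The former is public, the public sphere, where algorithmic recommendation has left publishers with almost no control over how their information is distributed. The latter is private, the private sphere. Over the past decade or more of social platforms (whether media or networks), we have come to understand that there is a clear boundary between the two, and that putting the wrong content in the wrong place can cause irreversible damage.

Armstrong summarizes this history as follows: Over the past few years, we've learned a few things about so-called social networks. First, we don't actually care what most people think. The social part is not as compelling as first imagined, because much of people's daily life is boring. We simply don't care, don't care at all, about the political views of someone from your high school. Content from acquaintances isn't that interesting. We have also figured out that publishing most of our social presence online carries a negative opportunity cost. All of us, and I mean every single one of us, have used foul language in public, told racist jokes, said derogatory words, or simply been stupid. Since 2007 it has become increasingly clear that the benefits of sharing your life online are not worth the risk of saying things that feel good now but could destroy your life ten years later. In short, the social graph is a poor substitute for the interest graph. What people are looking for from their so-called "social" media is simply media that interests them.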

What's become clear is that our relationships are experiencing a profound reset. Across generations, having faced a stark new reality, a decades-long trend[1] reversed as people are now shifting their energy away from maintaining a wide array of casual connections to cultivating a smaller circle of the people who matter most.

‘how the demand for deeper human connection has sparked a profound reset in our relationships’.

The Meta Foresight (formerly Facebook IQ) team conducted a survey of 36,000 adults across 12 markets.

Among their key findings:

72% of respondents said that the pandemic caused them to reprioritize their closest friends

Young people are most open to using more immersive tech to foster connections (including augmented and virtual reality), though all users indicated that tech will play a bigger role in enhancing personal connections moving forward

37% of people surveyed globally reported reassessing their life priorities as a result of the pandemic

And when corporations started to dominate the Internet, they became de facto governments. Slowly but surely, the tech companies began to act like old power. They use the magic of tech to consolidate their own power, using money to increase their influence, blocking the redistribution of power from the entrenched elites to ordinary people.

The corporations built by white, male, American, and vaguely libertarian people became a focal point of power because of the money they had to influence governments and society. They started looking like "old power."

Later:

Facebook took advantage of tech's tradition of openness [importing content from MySpace], but as soon as it got what it wanted, it closed its platform off.

We believe that Facebook is also actively encouraging people to use tools like Buffer Publish for their business or organization, rather than personal use. They are continuing to support the use of Facebook Pages, rather than personal Profiles, for things like scheduling and analytics.

Of course they're encouraging people to do this. Pushing them to the business side is where they're making all the money.

Facebook provides some data portability, but makes an odd plea for regulation to make more functionality possible.

Why do this when they could choose to do the right thing? They don't need to be forced and could certainly try to enforce security. It wouldn't be any worse than unveiling the tons of personal data they've managed not to protect in the past.

...devises a railway system that brings the victims to Auschwitz as quickly and smoothly as possible, and in doing so forgets what happens to them in Auschwitz.

Not comparable, but another example of technology being misused because it is misunderstood as an end in itself: Facebook and human trafficking. See the Jan Böhmermann episode on Facebookleaks.

Content moderation takes place within this ecosystem.

The essay makes the point that "Facebook has many faces - it is not a monolith". But algorithmic content moderation is monolithic. Let's see whether this tension is investigated.

Five biggest myths about the COVID-19 vaccines, debunked. (n.d.). Fortune. Retrieved April 29, 2022, from https://fortune.com/2021/10/02/five-biggest-myths-covid-19-vaccines/

identical to the content of information which was previously declared to be unlawful, or to block access to that information, irrespective of who requested the storage of that information;

This establishes that identical or equivalent content, once struck down, can be made to stay down by automated tools.
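To make "identical content stays down" concrete, here is a minimal sketch. It assumes the simplest possible automated tool: exact matching on a SHA-256 hash of lightly normalized text. Nothing in the ruling or the note says Facebook works this way. The names `normalize`, `fingerprint` and `StayDownFilter`, and the normalization steps, are hypothetical. Exact hashing only covers the "identical" clause; catching "equivalent" content would need fuzzier matching.

```python
import hashlib
import unicodedata


def normalize(text: str) -> str:
    """Hypothetical normalization: Unicode NFKC, case-folding, collapsed whitespace."""
    text = unicodedata.normalize("NFKC", text).casefold()
    return " ".join(text.split())


def fingerprint(text: str) -> str:
    """SHA-256 hex digest of the normalized text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


class StayDownFilter:
    """Hypothetical stay-down list of fingerprints of content already ruled unlawful."""

    def __init__(self) -> None:
        self._blocked: set[str] = set()

    def register_unlawful(self, text: str) -> None:
        # Store only the fingerprint, not the text itself.
        self._blocked.add(fingerprint(text))

    def should_block(self, text: str) -> bool:
        # The uploader is never consulted, mirroring
        # "irrespective of who requested the storage of that information".
        return fingerprint(text) in self._blocked


f = StayDownFilter()
f.register_unlawful("This is the unlawful post.")
print(f.should_block("this is   the UNLAWFUL post."))  # True: identical after normalization
print(f.should_block("This is a reworded version of the post."))  # False: rewording evades exact matching
```

The second check shows the limit of this design. A single reworded sentence slips through, which is why the "equivalent content" clause is the harder part to automate.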

Directive 2000/31/EC of the European Parliament and of the Council of 8 June 2000 on certain legal aspects of information society services, in particular electronic commerce, in the Internal Market (‘Directive on electronic commerce’), in particular Article 15(1), must be interpreted as meaning that it does not preclude a court of a Member State from:
– ordering a host provider to remove information which it stores, the content of which is identical to the content of information which was previously declared to be unlawful, or to block access to that information, irrespective of who requested the storage of that information;
– ordering a host provider to remove information which it stores, the content of which is equivalent to the content of information which was previously declared to be unlawful, or to block access to that information, provided that the monitoring of and search for the information concerned by such an injunction are limited to information conveying a message the content of which remains essentially unchanged compared with the content which gave rise to the finding of illegality and containing the elements specified in the injunction, and provided that the differences in the wording of that equivalent content, compared with the wording characterising the information which was previously declared to be illegal, are not such as to require the host provider to carry out an independent assessment of that content, and
– ordering a host provider to remove information covered by the injunction or to block access to that information worldwide within the framework of the relevant international law.

C‑18/18 Eva Glawischnig-Piesczek v Facebook

Key for Art 17 AG opinion: this line of arguments justifies the ex ante blocking of manifestly infringing content.

Ben Collins. (2022, February 28). Quick thread: I want you all to meet Vladimir Bondarenko. He’s a blogger from Kiev who really hates the Ukrainian government. He also doesn’t exist, according to Facebook. He’s an invention of a Russian troll farm targeting Ukraine. His face was made by AI. https://t.co/uWslj1Xnx3 [Tweet]. @oneunderscore__. https://twitter.com/oneunderscore__/status/1498349668522201099

First is that it actually lowers paid acquisition costs. It lowers them because the Facebook Ads algorithm rewards engaging advertisements with lower CPMs and lots of distribution. Facebook does this because engaging advertisements are just like engaging posts: they keep people on Facebook.

Engaging advertisements on Facebook benefit from lower acquisition costs because the Facebook algorithm rewards more interesting advertisements with lower CPMs and wider distribution. Like everything driven by surveillance capitalism, this is done to keep eyeballs on Facebook.
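Here is the arithmetic behind "lower CPMs lower acquisition costs", as a sketch. CPM is the price per 1,000 impressions. If the same ad converts at the same rate, cost per acquisition falls in direct proportion to the CPM. The specific CPMs and the 2-conversions-per-1,000-impressions rate are made-up numbers. The linear model is also an assumption: it ignores the fact that a more engaging ad may convert better as well.

```python
def cost_per_acquisition(cpm: float, conversions_per_1000_impressions: float) -> float:
    """CPA = spend / conversions; spend per 1,000 impressions is the CPM."""
    if conversions_per_1000_impressions <= 0:
        raise ValueError("conversion rate must be positive")
    return cpm / conversions_per_1000_impressions


# Hypothetical: the same creative converting 2 buyers per 1,000 impressions.
print(cost_per_acquisition(cpm=12.0, conversions_per_1000_impressions=2.0))  # 6.0
print(cost_per_acquisition(cpm=8.0, conversions_per_1000_impressions=2.0))   # 4.0
```

Under these assumptions, a one-third cut in CPM cuts the cost of each customer by one third.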

This isn't too dissimilar to large cable networks that provide free, high-quality advertising to mass manufacturers in late-night slots. The network generally can't sell all of its advertising inventory, particularly in low-viewing hours, so it will offer free or incredibly cheap commercial rates to its bigger buyers (like Coca-Cola or McDonald's, for example). This fills space and puts more professional-looking advertisements between the low-quality advertisements from local mom-and-pop stores and the "as seen on TV" spots. These higher-quality commercials help keep the audience engaged and prevent viewers from changing the channel.

George Washington University. (n.d.). Facebook’s vaccine misinformation policy reduces anti-vax information. Retrieved March 7, 2022, from https://medicalxpress.com/news/2022-03-facebook-vaccine-misinformation-policy-anti-vax.html

Giglietto, F., Farci, M., Marino, G., Mottola, S., Radicioni, T., & Terenzi, M. (2022). Mapping Nefarious Social Media Actors to Speed-up Covid-19 Fact-checking. SocArXiv. https://doi.org/10.31235/osf.io/6umqs

Maher, S. (2022, January 3). Misinformation from the U.S. is the next virus—And it’s spreading fast. Macleans.Ca. https://www.macleans.ca/society/health/misinformation-from-the-u-s-is-the-next-virus-and-its-spreading-fast/

She thinks the companies themselves are behind this, trying to manipulate their users into having certain opinions and points of view.

The irony is that this is, itself, somewhat of a conspiracy theory.

Though, I think a nuanced understanding may be closer:

Only 10 percent say Facebook has a positive impact on society, while 56 percent say it has a negative impact and 33 percent say its impact is neither positive nor negative. Even among those who use Facebook daily, more than three times as many say the social network has a negative rather than a positive impact.

Here's the rub. Only 1 out of 10 Americans surveyed think Facebook has a positive impact on society.

Over half of Americans surveyed actually think Facebook is bad for them and society as a whole. And yet, the general sense is now that life is impossible without it.

How does the church respond to this? Do we tell people to get off or "use in moderation?"

ReconfigBehSci on Twitter: ‘RT @NBCNewsNow: Covid conspiracy theories born in the U.S. are having a deadly impact around the world. @BrandyZadrozny takes us to Roman…’ / Twitter. (n.d.). Retrieved 3 December 2021, from https://twitter.com/SciBeh/status/1466065323879243782

US white supremacists targeting under-vaxxed Aboriginal communities, WA Premier says. (2021, December 2). ABC News. https://www.abc.net.au/news/2021-12-02/us-white-supremacists-targeting-aboriginal-communities-in-wa/100670090

How the Far-Right Is Radicalizing Anti-Vaxxers. (n.d.). Retrieved December 2, 2021, from https://www.vice.com/en/article/88ggqa/how-the-far-right-is-radicalizing-anti-vaxxers

Claims about changes to Pfizer vaccine for children miss important details. (n.d.). Full Fact. https://fullfact.org/online/claims-about-changes-pfizer-vaccine-children-miss-important-details/

Facebook froze as anti-vaccine comments swarmed users. (n.d.). MSN. Retrieved November 12, 2021, from https://www.msn.com/en-ca/news/science/facebook-froze-as-anti-vaccine-comments-swarmed-users/ar-AAPY06U

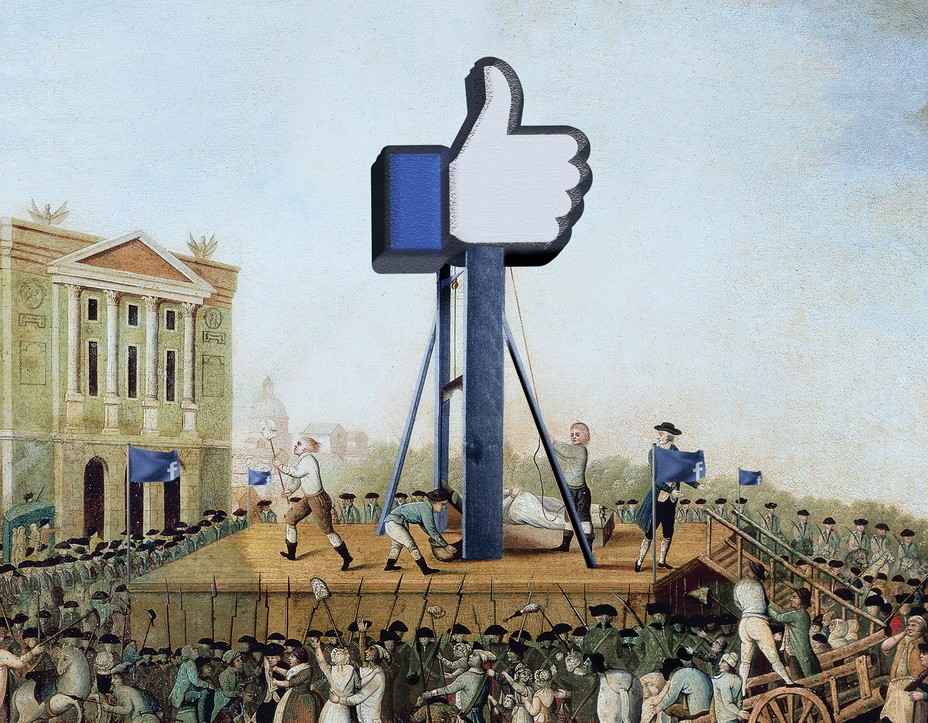

Source: De Agostini Picture Library / Getty

This is a searing image for what this article is about:

Could be entitled "A different kind of social justice."

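TIME magazine's October cover shows a photo of Zuckerberg with "Delete "Facebook"?" over it. The alert uses an iOS-style dialog but pairs it with a mouse pointer for selection. It is entirely understandable why they did this, but it still sparked some discussion. The most interesting part came from a group of former Apple employees describing Apple's design process back then. Majd Taby, the designer formerly responsible for redesigning me.com, said his job at the time was to port the iPad styling wholesale to the me.com web app. The end result was exactly this: an iPad-style alert selected with a mouse, just like on the cover. Sebastiaan de With, formerly responsible for the iCloud web apps, said Apple teams shared no UIKit kits or design source files internally at all, so every team doing something similar had to start from scratch. Keep in mind that this was the era of skeuomorphic design, when drawing a UI set took an enormous amount of time, and all of it was for the sake of "secrecy." Martin Pedrick said the first thing he was told on joining the user interface group was not to use any resource-sharing tools to share design source files.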

https://www.eventbrite.com/e/logging-off-facebook-what-comes-next-tickets-201128228947

Not attending, but an interesting list of people and related projects to watch.

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>David Dylan Thomas</span> in Come and get yer social justice metaphors! (<time class='dt-published'>11/05/2021 11:26:10</time>)</cite></small>

NHS Covid-19 app is not the third most expensive project ever. (n.d.). Full Fact. https://fullfact.org/online/track-and-trace-project-cost/

NHS Test and Trace has not spent £37bn so far. (n.d.). Full Fact. https://fullfact.org/online/37bn-test-trace-spending/

Adrienne LaFrance outlines the reasons we need to either abandon Facebook or cause some more extreme regulation of it and how it operates.

While she outlines the ills, she doesn't make a specific plea about the solution of the problem. There's definitely a raging fire in the theater, but no one seems to know what to do about it. We're just sitting here watching the structure burn down around us. We need clearer plans for what must be done to solve this problem.

When the most powerful company in the world possesses an instrument for manipulating billions of people—an instrument that only it can control, and that its own employees say is badly broken and dangerous—we should take notice.

Facebook could say that its platform is not for everyone. It could sound an alarm for those who wander into the most dangerous corners of Facebook, and those who encounter disproportionately high levels of harmful content. It could hold its employees accountable for preventing users from finding these too-harmful versions of the platform, thereby preventing those versions from existing.

The "moral majority" has screamed for years about the dark corners of the internet, and now they seem to be actively supporting a company that actively pushes people to those very extremes.

Facebook could shift the burden of proof toward people and communities to demonstrate that they’re good actors—and treat reach as a privilege, not a right.

Nice to see someone else essentially saying something along the lines that "free speech" is not the same as "free reach".

Traditional journalism has always had thousands of gatekeepers who filtered and weighed who got the privilege of reach. Now anyone with an angry, vile, or upsetting message can get it for free. This is one of the worst parts of what Facebook allows.

“While we have other systems that demote content that might violate our specific policies, like hate speech or nudity, this intervention reduces all content with equal strength. Because it is so blunt, and reduces positive and completely benign speech alongside potentially inflammatory or violent rhetoric, we use it sparingly.”

If it's neither moral nor legal for one to shout "fire" in a crowded theater, why is it somehow both legal and moral for a service like Facebook to allow their service to scream "fire, fire, fire" within a crowded society?

Facebook wants people to believe that the public must choose between Facebook as it is, on the one hand, and free speech, on the other. This is a false choice.

One example is a program that amounts to a whitelist for VIPs on Facebook, allowing some of the users most likely to spread misinformation to break Facebook’s rules without facing consequences.

“I am worried that Mark’s continuing pattern of answering a different question than the question that was asked is a symptom of some larger problem,” wrote one Facebook employee in an internal post in June 2020, referring to Zuckerberg. “I sincerely hope that I am wrong, and I’m still hopeful for progress. But I also fully understand my colleagues who have given up on this company, and I can’t blame them for leaving. Facebook is not neutral, and working here isn’t either.”

Glad to see that others also see Mark Zuckerberg as the one whose flaws are killing Facebook.

An internal message characterizing Zuckerberg’s reasoning says he wanted to avoid new features that would get in the way of “meaningful social interactions.” But according to Facebook’s definition, its employees say, engagement is considered “meaningful” even when it entails bullying, hate speech, and reshares of harmful content.

Meaningful social interactions don't need algorithmic help.

At the time, Facebook was already weighting the reactions other than “like” more heavily in its algorithm—meaning posts that got an “angry” reaction were more likely to show up in users’ News Feeds than posts that simply got a “like.” Anger-inducing content didn’t spread just because people were more likely to share things that made them angry; the algorithm gave anger-inducing content an edge. Facebook’s Integrity workers—employees tasked with tackling problems such as misinformation and espionage on the platform—concluded that they had good reason to believe targeting posts that induced anger would help stop the spread of harmful content.
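Here is a toy sketch of what "weighting the reactions other than like more heavily" does to a ranked feed. The weights are invented for illustration, since the passage gives no numbers. `REACTION_WEIGHTS`, `engagement_score` and `rank` are hypothetical names, not Facebook's code. The only point is that once "angry" counts for more than "like", a post with fewer but angrier reactions can outrank a calmer post with more total reactions.

```python
# Hypothetical weights: "like" counts 1, every other reaction counts more.
REACTION_WEIGHTS = {
    "like": 1.0,
    "love": 5.0,
    "care": 5.0,
    "haha": 5.0,
    "wow": 5.0,
    "sad": 5.0,
    "angry": 5.0,
}


def engagement_score(reactions: dict[str, int]) -> float:
    """Weighted sum of reaction counts; unknown reaction types contribute nothing."""
    return sum(REACTION_WEIGHTS.get(kind, 0.0) * count for kind, count in reactions.items())


def rank(posts: dict[str, dict[str, int]]) -> list[str]:
    """Post ids ordered from highest to lowest weighted score."""
    return sorted(posts, key=lambda pid: engagement_score(posts[pid]), reverse=True)


posts = {
    "calm_post": {"like": 300},                  # score 300
    "outrage_post": {"like": 40, "angry": 80},   # score 40 + 400 = 440
}
print(rank(posts))  # ['outrage_post', 'calm_post']
```

The calm post has 300 reactions and the outrage post only 120, yet the weighting puts the outrage post on top.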

Facebook offers a collection of one-tap emoji reactions. Today, they include “like,” “love,” “care,” “haha,” “wow,” “sad,” and “angry.” Company researchers had found that the posts dominated by “angry” reactions were substantially more likely to go against community standards, including prohibitions on various types of misinformation, according to internal documents.

"Angry" reactions can be a measure of posts being against community standards and providing misinformation.

What other signals might misinformation carry that could be used to guard against them at a corporate level?
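Here is one way the "angry" finding could become a screening signal, as a sketch. It computes the share of a post's reactions that are "angry" and queues angry-dominated posts for human review. The 0.5 threshold and the 20-reaction floor are arbitrary assumptions, and `Post`, `angry_share` and `flag_for_review` are hypothetical. The internal documents only say such posts were more likely to break community standards. So a flag here marks a candidate for review, not a violation.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    reactions: dict[str, int]


def angry_share(reactions: dict[str, int]) -> float:
    """Fraction of all reactions that are 'angry' (0.0 when there are none)."""
    total = sum(reactions.values())
    return reactions.get("angry", 0) / total if total else 0.0


def flag_for_review(posts: list[Post], threshold: float = 0.5, min_reactions: int = 20) -> list[str]:
    """Hypothetical triage rule: angry-dominated posts with enough reactions go to human review."""
    flagged = []
    for post in posts:
        enough_data = sum(post.reactions.values()) >= min_reactions
        if enough_data and angry_share(post.reactions) > threshold:
            flagged.append(post.post_id)
    return flagged


posts = [
    Post("p1", {"like": 10, "angry": 30}),  # 75% angry, 40 reactions: flagged
    Post("p2", {"like": 90, "angry": 10}),  # 10% angry: not flagged
    Post("p3", {"angry": 5}),               # 100% angry but too few reactions to judge
]
print(flag_for_review(posts))  # ['p1']
```

The minimum-reaction floor keeps a handful of angry reactions on a tiny post from triggering review on their own.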

that many of Facebook’s employees believe their company operates without a moral compass.

Not just Facebook, but specifically Mark Zuckerberg who appears to be on the spectrum and isn't capable of being moral in a traditional sense.

Facebook has dismissed the concerns of its employees in manifold ways. One of its cleverer tactics is to argue that staffers who have raised the alarm about the damage done by their employer are simply enjoying Facebook’s “very open culture,” in which people are encouraged to share their opinions, a spokesperson told me.

Is Facebook ‘Killing Us’? A new study investigates. (n.d.). Retrieved October 25, 2021, from https://news.northwestern.edu/stories/2021/07/is-facebook-killing-us-a-new-study-investigates/

CNN, R. K., Scott Bronstein, Curt Devine and Drew Griffin. (n.d.). They take an oath to do no harm, but these doctors are spreading misinformation about the Covid vaccine. CNN. Retrieved 25 October 2021, from https://www.cnn.com/2021/10/19/us/doctors-covid-vaccine-misinformation-invs/index.html

McIntyre, N. (2021, October 21). Group that spread false Covid claims doubled Facebook interactions in six months. The Guardian. https://www.theguardian.com/technology/2021/oct/21/group-that-spread-false-covid-claims-doubled-facebook-interactions-in-six-months

Illari, L., Restrepo, N. J., Leahy, R., Velasquez, N., Lupu, Y., & Johnson, N. F. (2021). Losing the battle over best-science guidance early in a crisis: Covid-19 and beyond. ArXiv:2110.09634 [Nlin, Physics:Physics]. http://arxiv.org/abs/2110.09634

A Whirlwind Week of Whistleblowing

From a six hour service outage to a senate whistleblower hearing, the PR disasters keep mounting for Facebook.

Not only is Zuckerberg being called out for negligence, but his ridiculous proposal for “Instagram for Kids”, a social platform targeting children under the age of 13, would clearly only exacerbate the problem.

Whistleblowing

Former anti-vax Edson woman shares husband’s COVID-19 ICU horror story | Edmonton Journal. (n.d.). Retrieved October 10, 2021, from https://edmontonjournal.com/news/local-news/former-anti-vax-edson-woman-shares-husbands-covid-19-icu-horror-story

A magnet won’t stick to a glass vaccine vial. (2021, June 7). Full Fact. https://fullfact.org/online/covid-vaccine-vial-magnetic/

Vraga, E. K., & Bode, L. (n.d.). Addressing COVID-19 Misinformation on Social Media Preemptively and Responsively - Volume 27, Number 2—February 2021 - Emerging Infectious Diseases journal - CDC. https://doi.org/10.3201/eid2702.203139

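Facebook's vision of the future is built on AR (augmented reality), but the interaction methods of existing AR devices are clearly not yet good enough for everyday use. To realize this vision, the team has come up with the following ideas for entirely new ways of interacting: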

Mena, P. (2020). Cleaning Up Social Media: The Effect of Warning Labels on Likelihood of Sharing False News on Facebook. Policy & Internet, 12(2), 165–183. https://doi.org/10.1002/poi3.214

YouTube is banning Joseph Mercola and a handful of other anti-vaccine activists—The Washington Post. (n.d.). Retrieved October 1, 2021, from https://www.washingtonpost.com/technology/2021/09/29/youtube-ban-joseph-mercola/

“Vigilante treatments”: Anti-vaccine groups push people to leave ICUs. (n.d.). Retrieved September 26, 2021, from https://www.nbcnews.com/tech/tech-news/vigilante-treatments-anti-vaccine-groups-push-people-leave-icus-rcna2233

Ben Collins on Twitter: “A quick thread: It’s hard to explain just how radicalized ivermectin and antivax Facebook groups have become in the last few weeks. They’re now telling people who get COVID to avoid the ICU and treat themselves, often by nebulizing hydrogen peroxide. So, how did we get here?” / Twitter. (n.d.). Retrieved September 26, 2021, from https://twitter.com/oneunderscore__/status/1441395300002848769?s=20

Screenshot of website about Covid-19 vaccine side effects is fake. (n.d.). Full Fact. https://fullfact.org/health/yellow-card-fake-website/

Zuckerman, E. (2021). Demand five precepts to aid social-media watchdogs. Nature, 597(7874), 9–9. https://doi.org/10.1038/d41586-021-02341-9

We may think of Pinterest as a visual form of commonplacing, as people choose and curate images (and very often inspirational quotations) that they find motivating, educational, or idealistic(Figure 6). Whenever we choose a passage to cite while sharing an article on Facebook or Twitter, we are creating a very public commonplace book on social media. Every time wepost favorite lyrics from a song or movie to social media or ablog, weare nearing the concept of Renaissance commonplace book culture.

I'm not the only one who's thought this. Pinterest, Facebook, twitter, (and other social media and bookmarking software) can be considered a form of commonplace.

Reuters. (2021, August 18). Facebook removes dozens of vaccine misinformation ‘superspreaders’. Reuters. https://www.reuters.com/technology/facebook-removes-dozens-vaccine-misinformation-superspreaders-2021-08-18/

“Fact Check-Video Does Not Show Australian Children with COVID-19 Being Separated from Their Parents.” Reuters, August 20, 2021, sec. Reuters Fact Check. https://www.reuters.com/article/factcheck-australia-children-idUSL1N2PR183.

‘Analysis | People Are More Anti-Vaccine If They Get Their Covid News from Facebook than from Fox News, Data Shows’. Washington Post. Accessed 4 August 2021. https://www.washingtonpost.com/politics/2021/07/27/people-are-more-anti-vaccine-if-they-get-their-covid-19-news-facebook-rather-than-fox-news-new-data-shows/.

The Daily 202: Nearly 30 groups urge Facebook, Instagram, Twitter to take down vaccine disinformation—The Washington Post. (n.d.). Retrieved August 2, 2021, from https://www.washingtonpost.com/politics/2021/07/19/daily-202-nearly-30-groups-urge-facebook-instagram-twitter-take-down-vaccine-disinformation/?utm_source=twitter&utm_campaign=wp_main&utm_medium=social

We’ve analyzed thousands of COVID-19 misinformation narratives. Here are six regional takeaways—Bulletin of the Atomic Scientists. (n.d.). Retrieved August 1, 2021, from https://thebulletin.org/2021/06/weve-analyzed-thousands-of-covid-19-misinformation-narratives-here-are-six-regional-takeaways/

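Facebook used to be a company led by two centers of power, Mark Zuckerberg and Sheryl Sandberg. Since Trump took office, however, that arrangement has changed a great deal. Employees widely believe Facebook's power structure has shifted from two centers to a single center plus everyone else. The reason given is that Sheryl Sandberg did not manage Facebook's relationship with Washington well during Trump's term.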

This piece is excerpted from the not-yet-released book “An Ugly Truth: Inside Facebook's Battle for Domination”.

Facebook Sided With The Science Of The Coronavirus. What Will It Do About Vaccines And Climate Change? (n.d.). BuzzFeed News. Retrieved 11 February 2021, from https://www.buzzfeednews.com/article/alexkantrowitz/facebook-coronavirus-misinformation-takedowns

Akhther, N. (2021). Internet Memes as Form of Cultural Discourse: A Rhetorical Analysis on Facebook. PsyArXiv. https://doi.org/10.31234/osf.io/sx6t7

Carl Heneghan on Twitter. (2020). Twitter. Retrieved 2 March 2021, from https://twitter.com/carlheneghan/status/1329861848573861888

Yasseri, T., & Menczer, F. (2021). Can the Wikipedia moderation model rescue the social marketplace of ideas? ArXiv:2104.13754 [Physics]. http://arxiv.org/abs/2104.13754

Stanley-Becker, I. (n.d.). Anti-vaccine protest at Dodger Stadium was organized on Facebook, including promotion of banned ‘Plandemic’ video. Washington Post. Retrieved 21 February 2021, from https://www.washingtonpost.com/health/2021/02/01/dodgers-anti-vaccine-protest-facebook/

Saltz, E., Leibowicz, C., & Wardle, C. (2020). Encounters with Visual Misinformation and Labels Across Platforms: An Interview and Diary Study to Inform Ecosystem Approaches to Misinformation Interventions. ArXiv:2011.12758 [Cs]. http://arxiv.org/abs/2011.12758

Do your neighbors want to get vaccinated? (n.d.). MIT Technology Review. Retrieved 11 February 2021, from https://www.technologyreview.com/2021/01/16/1016264/covid-vaccine-acceptance-us-county/

Covid-19 pandemic was not planned by Rockefeller Foundation. (n.d.). Full Fact. https://fullfact.org/online/covid-19-pandemic-was-not-planned-rockefeller-foundation/

T. H. (2021, April 30). Why a Former Anti-Vax Influencer Got Her COVID-19 Shot. Texas Monthly. https://www.texasmonthly.com/news-politics/anti-vax-influencer-covid-19-vaccine-hesitancy/

The CDC Should Be More Like Wikipedia—The Atlantic. (n.d.). Retrieved July 23, 2021, from https://www.theatlantic.com/ideas/archive/2021/07/cdc-should-be-more-like-wikipedia/619469/

Facebook AI. (2021, July 16). We’ve built and open-sourced BlenderBot 2.0, the first #chatbot that can store and access long-term memory, search the internet for timely information, and converse intelligently on nearly any topic. It’s a significant advancement in conversational AI. https://t.co/H17Dk6m1Vx https://t.co/0BC5oQMEck [Tweet]. @facebookai. https://twitter.com/facebookai/status/1416029884179271684

Reuters. (2021, July 17). ‘They’re killing people’: Biden slams Facebook for Covid disinformation. The Guardian. http://www.theguardian.com/media/2021/jul/17/theyre-killing-people-biden-slams-facebook-for-covid-misinformation

Majority of Covid misinformation came from 12 people, report finds | Coronavirus | The Guardian. (n.d.). Retrieved July 19, 2021, from https://www.theguardian.com/world/2021/jul/17/covid-misinformation-conspiracy-theories-ccdh-report?utm_source=dlvr.it&utm_medium=twitter

‘Social Networks Are Exporting Disinformation About Covid Vaccines’. Bloomberg.Com, 20 May 2021. https://www.bloomberg.com/news/articles/2021-05-20/facebook-instagram-twitter-export-covid-vaccine-misinformation-from-u-s.

Dwight Rhinosoros. (2021, June 27). It really can’t be overstated how poisoned the brains of Facebook propaganda boomers are. Https://t.co/eriAt872Ro [Tweet]. @rhinosoros. https://twitter.com/rhinosoros/status/1408933830497648644

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Mike Caulfield</span> in Mike Caulfield on Twitter: "Ok, pressing play again." / Twitter (<time class='dt-published'>06/09/2021 15:47:36</time>)</cite></small>

The Moderna vaccine contains SM-102 not chloroform—Full Fact. (n.d.). Retrieved May 26, 2021, from https://fullfact.org/health/SM-102/

“Over the next five to ten years people will start to learn the importance of privacy and keeping their data,” says Moore. “Facebook’s business model is all about tracking – they are not a social media company, they are an advertising company and if they can track you they can make more money. Apple has got nothing to worry about, but Facebook could be gone in ten years.”

A former FB executive and long-standing friend of Zuckerberg emailed him in 2012 (page 31) to say “The number one threat to Facebook is not another scaled social network, it is the fracturing of information / death by a thousand small vertical apps which are loosely integrated together.”

And this is almost exactly what the IndieWeb is.

The single alternative platform is absolutely not the Facebook-killer.

This is the truth. One need only look at cable television providers or telephone service providers to see the problems here.

To change incentives so that personal data is treated with appropriate care, we need criminal penalties for the Facebook executives who left vulnerable half a billion people’s personal data, unleashing a lifetime of phishing attacks, and who now point to an FTC deal indemnifying them from liability because our phone numbers and unchangeable dates of birth are “old” data.

We definitely need penalties and regulation to fix our problems.

A strong and cogent argument for why we should not be listening to the overly loud cries from Tristan Harris and the Center for Humane Technology. The boundary of criticism they're setting is not extreme enough to make the situation significantly better.

It's also a strong argument for who to allow at the table or not when making decisions and evaluating criticism.

it makes a difference whether the argument made before Congress is “Facebook is bad, cannot reform itself, and is guided by people who know what they’re doing but are doing it anyway—and the company needs to be broken up immediately” or if the argument is “Facebook means well, but it sure would be nice if they could send out fewer notifications and maybe stop recommending so much conspiratorial content.”

Note the dramatic difference between these spaces and the potential ability for things to get better.

Misinformation “superspreaders”: Covid vaccine falsehoods still thriving on Facebook and Instagram. (2021, January 6). The Guardian. http://www.theguardian.com/world/2021/jan/06/facebook-instagram-urged-fight-deluge-anti-covid-vaccine-falsehoods

This would be funnier if it weren't so painfully true.

Whether Trump can return to Facebook (and Instagram) will be determined on Wednesday morning, when Facebook’s Oversight Board offers its ruling on the company’s indefinite ban. Check TheWrap.com around 6:15 a.m. PT on Wednesday for an update.

Let's hope that the answer is a resounding "NO!"

The report underscores how climate misinformation is the next front in the disinformation battles.

Shelby, A., & Ernst, K. (2013). Story and science. Human Vaccines & Immunotherapeutics, 9(8), 1795–1801. https://doi.org/10.4161/hv.24828

Why the wrist

Why choose a wristband as the interaction device? Facebook explains it this way:

Yang, K.-C., Pierri, F., Hui, P.-M., Axelrod, D., Torres-Lugo, C., Bryden, J., & Menczer, F. (2020). The COVID-19 Infodemic: Twitter versus Facebook. ArXiv:2012.09353 [Cs]. http://arxiv.org/abs/2012.09353

Smith, N., & Graham, T. (2019). Mapping the anti-vaccination movement on Facebook. Information, Communication & Society, 22(9), 1310–1327. https://doi.org/10.1080/1369118X.2017.1418406

Jamison, A. M., Broniatowski, D. A., Dredze, M., Wood-Doughty, Z., Khan, D., & Quinn, S. C. (2020). Vaccine-Related Advertising in the Facebook Ad Archive. Vaccine, 38(3), 512–520. https://doi.org/10.1016/j.vaccine.2019.10.066

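The Information conducted a 45-minute interview with Mark Zuckerberg covering Facebook's AR/VR strategy, thinking, and details. Current devices and content have all sorts of problems, but he firmly believes this will be the next computing platform: "Facebook is committed to making devices everyone can afford, rather than relying on premium pricing to make money like Apple." In support of this strategy, nearly one-fifth of Facebook's staff work for Facebook Reality Labs.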

according to Hao, Mark Zuckerberg wants to increase the number of people who log into Facebook six days a week. I’m not going to get into whether this is good or bad here, but it certainly shows that what you are doing, and how you are doing it, is very important to Facebook.

Can we leverage these data points to follow a movement like Meatless Monday to encourage people to not use Facebook two days a week to cause this data analysis to crash?

Anything that is measured can be gamed. But there's also the issue of a moving target: Facebook will change its target, which means community activism will need to change its target as well. (This may be fine if community engagement and education are the overall mission. In that case the changing target continually engages people and brings in new people to consider what is happening and why.)

the Guardian. ‘Small Number of Facebook Users Responsible for Most Covid Vaccine Skepticism – Report’, 16 March 2021. http://www.theguardian.com/technology/2021/mar/15/facebook-study-covid-vaccine-skepticism.


A Wall Street Journal experiment to see a liberal version and a conservative version of Facebook side by side.

But anything that reduced engagement, even for reasons such as not exacerbating someone’s depression, led to a lot of hemming and hawing among leadership. With their performance reviews and salaries tied to the successful completion of projects, employees quickly learned to drop those that received pushback and continue working on those dictated from the top down.

If the company can't help regulate itself using some sort of moral compass, it's imperative that government or other outside regulators should.

In 2017, Chris Cox, Facebook’s longtime chief product officer, formed a new task force to understand whether maximizing user engagement on Facebook was contributing to political polarization. It found that there was indeed a correlation, and that reducing polarization would mean taking a hit on engagement. In a mid-2018 document reviewed by the Journal, the task force proposed several potential fixes, such as tweaking the recommendation algorithms to suggest a more diverse range of groups for people to join. But it acknowledged that some of the ideas were “antigrowth.” Most of the proposals didn’t move forward, and the task force disbanded. Since then, other employees have corroborated these findings. A former Facebook AI researcher who joined in 2018 says he and his team conducted “study after study” confirming the same basic idea: models that maximize engagement increase polarization. They could easily track how strongly users agreed or disagreed on different issues, what content they liked to engage with, and how their stances changed as a result. Regardless of the issue, the models learned to feed users increasingly extreme viewpoints. “Over time they measurably become more polarized,” he says.

In an internal presentation from that year, reviewed by the Wall Street Journal, a company researcher, Monica Lee, found that Facebook was not only hosting a large number of extremist groups but also promoting them to its users: “64% of all extremist group joins are due to our recommendation tools,” the presentation said, predominantly thanks to the models behind the “Groups You Should Join” and “Discover” features.

“When you’re in the business of maximizing engagement, you’re not interested in truth. You’re not interested in harm, divisiveness, conspiracy. In fact, those are your friends,” says Hany Farid, a professor at the University of California, Berkeley who collaborates with Facebook to understand image- and video-based misinformation on the platform.

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Joan Donovan, PhD</span> in "This is just some of the best back story I’ve ever read. Facebooks web of influence unravels when @_KarenHao pulls the wrong thread. Sike!! (Only the Boston folks will get that.)" / Twitter (<time class='dt-published'>03/14/2021 12:10:09</time>)</cite></small>

This is a fascinating view into algorithmic feeds.

Reminiscent of the Wall Street Journal's Blue Feed, Red Feed: https://graphics.wsj.com/blue-feed-red-feed/

Donie O’Sullivan. ‘So the Video Bannon Streamed Live Saying Dr. Anthony Fauci and Christopher Wray Should Be Beheaded Has Been on Facebook for 10 Hours and Has 200,000 Views. 10 Hours. Remember That next Time Zuckerberg Talks about All the Moderators and A.I. They Have.’ Tweet. @donie (blog), 6 November 2020. https://twitter.com/donie/status/1324524141869965312.

ReconfigBehSci on Twitter. (n.d.). Twitter. Retrieved 3 March 2021, from https://twitter.com/SciBeh/status/1331901596863787008

‘Facebook: The Inside Story’ Offers a Front-Row Seat on Voracious Ambition

Inside the Two Years That Shook Facebook—and the World

Facebook (stylized as facebook) is an American online social media and social networking service based in Menlo Park, California, and a flagship service of the namesake company Facebook, Inc. It was founded by Mark Zuckerberg, along with fellow Harvard College students and roommates Eduardo Saverin, Andrew McCollum, Dustin Moskovitz, and Chris Hughes.

Facebook is based in California and was founded by Mark Zuckerberg, Eduardo Saverin, Andrew McCollum, Dustin Moskovitz and Chris Hughes.

Anderson, Ian, and Wendy Wood. ‘Habits and the Electronic Herd: The Psychology behind Social Media’s Successes and Failures’. PsyArXiv, 23 November 2020. https://doi.org/10.31234/osf.io/p2yb7.