UK Information Commissioner announces formal investigation of Grok

- Last 7 days

-

-

SpaceX 'bought' xAI for 250 billion USD. xAI previously 'bought' Twitter in March 2025. SpaceX is expected to go public this or next year. Iow, Musk is combining everything to a) inflate overall value b) externalise all risks to investors c) get his biggest payday yet

Grok will be live tweeting nudified astro-pics from every launch and starlink satellite burning up in the atmosphere

-

-

-

French justice dept searching the X offices in Paris, wrt purposefully eroding democratic processes by, among other things, amplifying hate speech.

-

- Jan 2026

-

ec.europa.eu

-

If proven, these failures would constitute infringements of Articles 34(1) and (2), 35(1) and 42(2) of the DSA.

The investigation concerns Article 34(1) and 34(2), 35(1) and 42(2).

-

The Commission has extended its ongoing formal proceedings opened against X in December 2023 to establish whether X has properly assessed and mitigated all systemic risks, as defined in the DSA, associated with its recommender systems, including the impact of its recently announced switch to a Grok-based recommender system.

The existing investigation of X under the DSA wrt recommender systems is extended in scope to include the recommender functions that Grok is announced to provide

-

The new investigation will assess whether the company properly assessed and mitigated risks associated with the deployment of Grok's functionalities into X in the EU. This includes risks related to the dissemination of illegal content in the EU, such as manipulated sexually explicit images, including content that may amount to child sexual abuse material.

A new investigation under the DSA wrt Grok and the production/dissemination of illegal incl sexualised imagery and CSAM

-

EC press release wrt investigations into X and Grok under the DSA.

-

-

www.euronews.com

-

EC opens DSA investigation of X over Grok

-

-

-

Dutch MPs still on X (90 out of 150 as of #2026/01/21); email addresses are provided to ask them to delete their accounts


-

-

leavex.eu

-

LeaveX tracks politicians who still have an account on X

-

-

www.theguardian.com

-

Musk backs down on Grok. By the looks of it only in the UK (or the article is only UK-focused). However, the same applies to the EU too wrt the legal issues of Grok as it is. Ofcom says that despite this change, investigations will continue

-

-

leavex.eu

-

A budding list of politicians across Europe who still have a twitter account, despite it being a cesspool. I wonder if the old Politwoops lists might be useful here (despite being outdated by several elections since 2019)

-

-

www.musicbusinessworldwide.com

-

One extractionist behemoth sues another. X sues music industry over licensing deal that is common with other bigtech platforms

-

-

ibestuur.nl

-

iBestuur says X is on the path to being banned in the EU bc of Grok. Making and distributing CSAM and AI-created explicit images of people are all criminal offences across the EU, next to not being compliant with GDPR, DSA and soon the AI reg. A similar app has been shut down in Italy on GDPR grounds.

-

-

connectedplaces.online

-

By [[Laurens Hof p]] X is not a platform problem but a power problem. Still need to read what exactly he means. Imo any platform that takes the type of decisions X does is no longer a platform in the (US) legal sense, and is therefore also responsible for the content and thus liable.

-

-

doublepulsar.com

-

On Masto this author mentioned he had reason to believe the UK government would ban X/Grok within 2 weeks. I don't see a particular direct legal path for that, other than CSAM spreading as platform which is a criminal offence.

-

-

digitalrechte.de

-

And the German federal government should finally say goodbye to X and thereby strip Elon Musk's platform of its relevance.

yes, there is no explainable position for public entities that still have accounts on Twitter.

-

So if Grok generates images and publishes them on X without labelling them as deepfakes, that violates EU law, at least from August 2026, when the relevant provisions become applicable.

The relevant parts of the AI reg wrt fakes will be applicable in #2026/08

-

Article by Markus Beckedahl et al on the same Grok situation. Here the AI Act does get a mention, and some lines to GDPR and copyright laws too.

-

-

digitalrechte.de

-

Clear headed explanation of DSA wrt Grok and X. Otoh in the case of Grok, I think the AI Act has a role to play, which is directly and more immediately connected to market access of Grok. It only takes into account the DSA, and the AIR isn't fully applicable yet, but still I think the entire framework needs to be taken into account. Incl the GDPR.

-

-

www.volkskrant.nl

-

It is utterly incomprehensible and irresponsible that X appears to be the de facto communication channel of our government. Anyone who wants to read, for example, minister David van Weel's messages about Venezuela can find nothing about it on the official website of the Ministry of Foreign Affairs, but can on X

This is a key issue. Use can no longer be justified. Cf. [[Een goed gesprek over digitale soevereiniteit in de gemeente]] Amersfoort

-

As if there are no alternatives and one truly cannot do without: the platform is completely dominated by the far right and has a market share of only 4 percent in Europe.

Pretending that there's no alternative. Yes, bc staying isn't painful enough still. Although this new little crisis may provide a trigger. Says 4% usage of Twitter in Europe. Source?

-

Despite the ongoing misconduct and the open digital declaration of war on Europe, our heads of government, politicians and journalists stubbornly keep posting on X. French president Macron even had the nerve to post his condemnation of the entry ban for Thierry Breton and his four colleagues on… X.

The cognitive dissonance of bemoaning the evils of Twitter on the very same platform.

-

Opinion piece, arguing that government presence on X really can no longer be justified. Good. Because that has been true for a very long time.

-

-

-

The Financial Times calling X the deepfake porn site previously known as Twitter.

-

- Dec 2025

-

x.com

-

Definitely true

-

-

www.ctol.digital

-

The EU had just levied a €120 million fine on X for DSA violations—the first major enforcement action under rules requiring platforms to moderate illegal content

Many other fines have been levied (all without making any dent in the behaviour fined, though). The €120M one for Twitter was the first under the DSA illegal content rules (which don't specify what content is illegal, but require mechanisms for moderating it).

-

- Nov 2025

-

www.youtube.com

-

the oversimplification of contemporary language

for - language - twitter - oversimplification

-

Twitter you give you give people a 280 character limit and and um you know suddenly things people can't think in entire paragraphs anymore

for - language - twitter - can't think in paragraphs anymore

-

- Aug 2025

-

help.x.com

-

Support via Direct Message is only available to X Premium subscribers.

This is apparently just a bot that directs you to the same Help Center as everyone else.


-

- Jan 2025

-

www.linkedin.com

-

Holy Moly. Zuckerberg goes all out Elon, joins hands with Trump and Meta content is heading to pretty much ‘anything goes’

for - post - LinkedIn - misinformation - Meta joins Elon Musk and Twitter no moderation - 2025, Jan 8

-

- Nov 2024

-

www.youtube.com

-

for - misinformation - twitter - how the platform amplifies misinformation

-

-

www.youtube.com

-

torproject.org has existed for 22 years now, enabling anonymous communication. normies who are still using the clearnet to speak truth deserve to get fucked.


-

-

www.theatlantic.com

-

X Is a White-Supremacist Site by [[Charlie Warzel]]

-

The justifications—the lure of the community, the (now-limited) ability to bear witness to news in real time, and of the reach of one’s audience of followers—feel particularly weak today. X’s cultural impact is still real, but its promotional use is nonexistent. (A recent post linking to a story of mine generated 289,000 impressions and 12,900 interactions, but only 948 link clicks—a click rate of roughly 0.00328027682 percent.) NPR, which left the platform in April 2023, reported almost negligible declines in traffic referrals after abandoning the site.
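A quick sanity check on the click rate quoted above, as a minimal sketch. It assumes the rate means link clicks divided by impressions; the helper name `clickRatePercent` is made up for illustration and does not come from the article.

```js
// Hypothetical helper: link clicks as a percentage of impressions.
function clickRatePercent(clicks, impressions) {
  return (clicks / impressions) * 100;
}

// Figures taken from the quoted passage: 948 link clicks on 289,000 impressions.
const rate = clickRatePercent(948, 289000);
console.log(rate.toFixed(3) + " percent");
```

Under that assumption the rate comes out at roughly 0.328 percent. The figure in the quote, 0.00328027682, matches the plain fraction 948 / 289,000 rather than a percentage. Either way the point of the passage holds: very few impressions turn into clicks.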

-

In its last report before Musk’s acquisition, in just the second half of 2021, Twitter suspended about 105,000 of the more than 5 million accounts reported for hateful conduct. In the first half of 2024, according to X, the social network received more than 66 million hateful-conduct reports, but suspended just 2,361 accounts. It’s not a perfect comparison, as the way X reports and analyzes data has changed under Musk, but the company is clearly taking action far less frequently.
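To put the two reporting periods side by side, here is a rough sketch of the suspension rates implied by the quoted numbers. The helper name `suspensionRatePercent` is hypothetical. The inputs are the lower bounds from the passage ("more than 5 million" reported accounts, "more than 66 million" reports), so both results are upper estimates. The units also differ between the periods (accounts reported versus reports received), which is part of why the passage calls the comparison imperfect.

```js
// Hypothetical helper: suspensions as a percentage of reports (or reported accounts).
function suspensionRatePercent(suspended, reported) {
  return (suspended / reported) * 100;
}

// Second half of 2021: about 105,000 suspensions, more than 5 million accounts reported.
const before = suspensionRatePercent(105000, 5000000);

// First half of 2024: 2,361 suspensions, more than 66 million reports.
const after = suspensionRatePercent(2361, 66000000);

// How many times lower the later rate is, under these assumptions.
const ratio = before / after;

console.log(before.toFixed(2) + " percent vs " + after.toFixed(4) + " percent");
console.log("ratio: about " + Math.round(ratio));
```

On these figures the rate drops from about 2.1 percent to about 0.0036 percent, a difference of several hundred times.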

-

X is no longer a social-media site with a white-supremacy problem, but a white-supremacist site with a social-media problem.

-

- Oct 2024

-

www.theatlantic.com

-

en.wikipedia.org

-

x.com

-

for - future annotation - Twitter post - AI - collective democratic - Habermas Machine - Michiel Bakker

-

-

politicseverywherespace.quora.com

-

Why is the #HaryanaElectionResult trending on Twitter?

The hashtag #HaryanaElectionResult is trending on Twitter due to the recent assembly elections in Haryana, which have garnered significant public interest and engagement. Here are some key reasons for the trend:

-

- Sep 2024

-

github.com

-

Twitter is gone anyway, its public APIs have shut down

Twitter was a key resource for wordfreq for the colloquial use of words. No longer, as the API shut down and the population of X is skewed to hatemongering in a way that makes it lose utility as a data source.

-

-

www.pewresearch.org

-

tweets

for stats - digital decay - twitter - 20% of tweets are no longer publicly visible one month later

-

- Aug 2024

-

feministai.pubpub.org

-

AI and Gender Equality on Twitter

there are movements that address gender equality issues, which oppose Thai society’s patriarchal culture and patriarchal bias. These include attacking sexual harassment, allowing same-sex marriage, drafting legislation for the protection of people working in the sex industry, and promoting the availability of free sanitary napkins for women.

-

-

github.com

-

Take ownership of your Twitter data and get your tweets back

-

-

x.com

-

Look at these guys. One works 16 hours a day. One works 4 hours a week. 2 billionaires. 1 mind-blowing lesson about success: (I can't believe no one has connected the dots)

Interesting thread about Elon Musk & Naval Ravikant. Need to read more in-depth later

-

-

x.com

-

Thread of cool maps you've (probably) never seen before 1. All roads lead to Rome

Very interesting Twitter thread

-

- Jul 2024

-

x.com

-

Heiress to one of the world’s most powerful families. Her grandfather cut her out of the $15.4 BILLION family fortune after her scandal. But she fooled the world with her “dumb blond” persona and built a $300 MILLION business portfolio. This is the crazy story of Paris Hilton:

Interesting thread about Paris Hilton.

Main takeaway: Don't be quick to judge. Only form an opinion based on education; thorough research, evidence-based. If you don't want to invest the effort, then don't form an opinion. Simple as that.

Similar to "Patience" by Nas & Damian Marley.

Also Charlie Munger: "I never allow myself to have [express] an opinion about anything that I don't know the opponent side's argument better than they do."

-

- May 2024

-

-

daniel waldman (@the_waldman) | @bleacherreport. go bears | San Francisco, CA | br.app.link/Get-the-BR-app | Joined September 2013 | 665 Following | 505 Followers

Daniel Waldman, Bleacher Report Twitter Profile


-

-

-

Bassam Haddad (@4Bassam) | Founding Director of MidEast & Islamic Studies Program, George Mason University, Executive Director of http://ArabStudiesInstitute.org. Satire warning! | jadaliyya.com | Joined April 2009

Bassam Haddad Twitter profile; Arab Studies Institute Executive Director; Jadaliyya

-

-

-

Maura Finkelstein (@Dr_mauraf) | Writer. Ethnographer. Professor. Author of The Archive of Loss: Lively Ruination in Mill Land Mumbai (Duke UP 2019) rep’d by @a_la_ash she/her/Free Palestine | Philadelphia, PA | maurafinkelstein.com | Joined February 2017

Maura Finkelstein Twitter bio


-

-

twitter.com

-

Alex Sarabia (@SarabiaTX) | Communications Director for @SenWarren Previously @JoaquinCastrotx @JulianCastro #GoSpursGo he/him/él

Alex Sarabia Twitter profile. Communications Director for Senator Elizabeth Warren; previously worked for Joaquin Castro and Julian Castro.

-

- Feb 2024

-

-

https://github.com/travisbrown/cancel-culture

Cancel culture, tool for fighting abuse on Twitter, which can also be used to archive one's Tweets.

Example: An archive of a user's Tweets: https://gist.github.com/rondy/e6fc62e6968f39428df5eb1746f0d308

-

-

blogs.lse.ac.uk

-

Scholars have taken keen advantage of these social media norms.

Sadly, Twitter is not what it once was...

-

-

twitter.com

-

Each day on twitter there is one main character. The goal is to never be it

— maple cocaine (@maplecocaine) January 3, 2019

-

- Jan 2024

-

www.dailydot.com

-

From a branding perspective, it’s a bizarre and self-sabotaging move. Twitter is an established, internationally recognizable name. It’s cited in untold numbers of books, broadcasts, TV shows and news articles. Every internet-literate person knows what a tweet is. Needless to say, it will be hard to persuade regular people to refer to X as “X” instead of good ol’ Twitter. Plus, a single letter is difficult to google. These are just some of the many, many reasons why Twitter/X users are dunking on Musk’s new rebrand.

-

-

dougbelshaw.com

-

Venkatesh Rao thinks that the Nazi bar analogy is “an example of a bad metaphor contagion effect” and points to a 2010 post of his about warren vs plaza architectures. He believes that Twitter, for example, is a plaza, whereas Substack is a warren: A warren is a social environment where no participant can see beyond their little corner of a larger maze. Warrens emerge through people personalizing and customizing their individual environments with some degree of emergent collaboration. A plaza is an environment where you can easily get to a global/big picture view of the whole thing. Plazas are created by central planners who believe they know what’s best for everyone.

-

- Nov 2023

-

twitter.com

-

The work of Don Pablo González Casanova is still waiting to be discovered...

Raul Romero (@RaulRomero_mx, 24 November 2023) mentions on Twitter a somewhat obscure work by Pablo González Casanova: "Las minorías étnicas en América Latina: del subdesarrollo colonial al socialismo".

I found that it is a text published in 1979 in:

Desarrollo Indoamericano, núm. 47, Bogota, febrero, pp. 47-56.

And at least that first paragraph is the same one that later appears in a work titled "Indios y negros en América Latina", published the same year by UNAM (Cuadernos de Cultura Latinoamericana 97):

https://web.archive.org/web/20191108135255/http://ru.ffyl.unam.mx/handle/10391/3041

accessed:: 2023-11-24 23:59

-

-

reactome.org

- Oct 2023

-

twitter.com (Twitter)

-

Wikipedia is inherently hierarchical and therefore subject to the biases of higher ranking editors, independent of their merits.

True, but it should never be taken as the authoritative voice and there are ways to annotate on the internet :)


-

-

newrepublic.com

-

Perhaps it’s not a force for good at all. Alex Shephard @alex_shephard

The irony of still signing with your Twitter handle a piece about the demise of it. The entire thing in a nutshell. I have stopped mentioning my single remaining Twitter account as contact details on anything. My site, mail and Mastodon in that order I always mention.

-

It’s likely that some facsimile of Twitter will exist, far into the future. But a seismic shift in how the platform is perceived has occurred. If it isn’t good for breaking news, then what good is it? Perhaps it’s not a force for good at all.

This is the cycle that made Twitter. Real time developments, and another was the interaction/access dynamic between politicians and journalists. A very visible sign of that cycle breaking, the utility in a developing crisis/event nullified, is I think a good canary. Because in practice the amount of non-human content, trollfarming on top of the actually low user numbers mean that its heyday reputation was already no longer rightfully worn. I wonder how long the public perception of that cycle existing will lag behind the actuality of it no longer being there.

-

https://newrepublic.com/article/176138/twitter-went-evil-musk-misinformation

-

- Aug 2023

-

erinkissane.com

-

One of the things I loved most about Twitter was the way it could throw things in front of me that I never would have even thought to go look for on my own.

I'm afraid this is one of those sentiments that should absolutely be tossed in the "because of lack of user control" category

-

- Jul 2023

-

www.boston.gov

-

dishes at restaurants so their kids

annotation


-

-

www.theverge.com

-

If one "tweets" on Twitter, will one then be "eX-iting" posts on X? I think it's a perfect time to eXit the entire platform. #IndieWeb

https://www.theverge.com/2023/7/23/23804629/twitters-rebrand-to-x-may-actually-be-happening-soon

-

-

gist.github.com

-

```js
/*
 * twitter-entities.js
 * This function converts a tweet with "entity" metadata
 * from plain text to linkified HTML.
 *
 * See the documentation here: http://dev.twitter.com/pages/tweet_entities
 * Basically, add ?include_entities=true to your timeline call
 *
 * Copyright 2010, Wade Simmons
 * Licensed under the MIT license
 * http://wades.im/mons
 *
 * Requires jQuery
 */

// Escape text for use as HTML content by round-tripping it through a detached <div>.
function escapeHTML(text) {
    return $('<div/>').text(text).html()
}

// Return the tweet text as HTML, with URLs, hashtags and mentions turned into links.
function linkify_entities(tweet) {
    if (!(tweet.entities)) {
        return escapeHTML(tweet.text)
    }

    // This is very naive, should find a better way to parse this
    // Maps an entity's start index to [end index, function that renders the link].
    var index_map = {}

    $.each(tweet.entities.urls, function(i,entry) {
        index_map[entry.indices[0]] = [entry.indices[1], function(text) {return "<a href='"+escapeHTML(entry.url)+"'>"+escapeHTML(text)+"</a>"}]
    })

    $.each(tweet.entities.hashtags, function(i,entry) {
        // encodeURIComponent replaces the deprecated escape() used in the original
        index_map[entry.indices[0]] = [entry.indices[1], function(text) {return "<a href='http://twitter.com/search?q="+encodeURIComponent("#"+entry.text)+"'>"+escapeHTML(text)+"</a>"}]
    })

    $.each(tweet.entities.user_mentions, function(i,entry) {
        index_map[entry.indices[0]] = [entry.indices[1], function(text) {return "<a title='"+escapeHTML(entry.name)+"' href='http://twitter.com/"+escapeHTML(entry.screen_name)+"'>"+escapeHTML(text)+"</a>"}]
    })

    var result = ""
    var last_i = 0
    var i = 0

    // iterate through the string looking for matches in the index_map
    for (i=0; i < tweet.text.length; ++i) {
        var ind = index_map[i]
        if (ind) {
            var end = ind[0]
            var func = ind[1]
            // flush the plain text that sits before this entity
            if (i > last_i) {
                result += escapeHTML(tweet.text.substring(last_i, i))
            }
            result += func(tweet.text.substring(i, end))
            i = end - 1
            last_i = end
        }
    }

    // flush any plain text after the last entity
    if (i > last_i) {
        result += escapeHTML(tweet.text.substring(last_i, i))
    }

    return result
}
```


-

-

news.ycombinator.com


-

-

news.ycombinator.com

-

- May 2023

-

www.nytimes.com

-

Linda Yaccarino Is Twitter’s New CEO, Elon Musk Confirms by Tiffany Hsu, Sapna Maheshwari, Benjamin Mullin, Ryan Mac

-

-

www.wordnik.com

-

A script searches Twitter for "X is my new favorite word" and adds it to this list.

https://www.wordnik.com/lists/twitter-favorites

See also: http://laivakoira.typepad.com/blog/2013/10/twitters-new-favourite-words.html

Follow along: https://twitter.com/favibot

See word clouds: http://www.flickr.com/photos/hugovk/sets/72157636928894765/

See the script: https://github.com/hugovk/word-tools/blob/master/new_favourite_words.py

Inspired by: http://www.wordnik.com/lists/outcasts

-

- Apr 2023

-

andy-bell.co.uk

-

I think I’m not alone that Mastodon is giving me the ick by Andy Bell

Read at Fri 4/7/2023 8:36 AM

-

-

on.substack.com

-

In Notes, writers will be able to post short-form content and share ideas with each other and their readers. Like our Recommendations feature, Notes is designed to drive discovery across Substack. But while Recommendations lets writers promote publications, Notes will give them the ability to recommend almost anything—including posts, quotes, comments, images, and links.

Substack slowly adding features and functionality to make them a full stack blogging/social platform... first long form, then short note features...

Also pushing in on Twitter's lunch as Twitter is having issues.

-

-

communitywiki.org

- Mar 2023

-

www.jeremycherfas.net

-

How to promote my podcast by Jeremy Cherfas


-

-

-

Twitter banning him for saying women should "bear responsibility" for being sexually assaulted. He has since been reinstated.

Twitter had banned and then later reinstated Andrew Tate for saying women should "bear responsibility" for being sexually assaulted.

-

-

-

The Satelite Combination Card Index Cabinet and Telephone Stand

A fascinating combination of office furniture types in 1906!

The Adjustable Table Company of Grand Rapids, Michigan manufactured a combination table for both telephones and index cards. It was designed as an accessory to be stood next to one's desk to accommodate a telephone at the beginning of the telephone era and also served as storage for one's card index.

Given the broad business-based use of the card index at the time and the newness of the telephone, this piece of furniture likely was not designed as an early proto-rolodex, though it certainly could have been (and very well may have likely been) used as such in practice.

I totally want one of these as a side table for my couch/reading chair for both storing index cards and as a temporary writing surface while reading!

This could also be an early precursor to Twitter!

Folks have certainly mentioned other incarnations:

- annotations in books (person to self),
- postcards (person to person),
- the telegraph (person to person and possibly to others by personal communication or newspaper distribution)

but this is the first version of short note user interface for both creation, storage, and distribution by means of electrical transmission (via telephone) with a bigger network (still person to person, but with potential for easy/cheap distribution to more than a single person)

Tags

- Grand Rapids Michigan

- audience

- annotations

- card index filing cabinets

- Adjustable Table Company

- postcards

- user interface

- evolution of technology

- office furniture

- technology

- intellectual history

- zettelkasten boxes

- card index for business

- telephones

- satelite stands

- rolodexes

- telegraph

Annotators

-

- Feb 2023

-

mailbrew.com


-

-

bird.makeup

-

-

-

Twitter Notifications Keep Breaking in Wake of Elon Musk's Mass Layoffs by Matt Novak

Read Wed 2022-12-07 6:55 AM

-

- Jan 2023

-

twitterisgoinggreat.com

-

whalebird.social

-

https://whalebird.social/en/desktop/contents

Whalebird is a desktop client for Mastodon, Pleroma, and Misskey

-

-

-

https://tressel.xyz/

Tressel, a paid tool roughly like Readwise.io

-

-

paulstamatiou.com (Mastodon)

-

www.gephebase.org (Gefebase)

-

https://github.com/lmichan/BioDBS

nar:https://doi.org/10.1093/nar/gkz1161

tw:https://twitter.com/gephebase

relacion_genotipo_fenotipo

https://mobile.twitter.com/i/lists/1475461947860688896


-

-

snarfed.org

-

treeverse.app

-

https://treeverse.app/

Treeverse is a tool for visualizing and navigating Twitter conversation threads. It is available as a browser extension for Chrome and Firefox.

-

-

500ish.com

-

Mastodon Brought a Protocol to a Product Fight

https://500ish.com/mastodon-brought-a-protocol-to-a-product-fight-ba9fda767c6a

-

- Dec 2022

-

followgraph.vercel.app

-

https://followgraph.vercel.app/

-

-

twitter.com


-

-

ralphm.net

-

Jaiku-Twitter XMPP interoperability circa 2008


-

-

-

Nature 613, 19-21 (2023)

-

-

dl.acm.org

-

We found that while some participants were aware of bots’ primary characteristics, others provided abstract descriptions or confused bots with other phenomena. Participants also struggled to classify accounts correctly (e.g., misclassifying > 50% of accounts) and were more likely to misclassify bots than non-bots. Furthermore, we observed that perceptions of bots had a significant effect on participants’ classification accuracy. For example, participants with abstract perceptions of bots were more likely to misclassify. Informed by our findings, we discuss directions for developing user-centered interventions against bots.


-

-

link.springer.com

-

We analyzed URLs cited in Twitter messages before and after the temporary interruption of the vaccine development on September 9, 2020 to investigate the presence of low credibility and malicious information. We show that the halt of the AstraZeneca clinical trials prompted tweets that cast doubt, fear and vaccine opposition. We discovered a strong presence of URLs from low credibility or malicious websites, as classified by independent fact-checking organizations or identified by web hosting infrastructure features. Moreover, we identified what appears to be coordinated operations to artificially promote some of these URLs hosted on malicious websites.

-

-

www.sciencedirect.com

-

When public health emergencies break out, social bots are often seen as the disseminator of misleading information and the instigator of public sentiment (Broniatowski et al., 2018; Shi et al., 2020). Given this research status, this study attempts to explore how social bots influence information diffusion and emotional contagion in social networks.

-

-

www.nature.com

-

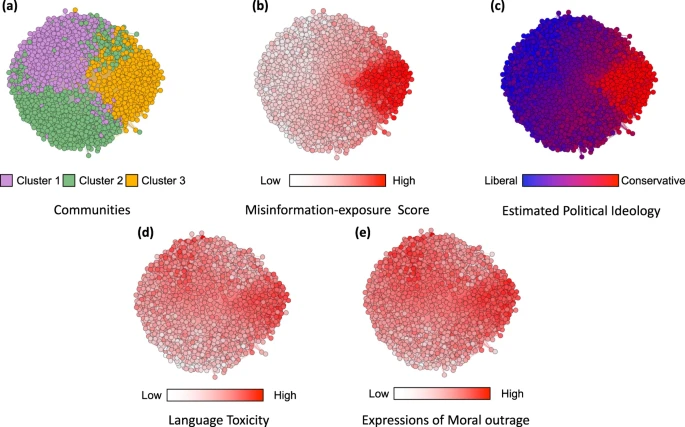

Furthermore, our results add to the growing body of literature documenting—at least at this historical moment—the link between extreme right-wing ideology and misinformation [8,14,24] (although, of course, factors other than ideology are also associated with misinformation sharing, such as polarization [25] and inattention [17,37]).

Misinformation exposure and extreme right-wing ideology appear associated in this report. Others find that it is partisanship that predicts susceptibility.

-

And finally, at the individual level, we found that estimated ideological extremity was more strongly associated with following elites who made more false or inaccurate statements among users estimated to be conservatives compared to users estimated to be liberals. These results on political asymmetries are aligned with prior work on news-based misinformation sharing

This suggests the misinformation sharing elites may influence whether followers become more extreme. There is little incentive not to stoke outrage as it improves engagement.

-

We found that users who followed elites who made more false or inaccurate statements themselves shared news from lower-quality news outlets (as judged by both fact-checkers and politically-balanced crowds of laypeople), used more toxic language, and expressed more moral outrage.

Elite mis and disinformation sharers have a negative effect on followers.

-

In the co-share network, a cluster of websites shared more by conservatives is also shared more by users with higher misinformation exposure scores.

Nodes represent website domains shared by at least 20 users in our dataset and edges are weighted based on common users who shared them. a Separate colors represent different clusters of websites determined using community-detection algorithms [29]. b The intensity of the color of each node shows the average misinformation-exposure score of users who shared the website domain (darker = higher PolitiFact score). c Nodes’ color represents the average estimated ideology of the users who shared the website domain (red: conservative, blue: liberal). d The intensity of the color of each node shows the average use of language toxicity by users who shared the website domain (darker = higher use of toxic language). e The intensity of the color of each node shows the average expression of moral outrage by users who shared the website domain (darker = higher expression of moral outrage). Nodes are positioned using directed-force layout on the weighted network.

-

Aligned with prior work finding that people who identify as conservative consume [15], believe [24], and share more misinformation [8,14,25], we also found a positive correlation between users’ misinformation-exposure scores and the extent to which they are estimated to be conservative ideologically (Fig. 2c; b = 0.747, 95% CI = [0.727,0.767] SE = 0.010, t (4332) = 73.855, p < 0.001), such that users estimated to be more conservative are more likely to follow the Twitter accounts of elites with higher fact-checking falsity scores. Critically, the relationship between misinformation-exposure score and quality of content shared is robust controlling for estimated ideology

-

-

arxiv.org

-

Notice that Twitter’s account purge significantly impacted misinformation spread worldwide: the proportion of low-credible domains in URLs retweeted from U.S. dropped from 14% to 7%. Finally, despite not having a list of low-credible domains in Russian, Russia is central in exporting potential misinformation in the vax rollout period, especially to Latin American countries. In these countries, the proportion of low-credible URLs coming from Russia increased from 1% in vax development to 18% in vax rollout periods (see Figure 8 (b), Appendix).

-

Interestingly, the fraction of low-credible URLs coming from U.S. dropped from 74% in the vax development period to 55% in the vax rollout. This large decrease can be directly ascribed to Twitter’s moderation policy: 46% of cross-border retweets of U.S. users linking to low-credible websites in the vax development period came from accounts that have been suspended following the U.S. Capitol attack (see Figure 8 (a), Appendix).

-

We find that, during the pandemic, no-vax communities became more central in the country-specific debates and their cross-border connections strengthened, revealing a global Twitter anti-vaccination network. U.S. users are central in this network, while Russian users also become net exporters of misinformation during vaccination roll-out. Interestingly, we find that Twitter’s content moderation efforts, and in particular the suspension of users following the January 6th U.S. Capitol attack, had a worldwide impact in reducing misinformation spread about vaccines. These findings may help public health institutions and social media platforms to mitigate the spread of health-related, low-credible information by revealing vulnerable online communities

-

-

arxiv.org arxiv.org

-

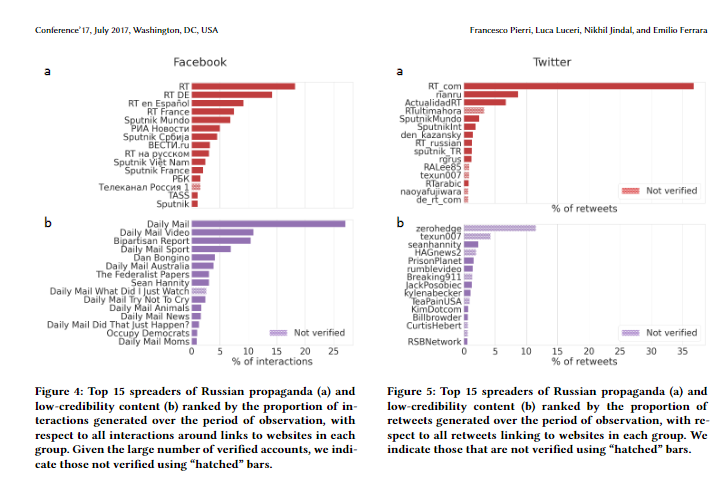

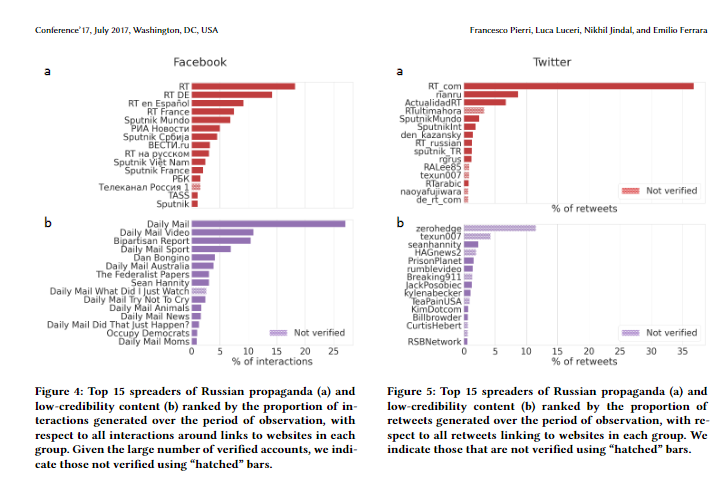

We estimated the contribution of verified accounts to sharing and amplifying links to Russian propaganda and low-credibility sources, noticing that they have a disproportionate role. In particular, superspreaders of Russian propaganda are mostly accounts verified by both Facebook and Twitter, likely due to Russian state-run outlets having associated accounts with verified status. In the case of generic low-credibility sources, a similar result applies to Facebook but not to Twitter, where we also notice a few superspreaders accounts that are not verified by the platform.

-

On Twitter, the picture is very similar in the case of Russian propaganda, where all accounts are verified (with a few exceptions) and mostly associated with news outlets, and generate over 68% of all retweets linking to these websites (see panel a of Figure 4). For what concerns low-credibility news, there are both verified (we can notice the presence of seanhannity) and not verified users, and only a few of them are directly associated with websites (e.g. zerohedge or Breaking911). Here the top 15 accounts generate roughly 30% of all retweets linking to low-credibility websites.

-

Figure 5: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of retweets generated over the period of observation, with respect to all retweets linking to websites in each group. We indicate those that are not verified using “hatched” bars

-

Figure 4: Top 15 spreaders of Russian propaganda (a) and low-credibility content (b) ranked by the proportion of interactions generated over the period of observation, with respect to all interactions around links to websites in each group. Given the large number of verified accounts, we indicate those not verified using “hatched” bars.

-

-

ieeexplore.ieee.org ieeexplore.ieee.org

-

We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.

-

-

www.mdpi.com www.mdpi.com

-

we found that social bots played a bridge role in diffusion in the apparent directional topic like “Wuhan Lab”. Previous research also found that social bots play some intermediary roles between elites and everyday users regarding information flow [43]. In addition, verified Twitter accounts continue to be very influential and receive more retweets, whereas social bots retweet more tweets from other users. Studies have found that verified media accounts remain more central to disseminating information during controversial political events [75]. However, occasionally, even the verified accounts—including those of well-known public figures and elected officials—sent misleading tweets. This inspired us to investigate the validity of tweets from verified accounts in subsequent research. It is also essential to rely solely on science and evidence-based conclusions and avoid opinion-based narratives in a time of geopolitical conflict marked by hidden agendas, disinformation, and manipulation [76].

-

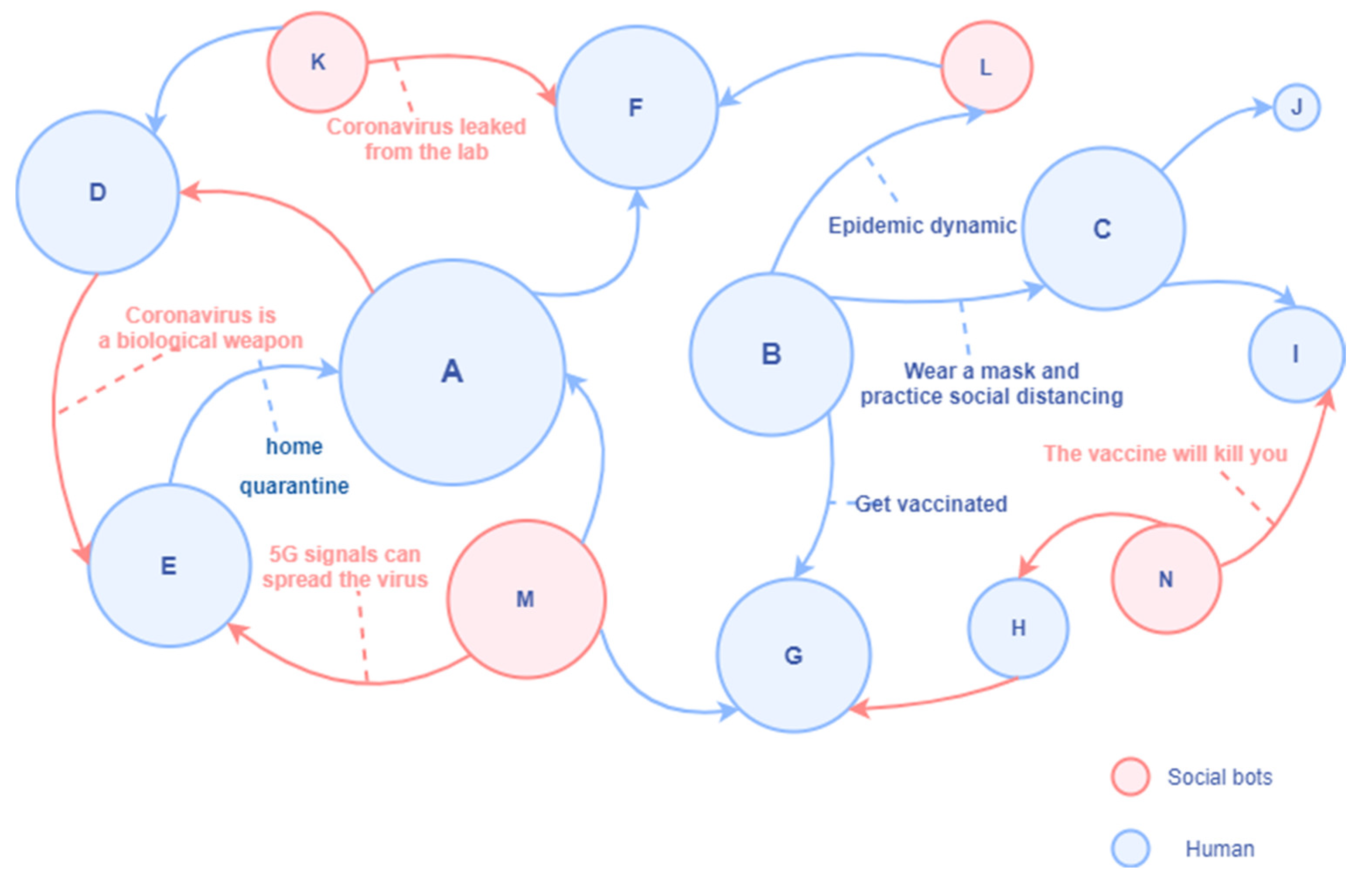

In Figure 6, the node represented by human A is a high-degree centrality account with poor discrimination ability for disinformation and rumors; it is easily affected by misinformation retweeted by social bots. At the same time, it will also refer to the opinions of other persuasive folk opinion leaders in the retweeting process. Human B represents the official institutional account, which has a high in-degree and often pushes the latest news, preventive measures, and suggestions related to COVID-19. Human C represents a human account with high media literacy, which mainly retweets information from information sources with high credibility. It has a solid ability to identify information quality and is not susceptible to the proliferation of social bots. Human D actively creates and spreads rumors and conspiracy theories and only retweets unverified messages that support his views in an attempt to expand the influence. Social bots K, M, and N also spread unverified information (rumors, conspiracy theories, and disinformation) in the communication network without fact-checking. Social bot L may be a social bot of an official agency.

-

We analyzed and visualized Twitter data during the prevalence of the Wuhan lab leak theory and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.

-

-

-

www.cnn.com www.cnn.com

-

deleted its controversial new policy

Thanks to the Internet Archive, we still have a copy: https://web.archive.org/web/20221218173806/https://help.twitter.com/en/rules-and-policies/social-platforms-policy

-

@TwitterSupport

-

-

www.washingtonpost.com www.washingtonpost.com

-

https://www.washingtonpost.com/technology/2022/12/17/how-to-join-mastodon/

Mastodon makes the front section of the Washington Post!? Twitter is in trouble...

-

-

help.archive.org help.archive.org

-

friend.camp friend.camp

-

In advance of deleting my Twitter account, I made this web page that lets you search my tweets, link to an archived version, and read whole threads I wrote. https://tinysubversions.com/twitter-archive/ I will eventually release this as a website I host where you drop your Twitter zip archive in and it spits out the 100% static site you see here. Then you can just upload it somewhere and you have an archive that is also easy to style how you like it.

https://friend.camp/@darius/109521972924049369

-

-

www.techdirt.com www.techdirt.com

-

threadreaderapp.com threadreaderapp.com

-

https://threadreaderapp.com/thread/1603551884748460034.html

Elon Musk / Twitter banning major journalists is not a good look.

-

-

help.getrevue.co help.getrevue.co

-

www.movetodon.org www.movetodon.org

-

What a lovely looking UI.

The data returned will also give one a strong idea of how many of their acquaintances have made the jump as well as how active they may be, particularly for those who moved weeks ago and are still active within the last couple of days. For me the numbers are reasonably large. 860 of 4942 have accounts presently and in scrolling through it appears that 80% or so have been active within a day or so regardless of account age.

-

-

techcrunch.com techcrunch.com

-

Twitter has, like its fellow social media platforms, been working for years to make the process of moderation efficient and systematic enough to function at scale. Not just so the platform isn’t overrun with bots and spam, but in order to comply with legal frameworks like FTC orders and the GDPR.

-

-

www.theatlantic.com www.theatlantic.com

-

The trolling is paramount. When former Facebook CSO and Stanford Internet Observatory leader Alex Stamos asked whether Musk would consider implementing his detailed plan for “a trustworthy, neutral platform for political conversations around the world,” Musk responded, “You operate a propaganda platform.” Musk doesn’t appear to want to substantively engage on policy issues: He wants to be aggrieved.

-

-

www.garbageday.email www.garbageday.email

-

Twitter has never been able to deal with the fact its users both hate using it and also hate each other.

-

The most typical way users encounter trending content is when a massively viral tweet — or subtweets about that tweet or the discourse it created — enters their feed. Then it’s up to them to figure out what kind of account posted the tweet, what kind of accounts are sharing the tweet, and what accounts are saying about the tweet.

-

my best guess is it’s the moderation

-

-

www.washingtonpost.com www.washingtonpost.com

-

The presence of Twitter’s code — known as the Twitter advertising pixel — has grown more troublesome since Elon Musk purchased the platform. That’s because under the terms of Musk’s purchase, large foreign investors were granted special privileges. Anyone who invested $250 million or more is entitled to receive information beyond what lower-level investors can receive. Among the higher-end investors include a Saudi prince’s holding company and a Qatari fund.

Twitter investors may get access to user data

I'm surprised but not surprised that Musk's dealings to get investors in his effort to take Twitter private may include sharing of personal data about users. This article makes it sound almost normal that this kind of information-sharing happens with investors (inclusion of the phrase "information beyond what lower-level investors can receive").

-

-

zephoria.medium.com zephoria.medium.com

-

scripting.com scripting.com

-

Last night I posted a message to both Mastodon and Twitter saying how great M's support for RSS is. Apparently a lot of people on Masto didn't know about it and the response has been resounding. And the numbers are very lopsided. The piece has been "boosted" (the Masto equiv of RT) 1.1K times, yet I only have 3.7K followers there. Meanwhile on Twitter, where I have 69K followers, it has been RTd just 17 times. My feeling was previously that Mastodon was more alive, it's good to have a number to put behind that.

http://scripting.com/2022/12/03.html#a152558

Anecdotal evidence for the slow death of Twitter and higher engagement on Mastodon.
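
The comparison in the quote becomes clearer when the share counts are normalized by follower count. The sketch below is only illustrative: the function name `share_rate` is made up for this note, and the inputs are the rounded figures from the post (1.1K boosts, 3.7K followers, 17 retweets, 69K followers), so the results are approximate.

```python
def share_rate(shares: int, followers: int) -> float:
    """Return shares per follower as a fraction (hypothetical helper)."""
    if followers <= 0:
        raise ValueError("followers must be positive")
    return shares / followers


# Rounded figures taken from the quoted post; exact counts are not given.
mastodon_rate = share_rate(1_100, 3_700)
twitter_rate = share_rate(17, 69_000)

# How many times more often a follower reshared on Mastodon than on Twitter.
ratio = mastodon_rate / twitter_rate

print(f"Mastodon: {mastodon_rate:.1%} of followers boosted")
print(f"Twitter:  {twitter_rate:.3%} of followers retweeted")
print(f"Ratio:    about {ratio:.0f}x")
```

On these rounded numbers, roughly 30% of the Mastodon follower count boosted the post against about 0.025% on Twitter. It is still a single anecdote, and boosts can come from non-followers, so the per-follower rate is a rough indicator at best.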

-

-

quillette.com quillette.com

-

The real question isn’t whether platforms like Twitter and Facebook are public squares (because they aren’t), but whether they should be. Should everyone have a right to access these platforms and speak through them the way we all have a right to stand on a soap box downtown and speak through a megaphone? It’s a more complicated ask than we realize—certainly more complicated than those (including Elon Musk himself) who seem to think merely declaring Twitter a public square is sufficient.

-

This tweet, along with the reinstatement of Donald Trump’s Twitter account, has caused a whirlwind of discussion and debate on the platform—the same arguments about free speech and social media as the “digital public square” that seem to go nowhere, regardless of how often we try. And part of the reason they go nowhere is because the situation is both more simple and more complicated than many of us want to recognize.

-

-

escapingtech.com escapingtech.com

-

What I missed about Mastodon was its very different culture. Ad-driven social media platforms are willing to tolerate monumental volumes of abusive users. They’ve discovered the same thing the Mainstream Media did: negative emotions grip people’s attention harder than positive ones. Hate and fear drives engagement, and engagement drives ad impressions. Mastodon is not an ad-driven platform. There is absolutely zero incentives to let awful people run amok in the name of engagement. The goal of Mastodon is to build a friendly collection of communities, not an attention leeching hate mill. As a result, most Mastodon instance operators have come to a consensus that hate speech shouldn’t be allowed. Already, that sets it far apart from twitter, but wait, there’s more. When it comes to other topics, what is and isn’t allowed is on an instance-by-instance basis, so you can choose your own adventure.

Attention economy

Twitter drivers: Hate/fear → Engagement → Impressions → Advertiser money. Mastodon has no advertising money, so it operates on different drivers: an operator has no incentive to maximize impressions, and therefore no need to fuel hate and fear.

-

-

-

https://www.downes.ca/post/74564

Stephen Downes is doing a great job of regular recaps on the shifts in Twitter/Mastodon/Fediverse lately. I either read or saw all these in the last couple of days myself.

-

-

researchbuzz.me researchbuzz.me

- Nov 2022

-

andy-bell.co.uk andy-bell.co.uk

-

https://andy-bell.co.uk/im-genuinely-enjoying-social-media-again/

-

The TTRG (time to reply guy) was getting so fast, that I can’t actually remember the last time I tweeted something helpful like a design or development tip. I just couldn’t be arsed, knowing some dickhead would be around to waste my time with whataboutisms and “will it scale”?

-

-

www.dwutygodnik.com www.dwutygodnik.com

-

odd.blog odd.blog

-

https://odd.blog/2022/11/06/how-to-add-your-blog-to-mastodon/

-

-

docs.google.com docs.google.com

-

A list of congress members on Mastodon.

-

-

www.washingtonpost.com www.washingtonpost.com

-

https://www.washingtonpost.com/technology/2022/11/27/musk-followers-bernie-cruz/

-

The Post analyzed data from ProPublica’s Represent tool, which tracks congressional Twitter activity.

-

-

-

Literature, philosophy, film, music, culture, politics, history, architecture: join the circus of the arts and humanities! For readers, writers, academics or anyone wanting to follow the conversation.

-

-

hcommons.social hcommons.social

-

hcommons.social is a microblogging network supporting scholars and practitioners across the humanities and around the world.

https://hcommons.social/about

The humanities commons has their own mastodon instance now!

-

-

ooh.directory ooh.directory

-

https://ooh.directory/blog/2022/first-two-days/

-

-

cohost.org cohost.org cohost!

-

-

themarkup.org themarkup.org

-

Davidson: I think the interface on Mastodon makes me behave differently. If I have a funny joke or a really powerful statement and I want lots of people to hear it, then Twitter’s way better for that right now. However, if something really provokes a big conversation, it’s actually fairly challenging to keep up with the conversation on Twitter. I find that when something gets hundreds of thousands of replies, it’s functionally impossible to even read all of them, let alone respond to all of them. My Twitter personality, like a lot of people’s, is more shouting. Whereas on Mastodon, it’s actually much harder to go viral. There’s no algorithm promoting tweets. It’s just the people you follow. This is the order in which they come. It’s not really set up for that kind of, “Oh my god, everybody’s talking about this one post.” It is set up to foster conversation. I have something like 150,000 followers on Twitter, and I have something like 2,500 on Mastodon, but I have way more substantive conversations on Mastodon even though it’s a smaller audience. I think there’s both design choices that lead to this and also just the vibe of the place where even pointed disagreements are somehow more thoughtful and more respectful on Mastodon.

Twitter for Shouting; Mastodon for Conversation

Many, many followers on Twitter make it hard for conversations to happen, as does the algorithm-driven promotion. Fewer followers and an anti-viral UX make for more conversations, even if the reach isn't as far.

-

-

observablehq.com observablehq.com

-

fediverse.space fediverse.space

-

https://fediverse.space/

-

-

twitter.com twitter.com

-

I've only just noticed it now, but there is a "Verified" tab in my Twitter Notifications page to filter out notifications from verified users.

-

-

griefbacon.substack.com griefbacon.substack.com

-

The JFK assassination episode of Mad Men. In one long single shot near the beginning of the episode, a character arrives late to his job and finds the office in disarray, desks empty and scattered with suddenly-abandoned papers, and every phone ringing unanswered. Down the hallway at the end of the room, where a TV is blaring just out of sight, we can make out a rising chatter of worried voices, and someone starting to cry. It is— we suddenly remember— a November morning in 1963. The bustling office has collapsed into one anxious body, huddled together around a TV, ignoring the ringing phones, to share in a collective crisis.

May I just miss the core of this bit entirely and mention coming home to Betty on the couch, letting the kids watch, unsure of what to do.

And the fucking Campbells, dressed up for a wedding in front of the TV, unsure of what to do.

Though, if I might add, comparing Twitter to the abstract of television, itself, would be unfortunate, if unfortunately accurate, considering how much more granular the consumptive controls are to the user. Use Twitter Lists, you godforsaken human beings.

-

-

www.cnn.com www.cnn.com

-

www.techdirt.com www.techdirt.com

-

listfollowers.com listfollowers.com

-

www.technologyreview.com www.technologyreview.com

-

Part of what makes Twitter’s potential collapse uniquely challenging is that the “digital public square” has been built on the servers of a private company, says O’Connor’s colleague Elise Thomas, senior OSINT analyst with the ISD. It’s a problem we’ll have to deal with many times over the coming decades, she says: “This is perhaps the first really big test of that.”

Public Square content on the servers of a private company

-

-

nnw.ranchero.com nnw.ranchero.com

-

https://nnw.ranchero.com/2022/11/11/on-following-twitter.html

-

it helps people find their off-ramp from Twitter.

Being able to read others' tweets in a feed reader provides people the ability and freedom to untether themselves from the tyranny of Twitter.

-

-

simonwillison.net simonwillison.net

-

Mastodon is just blogs and Google Reader, skinned to look like Twitter.

And this, in part, is just what makes social readers so valuable: a tight(er) integration of a reading and conversational interface.

https://simonwillison.net/2022/Nov/8/mastodon-is-just-blogs/

-

-

tinysubversions.com tinysubversions.com Spooler

-

A tool that turns Twitter threads into blog posts, by Darius Kazemi.

https://tinysubversions.com/spooler/

<small><cite class='h-cite via'>ᔥ <span class='p-author h-card'>Darius Kazemi</span> in Darius Kazemi: "thread unroller apps" - Friend Camp (<time class='dt-published'>11/16/2022 08:27:44</time>)</cite></small>

-

-

github.com github.com

-

Extend promnesia to enrich my tweets.

-

-

-

Another big, big difference with Mastodon is that it has no algorithmic ranking of posts by popularity, virality, or content. Twitter’s algorithm creates a rich-get-richer effect: Once a tweet goes slightly viral, the algorithm picks up on that, pushes it more prominently into users’ feeds, and bingo: It’s a rogue wave. On Mastodon, in contrast, posts arrive in reverse chronological order. That’s it. If you’re not looking at your feed when a post slides by? You’ll miss it.

No algorithmic ranking on Mastodon

To make the site sticky and sell ads, Twitter used its algorithmic ranking to find and amplify viral content.
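
The difference between the two feed orderings can be shown with a toy example. This is a minimal sketch under stated assumptions: the `Post` type, its fields, and both feed functions are hypothetical, and sorting by share count is only a stand-in for "algorithmic ranking", not a description of how Twitter's ranking actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    author: str
    posted_at: int  # hypothetical timestamp, larger means more recent
    shares: int     # hypothetical engagement count


def chronological_feed(posts: list[Post]) -> list[Post]:
    """Newest first, ignoring popularity (the Mastodon-style ordering)."""
    return sorted(posts, key=lambda p: p.posted_at, reverse=True)


def engagement_feed(posts: list[Post]) -> list[Post]:
    """Most-shared first: a toy stand-in for engagement-based ranking."""
    return sorted(posts, key=lambda p: p.shares, reverse=True)


posts = [
    Post("a", posted_at=100, shares=5),
    Post("b", posted_at=200, shares=900),
    Post("c", posted_at=300, shares=2),
]

chrono = [p.author for p in chronological_feed(posts)]
ranked = [p.author for p in engagement_feed(posts)]
print(chrono)
print(ranked)
```

In the chronological feed the widely shared post sits wherever its timestamp puts it. In the engagement-sorted feed it rises to the top, where it is likely to collect still more shares, which is the rich-get-richer effect the quote describes.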

-

-

fediverse.observer fediverse.observer

-

https://fediverse.observer/

Suggested Fediverse servers close to you.

-

-

www.hughrundle.net www.hughrundle.net

-

It's not entirely the Twitter people's fault. They've been taught to behave in certain ways. To chase likes and retweets/boosts. To promote themselves. To perform.

Twitter trains users to behave a certain way. It rewards a specific type of performance. In contrast, until now at least, Mastodon is focused on conversation (and the functionality of the apps reinforces that, with how boosts and likes work differently).

-

It is the very tools and settings that provide so much more agency to users that pundits claim make Mastodon "too complicated".

Indeed.

-

I hadn't fully understood — really appreciated — how much corporate publishing systems steer people's behaviour until this week. Twitter encourages a very extractive attitude from everyone it touches.

This stands out indeed.

-

Early this week, I realised that some people had cross-posted my Mastodon post into Twitter. Someone else had posted a screenshot of it on Twitter. Nobody thought to ask if I wanted that.

Author expects to be asked for consent before their words are posted in another web venue, here crossposting to Twitter. I don't think that's a priori a reasonable expectation. The entire web is a public sphere, and expressions in it are public expressions. Commenting on them or extending them is annotation, and that's fair game imo. Problems arise from how that annotation is used/positioned. If it's part of the conversation with the author and others, that's fine even if unwelcome, though tone matters (e.g. forcefully butting in). If it is quoting an author and commenting as performance to one's own audience, then the original author becomes an object, a prop in that performance. That is problematic. I can't judge (no links) here which of the two it is.

-

-

threadreaderapp.com threadreaderapp.com

-

arstechnica.com arstechnica.com

-

-

“If you have a compelling product, people will buy it,” Musk told staff. “That has been my experience at SpaceX and Tesla.”

Alternately, if you have toxic leadership, employees will leave and the company will collapse.

-

-

threadreaderapp.com threadreaderapp.com

-

https://threadreaderapp.com/thread/1590111416014409728.html

I'm slowly getting the feeling that Musk is a system one thinker who relies on others to do his system two thinking.

-

-

fedified.com fedified.com

-

meh... This looks dreadful...

Why not just use the built in rel-me verification available in Twitter directly with respect to individual websites?

-

-

twitter.com twitter.com

-

www.biodiversitylibrary.org www.biodiversitylibrary.org

-

https://github.com/lmichan/BioDBS

tw:@BioDivLibrary

https://twitter.com/BioDivLibrary

wd:https://www.wikidata.org/wiki/Q172266

tw:https://twitter.com/BioDivLibrary

biodiversidad

RRID:SCR_008969

-

-

docs.google.com docs.google.com

-

EduTooters, er, Educators, on Mastodon

-

-

docs.google.com docs.google.com

-

docs.google.com docs.google.com

-

docs.google.com docs.google.com

-

mastodon.help mastodon.help

-

https://mastodon.help/

-

-

instances.social instances.social

-

https://instances.social/

A tool for helping people choose an instance.

-

-

tracydurnell.com tracydurnell.com

-

For the type of services I offer and my target audience, Twitter is an unlikely place for me to connect with potential clients

I've seen it mostly as a place for finding professional peers, like my blog did. But that is the 2006 perspective, pre-algo. I wrote about FB's toxicity and quit it, and I removed my LinkedIn timeline. Twitter I did differently: following #'s on Tweetdeck and broadcasting my blogposts. I fight to not be drawn into discussions, unless they're responses to my posts. In the past 4 yrs I have had good conversations on Mastodon. No clients either though, not in my line of work. Some visibility to my existing professional network does very much play an active role though.

-

Pretending Twitter is the answer to gaining respect for and engagement with my work is an addict’s excuse that removes responsibility from myself.

ouch. The metrics of engagement (likes, rts) make it possible to 'rationalise' this perception of needing it for one's work/career eg.

-

https://tracydurnell.com/2022/11/01/the-addictive-nature-of-twitter/

-

-

tweetfeed.org tweetfeed.org

-

An RSS feed of my Twitter account, for those who might like it: http://tweetfeed.org/ChrisAldrich/rss.xml

-

-

docs.tweetfeed.org docs.tweetfeed.org

-

TweetFeed Twitter to RSS with Markdown.

-

-

www.theguardian.com www.theguardian.com

-

Elon Musk’s dangerous view of democracy

-

-

github.com github.com

-

buzzmachine.com buzzmachine.com