This article is very poor. Teilhard de Chardin's theory is an attempt by a devout Catholic scientist to reconcile the religious ideas of the love of God and of teleology (that our life and our world have a purpose) with scientific understanding. To leave that aspect out is to miss the entire point of his work. The theory has been very influential in Christian theology generally and in Catholic theology especially, not just in the twentieth century but through to the present day.

This article attempts to treat it as a purely scientific theory, stripping away all religious elements. It mainly cites critics who ridicule both the idea that religion is relevant to science and the idea that our universe may have any teleology or purpose. An article about Christian ideas of the Resurrection that ignored the theological context would have the same problem. This approach is not used in other articles on Christian theology on Wikipedia.

Rather than annotate particular points in this article, I think it is best simply to direct the reader to the entry on him in the French Wikipedia, which is much better and is written as theology, and to some summaries of his work by other authors.

The Omega Point is a dynamic concept created by Pierre Teilhard de Chardin, who named it after the last letter of the Greek alphabet: Omega.

For Teilhard, the Omega Point is the ultimate point in the development of complexity and consciousness towards which the universe tends [1]. According to his theory, set out in The Future of Man and The Human Phenomenon, the universe is constantly evolving towards ever higher degrees of complexity and consciousness [1], the Omega Point being both the culmination and the cause of this evolution [1]. In other words, the Omega Point exists in a supremely complex and supremely conscious way, transcending the universe in the making.

For Teilhard, the Omega Point evokes the Christian Logos, that is, Christ, in that it draws all things to itself and is, according to the Nicene Creed, "God from God, Light from Light, true God from true God", with the addition: "through him all things were made".

The concept was later taken up by other authors, such as John G. Bennett (1965) and Frank Tipler (1994).

The Omega point has five attributes, which Teilhard details in The Human Phenomenon .

The five attributes

In The Human Phenomenon (Le Phénomène humain, 1955), Teilhard describes the five attributes of the Omega Point:

It must have always existed - only in this way can the evolution of the universe towards higher levels of consciousness be explained.

It must be personal - a person and not an abstract idea. The increasing complexity of matter has led not only to higher forms of consciousness but also to greater personalization, and human beings are the highest form of this "personalization" of the universe: they are fully "individualized", free centers of activity. It is in this sense that man is said to have been made in the image of God, who is the highest form of personality. Teilhard de Chardin expressly maintains that at the Omega Point, when the universe has become one through unification, persons will not be eliminated but super-personalized; personality will be infinitely richer. Indeed, the Omega Point unites creation, and as it unites it, the universe becomes more complex and its consciousness increases. The universe God created evolves towards forms of ever greater complexity and consciousness and finally, with man, towards personality, because God, who draws the universe to himself, is a person.

It must be transcendent - the Omega Point is not the product of complexity and consciousness. It exists before the evolution of the universe, because the Omega Point is the cause of the universe's evolution towards greater complexity, consciousness and personality. This essentially means that the Omega Point lies outside the framework within which the universe evolves, since it is through its magnetic attraction that the universe tends towards it.

It must be independent - free of the limits of space and time.

It must be irreversible - that is, it must be possible to attain it.

[1] Dominique de Gramont, Le Christianisme est un transhumanisme, Paris, Les Éditions du Cerf, September 2017, 365 p. (ISBN 978-2-204-11217-8)

Oxford Scholarship Online

This is how Linda Sargent Wood describes the idea, as summarized by Oxford Scholarship Online:

Merging Catholicism and science, Teilhard asserted that evolution was God's ongoing creative act, that matter and spirit were one, and that all was converging into one complete, harmonious whole. Though controversial, his organismic ideas offered an alternative to reductionistic, dualistic, mechanistic evolutionary views. They satisfied many who were looking for ways to reconnect with nature and one another; who wanted to revitalize and make personal the spiritual part of life; and who hoped to tame, humanize, and spiritualize science. In the 1960s many Americans found his book The Phenomenon of Man and other mystical writings appealing. He attracted Catholics seeking to reconcile religion and evolution, and he proved to be one of the most inspirational voices for the human potential movement and New Age religious worshipers. Outlining the contours of Teilhard's holistic synthesis in this era of high scientific achievement helps explain how some Americans maintained a strong religious allegiance.

Wood, L.S., 2012. A More Perfect Union: Holistic Worldviews and the Transformation of American Culture after World War II. Oxford University Press.

This is what Pope Benedict XVI says about Teilhard's idea of the Omega Point:

“Only where someone values love more highly than life, that is, only where someone is ready to put life second to love, for the sake of love, can love be stronger and more than death. If it is to be more than death, it must first be more than mere life. But if it could be this, not just in intention but in reality, then that would mean at the same time that the power of love had risen superior to the power of the merely biological and taken it into its service. To use Teilhard de Chardin’s terminology, where that took place, the decisive complexity or “complexification” would have occurred; bios, too, would be encompassed by and incorporated in the power of love. It would cross the boundary—death—and create unity where death divides. If the power of love for another were so strong somewhere that it could keep alive not just his memory, the shadow of his “I”, but that person himself, then a new stage in life would have been reached. This would mean that the realm of biological evolutions and mutations had been left behind and the leap made to a quite different plane, on which love was no longer subject to bios but made use of it. Such a final stage of “mutation” and “evolution” would itself no longer be a biological stage; it would signify the end of the sovereignty of bios, which is at the same time the sovereignty of death; it would open up the realm that the Greek Bible calls zoe, that is, definitive life, which has left behind the rule of death. The last stage of evolution needed by the world to reach its goal would then no longer be achieved within the realm of biology but by the spirit, by freedom, by love. It would no longer be evolution but decision and gift in one.”

Orthodoxy of Teilhard de Chardin: (Part V) (Resurrection, Evolution and the Omega Point)

Summary by Khan Academy

His views have also been seen as relevant to modern transhumanists, who want to apply technology to overcome our human limitations. Some of them think that his ideas foreshadowed this goal.

A movement known as transhumanism wants to apply technology to overcome human limitations. Followers believe that computers and humans may combine to form a "super brain," or that computers may eventually exceed human brain capacity. Some transhumanists refer to that future time as the "Singularity." In his 2008 article "Teilhard de Chardin and Transhumanism," Eric Steinhart wrote that:

Teilhard de Chardin was among the first to give serious consideration to the future of human evolution.... [He] is almost certainly the first to describe the acceleration of technological progress to a singularity in which human intelligence will become super intelligence.

Teilhard challenged theologians to view their ideas in the perspective of evolution and challenged scientists to examine the ethical and spiritual implications of their knowledge. He fully affirmed cosmic and biological evolution and saw them as part of an even more encompassing spiritual evolution toward the goal of ultrahumans and complete divinity. This hypothesis still resonates for some as a way to place scientific fact within an overarching spiritual view of the cosmos, though most scientists today reject the notion that the Universe is moving toward some clear goal.

Pierre Teilhard de Chardin: Paleontologist, Mystic and Jesuit Priest - Khan Academy

Book review: The Phenomenon of Man by Pierre Teilhard de Chardin

By Tom Butler-Bowdon

In a nutshell: By appreciating and expressing your uniqueness, you literally enable the evolution of the world.

For Teilhard humankind was not the centre of the world but the ‘axis and leading shoot of evolution’. It is not that we will lift ourselves above nature but, in our intellectual and spiritual quests, dramatically raise its complexity and intelligence. The more complex and intelligent we become, the less of a hold the physical universe has on us, he believed.

Just as space, the stars and galaxies expand ever outwards, the universe is just as naturally undergoing ‘involution’ from the simple to the increasingly complex; the human psyche also develops according to this law. ‘Hominisation’ is what Teilhard called the process of humanity becoming more human, or the fulfilment of its potential.

...

Teilhard said as humanity became more self-reflective, able to appreciate its place in space and time, its evolution would start to move by great leaps instead of a slow climb. In place of the glacial pace of physical natural selection, there would be a supercharged refinement of ideas that would eventually free us of physicality altogether. We would move irresistibly toward a new type of existence, at which all potential would be reached. Teilhard called this the ‘omega point’.

Book review: The Phenomenon of Man by Pierre Teilhard de Chardin

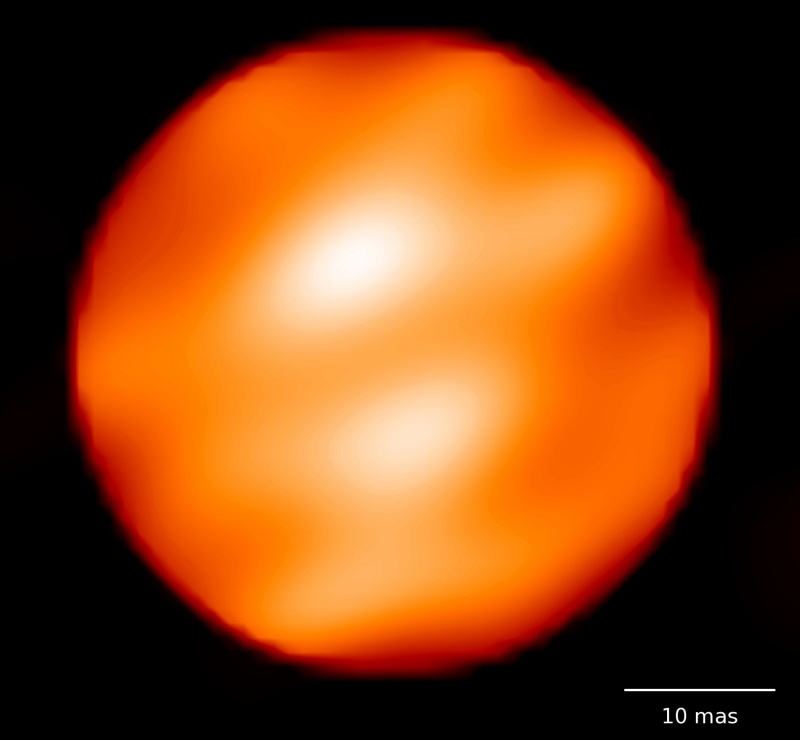

This is about a decade out of date. There is a higher-resolution image from 2009.
