‘A “digital divide” is never only digital; its consequences play out wherever political and economic decisions are made and wherever their results are felt.’

- Oct 2017

-

Local file

-

-

Inequality is expressed as leading to two divisions: between those who do and do not have access and between those who do or do not contribute to content or leave digital traces.

Increase capacity in the grassroots community to articulate its own voices.

-

We focus on three acts that symbolize particularly well the demands for openness—participating, connecting, and sharing. These acts are not all inclusive; there are certainly other acts, but they cover what we suggest are key digital acts and their enabling digital actions

-

If we focus on callings and the actions they mobilize and how they make acts possible, we also shift our focus from a freedom versus control dichotomy to the play of obedience, submission, and subversion. This is a play configured by the forces of legality, performativity, and imaginary which call upon subjects to be open and responsible and through which mostly governmental but also commercial and nongovernmental authorities try to maintain their grip on the conduct of those who are their subjects.

-

It is often forgotten that the citizen subject is not merely an intentional agent of conduct but also a product of callings that mobilize that conduct

-

We agree with Cohen on the seemingly paradoxical relation between imaginaries of openness (copyleft, commons) and closedness (copyright, privacy) and their connection to information freedom and control. She is right to argue that this paradox cannot be resolved in legal theory for two main reasons. First, freedom and control are not separate but require each other, and how this plays out involves calibration within specific situated practices.[6] Second, and relatedly, legal theory depends on a conception of abstract liberal autonomous selves rather than subjects that emerge from the creative, embodied, and material practices of situated and networked individuals

-

Today, being open has become a demand on organizations and individuals to share everything from software and publications to data about themselves. From open data, open government, open society

-

We cannot simply assume that being a digital citizen already means something, such as the ability to participate, and then look for whose conduct conforms to this meaning. Rather, digital acts are refashioning, inventing, and making up citizen subjects through the play of obedience, submission, and subversion

We used to speak of deliberation, implementation, and follow-up on decisions as forms of participation. From the Data Week, we are moving from follow-up toward the first two.

-

Being digital citizens is not simply the ability to participate.[2] We discussed in chapter 1 how Jon Katz described an ethos of sharing, exchange, knowledge, and openness in the 1990s. Today, these have become callings to perform ourselves in cyberspace through actions such as petitioning, posting, and blogging. These actions repeatedly call upon citizen subjects of cyberspace, and here we want to address their legal, performative, and imaginary force.

-

To understand digital acts we have to understand speech acts or speech that acts. The speech that acts means not only that in or by saying something we are doing something but also that in or by doing something we are saying something. It is in this sense that we have argued digital acts are different from speech acts only insofar as the conventions they repeat and iterate and conventions that they resignify are conventions that are made possible through the Internet. Ultimately, digital acts resignify questions of anonymity, extensity, traceability, and velocity in political ways.

-

To put it simply, while digital acts traverse borders, digital rights do not. This is where we believe thinking about digital acts in terms of their legality, performativity, and imaginary is crucial since there are international and transnational spaces in which digital rights are being claimed that if not yet legally in force are nevertheless emerging performatively and imaginatively. Yet, arguably, some emerging transnational and international laws governing cyberspace in turn are having an effect on national legislations. To put it differently, the classical argument about the relationship between human rights and citizenship rights, that the former are norms and only the latter carry the force of law, is not a helpful starting point.

-

The important thing is to separate acts (locutionary, illocutionary

The important thing is to separate acts (locutionary, illocutionary, perlocutionary), forces (legal, performative, imaginary), conventions, actions, bodies, and spaces that their relations produce.

-

It is well nigh impossible to make digital utterances without a trace; on the contrary, often the force of a digital speech act draws its strength from the traces that it leaves. As we said in chapter 2, each of these questions raised by digital acts can arguably be found in other technologies of speech acts—the telegraph, megaphone, radio, and telephone come to mind immediately. But it is when taken together that we think digital acts resignify these questions and combine to make them distinct from speech acts, in terms of both the conventions by which they become possible and the effects that they produce.

-

Digital acts will not eliminate distance (we understand distance here as not merely quantitative but also a qualitative metric), but the speed with which digital acts can reverberate is phenomenal.

These reverberation effects were felt at the Gobernatón and, to the extent that capacity is built at the grassroots rather than merely reacting, they are also felt in the Data Weeks, though with less force.

-

‘code is the only language that is executable.’[49] ‘So [for Galloway] code is the first language that actually does what it says—it is a machine for converting meaning into action.’[50] With Austin (and Wittgenstein), this conclusion comes as a major surprise to us. As we have argued in this chapter, for Austin (and Wittgenstein) language is an activity, and in or by saying something in language we do something with it—we act. To put it differently, language is executable.[51] There is no uniqueness to code in that regard, although while code is like language, it is different. We think that difference is to be sought in its effects and the conventions it creates through the Internet rather than in its ostensible unique nature

Language is executable!

-

For Galloway, ‘now, protocols refer specifically to standards governing the implementation of specific technologies. Like their diplomatic predecessors, computer protocols establish the essential points necessary to enact an agreed-upon standard of action.’

-

The premise of this book is that the citizen subject acting through the Internet is the digital citizen and that this is a new subject of politics who also acts through new conventions that not only involve doing things with words but doing words with things.

-

If callings summon citizen subjects, they also provoke openings and closings for making rights claims. We consider openings as those possibilities that create new ways of saying and doing rights. Openings are those possibilities that enable the performance of previously unimagined or unarticulated experiences of ways of being citizen subjects, a resignification of being speaking and acting beings. Openings are possibilities through which citizen subjects come into being. Closings, by contrast, contract and reduce possibilities of becoming citizen subjects

-

What we mean by this is that as a claim, the utterance ‘have a right to’ places demands on the other to act in a particular way.

[...] This is the sense in which the rights of a subject are obligations on others and the rights of others function as obligations on us.

-

This individual is autonomous not because it is separate or independent from society but as its product retains the capability to question its own institution. Castoriadis says that this new type of being is capable of calling into question the very laws of its existence and has created the possibility of both deliberation and political action.

This part connects with Fuchs and the agency-structure duality.

-

These are imaginaries not because they fail to correspond to concrete and specific experiences or things but because they require acts of imagination. They are social because they are instituted and maintained by impersonal and anonymous collectives. Being both social and imaginary, these institute society as coherent and unified yet always incoherent and fragmented. How each society deals with this tension constitutes its politics

Grafoscopio and the Data Week are situated within social imaginaries of what political participation is.

-

To understand citizen subjects who make rights claims by saying and doing ‘I, we, they have a right to’, we are moving from the first person to the second and the third, from the individual to the collective. We need to consider two additional forces that make acts possible. The two forces are the force of the law and the force of the imaginary.

Grafoscopio also enables these steps from the individual to the collective, through both imagination and the legal.

-

On the contrary, citizen subjects performatively come into being in or by the act of saying and doing something—whether through words, images, or other things—and through performing the contradictions inherent in becoming citizens.

-

This is the principal reason why we need to investigate not only things done in or by speaking through the Internet but also things said in or by doing things through the Internet.

-

‘The force of the performative is thus not inherited from prior usage, but issues forth precisely from its break with any and all prior usage. That break, that force of rupture, is the force of the performative, beyond all question of truth or meaning.’[22] For political subjectivity, ‘performativity can work in precisely such counter-hegemonic ways. That moment in which a speech act without prior authorization nevertheless assumes authorization in the course of its performance may anticipate and instate altered contexts for its future reception.’[23] To conceive rupture as a systemic or total upheaval would be futile. Rather, rupture is a moment where the future breaks through into the present.[24] It is that moment where it becomes possible to do something different in or by saying something different.

Here future acts guide present action and give it permission to occur. Just as the right to be forgotten is an imagined future right that breaks into present legislation, imagining a data portrait, or political campaigns in which such portraits matter, gives shape to present activism.

The key idea here is to do something different, which has been the principle behind Grafoscopio and the Data Week, grounded in their particular bets on a future that is largely discontinuous with present practices, both civic practices and those of literacies and popular uses of technology.

-

The key issue in speech acts becomes whether, and if so to what extent, what is sayable and doable follows or exceeds social conventions that govern a situation.

-

By advancing the idea that speech is not only a description (constative) but also an act (performative), Austin ushers in a radically different way of thinking about not only speaking and writing but also doing things in or by speaking and writing.

-

but bodies and their movements are implicit in speech that acts.

-

To put it differently, Austin’s concern with infelicitous is not a regret on his part but a recognition that speech does not only act, it also can fail to act or fail to act in ways anticipated.

-

By saying something, I have accomplished something. Thus, ‘of’ saying something has meaning (locutionary acts), whereas ‘in’ or ‘by’ saying something has force (illocutionary and perlocutionary acts).

-

Moreover, our concern with the Internet is not the speaking subject as such but how making rights claims brings citizen subjects into being. How do digital acts bring citizen subjects into being? Does the Internet introduce a radical difference for understanding citizen subjects? Does the language of the Internet—code—work like natural language?

-

This traversing of acts produces considerable complexities in becoming digital citizens. Second, we need to specify to what extent certain rights claimed by digital acts are classical rights (e.g., freedom of speech), to what extent they are analogous to classical rights (e.g., anonymity), and to what extent they are new (e.g., the right to be forgotten).

Tags

- tesis: marco teórico

- callings

- capacidad

- gobernatón

- estructura

- apertura

- brecha digital

- convención

- digital citizenship

- cosificación

- agencia

- protocolos

- tesis

- grafoscopio

- compromiso

- apertura vs clausura

- speech acts

- idea clave

- dicotomías

- control vs libertad

- imaginarios sociales

- participación

- enactive citizenship

- espacios

- bienes comunes

- data selfies

- data week

- digital rights

- trazabilidad

- infraestructura

- performance

- data activism

- forces of subjectivation

- anonimato

- corporeidad

- desigualdad

- distancia

- digital acts

Annotators

-

- Sep 2017

-

Local file

-

we will specify digital acts—callings (demands, pressures, provocations), closings (tensions, conflicts, disputes), and openings (opportunities, possibilities, beginnings)—as ways of conducting ourselves through the Internet and discuss how these bring cyberspace into being

-

we consider the subject called the citizen as a subject of power. While subject to power is produced by sovereign societies, the subject of power is produced by disciplinary and control societies. It is absolutely important to make it clear that the contemporary subject embodies all these three forms of power. This is the sense in which we consider the citizen subject as a subject of power and as a subject we inherit.

What other ways of being in the world were not inherited from the colonialist tradition? Could they be traced ethnographically, dialogically, and convivially in other places?

-

If we distinguish privacy from anonymity, we realize that anonymity on the Internet has spawned a new political development. If privacy is the right to determine what one decides to keep to herself and what to share publicly, anonymity concerns the right to act without being identified. The second concerns the velocity of acting through the Internet. For better or for worse, it is almost possible to perform an act on the Internet faster than one can think. The third concerns the extensity of acting through the Internet. The number of addressees and destinations that are possible for acting through the Internet is staggering. So, too, are the boundaries, borders, and jurisdictions that an act can traverse. The fourth concerns traceability. If it is performed on the Internet, an act can be traced in ways that are practically impossible outside the Internet. Taken together, anonymity, velocity, extensity, and traceability are questions that are resignified by bodies acting through the Internet

-

It is trite to say, but being an American citizen in New York is different from being an Iranian citizen in Tehran and not equivalent regardless of human rights conventions. Second, the boundaries of what is sayable and doable and thus the performativity of being citizens are radically different in, say, Tunis and Madrid. Finally, the imaginary force of acting as a citizen in Athens has a radically different history than it has, say, in Istanbul. These complexities and differentiations come to make a huge difference in how citizen subjects uptake certain possibilities and act and organize themselves through the Internet.

There are convivial exercises, tied to the territory but not confined by the particular laws of the country, in the creation of free software and open content. However, the force of the state makes itself present in cases such as that of Basil, whose activism led to his death.

-

A fourth group, in which Fuchs sees himself, argues that the objective conditions that led people to protest found mechanisms for expressing subjective positions, thereby helping organize these protests.[83] By emphasizing a difference between the Internet in general and social media, Fuchs identifies three dimensions of Internet usage, especially by the Occupy movement: building a shared imaginary of the movement, communicating its ideas to the world outside, and engaging in intense collaboration.[84] Fuchs also helpfully develops a much wider list of platforms used by the movement rather than dumping them all into an all-encompassing category of ‘social media’.

See https://hyp.is/R5PndKU_EeeMGeOQsHFMbw. Is this the same Fuchs as in my theoretical framework?

-

Although all of these protests were staged within relatively the same period, there were significant differences, as one would expect, for the reasons, methods, reactions, and effects of these acts. Yet it is fair to say that they all shared two qualities: all these acts were staged in squares and streets and were varyingly enacted through the Internet.[81] This has resulted in numerous interpretations of the relation between the two: squares and social media. It is quite unfortunate at the outset that the interpretive debates about the importance of the Internet in the staging of these acts have been framed in terms of ‘social media’.

The link between the public square and popular social networks is indeed unfortunate, since the latter resemble shopping malls more than public squares. Can the public square reconfigure social networks in settings such as Occupy and 15M, where long encampments would reshape practices around the virtual artifacts that helped articulate the face-to-face gatherings?

-

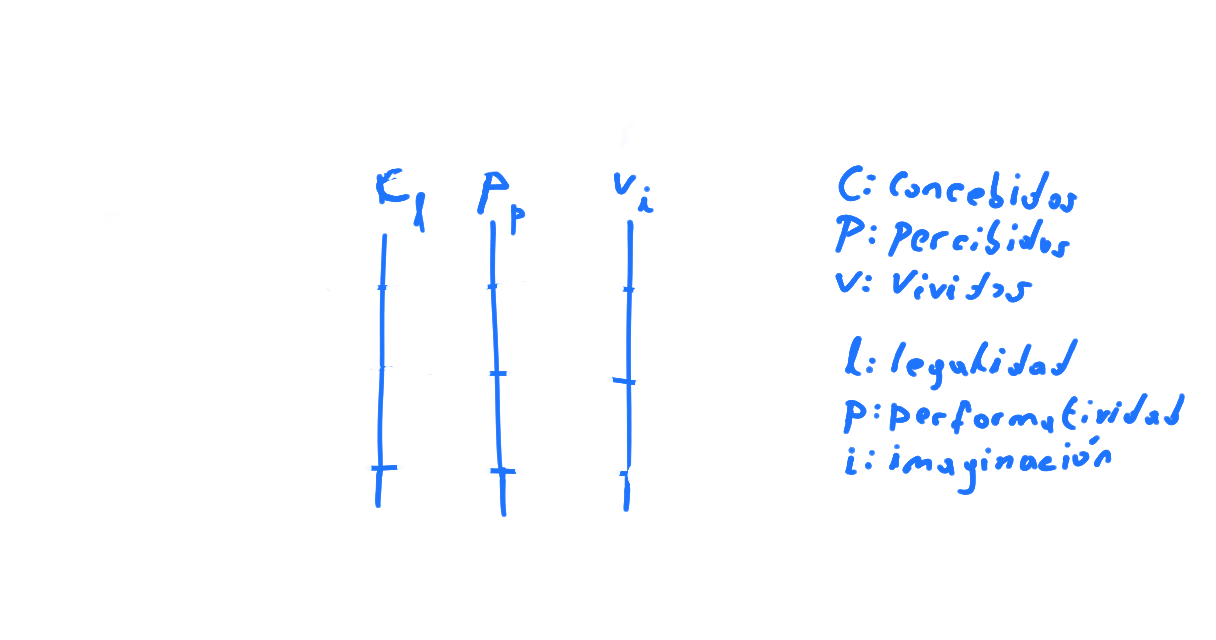

But before we proceed, let us note that we will not continue to use the language of conceived, perceived, and lived space. Instead, we will use the same categories we have introduced to discuss three forces of subjectivation that bring citizen subjects into being: legality, performativity, and imaginary.

The parallel is not perfect, but it will serve our purposes of maintaining a grip on the complexities of cyberspace as distinct from other spaces while approaching it from the perspective of acts that constitute it.

-

Having recognized that space exists in various registers, scholars also study such spaces as cultural, social, legal, economic, or political spaces. Their assumption is not that such spaces exist as separate and independent from spaces people inhabit, but these are analytical means to concentrate on a subset of relations that constitute such spaces for deeper understanding of how people inhabit, say, a cultural space, which is simultaneously yet asynchronously a conceived, perceived, and lived space.

The description of hacker and maker spaces could be connected with this account.

-

Following Henri Lefebvre, at least three registers of spaces have been elaborated: conceived space, perceived space, and lived space.[76] The essential point is that inhabiting spaces in three registers, we experience our being-in-the-world through simultaneous but asynchronous registers. Subjects inhabit conceived spaces such as objectifying practices that code, recode, present, and represent space to render it as a legible and intelligible space of habitation. People inhabit perceived spaces such as symbolic representations of space that guide our imaginative relationship to it. Subjects also inhabit lived spaces through things they do in or by living. Lived spaces are the spaces through which subjects act. These three registers of space are distinct yet overlapping but also interacting: by inhabiting them, we make them.

-

Deleuze thinks that, by contrast, in control societies ‘the key thing is no longer a signature or number but a code: codes are passwords, whereas disciplinary societies are ruled ... by precepts.’[67] He observes that ‘the digital language of control is made up of codes indicating whether access to some information should be allowed or denied.’[68] For Deleuze, control societies function with a new generation of machines and with information technology and computers. For control societies, ‘the passive danger is noise and the active [dangers are] piracy and viral contamination.

[...] For Deleuze, ‘[i]t’s true that, even before control societies are fully in place, forms of delinquency or resistance (two different things) are also appearing. Computer piracy and viruses, for example, will replace strikes and what the nineteenth century called “sabotage” (“clogging” the machinery).’

Other forms of contestation may involve creating alternative narratives using the same tools that create the structures of control.

-

Deleuze was now convinced that ‘[w]e’re moving toward control societies that no longer operate by confining people but through continuous control and instant communication.’[65] The space of control societies was diffuse and dispersed and decisively cybernetic in its modes of government.

-

The spaces that sovereign power produces correspond to such strategies and technologies of exclusion: expulsion, prohibition, banishment, eviction, exile, and deportation are such examples. By contrast, being a subject of power mobilizes strategies and technologies of discipline, which require submission but open up possibilities of subversion. The spaces that disciplinary power produces are appropriate to such strategies and technologies of discipline: asylums, camps, and barracks but also hospitals, prisons, schools, and museums as spaces of confinement. Each of these spaces is a space of contestation, competitive and social struggles in and through which certain forms of knowledge are produced in enunciations that perform subjects. Neither spaces of exclusion nor spaces of discipline are static or container spaces. They are dynamic and relational spaces. There are no ‘physical’ spaces separate from power relations and no power relations that are not embedded in spatializing strategies and technologies of power.

The idea of a hackerspace as a third space, where power dynamics play out as in any space, but this one is transitory, meritocratic, and contestable, even despite the apparent lack of structure in the space itself. As in other spaces, such contestation requires knowing the rituals of the space and its languages. It need not take a confrontational form and can occur simply through the creation of transitory alliances and communities of practice that choose some technologies over others. Practices such as the Data Week even make it possible to contest this particular practice, by making explicit the knowledge and materialities that constitute it.

-

Deibert rightly argues that ‘although cyberspace may seem like virtual reality, it’s not. Every device we use to connect to the Internet, every cable, machine, application, and point along the fibre-optic and wireless spectrum through which data passes is a possible filter or “chokepoint,” a grey area that can be monitored and that can constrain what we can communicate, that can surveil and choke off the free flow of communication and information.’[59] Not only does this mean that the Internet has material effects such as data centres, server clusters, and code, though this is certainly true and there are studies about these material forms.

-

Rather than understanding cyberspace as a separate and independent space, we interpret it as a space of relations. Put differently, Donna Haraway’s Cyborg Manifesto (1984), which is not about the then incipient Internet but about the interconnectedness of humans and machines, is just as relevant to our age of the Internet through which we both say and do things.

-

If the computerization of society raises such questions, the analysis of the production, dissemination, and legitimation of knowledge, on which it has a profound effect, cannot be restricted to understanding computerization as communication or computer-mediated communication. Rather, the object of investigation ought to be language games that became possible through what Lyotard saw as networked computers

-

What we find in Lyotard—albeit in incipient form—is that rather than conceiving a separate and independent space, the point is to recognize that power relations in contemporary societies are being increasingly mediated and constituted through computer networks that eventually came to be known as the Internet.

-

Since the means of production, dissemination, and legitimation of knowledge principally involves language, Lyotard saw language as the main site of social struggle. It is not surprising, then, that Lyotard was attracted to Ludwig Wittgenstein and J. L. Austin to develop a method of understanding language as a means of social struggle.

-

We have already characterized cyberspace as a space of relations between and among bodies acting through the Internet. We noted earlier that 1984 was the birth of the concept of cyberspace. Yet during the very same year, a much less known work, or rather, a work known much more for its title, Jean-François Lyotard’s The Postmodern Condition (1984), appeared.

[...] We want to revisit both Lyotard’s substantive argument and his method because, writing before the concept of cyberspace, his starting point is not an ostensibly existing space but changing social relations through computerization.

-

We disagree with this view of code. Although we gather from Lessig and other scholars such as Ron Deibert and Julie Cohen the importance of code, we cannot agree that code can or does have such a determining influence.[45] We will, however, explain this later in chapter 3, where we discuss in more detail the importance of language and the irreducible differences between speech, writing, and code. For now, we want to emphasize that if we are bound to use the concept ‘cyberspace’ and compare it to something called ‘real’ space, we’d better understand the complex registers in which cyberspace exists rather than being opposed to an ostensible ‘real’ space.

the irreducible differences between speech, writing, and code.

-

The anathema for Lessig is the loss of this freedom in cyberspace. In real space, governing people requires inducing them to act in certain ways, but in the last instance, people had the choice to act this way or that way. By contrast, in cyberspace conduct is governed by code, which takes away that choice. In cyberspace, ‘if the regulator wants to induce a certain behavior, she need not threaten, or cajole, to inspire the change. She need only change the code—the software that defines the terms upon which the individual gains access to the system, or uses assets on the system.’[37] This is because ‘code is an efficient means of regulation. But its perfection makes it something different. One obeys these laws as code not because one should; one obeys these laws as code because one can do nothing else. There is no choice about whether to yield to the demand for a password; one complies if one wants to enter the system. In the well implemented system, there is no civil disobedience.’[38] What Lessig suggests is that cyberspace is not only separate and independent but constitutes a new mode of power. You constitute yourself as a subject of power by submitting to code.

In this particular case, forking is politics through code, because other places in cyberspace can be created by exercising this power to fork, provided the code is understood.

In a 2008 conversation with Jose David Cuartas, I mentioned to him that the freedoms of free software remain theoretical if one does not understand that software’s source code (the instructions by which it operates and is built). The practices around code, as well as the physical, community, symbolic, and computational environments in which those practices take place, are important for encouraging (or discouraging) such understanding and, ultimately, for allowing other code to open the possibility of dissent and of building different places. Hence the importance of pocket infrastructures, since they lower the costs of forking and of building from difference.

-

Leaving aside the paradox of using an American experience and language for creating a universal ‘civilization of the mind’, the declaration reveals that cyberspace is to be conceived not only as metaphysical (no bodies and no matter) but also as an autonomous space

-

In the documentary film No Maps for These Territories he recounts, ‘[A]ll I knew about the word “cyberspace” when I coined it, was that it seemed like an effective buzzword. It seemed evocative and essentially meaningless.’[31] This is reminiscent of Nietzsche’s genealogical principle that just because something comes into being for one purpose does not mean that it will serve that purpose forever.

[K:] Review this documentary.

-

Cyberspace is a space of social struggles and no less or more ‘real’ than, say, social space or cultural space—concepts that also describe relations between bodies and things. Yet this separation between ‘real’ space and cyberspace is so pervasive and carries a baggage that needs questioning.

-

the figure of the citizen cannot enter into debates about the Internet as a subject without history and without geography—and without contradictions. Rather, a critical approach to the figure of the citizen at a minimum recognizes that it is both a subject to power and subject of power and that this figure embodies obedience, submission, and subversion as its dispositions

-

The imaginary of citizenship includes a whole series of statements and utterances about what citizenship is, ought to be, has been, will have to be, and so on. The imaginary of citizenship is obviously mobilized by and participates in the formation of the legality of citizenship and its performativity

-

We have identified this as the contradiction between submission and subversion or consent and dissent. Jacques Rancière captures this as dissensus.[27] We will return to dissensus in chapter 7. Second, while articulating a particular demand (for inclusion, recognition), performing citizenship enacts a universal right to claim rights. This is the contradiction between the universalism and particularism of citizenship.

These claims for recognition have taken different forms in the practices of the Data Week. Who are our supposed interlocutors? By whom do we want to be recognized from within our alternative practices? I would say it is some kind of institutional configuration: business, academia, and above all government, for although not all of us are in the first two, it is true that we all inhabit Colombian territory. One of the efforts of the Gobernatón, for example, was to think of a more equitable way of distributing public resources among diverse grassroots communities, and not only among those captured by the discourse of innovation.

-

First, performing citizenship both invokes and breaks conventions. We shall characterize conventions broadly as sociotechnical arrangements that embody norms, values, affects, laws, ideologies, and technologies. As sociotechnical arrangements, conventions involve agreement or even consent—either deliberate or often implicit—that constitutes the logic of any custom, institution, opinion, ritual, and indeed law or embodies any accepted conduct. Since both the logic and embodiment of conventions are objects of agreement, performing these conventions also produces disagreement. Another way of saying this is that the performativity of conduct such as making rights claims often exceeds conventions

-

‘we make rights claims to criticize practices we find objectionable, to shed light on injustice, to limit the power of government, and to demand state accountability and intervention.’

Can this performativity build alternatives in which the state is absent, instead of opposing or questioning it? What other configurations of governance are possible?

-

If making rights claims is performative, it follows that these rights are neither fixed nor guaranteed: they need to be repeatedly performed. Their coming into being and remaining effective requires performativity. The performative force of citizenship reminds us that the figure of the citizen has to be brought into being repeatedly through acts (repertoires, declarations, and proclamations) and conventions (rituals, customs, practices, traditions, laws, institutions, technologies, and protocols). Without the performance of rights, the figure of the citizen would merely exist in theory and would have no meaning in democratic politics.

-

If indeed we understand this dynamic of taking up positions as subjectivation, we then identify three forces through which citizen subjects come into being: legality, performativity, and imaginary. These are neither sequential nor parallel but simultaneous and intertwined forces of subjectivation

-

Who is then the citizen? Balibar says that the citizen is a person who enjoys rights in completely realizing being human and is free because being human is a universal condition for everyone.[19] We would say the citizen is a subject who performs rights in realizing being political because becoming political is a universal condition for everyone.

[...] ‘Western concepts and political principles such as the rights of [hu]man[s] and the citizen, however progressive a role they played in history, may not provide an adequate basis of critique in our current, increasingly global condition.’[20] Poster says this is so, among other things, because Western concepts arise out of imperial and colonial histories and because situated differences are as important as universal principles.[21] This contradiction of the figure of the citizen can be expressed in another paradoxical phrase: universalism as particularism.

-

The rights that the citizen holds are not the rights of an already-existing sovereign subject but the rights of a figure who submits to authority in the name of those rights and acts to call into question its terms. This is the inescapable and inherited contradiction between submission and subversion of the figure of the citizen that can be expressed in a paradoxical phrase: submission as freedom.

submission as freedom.

Being a subject of rights within a state (submitting to its power) also implies the possibility of rising up and imagining other forms of citizenship.

-

If we focus on how people enact themselves as subjects of power through the Internet, it involves investigating how people use language to describe themselves and their relations to others and how language summons them as speaking beings. To put it differently, it involves investigating how people do things with words and words with things to enact themselves. It also means addressing how people understand themselves as subjects of power when acting through the Internet.

-

For us, this also means that acts of truth afford possibilities of subversion. Being a subject of power means responding to the call ‘how should one “govern oneself” by performing actions in which one is oneself the objective of those actions, the domain in which they are brought to bear, the instrument they employ, and the subject that acts?’[14] In describing this as his approach, Foucault was clear that the ‘development of a domain of acts, practices, and thoughts’ poses a problem for politics.[15] It is in this respect that we consider the Internet in relation to myriad acts, practices, and thoughts that pose a problem for the politics of the subject in contemporary societies.

-

What distinguishes the citizen from the subject is that the citizen is this composite subject of obedience, submission, and subversion. The birth of the citizen as a subject of power does not mean the disappearance of the subject as a subject to power. The citizen subject embodies these forms of power in which she is implicated, where obedience, submission, and subversion are not separate dispositions but are always-present potentialities.

-

But these are not pure forms; rather, the citizen subject embodies these as potentialities. Being a subject to power is marked by the citizen’s domination by the sovereign, and her rights derive from that which is given to her by the (patriarchal) sovereign. Being a subject of power means being an agent of power, even if this requires submission.

-

a subject is a composite of multiple forces, identifications, affiliations, and associations. The subject is divided by these elements rather than by tradition and modernity. It also asserts that a subject is a site of multiple forms of power (sovereign, disciplinary, control) that embodies composite dispositions (obedience, submission, subversion).

-

that question the assumption that citizenship is membership in only a nation-state

[...] Rather, critical citizenship studies often begins with the citizen as a historical and geographic figure—a figure that emerged in particular historical and geographical configurations and a dynamic, changing, and above all contested figure of politics that comes into being by performing politics.[7]

-

The field begins with citizenship defined as rights, obligations, and belonging to the nation-state. Three rights (civil, political, and social) and three obligations (conscription, taxation, and franchise) govern relationships between citizens and states. Civil rights include the right to free speech, to conscience, and to dignity; political rights include voting and standing for office; and social rights include unemployment insurance, universal health care, and welfare.

-

how multiple actors would need to resist surveillance strategies but also the question of how Internet users will adjust their everyday conduct. It is an open question whether Internet users ‘will continue to participate in their own surveillance through self-exposure or develop new forms of subjectivity that is more reflexive about the consequences of their own actions’

-

Given its pervasiveness and omnipresence, avoiding or shunning cyberspace is as dystopian as quitting social space; it is also certain that conducting ourselves in cyberspace requires, as many activists and scholars have warned, intense critical vigilance. Since there cannot be generic or universal answers to how we conduct ourselves, more or less every incipient or existing political subject needs to ask in what ways it is being called upon and subjectified through cyberspace. In other words, to return again to the conceptual apparatus of this book, the kinds of citizen subjects cyberspace cultivates are not homogenous and universal but fragmented, multiple, and agonistic. At the same time, the figure of a citizen yet to come is not inevitable; while cyberspace is a fragile and precarious space, it also affords openings, moments when thinking, speaking, and acting differently become possible by challenging and resignifying its conventions. These are the moments that we highlight to argue that digital rights are not only a project of inscriptions but also enactment.

What are we called upon to do, and how do we respond to that call? This question has been a tacit part of what we do in the Data Week.

-

digital acts resignify four political questions about the Internet

anonymity, extensity, traceability, and velocity.

The first and the third have been permanently present in the discourse of the collectives I have been involved with (RedPaTodos, HackBo, Grafoscopio, etc.).

-

We argue that making rights claims involves not only performative but also legal and imaginary forces. We then argue that digital acts involve conventions that include not only words but also images and sounds and various actions such as liking, coding, clicking, downloading, sorting, blocking, and querying.

-

We develop our approach to being digital citizens by drawing on Michel Foucault to argue that subjects become citizens through various processes of subjectivation that involve relations between bodies and things that constitute them as subjects of power. We focus on how people enact themselves as subjects of power through the Internet and at the same time bring cyberspace into being. We position this understanding of subjectivation against that of interpellation, which assumes that subjects are always and already formed and inhabited by external forces. Rather, we argue that citizen subjects are summoned and called upon to act through the Internet and, as subjects of power, respond by enacting themselves not only with obedience and submission but also subversion.

-

when we consider Twitter, for instance, we can ask: How do conventions such as microblogging platforms configure actions and create possibilities for digital citizens to act?

It is curious that the authors have also focused on this platform, as we have done repeatedly in the Data Week sessions.

-

citizenship as a site of contestation or social struggle rather than bundles of given rights and duties.[41] It is an approach that understands rights as not static or universal but historical and situated and arising from social struggles. The space of this struggle involves the politics of how we both shape and are shaped by sociotechnical arrangements of which we are a part. From this follows that subjects embody both the material and immaterial aspects of these arrangements where distinctions between the two become untenable.[42] Who we become as political subjects—or subjects of any kind, for that matter—is neither given or determined but enacted by what we do in relation to others and things. If so, being digital and being citizens are simultaneously the objects and subjects of political struggle

-

So rather than defining digital citizens narrowly as ‘those who have the ability to read, write, comprehend, and navigate textual information online and who have access to affordable broadband’ or ‘active citizens online’ or even ‘Internet activists’, we understand digital citizens as those who make digital rights claims, which we will elaborate in chapter 2.

These instrumental definitions of citizenship appeared in government projects oriented toward the instrumental development of computing skills (particularly office software) rather than being framed in terms of rights. A language grounded in rights need not be tied to the idea of the nation-state.

-

But the figure of cyberspace is also absent in citizenship

-> But the figure of cyberspace is also absent in citizenship studies as scholars have yet to find a way to conceive of the figure of the citizen beyond its modern configuration as a member of the nation-state. Consequently, when the acts of subjects traverse so many borders and involve a multiplicity of legal orders, identifying this political subject as a citizen becomes a fundamental challenge. So far, describing this traversing political subject as a global citizen or cosmopolitan citizen has proved difficult if not contentious.

-

To put it differently, the figure of the citizen is a problem of government: how to engage, cajole, coerce, incite, invite, or broadly encourage it to inhabit forms of conduct that are already deemed to be appropriate to being a citizen. What is lost here is the figure of the citizen as an embodied subject of experience who acts through the Internet for making rights claims. We will further elaborate on this subject of making rights claims, but the figure of the citizen that we imagine is not merely a bearer or recipient of rights that already exist but one whose activism involves making claims to rights that may or may not exist.

[...] This absence is evinced by the fact that the figure of the citizen is rarely, if ever, used to describe the acts of crypto-anarchists, cyberactivists, cypherpunks, hackers, hacktivists, whistle-blowers, and other political figures of cyberspace. It sounds almost outrageous if not perverse to call the political heroes of cyberspace as citizen subjects since the figure of the citizen seems to betray their originality, rebelliousness, and vanguardism, if not their cosmopolitanism. Yet the irony here is that this is exactly the figure of the citizen we inherit as a figure who makes rights claims. It is that figure that has been betrayed and shorn of all its radicality in the contemporary politics of the Internet. Instead, and more recently, the figure of the citizen is being lost to the figure of the human as recent developments in corporate and state data snooping and spying have exacerbated.

The critique of the hacker perspective as being defined in opposition to government does not consider those spaces where hackers have been ahead of the state (the Free Software Law), thinking up new rights and new scenarios of conviviality in our technology-mediated relationships. Of course, we cannot rid ourselves of the urban context in which we operate or of the totalizing presence of the state and its institutions, so we interact with it; but we are not defined as persons exclusively by that interaction (whether by affirmation or by opposition).

-

Mark Poster, for example, argues that these involvements are giving rise to new political movements in cyberspace whose political subjects are not citizens, understood as members of nation-states, but instead netizens.[34] By using the term ‘digital citizenship’ as a heuristic concept, Nick Couldry and his colleagues also illustrate how digital infrastructures understood as social relations and practices are contributing to the emergence of a civic culture as a condition of citizenship

-

What is important to recognize is that although the Internet may not have changed politics radically in the fifteen years that separate these two studies, it has radically changed the meaning and function of being citizens with the rise of both corporate and state surveillance

-

More significantly, digital studies spans both social sciences and humanities as well as science and technology studies and asks questions concerning the relation of digital technologies to social and cultural change.

-

First, by bringing the political subject to the centre of concern, we interfere with determinist analyses of the Internet and hyperbolic assertions about its impact that imagine subjects as passive data subjects. Instead, we attend to how political subjectivities are always performed in relation to sociotechnical arrangements to then think about how they are brought into being through the Internet.[13] We also interfere with libertarian analyses of the Internet and their hyperbolic assertions of sovereign subjects. We contend that if we shift our analysis from how we are being ‘controlled’ (as both determinist and libertarian views agree) to the complexities of ‘acting’—by foregrounding citizen subjects not in isolation but in relation to the arrangements of which they are a part—we can identify ways of being not simply obedient and submissive but also subversive. While usually reserved for high-profile hacktivists and whistle-blowers, we ask, how do subjects act in ways that transgress the expectations of and go beyond specific conventions and in doing so make rights claims about how to conduct themselves as digital citizens

The idea that we are imbricated in sociotechnical arrangements, and that hackers deconstruct and stretch these arrangements through their material practice, also implies a connection between the way hackers deconstruct technology and the way citizenships mediated by those sociotechnical arrangements are configured.

-

Along with these political subjects, a new designation has also emerged: digital citizens. Subjects such as citizen journalists, citizen artists, citizen scientists, citizen philanthropists, and citizen prosecutors have variously accompanied it.[7] Going back to the euphoric years of the 1990s, Jon Katz introduced the term to describe generally the kinds of Americans who were active on the Internet.[8] For Katz, people were inventing new ways of conducting themselves politically on the Internet and were transcending the straitjacket of at least American electoral politics caught

-

Moreover, with the development of the Internet of things—our phones, watches, dishwashers, fridges, cars, and many other devices being always already connected to the Internet—we not only do things with words but also do words with things.

These connected devices generate enormous volumes of data about our movements, locations, activities, interests, encounters, and private and public relationships through which we become data subjects.

-

If the Internet—or, more precisely, how we are increasingly acting through the Internet—is changing our political subjectivity, what do we think about the way in which we understand ourselves as political subjects, subjects who have rights to speech, access, and privacy, rights that constitute us as political, as beings with responsibilities and obligations?

-

As Ronald Deibert recently suggested, while the Internet used to be characterized as a network of networks it is perhaps more appropriate now to see it as a network of filters and chokepoints.[4] The struggle over the things we say and do through the Internet is now a political struggle of our times, and so is the Internet itself.

-

Evgeny Morozov’s The Net Delusion (2011), Turkle’s own Alone Together (2011), or Jamie Bartlett’s The Dark Net (2014) strike much more sombre, if not worried, moods. While Morozov draws attention to the consequences of giving up data in return for so-called free services, Turkle draws attention to how people are getting lost in their devices. Bartlett draws attention to what is happening in certain areas of the Internet when pushed underground (removed from access via search engines) and thus giving rise to new forms of vigilantism and extremism. Perhaps the spying and snooping by corporations and states into what people say and do through the Internet has become a watershed event.

-

That for many people Aaron Swartz, Anonymous, DDoS, Edward Snowden, GCHQ, Julian Assange, LulzSec, NSA, Pirate Bay, PRISM, or WikiLeaks hardly require introduction is yet further evidence. That presidents and footballers tweet, hackers leak nude photos, and murderers and advertisers use Facebook or that people post their sex acts are not so controversial as just recognizable events of our times. That Airbnb disrupts the hospitality industry or Uber the taxi industry is taken for granted. It certainly feels like saying and doing things through the Internet has become an everyday experience with dangerous possibilities.

Tags

- poder

- sumisión

- digital citizenship

- imaginación

- distopia

- tesis: recomendaciones

- contestación

- protesta

- autocensura

- idea clave

- comunidad de práctica

- imbricación

- dispocisiones

- dicotomías

- infraestructuras de bolsillo

- alcance

- self description

- espacios

- self referential

- cibernética

- poder soberano

- amoldable

- ciudadanías otras

- diversidad

- privacy

- data week

- QOTD

- trazabilidad

- diálogo de materialidades

- sujeto

- Kittler

- infraestructura

- repolitización

- tesis: hackerspaces

- performance

- forces of subjectivation

- anonimato

- ciudadanía

- self governance

- ciberespacio

- tesis: resultados

- hackerspaces

- digital acts

- tesis: marco teórico

- subjetividades

- poder disciplinario

- computerization

- bifurcación

- juegos de lenguaje

- vigilantismo

- derechos

- self empowerment

- tesis

- velocidad

- convivialidad

- definition

- politics

- disenso

- materialidades

- subversión

- obediencia

- enactive citizenship

- popularización

- contradicciones

- microblogging

- bienes comunes

- governance

- lenguaje

- prácticas

- hackeable

- digital studies

- control

- kanban: por hacer

- data activism

- estado-nación

- vigilance

Annotators

-

-

www.thesociologicalreview.com

-

Theoretically informed sociological analyses of digital life can challenge the often implicit assumptions of those approaches which reinscribe divisions between humans and technologies, online and offline lives, agency and structure, and freedom and control. While these may be old dichotomies for some, they continue to have force and need to be challenged.

-

While much attention is reserved for whistleblowers and hactivists as the vanguards of Internet rights, there are many more anonymous political subjects of the Internet who are not only making rights claims by saying things but also by doing things through the Internet.

-

Like other social spaces that sociologists study, cyberspace is not designed and arranged and then experienced by passive subjects. Like the physical spaces of cities that geographers have long studied, it is a space that is brought into being by citizen subjects who act in ways that submit to but also at the same time go beyond and transgress the conventions of the Internet. In doing so they are not simply obedient and submissive but also subversive and participate in the making of and rights claims to cyberspace through their digital acts.

The idea of building maps of these cybergeographies is interesting. This could be the quotation for the chapter on visualizations.

-

Such a conception moves us away from how we are being ‘liberated’ or ‘controlled’ to the complexities of ‘acting’ through the Internet where much of what makes it up is seemingly beyond the knowledge and consent of citizen subjects. To be sure, one cannot act in isolation but only in relation to the mediations, regulations and monitoring of the platforms, devices, and algorithms or more generally the conventions that format, organize and order what we do, how we relate, act, interact, and transact through the Internet. But it is here between and among these distributed relations that we can identify a space of possibility—a cyberspace perhaps—that is being brought into being by the acts of myriad subjects.

-

The problem is that popular critics have become too concerned about the Internet creating obedient subjects to power rather than understanding that it is also creating submissive subjects of power who are potentially and demonstrably capable of subversion. I believe that addressing the question I posed at the beginning requires revisiting the question of the (political) subject. By reading Michel Foucault, Etienne Balibar conceived of the citizen as not merely a subject to power or subject of power but as embodying both. Balibar argued that being a subject to power involves domination by and obedience to a sovereign whereas being a subject of power involves being an agent of power even if this requires participating in one’s own submission. However, it is this participation that opens up the possibility of subversion and this is what distinguishes the citizen from the subject: she is a composite subject of obedience, submission, and subversion where all three are always-present dynamic potentialities.

-

-

Local file

-

Fellows particularly advocate for improved transparency and improved effectiveness of local government services through open government data (Maruyama, Douglas, and Robertson 2013). After finishing the year fellows pursue a range of non- and for-profit career paths

-

Altering the infrastructure for governance marks CfA as different from other progressive organizations focused on, for example, electoral politics or youth mobilization. Participation entails personalized involvement where individuals create or alter digital infrastructures to support community need

-

-

Local file

-

The benefit of these spaces is best summed up as flexibility. Members are supported as they join the space, become peripheral participants, and potentially, become longstanding members engaged in ongoing projects. Hacking, like art, becomes not the domain of the elite or reified objects but intimately tied with everyday experiences throughout one's life (Dewey, 1934). The pragmatic devotion of HMSs to recursive problem-solving attracts members who see HMSs bringing informal education and collaborative sociality to their city. In interviews GeekSpace members freely offered beliefs about why they saw HMSs as vital to reforming their city at large. Flexibility, exercised through the constant churn of hands-on work on projects, was coupled with optimism for making a better future. Kligler-Vilenchik et al. (2012) describe a similar desire in civically-minded youth organizations as a "wish to help" (para. 1.5), a form of engagement more familiar to volunteerism than hackers that exert their collective power through protest or software (Coleman, 2012; Sauters, 2013). Above all else, this optimism drives HMS members as they seek to reframe what hacking and making can accomplish.

-

democratization of hacking itself. This claim, however, threatens to unrealistically situate hackerspaces as paragons of learning and overly central to hacker culture at large, and democracy as a panacea. As discussed, GeekSpace was not without exclusion that operated in spite of its official ideology. Further, GeekSpace was constantly being re-built around individual conflicts, organizational collaborations, and cultural shifts. Returning to revisit the question of collectivity itself, the emphasis of the collective is on maximizing perceptions of individual agency through material and social encounters. This harkens back to Thomas' (2011) observation that "collectives provide tools for the unique and individual expression of identity within the collective itself" (p. 2) and is why "community," which works quite oppositely, is likely the wrong form of social structure at work. HMSs provide a context for a negotiated sociality - sometimes warm conversations, frequently simply co-working. This provides a physical example of Turkle's (1985) observation that, online, "hacker culture is a culture of loners who are never alone" (p. 196). The failure of the first incarnation of GeekSpace was, in the eyes of members, an abundance of socialization.

This preference for solitude has also been seen at HackBo, as has the tension between the individual and the collective. Projects are what make the hackerspace work, but it is not clear how they project it beyond its current state, particularly when it comes to supporting its sustainability over time.

The existence of a hackerspace does not democratize the notion of hacking, even though it makes hacking an everyday matter. Democracy, in fact, is not an everyday matter, if one considers that it crystallizes only every four years at election time and otherwise consists of generalized complaining about what those in government do, with no oversight or control by citizens. New forms of citizenship could be articulated in spaces like these, starting from everyday life.

-

Hacker and maker represented not so much discrete categories as fluid identities that emerged based on mode of work, personal history, and comfort with cultural alignment. This cozy relationship troubles easy stereotypes of hacking as related to scientific rationality and making to felt experience. For example, as Lingel and Regan (2014) observe, the experiences of software developers can be both highly rational and deeply embodied, resulting in their thinking about coding as process, embodiment, and community. The thrill or pleasure of hacking being linked simply to transgression or satisfaction of completing a difficult job seems lacking (Taylor, 1999; Turkle, 1984). From the side of craft, Daniella Rosner (2012) draws a historic connection to the humble bookbindery as a material-workspace collaboration and site of personalized routines and encounters with tools that lead to complex collaborations. These ethnographies take into account passions and relative definitions of technology that are often neglected in organizational studies. Thus, these inroads to informal learning could be mutually informed by Leonardi's (2011) notion of imbrication, where material and individual agencies are negotiated through routines over time.

-

Third, the space acted as a recruitment tool for participants and served as a source of solidarity as members rallied around the space. This emphasis on actively inviting new members drew attention to the group's latent desire for open-access, a radical shift from the often insular nature of hacker culture.

At HackBo, the activity that draws the most outside people into the community is the Data Week, and a few of them become permanent members. Some members prefer to keep membership closed, even though we face recurring crises over paying the monthly dues that cover rent and utilities; it is an economically very fragile space that requires the constant solidarity of its members.

-

Hacker and maker spaces are nearly universally defined within relatively affluent western cultures, raising obvious questions of economic privilege as well as more difficult ones of how spaces are defined by various cultural imaginaries. Mirroring previous work on collective organizations, HMS members' desire for an idealized space appears driven both by their exposure to a participatory democracy (Turner, 2013) and shortcomings they see in that model. Participatory culture, as an ideal, may be a utopian goal (Jenkins & Carpentier, 2013, p. 2; Turner, 2008), imagined here through material engagement.

The critique about the absence of local referents, and of a stronger dialogue with them, has been voiced locally: how does the hackerspace converse with ancestral ideas such as buen vivir? Some efforts, such as the Ecuadorian initiative, started such dialogues, but they have not been widely taken up or extended.

-

For example, in the old space, visitors needed to obtain two signatures from two members to be approved for membership. This was seen by the directorship as a small barrier to entry and by female visitors as an unnerving time to be "judged" by uncertain criteria and leadership. In other words, what the two founders saw as a "weak" culture was interpreted as a "strong" one by women visitors. Querying the power dynamics of interactions takes us further towards unpacking when a cultural style can be inhibiting of participation, particularly among geeks who may embrace alternative masculinities (Kendall, 2002; McRobbie & Garber, 1976; Wilkins, 2008).

The two votes required for admission were introduced later, after a lax membership policy (anyone who paid the fee could join) had let in a member whose attitudes made others openly uncomfortable.

-

ad-hoc groups that form and just disband. They form again and disband. Then the individual ties between units in the group become stronger as part of this experience."

-

Projects were vital to linking personal interests to sharing and collaboration, with one member, echoing the overall pragmatism of the space, describing them as "education in disguise."

[...] Materiality took center stage as projects acted as an assemblage around which participation occurred.

In the case of the Manual de Periodismo de Datos (Data Journalism Handbook), its project-based nature and fixed deadline were important for building community around it. Other projects, such as the Twitter data selfies, were iterated at length, delivering visible results but without such tight deadlines. Both forms of participation were necessary and complementary, and they suited the different rhythms of participants: some appreciated the "call to action" of the first kind of project, while others preferred the strategic, long-range, and iterative nature of the second. The two materialities and dynamics lay on a continuum and appeared more in one type of project than in the other, without being exclusive to either.

-

The "doing it together" instinct in HMSs may be one precipitated out around projects. Cognitive, classroom-based definitions of project-based learning tend to be formulaic (Blumenfeld et al., 1991). By comparison, the colloquial definition of project can be handily ambiguous about what is under construction. A project can be artistic, technical, or culinary (in the case of "food hacking"). It can be related to one's occupation, entirely recreational, or part of a movement from one to the other.

At HackBo, meritocracy established transitory hierarchies around the projects under way, and accordingly the people with the most experience in a given field guided the work of the others. People did move from one project to another, but once they had explored those projects they could also settle mainly into one, as was the case with Grafoscopio and data visualization.

-

A physical workshop, then, helped reconcile often highly individualistic and introverted personalities to pursue collaborative learning together. The community nature of the space meant that it was easy to drop by, and relationships often developed between members. However, GeekSpace was initially populated by members often in technical professions and used as a "social club" to blow off steam, play foosball, and drink. The learning that occurred there was mainly self-education and listening to the occasional guest speaker. In retrospect, members, particularly those not coming from technical backgrounds, saw the failure of this space as the lack of collaborative work in the form of projects.

This also happened at HackBo. At the beginning there was a lack of projects, and most took a long time to "gel". Some people sought to carry out income-generating projects to secure the existence of the space, directly or indirectly (for example, if a crowdfunding campaign for another project succeeded), but this meant entering into project-management logics and time demands that not all members wanted to take on. Other visitors sought technical support and mentoring from the space, but such support sessions could not be organized consistently. On several occasions there was talk of holding an Open House so that new people could become familiar with the space, but this did not happen either.

At the beginning it was mainly a social space, with recurring social gatherings where the bulk of the community met to talk or have dinner rather than to carry out projects, and the projects involved only subgroups of the community.

-

Quite to the contrary, members were self-regulating and saw tools and space as providing deterministic solutions (Jordan, 2008). The materialities of the space and tools allowed them to operate a democratic-meritocratic system focused on shared work with a minimum of hierarchy or rules. This spatio-materialistic perspective echoed a hacker reliance on "rough consensus and running code" that moved projects forward (Davies, Clark, & Legare, 1992, p. 543).

-

The most important shift in learning during this period was members' relationship to knowledge. GeekSpace attempted to democratize hacking and move towards a more inclusive model. This stands in contrast to Jean Burgess' observation that hacking "as an ideal, permits rational mastery ... but in reality, it is only the technical avant-garde (like computer scientists or hacker subcultures) who achieve this mastery" (Burgess, 2012, p. 30). Individualized encounters with software gave way to making and hardware tinkering where users learned by doing (Rosenberg, 1982). Collaborative work in the space took place in small groups clustered around a project, or the projects were passed from person to person to solve specific problems. The frustrations members had with the first phase of the space organically shifted to a set of practices based in materials, routines, and projects. "Collaboration on ideas and [their] physical manifestations," in the words of a GeekSpace director, is "how you tell somebody's part of the community."

A similar shift took place at HackBo, at least with regard to the Data Week and the Data Rodas as experiences and rituals of intentional, semi-structured learning, rather than going to watch people doing things on their own.

-

Somewhat paradoxically, the group took advantage of their backgrounds in software even as they were careful to denote they weren't "those kinds" of hackers. In correcting misunderstandings they both negotiated the stigma around hacking while retaining the term as central to their operations, albeit less so than before. Wayne, a director, would use the term in public because he knew people might question it. He saw this as a chance for redefinition, to get people to "realize that we're not just guys who read 2600 and try and make free long distance calls." Nancy similarly described it as a "word we're trying to take back" from the media. Even as outwardly the organization shifted to being a maker space the word hacker continued to be a potent way they could mark the difference of their space as compared with other types of shared workshops.

Something similar happened with HackBo following the leak of information about the peace process in Colombia and the media attention to the inappropriate connotations of the term hacker. Even so, the term has been kept, as has the intention of taking it back from the media.

-

Mike, the most involved director, described a conscious move towards maker culture and away from "being like a closed little nerd group that requires a prerequisite of being able to program in C." Software production was frequently used as a point of contrast to the current space. Making captured a notion of productivity and openness that the previous iteration lacked. Mark described the current version of GeekSpace as "more of a makerspace ... there's a lot of physical fabrication happening." The original members, by comparison, were "more software [oriented]... specifically, hardcore infosec [information security]," harkening back to the group's roots in local 2600 meetings and professional occupations.

We have had a similar discussion at HackBo. The practice, however, has been to preserve the name and deal with the ignorance and popular culture surrounding the connotation of hacker. Other spaces, such as La Galería, in Armenia, have aligned themselves from the start with the craft tradition of woodworking in the region and have chosen the more open connotation of maker.

-

Interviews were transcribed and color-coded using Microsoft Word, then categorized using Excel according to the central research questions of this study.

Hypothesis could also be used here. It would be enough for annotations to offer an extra color code for the categories as they emerge. However, just having tags already provides part of this functionality (although a color convention for them is still missing). It could be added using the Hypothesis API.
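As a rough illustration of that idea, the sketch below assigns one color per tag, in order of first appearance, over annotations shaped like the rows returned by the public Hypothesis search endpoint (`https://api.hypothes.is/api/search`). The palette, the function names, and the choice to cycle colors are assumptions made for illustration only. They are not part of Hypothesis, which does not store colors with tags. The `user` value is assumed to follow the `acct:username@hypothes.is` form.

```python
import json
import urllib.parse
import urllib.request
from itertools import cycle

# Hypothetical palette: any list of color names or hex codes would do.
PALETTE = ["#e41a1c", "#377eb8", "#4daf4a", "#984ea3", "#ff7f00"]


def fetch_annotations(user, limit=50):
    """Fetch public annotations for one user from the Hypothesis search endpoint.

    `user` is assumed to look like "acct:username@hypothes.is".
    Requires network access; it is not needed for the coloring step below.
    """
    query = urllib.parse.urlencode({"user": user, "limit": limit})
    url = "https://api.hypothes.is/api/search?" + query
    with urllib.request.urlopen(url) as response:
        return json.load(response)["rows"]


def assign_tag_colors(annotations, palette=PALETTE):
    """Map each tag to a color in order of first appearance.

    Colors repeat once the palette is exhausted.
    """
    colors = {}
    palette_cycle = cycle(palette)
    for annotation in annotations:
        for tag in annotation.get("tags", []):
            if tag not in colors:
                colors[tag] = next(palette_cycle)
    return colors


def group_by_tag(annotations):
    """Group annotation texts under each of their tags (the emerging categories)."""
    groups = {}
    for annotation in annotations:
        for tag in annotation.get("tags", []):
            groups.setdefault(tag, []).append(annotation.get("text", ""))
    return groups
```

With a handful of annotations already loaded, `assign_tag_colors(rows)` gives the color convention and `group_by_tag(rows)` gives the categories as they emerge. Both could then be exported to whatever tool is used for the analysis.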

-

Recruitment was conducted in-person or over email. Interviews were conducted in-person, or if that option was not available, over Skype. The 13 interviews ranged from 25 to 63 minutes in length. No compensation was offered.

This contrasts sharply with Millan's stance on interviewing an activist, for example.

-

Henry Jenkins similarly noted that "do it yourself" is a diffuse notion that can be conflated to an individualistic perspective on creative and technical work, and thus he advocates for moving towards more "collective enterprises within networked publics" (Knobel & Lankshear, 2010, p. 232). Compared with hacking, making is more involved with creating objects within a lineage of craft or art. Rather than hacking's strategic to bring about differences (an outcome), making is more concerned with an ongoing process and the satisfaction that comes from it. These distinctions, however tentative given the fluid nature of the cultures under study, are conceptually useful because they capture ways members discuss how space should be used for informal learning.

I disagree with the distinction drawn between process and product and with the link to craft (although the author acknowledges how tentative this distinction is). At least at HackBo, the idea of software as a craft is an important practice, as are the process and the idea that iterating on it is what leaves some structural changes, which can nevertheless be iterated on permanently.

-

Maker culture has been criticized for simply being a de-politicized version of hacker culture, naively unable to reconcile its own promises of a revolution (Morozov, 2014). While maker culture's connection with socio-economic change and hacker culture at large is debatable, it seems more certain it comes with an attendant set of nested practices and attitudes. Lindtner and Li (2012) describe maker culture as "technological and social practices of creative play, peer production, a commitment to open source principles, and a curiosity about the inner workings of technology" (p. 18). Chris Anderson (2012) claims that the maker movement has three characteristics: the use of digital tools for creating products, cultural norms of collaboration, and design file standards (p. 21). Hughes (2012) notes maker culture's emphasis on being open-source and posited that it "ties together physical manufacturing skills with the higher end technical skills of hardware construction and software programming" (p. 3884).

-

Members of HMSs, driven by hacker and maker culture, infrastructure their own space and populate it with tools, effectively de-virtualizing materialities. Buildings and tools in HMSs are treated very much as code: malleable, changeable, and durable.

-

Compared with the contentious history of hacker culture, the history of making is comparatively unmapped. It can be most accurately described as a new craft movement (Rosner & Ryokai, 2009) that recalls relationships with materials through craft (Sennett, 2008) and hobbies (Gelber, 1999).

-

Jordan stipulates that the commonality of various perspectives on hacking (Himanen, 2001; Wark, 2004) is the hack, or the "ability to create new things, to make alterations, to produce differences" (Jordan, 2008, p. 7). These differences are linked with what Steven Levy (1984) called a "hands-on imperative" (p. 28) and enjoyment from deep concentration. By this line of thinking, the prerogative of hacking is that people should encounter technology not just to gain experience but for the enjoyment of pushing boundaries of what it was meant to do. Taylor (1999) describes the "kick" of hacking as "satisfying the technological urge of curiosity" (p. 17). This transgressing of the internal logic of systems lends a thrill that is difficult to pin down but is understood by those who have experienced it (Csikszentmihalyi, 1997). Tim Jordan's (2008) assertion that "hacking both demands and refutes technological determinism" (p. 133) gestures at a blending of material and social agencies in specific contexts. In other words, hackers see systems as malleable even as they rely on them to accomplish goals. Jordan saw this as paradoxical perhaps because technological determinism tends to be only viewed in the negative (Peters, 2011). Viewing his statements as a reflection on enabling and constraining (Giddens, 1986) engagements with materialities, rather than "determinism" per se, brings us towards a more productive theoretical framework for thinking about the connection of HMSs to informal learning.

Could this settle the question of whether everything is hacking? A materiality that, despite being made in the ordinary, also has to do with challenging limits and fighting technological determinism from within technology seems an adequate approach to the term, without making it totalizing.

-

For example, Leonardi's (2011) theory of imbrication, drawing on Latour, posits that individuals and technologies both have agency. Investigating the interplay between the two requires micro-interactionist investigations of everyday routines. The most prominent example of imbrication in HMSs comes from an influential presentation at the 2007 Chaos Communication Congress (CCC) where Haas, Ohlig & Weiler presented "design patterns" to create a hackerspace. This PowerPoint turned into a widely-circulated PDF describing socio-material "patterns" to bring about changes to routines for better sustainability, independence, regularity and conflict resolution (Haas, Ohlig & Weiler, 2007). Their invoking of design patterns recalls similar efforts in software (Gamma, Helm, Johnson & Vlissides, 1995) and architecture (Alexander, 1979) to combine social and material agencies to bring about a beneficial goal. The presenters were eager to stress that these were not blueprints, only practices that could be reflexively modified as needed. They were no determinists, but were utterly pragmatic in the sense of relying on implementation, failure, and modification to solve problems in a given system.

-

Depending on when you happened to drop by, you might conclude that it was a raucous party spot, infosec operations center, or hat manufacturer. The L0pht did not host a single group or set of activities. Rather, it served multiple purposes for the hacker community. The permanence of HMSs similarly serves as a magnet to attract interested members, enabling and constraining the wide variety of activities that occur therein.

These diverse identities also inhabit HackBo.

-

served as third places (Oldenburg, 1997), or spaces for informal gathering and bonding outside of home and work. Touchstones for hacker and maker space members emerge time and again, such as German models imported to the United States via Noisebridge and Resistor NYC (Haas, Ohlig & Weiler, 2007). However their "true" origins will likely always be subject to debate because, in addition to a lack of documentation, these spaces are both quotidian and encourage a plurality of uses.

-

The public nature of these gatherings was an advantage but also a source of frustration for "elite" members who grumbled about having to deal with "newbies." The publicness of these meetings ensured interplay between pseudo-anonymous bulletin-board systems (BBSs), longstanding members and genuinely interested newcomers.

I remember that some of the first Linux Col meetings had tables separating the "gurus" from the "newbies" and were held in expensive venues, to the annoyance of many. That elitism gradually gave way to more horizontal and friendly relationships.

-

Coleman (2010) asserts that previous literature such as Taylor's "fails to substantially address (and sometimes even barely acknowledge) is the existence and growing importance of face-to-face interactions" (p. 48). For example, Vichot (2009) notes how communities of hackers that coalesce online use "real space" to gain visibility needed to accomplish their collective political goals.

In Colombia we have examples such as SLUD, JSL, FLISoL, and the Data Week.

-

However, hacker and maker spaces are not synonymous with hacker culture at large. As previously discussed, since at least the mid-1990s, hackers have encompassed too wide an array of concerns and histories to safely be referred to as a unified group. Hacker and maker spaces, while a significant movement and informed by a more popular definition of "hacker," hardly define everyone who calls themselves a hacker.

-

Doug Thomas (2002) concluded that "hacker culture, in shifting away from the traditional norms of subculture formation, forces us to rethink the basic relationships between parent culture and subculture" (p. 171). Similarly, such a splintering of meanings draws into question how conveniently a lineage by generations can be identified (Coleman, 2012; Taylor, 2005). Hacker and maker space members draw on the "shared background of cultural references, values, and ideas" (Soderberg, 2013, p. 3) of a more accessible hacker culture that is social, everyday, and lived (Williams, 1995). The culture of HMSs is made visible through interactions as members draw on hacker and maker culture at large as an explanation for what it is that goes on there

This has to do with the idea of the popularization of hacker culture (it reminds me of the notion of the popular as a place where culture is both perpetuated and challenged). A hacker culture informed by earlier traditions, but embodied in particular ways in the contexts where it occurs.

-

This popularization is well captured by Brian Alleyne's (2011) observation that "we are all hackers now," a far cry from the insularity of the late 1980s (Meyer, 1989). The term hacker is freely applied to contexts as diverse as data-driven journalism (Lewis & Usher, 2013), urban exploration (Garrett, 2012), and creative use of IKEA products (Rosner & Bean, 2009). I frame hacking as "popular" to underscore its accessible, immediate, and participatory aspects (Jenkins, 2006), even if it is not popular in the same way as "fan cultures" (Jenkins, McPherson & Shattuc, 2002). Rather than media, the popular turn in hacking is linked to interactions with objects, platforms, and practices that invite participation and thereby increase the scope of who have typically considered themselves hackers in new and unforeseen ways.

However, popularization may cause the notion of hacker to be lost. The idea of a craft practice seems more appropriate in this context.

-

By comparison, HMSs are collective organizations centered around maintaining a specific space. The current study's site of investigation is a single relatively bounded group (GeekSpace). Members are circumscribed by the built environment of the shared workshop and a shared repertoire of online communication tools (website, mailing lists, wikis).

This is part of the shared material and symbolic repertoire of a community of practice.

At the beginning, the thesis set out to investigate and encourage the relationships between communities and their digital and physical habitats, but it later focused more on things like Grafoscopio and on smaller communities.

-

this pragmatic attitude enable collaborations across ideological boundaries, it also facilitates partnerships" (p. 5), echoing William James' (1975) observation that pragmatism serves as a "method for settling metaphysical disputes that might otherwise be interminable" (p. 27). A pragmatic attitude from various iterations of hacker culture similarly permits HMS members to construct "social laboratories or workshops that people join in order to learn and share knowledge" (Hunsinger, 2011, p. 1) even if they differ in ideology or worldview.

-

I retain hacker because hackerspace members draw upon hacker culture, broadly considered, even though the sites that identify as HMSs vary widely.

These terms are self-denotative. They are a way in which members of the community refer to themselves.

-