"Forget about computational models -- let's see the physical world itself as a sequence of unchanging states. Let's think about and work with the physical world as a frozen sequence of states. Let's decouple time from reality, and be able to freely pan across time and abstract over time in the physical world."

- Sep 2025

-

dynamicland.org

-

- Feb 2025

-

archive.org

-

Hurd, Cuthbert C., ed. Proceedings: IBM Computation Seminar December 1949. New York: International Business Machines Corporation, 1951. http://archive.org/details/bitsavers_ibmproceedeminarDec49_14295048.

In a variety of contexts here, the idea of "cards" could be held to be synonymous with "notes".

Collision cards (though used in a physics setting) make for a slightly hilarious pairing with the ideas of "atomic notes" and "combinatorial creativity".

-

- Oct 2024

-

-

Baez, John C., and Mike Stay. “Physics, Topology, Logic and Computation: A Rosetta Stone.” Quantum Physics; Category Theory. arXiv:0903.0340 [Quant-Ph] 813 (March 2, 2009): 95–172. https://doi.org/10.1007/978-3-642-12821-9_2.

-

- Sep 2024

-

writings.stephenwolfram.com

-

A major theme of my work since the early 1980s had been exploring the consequences of simple computational rules. And I had found the surprising result that even extremely simple rules could lead to immensely complex behavior. So what about the universe? Could it be that at a fundamental level our whole universe is just following some simple computational rule?
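The claim that very simple rules can produce complex behaviour can be illustrated with an elementary cellular automaton. What follows is only a minimal sketch: the choice of Rule 30, the wraparound edges, the grid width, and the function names `step` and `run` are assumptions made for illustration, not something taken from the quoted text.

```python
def step(cells, rule=30):
    """Apply one update of an elementary cellular automaton.

    Each new cell depends only on its left neighbour, itself, and its
    right neighbour. Edges wrap around (an assumption of this sketch).
    """
    n = len(cells)
    out = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a 3-bit number
        out.append((rule >> index) & 1)              # look up that bit of the rule number
    return out


def run(width=31, steps=15, rule=30):
    """Start from a single live cell and return every generation as a row."""
    cells = [0] * width
    cells[width // 2] = 1
    rows = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        rows.append(cells)
    return rows


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

The entire rule fits in the single integer `rule`; everything else is bookkeeping.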

-

- Jun 2024

-

coevolving.com

-

how such a system is born, itself, of a generative system, establishing the duality between the object as a computing agent and the method as a computational process.

-

computation of such interrelational, complex behaviour-based systems

-

-

www.belfercenter.org

-

Beyond simply dividing time, computation has enabled the division of information.

-

- May 2024

-

shkspr.mobi

-

Terence Eden (posted #2023/05/28) on the question of whether an app provider gets a say in whether their code runs on your device, as opposed to me being in control of my device and deciding which code runs on it. The case here is a bank that disallows its app on a rooted phone because of the risk profile attached to that. Interesting tension: my own risk assessment and control over a general-purpose computing device versus a service provider for whom the software is a conduit, with its own risk assessment. I suspect that, underneath, such tensions need a conversation or negotiation to resolve, but in practice it is a dictate by one party based on a power differential (the bank controls your money, so it can set demands for your device, because you need to keep access to your account).

-

- Apr 2024

-

arxiv.org

-

A computation of TS is a sequence of transitions s −→ s′ −→ · · · ,
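As a rough illustration of this definition, a computation can be generated by repeatedly following the transition relation from a start state. The sketch below assumes a deterministic system stored as a dictionary; the state names and the function `computation` are hypothetical and do not come from the paper.

```python
from itertools import islice


def computation(transitions, start):
    """Yield the states s, s', ... reached by following `transitions`.

    Assumes a deterministic transition system: each state maps to at most
    one successor. The sequence ends when a state has no successor.
    """
    state = start
    while state is not None:
        yield state
        state = transitions.get(state)


# Hypothetical three-state system: idle -> running -> done
TRANSITIONS = {"idle": "running", "running": "done"}
trace = list(computation(TRANSITIONS, "idle"))

# A cyclic system gives an infinite computation, so only a prefix is taken.
LOOP = {"a": "b", "b": "a"}
prefix = list(islice(computation(LOOP, "a"), 4))
```

Using a generator keeps finite and infinite computations under the same interface.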

-

- Nov 2021

-

www.reddit.com

-

Sure, you could just use thousands of cubes, but you'd probably run into speed problems. And storing them individually requires a lot of redundant information.

-

- Jun 2021

- Mar 2021

-

academic.oup.com

-

Blakely, Tony, John Lynch, Koen Simons, Rebecca Bentley, and Sherri Rose. ‘Reflection on Modern Methods: When Worlds Collide—Prediction, Machine Learning and Causal Inference’. International Journal of Epidemiology 49, no. 6 (1 December 2020): 2058–64. https://doi.org/10.1093/ije/dyz132.

-

- Feb 2021

-

en.wikipedia.org

-

Monads achieve this by providing their own data type (a particular type for each type of monad), which represents a specific form of computation
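To make "their own data type, which represents a specific form of computation" concrete, here is a minimal sketch of a Maybe-style type representing computations that may fail. It is written in Python rather than a language with built-in monad support, and the names `Maybe`, `bind`, `safe_div`, and `safe_sqrt` are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

T = TypeVar("T")
U = TypeVar("U")


@dataclass(frozen=True)
class Maybe(Generic[T]):
    """A value that may be absent: the 'may fail' form of computation."""
    value: Optional[T] = None
    present: bool = False

    @staticmethod
    def just(value: T) -> "Maybe[T]":
        return Maybe(value, True)

    @staticmethod
    def nothing() -> "Maybe[T]":
        return Maybe()

    def bind(self, fn: Callable[[T], "Maybe[U]"]) -> "Maybe[U]":
        # Run the next step only if the previous one produced a value.
        return fn(self.value) if self.present else self


def safe_div(x: float, y: float) -> Maybe[float]:
    return Maybe.nothing() if y == 0 else Maybe.just(x / y)


def safe_sqrt(x: float) -> Maybe[float]:
    return Maybe.nothing() if x < 0 else Maybe.just(x ** 0.5)


ok = Maybe.just(32.0).bind(lambda x: safe_div(x, 2.0)).bind(safe_sqrt)
failed = Maybe.just(32.0).bind(lambda x: safe_div(x, 0.0)).bind(safe_sqrt)
```

The failure check lives in `bind`, so the individual steps never handle a missing value themselves.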

-

-

en.wikipedia.org

-

purely functional programming usually designates a programming paradigm—a style of building the structure and elements of computer programs—that treats all computation as the evaluation of mathematical functions.

-

-

-

Centre for Cognition, Computation, & Modelling on Twitter. (n.d.). Twitter. Retrieved 20 February 2021, from https://twitter.com/BBK_CCCM/status/1359132159953559557

-

-

en.wikipedia.org

- Jan 2021

-

-

Help is coming in the form of specialized AI processors that can execute computations more efficiently and optimization techniques, such as model compression and cross-compilation, that reduce the number of computations needed. But it’s not clear what the shape of the efficiency curve will look like. In many problem domains, exponentially more processing and data are needed to get incrementally more accuracy. This means – as we’ve noted before – that model complexity is growing at an incredible rate, and it’s unlikely processors will be able to keep up. Moore’s Law is not enough. (For example, the compute resources required to train state-of-the-art AI models has grown over 300,000x since 2012, while the transistor count of NVIDIA GPUs has grown only ~4x!) Distributed computing is a compelling solution to this problem, but it primarily addresses speed – not cost.

-

- Dec 2020

-

-

Perkel, J. M. (2020). Challenge to scientists: does your ten-year-old code still run? Nature. Retrieved from: https://www.nature.com/articles/d41586-020-02462-7?utm_source=twt_nnc&utm_medium=social&utm_campaign=naturenews&sf237106326=1

-

- Nov 2020

-

www.npmjs.com

-

Note that when using sass (Dart Sass), synchronous compilation is twice as fast as asynchronous compilation by default, due to the overhead of asynchronous callbacks.

If you consider using asynchronous to be an optimization, then this could be surprising.

-

- Oct 2020

-

github.com

-

The reason why we don't just create a real DOM tree is that creating DOM nodes and reading the node properties is an expensive operation which is what we are trying to avoid.

-

-

github.com

-

Parsing HTML has significant overhead. Being able to parse HTML statically, ahead of time can speed up rendering to be about twice as fast.

-

-

medium.com

-

But is overhead always bad? I believe no — otherwise Svelte maintainers would have to write their compiler in Rust or C, because garbage collector is a single biggest overhead of JavaScript.

-

- Sep 2020

-

github.com

-

Forwarding events from the native element through the wrapper element comes with a cost, so to avoid adding extra event handlers only a few are forwarded. For all elements except <br> and <hr>, on:focus, on:blur, on:keypress, and on:click are forwarded. For audio and video, on:pause and on:play are also forwarded.

-

-

www.scientificamerican.com

-

Hotz, J. (n.d.). Can an Algorithm Help Solve Political Paralysis? Scientific American. Retrieved September 21, 2020, from https://www.scientificamerican.com/article/can-an-algorithm-help-solve-political-paralysis/

-

- Aug 2020

-

journals.plos.org

-

Aiken, E. L., McGough, S. F., Majumder, M. S., Wachtel, G., Nguyen, A. T., Viboud, C., & Santillana, M. (2020). Real-time estimation of disease activity in emerging outbreaks using internet search information. PLOS Computational Biology, 16(8), e1008117. https://doi.org/10.1371/journal.pcbi.1008117

-

-

-

Young, J.-G., Cantwell, G. T., & Newman, M. E. J. (2020). Robust Bayesian inference of network structure from unreliable data. ArXiv:2008.03334 [Physics, Stat]. http://arxiv.org/abs/2008.03334

-

- Jun 2020

-

www.nature.com

-

Ledford, H. (2020). How Facebook, Twitter and other data troves are revolutionizing social science. Nature, 582(7812), 328–330. https://doi.org/10.1038/d41586-020-01747-1

-

-

arxiv.org

- May 2020

-

-

Lobato, E. J. C., Powell, M., Padilla, L., & Holbrook, C. (2020). Factors Predicting Willingness to Share COVID-19 Misinformation. https://doi.org/10.31234/osf.io/r4p5z

-

-

Local file

-

Australian Reproducibility Network materials. (2020). https://doi.org/None

-

-

www.sciencedirect.com

-

Hart, O. E., & Halden, R. U. (2020). Computational analysis of SARS-CoV-2/COVID-19 surveillance by wastewater-based epidemiology locally and globally: Feasibility, economy, opportunities and challenges. Science of The Total Environment, 730, 138875. https://doi.org/10.1016/j.scitotenv.2020.138875

-

- Apr 2020

-

security.googleblog.com

-

Our approach strikes a balance between privacy, computation overhead, and network latency. While single-party private information retrieval (PIR) and 1-out-of-N oblivious transfer solve some of our requirements, the communication overhead involved for a database of over 4 billion records is presently intractable. Alternatively, k-party PIR and hardware enclaves present efficient alternatives, but they require user trust in schemes that are not widely deployed yet in practice. For k-party PIR, there is a risk of collusion; for enclaves, there is a risk of hardware vulnerabilities and side-channels.

-

-

-

Wojcik, S., et al. (2020 March 30). Survey data and human computation for improved flu tracking. Cornell University. arXiv:2003.13822

-

-

-

Ting, C., Palminteri, S., Lebreton, M., & Engelmann, J. B. (2020, March 25). The elusive effects of incidental anxiety on reinforcement-learning. https://doi.org/10.31234/osf.io/7d4tc

-

-

www.cmu.edu

-

Fischhoff, B., de Bruin, W. B., Güvenç, Ü., Caruso, D., & Brilliant, L. (2006). Analyzing disaster risks and plans: An avian flu example. Journal of Risk and Uncertainty, 33(1–2), 131–149. https://doi.org/10.1007/s11166-006-0175-8

-

- Mar 2020

-

-

10,000 CPU cores

10,000 CPU cores for 2 weeks. Question:

- Where can we find 10,000 CPU cores in China? AWS? Ali? Tencent?

-

- Oct 2019

-

en.wikipedia.org

-

The world can be resolved into digital bits, with each bit made of smaller bits. These bits form a fractal pattern in fact-space. The pattern behaves like a cellular automaton. The pattern is inconceivably large in size and dimensions. Although the world started simply, its computation is irreducibly complex.

-

-

-

categorical formalism should provide a much needed high level language for theory of computation, flexible enough to allow abstracting away the low level implementation details when they are irrelevant, or taking them into account when they are genuinely needed. A salient feature of the approach through monoidal categories is the formal graphical language of string diagrams, which supports visual reasoning about programs and computations. In the present paper, we provide a coalgebraic characterization of monoidal computer. It turns out that the availability of interpreters and specializers, that make a monoidal category into a monoidal computer, is equivalent with the existence of a *universal state space*, that carries a weakly final state machine for any pair of input and output types. Being able to program state machines in monoidal computers allows us to represent Turing machines, to capture their execution, count their steps, as well as, e.g., the memory cells that they use. The coalgebraic view of monoidal computer thus provides a convenient diagrammatic language for studying computability and complexity.

monoidal (category -> computer)

-

- Jun 2019

-

tele.informatik.uni-freiburg.de

-

Currently, when we say fractal computation, we are simulating fractals using binary operations. What if "binary" emerges on fractals? Can we find a new computation realm that can simulate binary? Can "fractal computing" be a lower level approach to our current binary understanding of computation?

-

- May 2019

-

fs.blog

-

There’s a bug in the evolutionary code that makes up our brains.

Saying it's a "bug" implies that it's bad. But something this significant likely improved our evolutionary fitness in the past. This "bug" is more of a previously useful adaptation. Whether it's still useful is another question, but it might be.

-

- Aug 2018

-

wendynorris.com

-

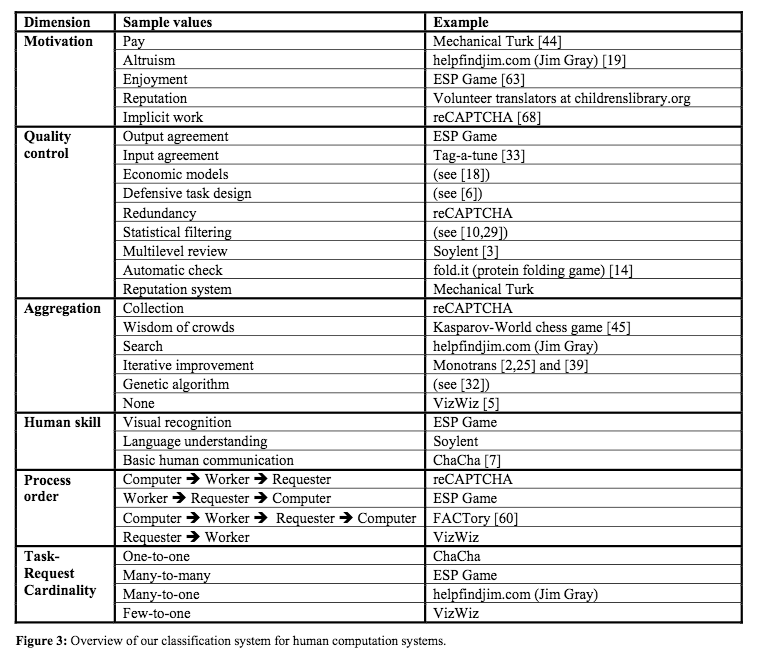

Another way to use a classification system is to consider if there are other possible values that could be used for a given dimension.

Future direction: Identify additional sample values and examples in the literature or in situ to expand the options within each dimension.

-

For researchers looking for new avenues within human computation, a starting point would be to pick two dimensions and list all possible combinations of values.

Future direction: Apply two different human computation dimensions to imagine a new approach.
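The "pick two dimensions and list all possible combinations" step amounts to a Cartesian product. The sketch below assumes Python's `itertools.product`; the sample values under each dimension are placeholders invented for illustration, not the values given in the paper, and `combinations_of` is a hypothetical helper.

```python
from itertools import product

# Dimension names follow the annotation; the values are illustrative placeholders.
DIMENSIONS = {
    "motivation": ["pay", "enjoyment", "altruism"],
    "aggregation": ["collection", "statistical processing"],
}


def combinations_of(dim_a, dim_b, dimensions=DIMENSIONS):
    """Return every (value_a, value_b) pairing across two chosen dimensions."""
    return list(product(dimensions[dim_a], dimensions[dim_b]))


pairs = combinations_of("motivation", "aggregation")
for motivation, aggregation in pairs:
    print(f"motivation={motivation!r}, aggregation={aggregation!r}")
```

Each printed pair is a candidate design to check against the literature for existing or missing examples.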

-

These properties formed three of our dimensions: motivation, human skill, and aggregation.

These dimensions were inductively revealed through a search of the human computation literature.

They contrast with properties that cut across human computational systems: quality control, process order and task-request cardinality.

-

A subtle distinction among human computation systems is the order in which these three roles are performed. We consider the computer to be active only when it is playing an active role in solving the problem, as opposed to simply aggregating results or acting as an information channel. Many permutations are possible.

3 roles in human computation — requester, worker and computer — can be ordered in 4 different ways:

C > W > R // W > R > C // C > W > R > C // R > W

-

The classification system we are presenting is based on six of the most salient distinguishing factors. These are summarized in Figure 3.

Classification dimensions: Motivation, Quality control, Aggregation, Human skill, Process order, Task-Request Cardinality

-

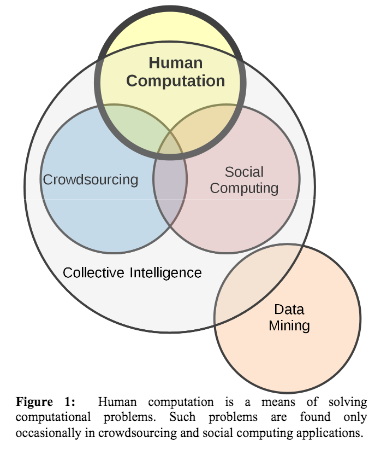

"... groups of individuals doing things collectively that seem intelligent.” [41]

Collective intelligence definition.

Per the authors, "collective intelligence is a superset of social computing and crowdsourcing, because both are defined in terms of social behavior."

Collective intelligence is differentiated from human computation because the latter doesn't require a group.

It is differentiated from crowdsourcing because it doesn't require a public crowd and it can happen without an open call.

-

Data mining can be defined broadly as: “the application of specific algorithms for extracting patterns from data.” [17]

Data mining definition

No human is involved in the extraction of data via a computer.

-

“... applications and services that facilitate collective action and social interaction online with rich exchange of multimedia information and evolution of aggregate knowledge...” [48]

Social computing definition

Humans perform a social role while communication is mediated by technology. The interaction between human social role and CMC is key here.

-

The intersection of crowdsourcing with human computation in Figure 1 represents applications that could reasonably be considered as replacements for either traditional human roles or computer roles.

Authors provide example of language translation which could be performed by a machine (when speed and cost matter) or via crowdsourcing (when quality matters)

-

“Crowdsourcing is the act of taking a job traditionally performed by a designated agent (usually an employee) and outsourcing it to an undefined, generally large group of people in the form of an open call.” [24]

Crowdsourcing definition

Labor process of worker replaced by public.

-

modern usage was inspired by von Ahn’s 2005 dissertation titled "Human Computation" [64] and the work leading to it. That thesis defines the term as: “...a paradigm for utilizing human processing power to solve problems that computers cannot yet solve.”

Human computation definition.

Problem solving by human reasoning and not a computer.

-

When classifying an artifact, we consider not what it aspires to be, but what it is in its present state.

Criterion for determining when/if the artifact is a product of human computation.

-

human computation does not encompass online discussions or creative projects where the initiative and flow of activity are directed primarily by the participants’ inspiration, as opposed to a predetermined plan designed to solve a computational problem.

What human computation is not.

The authors cite Wikipedia as an example of what is not human computation.

"Wikipedia was designed not to fill the place of a machine but as a collaborative writing project in place of the professional encyclopedia authors of yore."

-

Human computation is related to, but not synonymous with terms such as collective intelligence, crowdsourcing, and social computing, though all are important to understanding the landscape in which human computation is situated.

-

-

wendynorris.com

-

In human computation, people act as computational components and perform the work that AI systems lack the skills to complete

Human computation definition.

-

- Jun 2018

-

www.informatics.indiana.edu

-

So far, we have dealt with self-reference, but the situation is quite similar with the notion of self-modification. Partial self-modification is easy to achieve; the complete form goes beyond ordinary mathematics and anything we can formulate. Consider, for instance, recursive programs. Every recursive program can be said to modify itself in some sense, since (by the definition of recursiveness) the exact operation carried out at time t depends on the result of the operation at t-1, and so on: therefore, the final "shape" of the transformation is getting defined iteratively, in runtime (a fact somewhat obscured by the usual way in which recursion is written down in high-level programming languages like C). At the same time, as we can expect, to every finite recursive program there belongs an equivalent "straight" program, that uses no recursion at all, and is perfectly well defined in advance, so that it does not change in any respect; it is simply a fixed sequence of a priori given elementary operations.

So unbounded recursion automatically implies a form of self-reference and self-modification?
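The quoted contrast between a finite recursive program and its equivalent "straight" program can be sketched with a small example. Factorial and the fixed input 4 are assumptions chosen for illustration; the passage itself names no particular function.

```python
def factorial_recursive(n):
    """Recursive form: the chain of multiplications is determined at run time."""
    return 1 if n == 0 else n * factorial_recursive(n - 1)


def factorial_of_4_straight():
    """Straight-line form for the fixed input 4.

    No recursion and no loop: a fixed sequence of elementary operations
    that is fully determined before the program runs.
    """
    acc = 1
    acc = acc * 1
    acc = acc * 2
    acc = acc * 3
    acc = acc * 4
    return acc


recursive_result = factorial_recursive(4)
straight_result = factorial_of_4_straight()
```

The unrolling works only because the input is fixed in advance. With unbounded recursion no single straight-line program covers every input, which is the case the annotator's question is about.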

-

- Aug 2016

-

slatestarcodex.com

-

That was in 1960. If computing power doubles every two years, we’ve undergone about 25 doubling times since then, suggesting that we ought to be able to perform Glushkov’s calculations in three years – or three days, if we give him a lab of three hundred sixty five computers to work with.

The last part of this sentence seems ignorant of Amdahl's Law.
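A rough sketch of the objection: Amdahl's Law bounds the speedup from N machines by 1 / ((1 - p) + p / N), where p is the fraction of the work that can run in parallel. Going from three years to three days on 365 computers implicitly assumes p = 1. The values of p below are hypothetical, since the quoted passage gives no estimate of how parallelisable the calculation is.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Upper bound on speedup when only part of a job can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


SERIAL_DAYS = 3 * 365  # "three years" on one machine, ignoring leap days
WORKERS = 365

for p in (1.0, 0.99, 0.95):  # hypothetical parallel fractions
    speedup = amdahl_speedup(p, WORKERS)
    print(f"p={p:.2f}: speedup {speedup:.1f}x, about {SERIAL_DAYS / speedup:.1f} days")
```

Only the p = 1.0 case reproduces the three-day figure; any serial portion caps the speedup well below the number of machines.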

-

- Dec 2015

-

storm.apache.org

-

Why use Storm? Apache Storm is a free and open source distributed realtime computation system. Storm makes it easy to reliably process unbounded streams of data, doing for realtime processing what Hadoop did for batch processing. Storm is simple, can be used with any programming language, and is a lot of fun to use! Storm has many use cases: realtime analytics, online machine learning, continuous computation, distributed RPC, ETL, and more. Storm is fast: a benchmark clocked it at over a million tuples processed per second per node. It is scalable, fault-tolerant, guarantees your data will be processed, and is easy to set up and operate. Storm integrates with the queueing and database technologies you already use. A Storm topology consumes streams of data and processes those streams in arbitrarily complex ways, repartitioning the streams between each stage of the computation however needed. Read more in the tutorial.

stream computation

-