Global Supply Chain Analytics Market forecast to 2035

The global Supply Chain Analytics Market study report provides an exclusive analysis of the current market size and future growth opportunities. In this latest report, our researchers offer business strategists, investors, industry leaders, and stakeholders valuable insight into market dynamics over the forecast period 2024-2035. The report highlights the current market value, emerging trends, and the projected growth rate for the coming years. According to our analytical research on the Supply Chain Analytics industry, the market is projected to reach a valuation of $47.96 billion by 2035. To learn more about the report, visit https://www.rootsanalysis.com/supply-chain-analytics-market

- Mar 2025

- May 2024

www.theguardian.com

Research suggests that COP28 could coincide with the peak in greenhouse gas emissions. To reach the 1.5°C target, however, emissions would have to fall by half by 2030. https://www.theguardian.com/environment/ng-interactive/2023/nov/29/cop28-what-could-climate-conference-achieve

Tags

- Council on Energy, Environment and Water

- OECD

- COP28 global methane summit

- Global Centre on Adaptation

- BNEF

- Joeri Rogelj

- Lauri Myllyvirta

- Durwood Zaelke

- NOCs

- climate finance

- Jenny Chase

- Eamon Ryan

- Greenpeace

- Climate Analytics

- Christiana Figueres

- Shady Khalil

- Centre for Research on Energy and Clean Air

- Arunabha Ghosh

- Romain Ioualalen

- Paul Bledsoe

- Jeanne d’Arc Mujawamariya

- Macky Small

- Patrick Verkooijen

- Mia Mottley

- Nicholas Stern

- COP28

- Saudi-Arabia

- Mariana Mazzucato

- Vera Singer

- coal phase-out

- Global Optimism

- Harjeet Singh

- actor: Sultan Al Jaber

- Institute for Governance and Sustainable Development

- fossil fuels phase-out

- Avinash Persaud

- Climate Action Network International

- China

- Simon Stiell

- 2023-11-29

www.theguardian.com

The plans of coal-, oil-, and gas-producing states to expand extraction would, in 2030, lead to 460% more coal, 83% more gas, and 29% more oil production than is compatible with the Paris Agreement. The latest UN Production Gap Report focuses on the 20 biggest polluter states, whose plans almost without exception stand in radical contradiction to the Paris Agreement. https://www.theguardian.com/environment/2023/nov/08/insanity-petrostates-planning-huge-expansion-of-fossil-fuels-says-un-report

Report: https://productiongap.org/

Tags

- country: Norway

- expert: Ploy Achakulwisut

- country: India

- country: Nigeria

- country: China

- institution: Stockholm Environment Institute (SEI)

- expert: Michael Lazarus

- 2023-11-08

- country: Saudi Arabia

- country: UAE

- country: Kuwait

- country: Qatar

- country: Australia

- expert: Neil Grant

- country: Canada

- actor: Inger Andersen

- report: Production Gap Report 2023

- fossil expansion

- country: Russia

- country: Brazil

- country: Germany

- country: Uk

- institution: Climate Analytics

- country: Colombia

- expert: Romain Ioualalen

- country: Indonesia

- institution: UNEP

- country: USA

- institution: Oil Change International

- Feb 2024

www.scirp.org

ergi

Highlight and annotate at least 2 areas for each question. The annotations should be 1-2 sentences explaining the following: A. New learning B. Familiar with this C. Use this in practice

- Dec 2023

www.theguardian.com

China and India are chiefly responsible for the increase. Emissions fell by 7.4% in Europe and by 3% in the USA. Aviation emissions, up almost 12%, played a major role. https://www.theguardian.com/environment/2023/dec/05/global-carbon-emissions-fossil-fuels-record

- Sep 2023

“Given the high unemployment rate in South Africa as well … you cannot sell it as a climate change intervention,” says Deborah Ramalope, head of climate policy analysis at the policy institute Climate Analytics in Berlin. “You really need to sell it as a socioeconomic intervention.”

- for: quote, quote - climate change intervention, Trojan horse, Deborah Ramalope

- quote

- Given the high unemployment rate in South Africa as well … you cannot sell it as a climate change intervention, you really need to sell it as a socioeconomic intervention.

- author: Deborah Ramalope

- date: Aug. 15, 2023

- source: https://www.wired.co.uk/article/just-energy-transition-partnerships-south-africa-cop

- comment

- A Trojan horse strategy

- Jul 2023

tokenterminal.com

Fundamentals for crypto

Token Terminal is a platform that aggregates financial data on the leading blockchains and decentralized applications.

- Jun 2023

www.optimizesmart.com

Debug mode allows you to see only the data generated by your device while validating analytics and also solves the purpose of having separate data streams for staging and production (no more separate data streams for staging and production).

Good to know.

This seems to contradict their advice at https://www.optimizesmart.com/using-the-ga4-test-property/ to create a test property.

developers.google.com

Will not read or write first-party [analytics cookies]. Cookieless pings will be sent to Google Analytics for basic measurement and modeling purposes.

- Feb 2023

journals.publishing.umich.edu

student outcomes, including learning, persistence, or attitudes.

This would seem to be one of the easiest things to measure, and it would also provide significant, useful data. We should check with Brian (?) to see what data is currently being tracked.

www.complexityexplorer.org

docdrop.org

DeDeo, Simon, and Elizabeth A. Hobson. “From Equality to Hierarchy.” Proceedings of the National Academy of Sciences 118, no. 21 (May 25, 2021): e2106186118. https://doi.org/10.1073/pnas.2106186118.

- Jan 2023

www.complexityexplorer.org

3.1 Guest Lecture: Lauren Klein » Q&A on "What is Feminist Data Science?"
https://www.complexityexplorer.org/courses/162-foundations-applications-of-humanities-analytics/segments/15631

https://www.youtube.com/watch?v=c7HmG5b87B8

Theories of Power

Patricia Hill Collins' matrix of domination: no hierarchy, hence the matrix format

What are other broad theories of power? Are there schools?

Relationship to Mary Parker Follett's work?

Bright, Liam Kofi, Daniel Malinsky, and Morgan Thompson. “Causally Interpreting Intersectionality Theory.” Philosophy of Science 83, no. 1 (January 2016): 60–81. https://doi.org/10.1086/684173.

about Bayesian modeling for intersectionality

Where is Foucault in all this? Klein may have references; I don't have the context.

How do words index action? -Lauren Klein

The power to shape discourse and choose words - relationship to soft power - linguistic memes

Color Conventions Project

20:15 Word embeddings as a method within her research

General result discussed (outside the immediate research): women are more likely to change language. References for this?

[[academic research skills]]: It's important to be aware of the current discussions within one's field. (LK)

36:36 Quantitative imperialism is not the goal of humanities analytics; lived experiences are incredibly important as well. (DK)

www.complexityexplorer.org

https://www.youtube.com/watch?v=vZklLt80wqg

Looking at three broad ideas, with examples of each to follow:

- signals

- patterns

- pattern making, pattern breaking

Proceedings of the Old Bailey, 1674-1913

Jane Kent for witchcraft

250 years with ~200,000 trial transcripts

Can be viewed as:

- storytelling

- history

- an information process of signals

All the best trials include the words "Covent Garden".

Example: 1163. Emma Smith and Corfe indictment for stealing.

19:45 Norbert Elias. The Civilizing Process. (book)

Prozhito: large-scale archive of Russian (and Soviet) diaries; 1900s - 2000s

How do people understand the act of diary-writing?

Diaries are:

Leo Tolstoy

a convenient way to evaluate the self

Franz Kafka

a means to see, with reassuring clarity [...] the changes which you constantly suffer.

Virginia Woolf

a kindly blankfaced old confidante

Diary entries fall into five categories:

- spirit

- routine

- literary

- material form (talking about the diary itself)

- interpersonal (people sharing diaries)

Are there specific periods in which these emerge, or how do they fluctuate? How would they differ across cultures and change over time?

The patterns of talking about diaries in this study are relatively stable over the century.

pre-print available of DeDeo's work here

Pattern making, pattern breaking

Individuals, institutions, and innovation in the debates of the French Revolution

- transcripts of debates in the Constituent Assembly

The idea of revolution through tedium and boredom is fascinating.

Speeches broken into combinations of patterns using topic modeling

(what would this look like on commonplace book and zettelkasten corpora?)

Emergent patterns from one speech to the next (information theory); the question of novelty: high versus low novelty as predictors of leaders and followers

Robespierre bringing in novel ideas

How do you differentiate Robespierre from a Muppet (like Animal)? What is the level of following after novelty?

Four parts (2x2 grid):

- high novelty, high imitation (novelty with ideas that stick)

- high novelty, low imitation (new ideas ignored)

- low novelty, high imitation

- low novelty, low imitation (discussion killers)
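The 2x2 grid can be made concrete with information-theoretic measures. As we read the published study behind this talk, each speech is represented as a topic distribution; novelty is the average Kullback-Leibler (KL) divergence of a speech from the w speeches before it, transience is the same average against the w speeches after it, and resonance is novelty minus transience, so new ideas that stick score high. This is a minimal sketch under those assumptions: the function names, the toy two-topic data, and the use of bits (log base 2) are our choices, not the study's code.

```python
import math
from typing import List, Sequence

Distribution = Sequence[float]  # topic proportions for one speech, summing to 1


def kl_divergence(p: Distribution, q: Distribution) -> float:
    """KL(p || q) in bits; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def novelty(speeches: List[Distribution], j: int, w: int) -> float:
    """Average divergence of speech j from the w speeches before it."""
    return sum(kl_divergence(speeches[j], speeches[j - d]) for d in range(1, w + 1)) / w


def transience(speeches: List[Distribution], j: int, w: int) -> float:
    """Average divergence of speech j from the w speeches after it."""
    return sum(kl_divergence(speeches[j], speeches[j + d]) for d in range(1, w + 1)) / w


def resonance(speeches: List[Distribution], j: int, w: int) -> float:
    """High novelty that persists (low transience) gives high resonance."""
    return novelty(speeches, j, w) - transience(speeches, j, w)


# Toy two-topic record: speech 2 introduces a new emphasis that later speakers repeat.
speeches = [[0.9, 0.1], [0.9, 0.1], [0.2, 0.8], [0.2, 0.8], [0.2, 0.8]]
print(round(novelty(speeches, 2, 2), 3), transience(speeches, 2, 2))
```

Here speech 2 is novel and its emphasis persists, so it would land in the "high novelty, high imitation" cell. The index j must leave w speeches on each side, and a real corpus would need smoothed topic distributions so no probability is exactly zero.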

Could one analyze television scripts over time to tell good from bad, or to predict when a show will "jump the shark"?

www.complexityexplorer.org

https://www.youtube.com/watch?v=5pSGniUOyLc

Digital humanities aka Humanities Analytics

5:54 Simon DeDeo mentioned that the philosopher Alastair McKinnon did a stylometric study of Kierkegaard's pseudonyms in the 1960s: Kierkegaard's Pseudonyms: A New Hierarchy by Alastair McKinnon https://www.jstor.org/stable/20009297

Tools for supplementing research and scholarship

core audience is Ph.D. students

www.complexityexplorer.org

https://www.youtube.com/watch?v=7RV99eO_oZU

Foundations & Applications of Humanities Analytics

1.3 About the course

- history of space, genealogy

- science / tools for learning

- examples via guest lecturers

Simon and David indicate that they are not "two cultures" people.

"You can get really far by counting." -Simon DeDeo

Digital humanities is another method of storytelling.

- Jul 2022

climateactiontracker.org

Summary study on the effects of the Ukraine war on gas production and consumption

- May 2022

facilitate access to data from the MES systems, particularly with regard to the success of targeted groups of students (for example, students with disabilities, Indigenous students, students from immigrant backgrounds, first-generation students, international students)

- Apr 2022

twitter.com

Adam Kucharski. (2021, February 6). COVID outlasts another dashboard... https://t.co/S9kLCva3WQ Illustrates the importance of incentivising sustainable outbreak analytics—If a tool is useful, people will come to rely on it, which creates a dilemma if it can’t be maintained. [Tweet]. @AdamJKucharski. https://twitter.com/AdamJKucharski/status/1357970753199763457

- Feb 2022

Contemporary digital learning technologies generate, store, and share terabytes of learner data, which must flow seamlessly and securely across systems. To enable interoperability and ensure systems can perform at scale, the ADL Initiative is developing the Data and Training Analytics Simulated Input Modeler (DATASIM), a tool for producing simulated learner data that can mimic millions of diverse user interactions.

(Image: DATASIM application screen capture.)

DATASIM is an open-source platform for generating realistic Experience Application Programming Interface (xAPI) data at very large scale. The xAPI statements model realistic behaviors for a cohort of simulated learners/users, producing tailorable streams of data that can be used to benchmark and stress-test systems. DATASIM requires no specialized hardware, and it includes a user-friendly graphical interface that allows precise control over the simulation parameters and learner attributes.
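DATASIM itself is configured through its own interface and xAPI profiles, and the sketch below does not use it. It only illustrates the underlying idea: seeded, reproducible synthetic xAPI-style statements for a simulated cohort, usable for stress tests. The learner attribute `diligence`, the `example.com` identifiers, and the choice of the ADL verbs "experienced" and "completed" are illustrative assumptions.

```python
import random
import uuid
from datetime import datetime, timedelta, timezone

# ADL verbs used for illustration; a real simulation would follow an xAPI profile.
VERBS = {
    "experienced": "http://adlnet.gov/expapi/verbs/experienced",
    "completed": "http://adlnet.gov/expapi/verbs/completed",
}


def simulate_cohort(num_learners: int, num_activities: int, seed: int = 0) -> list:
    """Generate reproducible synthetic statements for a simulated cohort."""
    rng = random.Random(seed)  # same seed -> same data, useful for benchmarks
    start = datetime(2022, 1, 1, tzinfo=timezone.utc)
    statements = []
    for i in range(num_learners):
        diligence = rng.random()  # hypothetical learner attribute in [0, 1)
        clock = start
        for k in range(num_activities):
            clock += timedelta(minutes=rng.randint(5, 60))
            verb = "completed" if rng.random() < diligence else "experienced"
            statements.append({
                "id": str(uuid.UUID(int=rng.getrandbits(128), version=4)),
                "actor": {"objectType": "Agent", "mbox": f"mailto:learner{i}@example.com"},
                "verb": {"id": VERBS[verb], "display": {"en-US": verb}},
                "object": {"objectType": "Activity",
                           "id": f"https://example.com/activities/module-{k}"},
                "timestamp": clock.isoformat(),
            })
    return statements


cohort = simulate_cohort(num_learners=3, num_activities=4, seed=42)
print(len(cohort), cohort[0]["verb"]["display"]["en-US"])
```

Deriving statement IDs from the seeded generator (rather than `uuid.uuid4()`) keeps whole runs identical, so two systems can be benchmarked against exactly the same stream.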

www.sciencedirect.com

learning analytics

profiles.usalearning.net

xAPI profiles make learning design, development, and analytics better.

torrancelearning.com

The video profile of the xAPI was created to identify and standardize the common types of interactions that can be tracked in any video player.

- Jan 2022

adlnet-archive.github.io

xAPI Wrapper Tutorial

Introduction

This tutorial will demonstrate how to integrate xAPI Wrapper with existing content to capture and dispatch learning records to an LRS.

Roll your own JSON rather than using a service like xapi.ly.

Storyline 360 xAPI Updates (Winter 2021)

Exciting xAPI update for Storyline users! Articulate has updated Storyline 360 to support custom xAPI statements alongside a few other xAPI-related updates. (These changes will likely come to Storyline 3 soon, though not as of November 30, 2021.)

xapi.ly

Making xAPI Easier

Use the xapi.ly® Statement Builder to get more and better xAPI data from elearning created in common authoring platforms. xapi.ly helps you create the JavaScript triggers to send a wide variety of rich xAPI statements to the Learning Record Store (LRS) of your choice.

Criteria for use and pricing are listed on the site.

registry.tincanapi.com

Here you will find a well curated list of activities, activity types, attachments types, extensions, and verbs. You can also add to the registry and we will give you a permanently resolvable URL - one less thing you have to worry about. The registry is a community resource, so that we can build together towards a working Tin Can data ecosystem.

A participant in the Spring 2022 xAPI cohort suggested that the Registry is not maintained, and that they generally recommend using the Vocab Server (which is also the data source for components in the Profile Server).

www.json.org

JSON (JavaScript Object Notation) is a lightweight data-interchange format. It is easy for humans to read and write. It is easy for machines to parse and generate. It is based on a subset of the JavaScript Programming Language Standard ECMA-262 3rd Edition - December 1999. JSON is a text format that is completely language independent but uses conventions that are familiar to programmers of the C-family of languages, including C, C++, C#, Java, JavaScript, Perl, Python, and many others. These properties make JSON an ideal data-interchange language.
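To illustrate the "easy for machines to parse and generate" point, here is a round trip with Python's standard `json` module; the record's fields are made up. Python's `True` and `None` become JSON's `true` and `null`, and parsing restores them.

```python
import json

# A made-up record mixing the JSON value types: string, number, boolean, array, null.
record = {
    "actor": "learner@example.com",
    "score": 0.85,
    "passed": True,
    "tags": ["xAPI", "analytics"],
    "comment": None,
}

text = json.dumps(record, indent=2)  # generate JSON text
parsed = json.loads(text)            # parse it back into Python objects

print(text)
print(parsed == record)  # the round trip preserves the data
```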

xapi.vocab.pub

The xAPI Vocabulary and Profile Server is a curated list of xAPI vocabulary concepts and profiles maintained by the xAPI community.

www.td.org

xAPI Foundations

Leverage xAPI to develop more comprehensive learning experiences. This on-demand e-learning course is available online immediately after purchase. Within the course, you will have the opportunity to personalize your learning by viewing videos, interacting with content, hearing from experts, and planning for your future. You will have access to the course(s) for 12 months from your registration date.

www.watershedlrs.com

Learning program analytics seek to understand how an overall learning program is performing. A learning program typically encompasses many learners and many learning experiences (although it could easily contain just a few).

Learning experience analytics seek to understand more about a specific learning activity.

Learner analytics seek to understand more about a specific person or group of people engaged in activities where learning is one of the outputs.

There are many types of learning analytics and things you can measure and analyze. We segment these analytics into three categories: learning experience analytics, learner analytics, and learning program analytics.

Learning analytics is the measurement, collection, analysis, and reporting of data about learners, learning experiences, and learning programs for purposes of understanding and optimizing learning and its impact on an organization’s performance.
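A toy illustration of the three categories: the same flattened records can be grouped by learner (learner analytics), by activity (learning experience analytics), or summarized across everything (learning program analytics). The record layout, names, and scores are hypothetical, not Watershed's data model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical flattened records: (learner, activity, score between 0 and 1).
RECORDS = [
    ("ana", "safety-video", 0.9),
    ("ana", "safety-quiz", 0.8),
    ("ben", "safety-video", 0.6),
    ("ben", "safety-quiz", 0.4),
]


def average_score_by(records, key_index: int) -> dict:
    """Group records on one field and average the scores in each group."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key_index]].append(record[2])
    return {key: mean(scores) for key, scores in groups.items()}


learner_view = average_score_by(RECORDS, 0)     # learner analytics: per person
experience_view = average_score_by(RECORDS, 1)  # learning experience analytics: per activity
program_view = {                                # learning program analytics: whole program
    "learners": len({r[0] for r in RECORDS}),
    "experiences": len({r[1] for r in RECORDS}),
    "mean_score": mean(r[2] for r in RECORDS),
}
print(learner_view, experience_view, program_view, sep="\n")
```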

learningsolutionsmag.com

xAPI

Learning Solutions xAPI articles

Spring 2022 #xAPICohort resource

learn.filtered.com

Social learning This is a feature the LXP has really expanded. Although some of the more advanced LMSs boast social features, the Learning Experience Platform is better formatted for them and far more likely to provide. Firstly, the LXP caters for a broader range of learning options than the LMS. It’s usually not difficult to use your LXP to set up an online class or webinar. LXPs also provide a chance for learners to share their opinions on content: liking, sharing, or commenting on an article or online class. Users can follow and interact with others, above or below them in the organisation. Sometimes LXPs even provide people curation, matching learners and mentors. Users also have a chance to make the LXP their own by setting up a personalised profile page. It might seem low-priority, but a sense of ownership usually corresponds with a boost in engagement. As well prepared as Learning & Development leaders are, there’ll be things that people doing a job every day will know that you won’t. They can use their personal experience to recommend or create learning content in an LXP. This helps on-the-job learning and gives employees a greater chance of picking up the skills they need to progress in their role.

blog.aula.education

How, exactly, can we design for engagement and conversation? In comparison to content-focused educational technology such as the Learning Management System (LMS), our (not so secret) recipe is this:

1. Eliminate the noise
2. Bring people into the same room
3. Make conversation easy and meaningful
4. Create modularity and flexibility

Spring 2022 #xAPICohort resource

www.linkedin.com

To learn more, there are two books I highly recommend. "Digital Body Language," by Steve Woods, and "Big Data: Does Size Matter?" by Timandra Harkness. If you would like a deeper dive into data-driven learning design, there's a free e-book and toolkit you can download from my blog. You can also reach me there at loriniles.com. Remember, start with the data you have readily available. Data does not have to be intimidating Excel spreadsheets. Be prepared with data every single time you meet with your stakeholders. And before you design any strategy, ask what data you have to support every decision. You're on an exciting journey to becoming a more well-rounded HR leader. Get started, and good luck.

Spring 2022 #xAPICohort resource

www.valamis.com

LXPs and LMSs accomplish two different objectives. An LMS enables administrators to manage learning, while an LXP enables learners to explore learning. Organizations may have an LXP, an LMS or both. If they have both, they may use the LXP as the delivery platform and the LMS to handle the administrative work.

Spring 2022 #xAPICohort resource

4. Highly intuitive interfaces

Spring 2022 #xAPICohort resource

3. Supports various types of learning

Spring 2022 #xAPICohort resource

2. Rich learning experience through deeper personalization

Spring 2022 #xAPICohort resource

Here are some other characteristics that set LXPs apart from LMS’s: 1. Extensive integration capabilities

Spring 2022 #xAPICohort resource

The gradual shift, from one-time pay to cloud-based subscription-based business has lead learning platforms to also offer Software-as-a-Service (SaaS) models to their clients. As such content becomes part of digital learning networks, they are integrated into commercial learning solutions and then become part of broader LXPs. Looking back at all these developments, from how new data consumption platforms evolved, to the emergence of newer content development approaches and publishing channels, it’s easy to understand why LXPs naturally evolved as a result of DXPs.

Spring 2022 #xAPICohort resource

The growth of social learning has also created multiple learning opportunities for people to share their knowledge and expertise. As they socialize on these platforms (Facebook, LinkedIn, YouTube, Instagram and many others), individuals and groups learn from each other through various types of social interactions – sharing content, exchanging mutually-liked links to external content. LXP leverage similar approaches in corporate learning environments, and scale learning experience and opportunities with such user-generated content as found in social and community-based learning.

Spring 2022 #xAPICohort resource

Integrations are also possible with AI. If you integrate LXP and your Human Resource Management (HRM) system, the corporate intranet, your Learning Record Store (LRS) or the enterprise Customer Relationship Management (CRM) system, and collect the data from all of them, you can identify many different trends and patterns. And based on those patterns, all stakeholders can make informed training and learning decisions. Standard LMS’s cannot do any of that. And though LMS developers are trying to get there, they’ve still got a long way to go to bridge the functionality gap with LXPs. As a result, there was an even greater impetus to the emergence of LXPs.

Spring 2022 #xAPICohort resource

Another driver for the emergence of LXP’s is the standards adopted by modern-day LMS’s – which are SCORM-based. While SCORM does “get results”, it is limited in what it can do. One of the main goals of any corporate learning platform is to connect learning with on-the-job performance. And SCORM makes it very difficult to decide how effective the courses really are, or how learners benefit from these courses. Experience API (xAPI) on the other hand – the standard embraced by LXPs – offers significantly enhanced capabilities to the platform. When you use xAPI, you can follow different parameters both while you learn and perform on the job tasks. And, what’s even better is that you can do that on a variety of digital devices.

Spring 2022 #xAPICohort resource

LMS’s primarily served as a centralized catalog of corporate digital learning assets. Users of those platforms often found it hard to navigate through vast amounts of content to find an appropriate piece of learning. LMS providers sought to bridge that gap by introducing smart searches and innovative querying features – but that didn’t entirely address the core challenge: LMS’s were still like huge libraries where you should only go to when you have an idea of what you need, and then spend inordinate amounts of time searching for what you specifically want!

Spring 2022 #xAPICohort resource

www.linkedin.com

Experience API (xAPI) is a tool for gaining insight into how learners are using, navigating, consuming, and completing learning activities. In this course, Anthony Altieri provides an in-depth look at using xAPI for learning projects, including practical examples that show xAPI in action.

Spring 2022 #xAPICohort resource

www.learningguild.com

Intro to xAPI: What Do Instructional Designers Need to Know

Spring 2022 #xAPICohort resource

xapicohort.com

The xAPI Learning Cohort is a free, vendor-neutral, 12-week learning-by-doing project-based team learning experience about the Experience API. (Yep, you read that right – free!) It’s an opportunity for those who are brand new to xAPI and those who are looking to experiment with it to learn from each other and from the work itself.

Spring 2022 #xAPICohort resource

www.td.org

If your current course development tools don't create the activity statements you need, keep in mind that sending xAPI statements requires only simple JavaScript, so many developers are coding their own form of statements from scratch.

Spring 2022 #xAPICohort resource
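The point above is that sending a statement is just an HTTP request. This document's examples use Python rather than JavaScript, but the request has the same shape: a JSON body POSTed to the LRS's `statements` resource with an `X-Experience-API-Version` header. The endpoint URL and credentials below are placeholders, and HTTP Basic authentication is an assumption (LRSs may use other schemes); the request is built but not sent.

```python
import base64
import json
import urllib.request


def build_statement_request(endpoint: str, key: str, secret: str,
                            statement: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST of one statement to an LRS."""
    credentials = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/statements",
        data=json.dumps(statement).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Experience-API-Version": "1.0.3",
            "Authorization": f"Basic {credentials}",
        },
    )


statement = {
    "actor": {"mbox": "mailto:ana@example.com"},
    "verb": {"id": "http://adlnet.gov/expapi/verbs/completed"},
    "object": {"id": "https://example.com/activities/safety-quiz"},
}
request = build_statement_request("https://lrs.example.com/xapi/", "key", "secret", statement)
# urllib.request.urlopen(request) would send it; the endpoint here is a placeholder.
print(request.get_method(), request.full_url)
```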

An xAPI activity statement records experiences in an "I did this" format. The format specifies the actor, verb, object: the actor (who did it), a verb (what was done), a direct object (what it was done to) and a variety of contextual data, including score, rating, language, and almost anything else you want to track. Some learning experiences are tracked with a single activity statement. In other instances, dozens, if not hundreds, of activity statements can be generated during the course of a learning experience. Activity statements are up to the instructional designer and are driven by the need for granularity in reporting.

Spring 2022 #xAPICohort resource
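A minimal sketch of the actor/verb/object structure described above, with optional context data (a scaled score and a language tag). The helper name, learner, and activity IRIs are hypothetical; the property names (`actor`, `verb`, `object`, `result.score.scaled`, `context.language`) follow the xAPI statement format.

```python
import json
from typing import Optional


def make_statement(name: str, email: str, verb_id: str, verb_display: str,
                   activity_id: str, activity_name: str,
                   score_scaled: Optional[float] = None,
                   language: str = "en-US") -> dict:
    """Build an 'I did this' statement: actor (who), verb (what), object (to what)."""
    statement = {
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {"id": verb_id, "display": {language: verb_display}},
        "object": {
            "objectType": "Activity",
            "id": activity_id,
            "definition": {"name": {language: activity_name}},
        },
        "context": {"language": language},
    }
    if score_scaled is not None:  # optional result data, only when there is a score
        statement["result"] = {"score": {"scaled": score_scaled}}
    return statement


stmt = make_statement("Ana", "ana@example.com",
                      "http://adlnet.gov/expapi/verbs/completed", "completed",
                      "https://example.com/activities/safety-quiz", "Safety quiz",
                      score_scaled=0.8)
print(json.dumps(stmt, indent=2))
```

Finer-grained tracking simply means calling a helper like this more often, one statement per interaction worth reporting on.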

xAPI is a simple, lightweight way to store and retrieve records about learners and share these data across platforms. These records (known as activity statements) can be captured in a consistent format from any number of sources (known as activity providers) and they are aggregated in a learning record store (LRS). The LRS is analogous to the SCORM database in an LMS. The x in xAPI is short for "experience," and implies that these activity providers are not just limited to traditional AICC- and SCORM-based e-learning. With experience API or xAPI you can track classroom activities, usage of performance support tools, participation in online communities, mentoring discussions, performance assessment, and actual business results. The goal is to create a full picture of an individual's learning experience and how that relates to her performance.

Spring 2022 #xAPICohort resource

www.watershedlrs.com

For any xAPI implementation, these five things need to happen:

1. A person does something (e.g., watches a video).
2. That interaction is tracked by an application.
3. Data about the interaction is sent to an LRS.
4. The data is stored in the LRS and made available for use.
5. Use the data for reporting and personalizing a learning experience.

In most implementations, multiple learner actions are tracked by multiple applications, and data may be used in a number of ways. In all cases, there’s an LRS at the center receiving, storing, and returning the data as required.

Spring 2022 #xAPICohort resource
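To make the five steps concrete, here is a toy in-memory stand-in for the LRS at the center. `InMemoryLRS` and `track` are hypothetical names for illustration, not an LRS implementation: a real LRS exposes these operations over HTTP through the xAPI Statement API, with its own storage, validation, and query rules.

```python
import uuid
from collections import Counter
from datetime import datetime, timezone
from typing import Optional

EXPERIENCED = "http://adlnet.gov/expapi/verbs/experienced"
COMPLETED = "http://adlnet.gov/expapi/verbs/completed"


class InMemoryLRS:
    """Hypothetical stand-in: receives, stores, and returns statements."""

    def __init__(self) -> None:
        self._statements: list = []

    def store(self, statement: dict) -> str:
        stored = dict(statement)
        stored.setdefault("id", str(uuid.uuid4()))
        stored["stored"] = datetime.now(timezone.utc).isoformat()
        self._statements.append(stored)
        return stored["id"]

    def query(self, verb_id: Optional[str] = None, mbox: Optional[str] = None) -> list:
        return [s for s in self._statements
                if (verb_id is None or s["verb"]["id"] == verb_id)
                and (mbox is None or s["actor"].get("mbox") == mbox)]


def track(lrs: InMemoryLRS, learner: str, verb_id: str, activity: str) -> str:
    """Steps 2-3: an application records an interaction and sends it to the LRS."""
    return lrs.store({"actor": {"mbox": f"mailto:{learner}"},
                      "verb": {"id": verb_id},
                      "object": {"id": activity}})


lrs = InMemoryLRS()
# Step 1: learners watch a video; the player reports each interaction.
track(lrs, "ana@example.com", EXPERIENCED, "https://example.com/video/intro")
track(lrs, "ana@example.com", COMPLETED, "https://example.com/video/intro")
track(lrs, "ben@example.com", EXPERIENCED, "https://example.com/video/intro")

# Steps 4-5: stored data is queried back for reporting.
completions = Counter(s["actor"]["mbox"] for s in lrs.query(verb_id=COMPLETED))
print(completions)
```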

Experience API (also xAPI or Tin Can API) is a learning technology interoperability specification that makes it easier for learning technology products to communicate and work with one another.

Spring 2022 #xAPICohort resource

www.watershedlrs.com

Instructional Designer

When implementing xAPI across an organization, there isn’t usually a need for instructional designers to take on new roles or duties. However, they may experience a learning curve that presents an opportunity to understand how to best package and effectively deploy xAPI in newly created content. Your learning designer(s) is a key partner in getting good data, so keep them in the loop regarding your strategy, goals, and expected outcomes.

Spring 2022 #xAPICohort resource

- Nov 2021

drive.google.com

Distance learning, learning analytics, COVID-19, technology-enhanced learning

- Oct 2021

firebase.googleblog.com

Analytics is the key to understanding your app's users: Where are they spending the most time in your app? When do they churn? What actions are they taking?

docs.digitalocean.com

How to Install the DigitalOcean Metrics Agent

DigitalOcean Monitoring

DigitalOcean Monitoring is a free, opt-in service that gathers metrics about Droplet-level resource utilization. It provides additional Droplet graphs and supports configurable metrics alert policies with integrated email and Slack notifications to help you track the operational health of your infrastructure.

- Aug 2021

library.scholarcy.com

The Recorded Future system contains many components, which are summarized in the following diagram: The system is centered round the database, which contains information about all canonical events and entities, together with information about event and entity references, documents containing these references, and the sources from which these documents were obtained.

We have decided on the term “temporal analytics” to describe the time oriented analysis tasks supported by our systems

Recorded Future (RF) decided on the term "temporal analytics" to describe the time-oriented analysis tasks supported by its systems.
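The database described above can be sketched as a few linked record types: sources yield documents, documents contain references, and references point at canonical entities, while events carry their own time. The classes and fields below are our own simplification for illustration, not Recorded Future's schema; the two functions suggest what "temporal analytics" might mean over such a store (mention counts per month, and an entity's event timeline).

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class Source:
    name: str


@dataclass(frozen=True)
class Document:
    url: str
    source: Source
    published: date


@dataclass(frozen=True)
class Entity:
    canonical_name: str


@dataclass(frozen=True)
class Reference:
    """One mention of a canonical entity inside a document."""
    entity: Entity
    document: Document


@dataclass(frozen=True)
class Event:
    kind: str
    participants: Tuple[Entity, ...]
    when: date


def mentions_per_month(refs: Iterable[Reference], entity: Entity) -> Counter:
    """Count references to an entity, bucketed by (year, month) of publication."""
    return Counter((r.document.published.year, r.document.published.month)
                   for r in refs if r.entity == entity)


def timeline(events: Iterable[Event], entity: Entity) -> List[Event]:
    """Events involving an entity, in chronological order."""
    return sorted((e for e in events if entity in e.participants), key=lambda e: e.when)


wire = Source("Example Wire")  # hypothetical source and entity
acme = Entity("Acme Corp")
docs = [Document(f"https://example.com/{n}", wire, d)
        for n, d in enumerate([date(2021, 7, 1), date(2021, 7, 20), date(2021, 8, 3)])]
refs = [Reference(acme, d) for d in docs]
events = [Event("product launch", (acme,), date(2021, 9, 1)),
          Event("acquisition", (acme,), date(2021, 8, 15))]

print(mentions_per_month(refs, acme))
print([e.kind for e in timeline(events, acme)])
```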

psyarxiv.com

Saire, Josimar. E. Chire., & Masuyama, A. (2021). How Japanese citizens faced the COVID-19 pandemic?: Exploration from twitter [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/64x7s

- Jul 2021

blog.jonudell.net

https://blog.jonudell.net/2021/07/21/a-virtuous-cycle-for-analytics/

Some basic data patterns and questions occur in almost any business setting, and having a toolset that handles them efficiently for both end users and programmers is incredibly important.

Too often I see businesses that don't own their own data, or that contract out the programming (or both).

- Mar 2021

plausible.io

Plausible is a lightweight, self-hostable, and open-source website analytics tool. No cookies and fully compliant with GDPR, CCPA and PECR. Made and hosted in the EU 🇪🇺

Built by

Introducing https://t.co/mccxgAHIWo 🎉

📊 Simple, privacy-focused web analytics
👨‍💻 Stop big corporations from collecting data on your users
👉 Time to ditch Google Analytics for a more ethical alternative #indiehackers #myelixirstatus #privacy

— Uku Täht (@ukutaht) April 29, 2019

Szabelska, A., Pollet, T. V., Dujols, O., Klein, R. A., & IJzerman, H. (2021). A Tutorial for Exploratory Research: An Eight-Step Approach. PsyArXiv. https://doi.org/10.31234/osf.io/cy9mz

- Feb 2021

mxb.dev

- Nov 2020

Spotting a gem in it takes something more. Without domain knowledge, business acumen, and strong intuition about the practical value of discoveries—as well as the communication skills to convey them to decision-makers effectively—analysts will struggle to be useful. It takes time for them to learn to judge what’s important in addition to what’s interesting. You can’t expect them to be an instant solution to charting a course through your latest crisis. Instead, see them as an investment in your future nimbleness.

This is where the expectations of today's organizations differ, leading to a big gap in what they expect from analysts and from analytics as a function.

-

- Oct 2020

-

www.iic.uam.es

-

Conclusions

Trying to find a real use for HR analytics, the following could be considered:

- knowing the average revenue generated by each employee, as a measure of an organization's efficiency

- knowing the offer acceptance rate, i.e., the number of formal job offers accepted divided by the total number of job offers, in order to redefine the company's talent acquisition strategy.

- knowing training costs per employee, in order to reassess training spend per employee.

- knowing training efficiency, by analyzing performance improvement, in order to evaluate the effectiveness of a training program.

- knowing the voluntary and involuntary turnover rates, in order to identify the employee experiences that lead to voluntary attrition, or to develop a plan to improve the quality of hires and avoid involuntary turnover.

- knowing time-to-recruit and time-to-hire, in order to reduce them.

- knowing absenteeism, either as a productivity metric or as an indicator of employee job satisfaction.

- knowing human capital risk, in order to identify the absence of a specific skill needed for a new type of job, or a lack of qualified employees to fill leadership positions.
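Several of the metrics listed here are simple ratios. The Python sketch below computes four of them. It assumes hypothetical aggregate figures for one reporting period. The `HRPeriodData` fields, the function names and the sample numbers are illustrative and do not come from the source. Turnover is simplified to separations divided by headcount.

```python
from dataclasses import dataclass


@dataclass
class HRPeriodData:
    """Hypothetical aggregate HR figures for one reporting period."""
    revenue: float
    headcount: int
    offers_made: int
    offers_accepted: int
    training_spend: float
    separations: int  # employees who left during the period


def revenue_per_employee(d: HRPeriodData) -> float:
    # Average revenue generated per employee, a rough efficiency measure.
    return d.revenue / d.headcount


def offer_acceptance_rate(d: HRPeriodData) -> float:
    # Formal offers accepted divided by total formal offers made.
    return d.offers_accepted / d.offers_made


def training_cost_per_employee(d: HRPeriodData) -> float:
    return d.training_spend / d.headcount


def turnover_rate(d: HRPeriodData) -> float:
    # Simplified: separations over headcount; many organizations
    # divide by average headcount across the period instead.
    return d.separations / d.headcount


if __name__ == "__main__":
    data = HRPeriodData(revenue=5_000_000, headcount=50, offers_made=20,
                        offers_accepted=15, training_spend=25_000, separations=5)
    print(revenue_per_employee(data))        # 100000.0
    print(offer_acceptance_rate(data))       # 0.75
    print(training_cost_per_employee(data))  # 500.0
    print(turnover_rate(data))               # 0.1
```

The harder items on the list, such as training efficiency or human capital risk, cannot be reduced to a single division like these.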

-

HR Analytics Models

There is a wide variety of HR analytics software, including Sisense, Domo, ClicData, and ActivTrak. However, to use them, an HR department must be specifically trained in them.

-

Lessons learned

Using this tool requires the participation of people who know the company's organizational processes, as well as experts in psychometrics and statistical analytics.

-

Human Resources Analytics (HR Analytics)

HR Analytics is a methodology for obtaining employee data in order to analyze it and find evidence for strategic decision-making.

-

-

formnerd.co

-

-

css-tricks.com

-

Om Malik writes about a renewed focus on his own blog: My first decree was to eschew any and all analytics. I don’t want to be driven by “views,” or what Google deems worthy of rank. I write what pleases me, not some algorithm. Walking away from quantification of my creativity was an act of taking back control.

I love this quote.

-

What I dwell on the most regarding syndication is the Twitter stuff. I look back at the analytics on this site at the end of every year and look at where the traffic came from — every year, Twitter is a teeny-weeny itty-bitty slice of the pie. Measuring traffic alone, that’s nowhere near the amount of effort we put into making the stuff we’re tweeting there. I always rationalize it to myself in other ways. I feel like Twitter is one of the major ways I stay updated with the industry and it’s a major source of ideas for articles.

So it sounds like Twitter isn't driving traffic to his website, but it is providing ideas and news. Given this I would syndicate content to Twitter as easily and quickly as possible, use webmentions to deal with the interactions and then just use the Twitter timeline for reading and consuming and nothing else.

-

- Jul 2020

-

psyarxiv.com

-

Rahman, M., Ali, G. G. M. N., Li, X. J., Paul, K. C., & Chong, P. H. J. (2020). Twitter and Census Data Analytics to Explore Socioeconomic Factors for Post-COVID-19 Reopening Sentiment [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/fz4ry

-

- Jun 2020

-

web.archive.org

-

The bit.ly links that are created are also very diverse. It's harder to summarise this without offering a list of 100,000 URLs, but suffice it to say that there are a lot of pages from the major web publishers, lots of YouTube links, lots of Amazon and eBay product pages, and lots of maps. And then there is a long, long tail of other URLs. When a pile-up happens in the social web it is invariably triggered by link-sharing, and so bit.ly usually sees it in the seconds before it happens.

link shortener: rich insight into web activity...

-

- May 2020

-

support.google.com

-

You should then also create a new View and apply the following filter so as to be able to tell apart which domain a particular pageview occurred on:

- Filter Type: Custom filter > Advanced

- Field A --> Extract A: Hostname = (.*)

- Field B --> Extract B: Request URI = (.*)

- Output To --> Constructor: Request URI = $A1$B1
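The effect of this filter is to prepend the hostname to the request URI, so pageviews on different domains no longer collapse into the same path. The Python code below is a rough emulation of that rewrite, for illustration only. It is not Google Analytics code, and the function name and sample values are hypothetical. `$A1` and `$B1` are read as capture group 1 of Field A and Field B.

```python
import re

# Both extract patterns from the filter capture the entire field value.
FIELD_A_PATTERN = re.compile(r"(.*)")  # applied to Hostname
FIELD_B_PATTERN = re.compile(r"(.*)")  # applied to Request URI


def rewrite_request_uri(hostname: str, request_uri: str) -> str:
    """Emulate an Advanced filter whose constructor is Request URI = $A1$B1."""
    a = FIELD_A_PATTERN.match(hostname)    # (.*) always matches, so never None
    b = FIELD_B_PATTERN.match(request_uri)
    # $A1 / $B1: group 1 of Field A / Field B, concatenated.
    return a.group(1) + b.group(1)


print(rewrite_request_uri("shop.example.com", "/checkout?step=2"))
# shop.example.com/checkout?step=2
```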

-

-

-

Now you can use Google Analytics without annoying Cookie Notices.

-

-

www.iubenda.com

-

In other words, it’s the procedure to prevent Google from “cross-referencing” information from Analytics with other data in its possession.

-

-

-

developers.google.com

-

You can enable an app-level opt out flag that will disable Google Analytics across the entire app.

-

-

support.google.com

-

www.bounteous.com

-

www.fullstory.com

-

- Mar 2020

-

-

Biased towards Piwik Pro.

seeking/keep an eye out for: Would love to see a similar comparison done by the Matomo folks.

-

-

-

-

matomo.org

-

It’s the ethical alternative to Google Analytics.

-

-

matomo.org

-

Source at: https://github.com/matomo-org/matomo

-

-

github.com

-

-

daan.dev

-

If you want to disable Google Analytics-tracking for this site, please click here: [delete_cookies]. The cookie which enabled tracking on Google Analytics is immediately removed.

This is incomplete. The button is missing.

-

-

-

-

Google Analytics created an option to remove the last octet (the last of the four dot-separated numbers) from your visitor’s IP address. This is called ‘IP Anonymization’. Although this isn’t complete anonymization, the GDPR demands you use this option if you want to use Analytics without prior consent from your visitors. Some countries (e.g. Germany) demand this setting to be enabled at all times.
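Removing the last octet can be pictured as zeroing the lowest 8 bits of an IPv4 address. The Python sketch below uses the standard `ipaddress` module and covers IPv4 only. It illustrates the idea described here and is not Google's implementation. The function name is hypothetical.

```python
import ipaddress


def anonymize_ipv4(address: str) -> str:
    """Zero the last octet of an IPv4 address, e.g. 203.0.113.57 -> 203.0.113.0."""
    ip = ipaddress.IPv4Address(address)
    # Keep the first three octets and mask off the lowest 8 bits.
    masked = int(ip) & 0xFFFFFF00
    return str(ipaddress.IPv4Address(masked))


print(anonymize_ipv4("203.0.113.57"))  # 203.0.113.0
```

The result still identifies a block of 256 addresses. This is consistent with the point that truncation is not complete anonymization.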

-

-

support.google.com

-

support.google.com

-

Do you consider visitor interaction with the home page video an important engagement signal? If so, you would want interaction with the video to be included in the bounce rate calculation, so that sessions including only your home page with clicks on the video are not calculated as bounces. On the other hand, you might prefer a more strict calculation of bounce rate for your home page, in which you want to know the percentage of sessions including only your home page regardless of clicks on the video.
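The two bounce-rate definitions can be made concrete with a small sketch. The `Session` model and the `video_play` event name are hypothetical. The code does not reproduce how Google Analytics itself computes bounce rate. It only shows how the choice changes the number.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    pageviews: int
    # Hypothetical event names recorded during the session.
    events: list[str] = field(default_factory=list)


def bounce_rate(sessions: list[Session], count_video_as_engagement: bool) -> float:
    """Share of sessions counted as bounces under the chosen definition."""
    def is_bounce(s: Session) -> bool:
        if s.pageviews != 1:
            return False
        if count_video_as_engagement and "video_play" in s.events:
            return False  # a video click rescues a single-page session
        return True

    return sum(is_bounce(s) for s in sessions) / len(sessions)


sessions = [
    Session(pageviews=1),
    Session(pageviews=1, events=["video_play"]),
    Session(pageviews=3),
    Session(pageviews=1, events=["video_play"]),
]
print(bounce_rate(sessions, count_video_as_engagement=True))   # 0.25
print(bounce_rate(sessions, count_video_as_engagement=False))  # 0.75
```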

-

-

consent.guide

-

Here you need to decide if you want to take a cautious road and put it into an “anonymous” mode or go all out and collect user identifiable data. If you go with anonymous, you have the ability to not need consent.

-

- Feb 2020

-

journals.sagepub.com

-

One important aspect of critical social media research is the study of not just ideologies of the Internet but also ideologies on the Internet. Critical discourse analysis and ideology critique as research method have only been applied in a limited manner to social media data. Majid KhosraviNik (2013) argues in this context that ‘critical discourse analysis appears to have shied away from new media research in the bulk of its research’ (p. 292). Critical social media discourse analysis is a critical digital method for the study of how ideologies are expressed on social media in light of society’s power structures and contradictions that form the texts’ contexts.

-

It has, for example, been common to study contemporary revolutions and protests (such as the 2011 Arab Spring) by collecting large amounts of tweets and analysing them. Such analyses can, however, tell us nothing about the degree to which activists use social and other media in protest communication, what their motivations are to use or not use social media, what their experiences have been, what problems they encounter in such uses and so on. If we only analyse big data, then the one-sided conclusion that contemporary rebellions are Facebook and Twitter revolutions is often the logical consequence (see Aouragh, 2016; Gerbaudo, 2012). Digital methods do not outdate but require traditional methods in order to avoid the pitfall of digital positivism. Traditional sociological methods, such as semi-structured interviews, participant observation, surveys, content and critical discourse analysis, focus groups, experiments, creative methods, participatory action research, statistical analysis of secondary data and so on, have not lost importance. We do not just have to understand what people do on the Internet but also why they do it, what the broader implications are, and how power structures frame and shape online activities

-

Challenging big data analytics as the mainstream of digital media studies requires us to think about theoretical (ontological), methodological (epistemological) and ethical dimensions of an alternative paradigm

Making the case for the need for digitally native research methodologies.

-

Who communicates what to whom on social media with what effects? It forgets users’ subjectivity, experiences, norms, values and interpretations, as well as the embeddedness of the media into society’s power structures and social struggles. We need a paradigm shift from administrative digital positivist big data analytics towards critical social media research. Critical social media research combines critical social media theory, critical digital methods and critical-realist social media research ethics.

-

de-emphasis of philosophy, theory, critique and qualitative analysis advances what Paul Lazarsfeld (2004 [1941]) termed administrative research, research that is predominantly concerned with how to make technologies and administration more efficient and effective.

-

Big data analytics’ trouble is that it often does not connect statistical and computational research results to a broader analysis of human meanings, interpretations, experiences, attitudes, moral values, ethical dilemmas, uses, contradictions and macro-sociological implications of social media.

-

Such funding initiatives privilege quantitative, computational approaches over qualitative, interpretative ones.

-

- Jan 2020

-

github.com

-

-

marketplace.digitalocean.com

- Nov 2019

- Sep 2019

-

wisc.pb.unizin.org

-

“But then again,” a person who used information in this way might say, “it’s not like I would be deliberately discriminating against anyone. It’s just an unfortunate proxy variable for lack of privilege and proximity to state violence.”

In the current universe, Twitter also makes a number of predictions about users that could be used as proxy variables for economic and cultural characteristics. It can display things like your audience's net worth as well as indicators commonly linked to political orientation. Triangulating some of this data could allow for other forms of intended or unintended discrimination.

I've already been able to view a wide range of (possibly spurious) information about my own reading audience through these analytics. On September 9th, 2019, I started a Twitter account for my 19th Century Open Pedagogy project and began serializing installments of a critical edition, The Woman in White: Grangerized. The @OPP19c Twitter account has 62 followers as of September 17th.

Having followers means I have access to an audience analytics toolbar. Some of the account's followers are nineteenth-century studies or pedagogy organizations rather than individuals. Twitter tracks each account as an individual, however, and I was surprised to see some of the demographics Twitter broke them down into. (If you're one of these followers: thank you and sorry. I find this data a bit uncomfortable.)

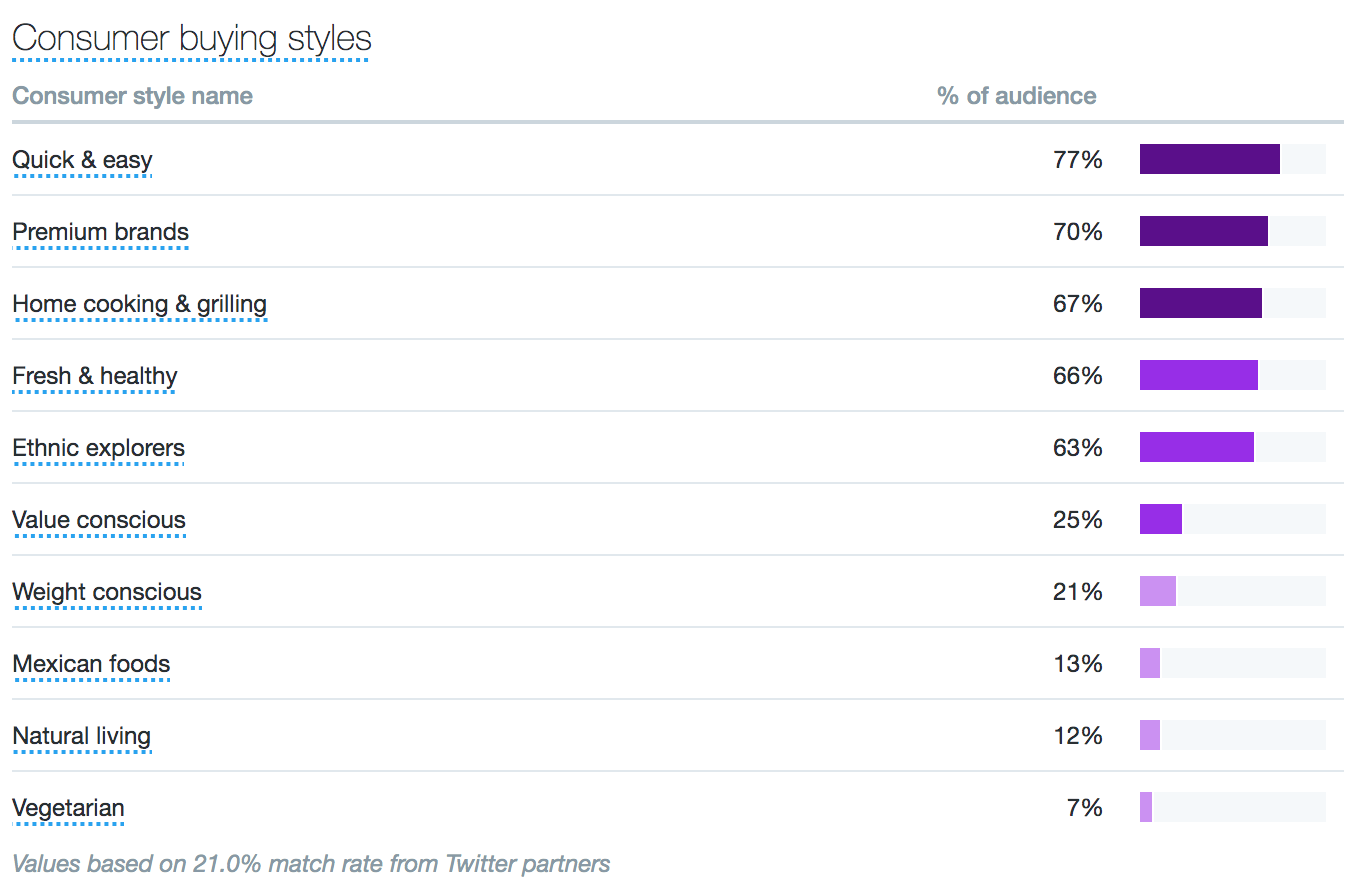

Within this dashboard, I have a "Consumer Buying Styles" display that identifies categories such as "quick and easy," "ethnic explorers," "value conscious," and "weight conscious." These categories strike me as equal parts confusing and problematic:

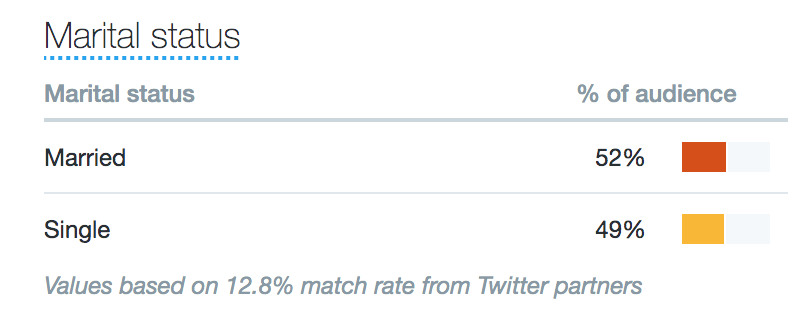

I have a "Marital Status" toolbar alleging that 52% of my audience is married and 49% single.

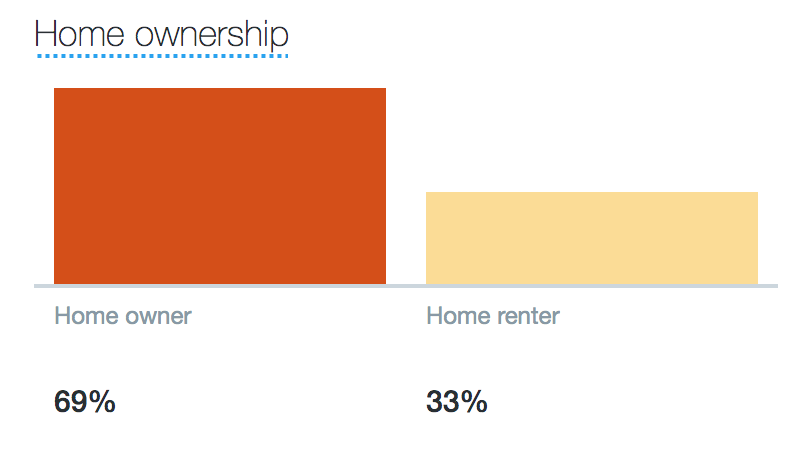

I also have a "Home Ownership" chart. (I'm presuming that the Elizabeth Gaskell House Museum's Twitter is counted as an owner...)

...and more

-

- Jul 2019

-

www.ohdsi-europe.org

-

We translate all patient measurements into statistics that are predictive of unsuccessful discharge

An analytics pipeline, roughly what we should also put together by the end.
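The quoted slide only names the idea of turning measurements into statistics. The Python sketch below shows one plausible first step in such a pipeline: collapsing one patient's readings for a single measurement into summary features that a downstream model could use. It assumes each measurement arrives as an ordered series of readings. The choice of statistics and the sample heart-rate values are hypothetical and are not taken from the source.

```python
from statistics import mean


def summarize_measurements(values: list[float]) -> dict[str, float]:
    """Collapse one patient's series for a single measurement into features."""
    return {
        "mean": mean(values),
        "min": min(values),
        "max": max(values),
        "last": values[-1],
        # Change from first to last reading, a crude trend feature.
        "delta": values[-1] - values[0],
    }


heart_rate = [88.0, 92.0, 101.0, 97.0]
print(summarize_measurements(heart_rate))
# {'mean': 94.5, 'min': 88.0, 'max': 101.0, 'last': 97.0, 'delta': 9.0}
```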

-

- Apr 2019

-

www.imsglobal.org

-

Annotation Profile Follow learners as they bookmark content, highlight selected text, and tag digital resources. Analyze annotations to better assess learner engagement, comprehension and satisfaction with the materials assigned.

There is already a Caliper profile for "annotation." Do we have any suggestions about the model?

-

-

www.imsglobal.org

-

- Mar 2019

-

dougengelbart.org

-

how the human made use of that time.

In education, learning data and analytics?

-

- Feb 2019

-

www.imsglobal.org

-

Which segments of text are being highlighted?

Do we capture this data? Can we?

-

What types of annotations are being created?

How is this defined?

-

Who is posting most often? Which posts create the most replies?

These apply to social annotation as well.
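Both questions reduce to simple counts over annotation records. The Python sketch below answers them. The `Annotation` record is hypothetical and is not the actual schema of the Hypothesis API. A reply is modeled as an annotation that points at the id of its parent.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Annotation:
    id: str
    user: str
    # id of the annotation this one replies to; None for a top-level post
    reply_to: Optional[str] = None


def most_active_users(annotations: list[Annotation], n: int = 3) -> list[tuple[str, int]]:
    """Who is posting most often?"""
    return Counter(a.user for a in annotations).most_common(n)


def most_replied_posts(annotations: list[Annotation], n: int = 3) -> list[tuple[str, int]]:
    """Which posts create the most (direct) replies?"""
    return Counter(a.reply_to for a in annotations if a.reply_to is not None).most_common(n)


annotations = [
    Annotation("a1", "ana"),
    Annotation("a2", "ben", reply_to="a1"),
    Annotation("a3", "ana", reply_to="a1"),
    Annotation("a4", "cy", reply_to="a2"),
]
print(most_active_users(annotations, 1))  # [('ana', 2)]
print(most_replied_posts(annotations))    # [('a1', 2), ('a2', 1)]
```

Counting only direct replies is a design choice. Counting whole threads would require walking the `reply_to` chain.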

-

Session Profile

Are we capturing the right data/how can Hypothesis contribute to this profile?

-

Does overall time spent reading correlate with assessment scores? Are particular viewing patterns/habits predictive of student success? What are the average viewing patterns of students? Do they differ between courses, course sections, instructors, or student demographics?

Can H itself capture some of this data? Through the LMS?
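Once reading time and scores are collected per student, the first question becomes a correlation. The Python sketch below computes the Pearson coefficient by hand so it runs on any Python 3 version; `statistics.correlation` does the same from Python 3.10 onward. The sample data are invented. A correlation here would show association only and would not show that reading time causes higher scores.

```python
from math import sqrt


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (sx * sy)


# Hypothetical per-student data: minutes spent reading, assessment score.
minutes_reading = [30, 45, 60, 20, 90]
scores = [62, 70, 75, 55, 88]
print(f"Pearson r = {pearson_r(minutes_reading, scores):.2f}")
```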

-

- Dec 2018

-

www.niemanlab.org

-

And while content analytics tools (e.g., Chartbeat, Parsely, Content Insights) and feedback platforms (e.g., Hearken, GroundSource) have thankfully helped close the gap, the core content management experience remains, for most of us, little improved when it comes to including the audience in the process.

-

- Jul 2018

-

er.educause.edu

-

What has changed, what remains the same, and what general patterns can be discerned from the past twenty years in the fast-changing field of edtech?

Join me in annotating @mweller's thoughtful exercise at thinking through the last 20 years of edtech. Given Martin's acknowledgements of the caveats of such an exercise, how can we augment this list to tell an even richer story?

-

- May 2018

-

hypothes.is

-

Hi there, check out the SAS Training and Tutorial for better analysis of data and forecasting methods, with better implications for business analytics.

-

- Mar 2018

-

the-other-jeff.com

-

Predictive student analytics are algorithmic systems that use data from student behavior and performance to generate individual predictions for student outcomes
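This definition covers a family of models rather than any one algorithm. The Python sketch below shows one illustrative instance: a logistic-regression-style score that maps behavior and performance features to a predicted probability of success. The feature names, weights and bias are invented. A real system would fit them to historical data, and would inherit whatever biases that data contains.

```python
from math import exp

# Hypothetical weights; in practice these would be learned from past cohorts.
WEIGHTS = {"logins_per_week": 0.4, "assignments_submitted_pct": 3.0, "prior_gpa": 0.8}
BIAS = -4.0


def predicted_success_probability(student: dict[str, float]) -> float:
    """Logistic model: p = 1 / (1 + exp(-(bias + sum(w_i * x_i))))."""
    z = BIAS + sum(w * student[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + exp(-z))


student = {"logins_per_week": 3, "assignments_submitted_pct": 0.9, "prior_gpa": 3.1}
print(round(predicted_success_probability(student), 3))
```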

-

- Jan 2018

-

www.chronicle.com

-

University’s General Counsel to Resign

-

- Nov 2017

-

files.eric.ed.gov

-

Mount St. Mary’s use of predictive analytics to encourage at-risk students to drop out to elevate the retention rate reveals how analytics can be abused without student knowledge and consent

Wow. Not that we need such an extreme case to shed light on the perverse incentives at stake in Learning Analytics, but this surely made readers react. On the other hand, there’s a lot more to be said about retention policies. People often act as though they were essential to learning. Retention is important to the institution but are we treating drop-outs as escapees? One learner in my class (whose major is criminology) was describing the similarities between schools and prisons. It can be hard to dissipate this notion when leaving an institution is perceived as a big failure of that institution. (Plus, Learning Analytics can really feel like the Panopticon.) Some comments about drop-outs make it sound like they got no learning done. Meanwhile, some entrepreneurs are encouraging students to leave institutions or to not enroll in the first place. Going back to that important question by @sarahfr: why do people go to university?

-

-

-

Information from this will be used to develop learning analytics software features, which will have these functions: Description of learning engagement and progress, Diagnosis of learning engagement and progress, Prediction of learning progress, and Prescription (recommendations) for improvement of learning progress.

As good a summary of Learning Analytics as any.

-

-

-

full Caliper Analytics compliance

Oh? Not xAPI?

-

- Oct 2017

-

www.engadget.com

-

The company's API for scoring toxicity in online discussions already behaves like a racist hand dryer.

this is just so awful.

-

-

www.truth-out.org

-

Examples of such violence can be seen in the forms of an audit culture and empirically-driven teaching that dominates higher education. These educational projects amount to pedagogies of repression and serve primarily to numb the mind and produce what might be called dead zones of the imagination. These are pedagogies that are largely disciplinary and have little regard for contexts, history, making knowledge meaningful, or expanding what it means for students to be critically engaged agents.

On audit culture in education. How do personalized/adaptive/competency-based learning and learning analytics support it?

-

-

blog.blackboard.com

-

By giving student data to the students themselves, and encouraging active reflection on the relationship between behavior and outcomes, colleges and universities can encourage students to take active responsibility for their education in a way that not only affects their chances of academic success, but also cultivates the kind of mindset that will increase their chances of success in life and career after graduation.

-

If students do not complete the courses they need to graduate, they can’t progress.

The #retention perspective in Learning Analytics: learners succeed by completing courses. Can we think of learning success in other ways? Maybe through other forms of recognition than passing grades?

-

-

epress.lib.uts.edu.au

-

Ethics and privacy in learning analytics

-

- Sep 2017

-

www.ictliteracy.info

-

Develop appropriate measurement and monitoring strategies

Recommendation 4

-

- Aug 2017

-

analytics.jiscinvolve.org

-

This has much in common with a customer relationship management system and facilitates the workflow around interventions as well as various visualisations. It’s unclear how the at risk metric is calculated but a more sophisticated predictive analytics engine might help in this regard.

Have yet to notice much discussion of the relationships between SIS (Student Information Systems), CRM (Customer Relationship Management), ERP (Enterprise Resource Planning), and LMS (Learning Management Systems).

-

- Mar 2017

-

www.newamerica.org

-

The plan should also include a discussion about any possible unintended consequences and steps your institution and its partners (such as third-party vendors) can take to mitigate them.

Need to create a risk management plan associated with the use of predictive analytics. Talking about the risks as an organization is important: that way we can help hold each other accountable for using analytics responsibly.

-

-

blog.blackboard.com

-

we think analytics is just now trying to get past the trough of disillusionment.

-

Analytics isn’t a thing. Analytics help solve problems like retention, student success, operational efficiency, or engagement.

-

Analytics doesn’t solve a problem. Analytics provides data and insight that can be leveraged to solve problems.

-

- Feb 2017

-

files.eric.ed.gov

-

this kind of assessment

Which assessment? Analytics aren't measures. We need to be more forthcoming with faculty about their role in measuring student learning. Such as, http://www.sheeo.org/msc

-

- Dec 2016

-

www.cygresearch.com

-

your own website remains your single greatest advantage in convincing donors to stay loyal or in drawing new supporters to your cause. Do you know what donors are looking for when they land on your site? Are you talking about it?

This underscores the importance of using Google Analytics, as well as website user surveys.

-

- Nov 2016

-

mfeldstein.com

-

Data should extend our senses, not be a substitute for them. Likewise, analytics should augment rather than replace our native sense-making capabilities.

-

- Oct 2016

-

www.businessinsider.com

-

Devices connected to the cloud allow professors to gather data on their students and then determine which ones need the most individual attention and care.

-

-

www.google.com

-

For G Suite users in primary/secondary (K-12) schools, Google does not use any user personal information (or any information associated with a Google Account) to target ads.

In other words, Google does use everyone’s information (Data as New Oil) and can use such things to target ads in Higher Education.

-

- Sep 2016

-

www.sr.ithaka.org

-

Data sharing over open-source platforms can create ambiguous rules about data ownership and publication authorship, or raise concerns about data misuse by others, thus discouraging liberal sharing of data.

Surprising mention of “open-source platforms”, here. Doesn’t sound like these issues are absent from proprietary platforms. Maybe they mean non-institutional platforms (say, social media), where these issues are really pressing. But the wording is quite strange if that is the case.

-

Activities such as time spent on task and discussion board interactions are at the forefront of research.

Really? These aren’t uncontroversial, to say the least. For instance, discussion board interactions often call for careful, mixed-method work with an eye to preventing instructor effect and confirmation bias. “Time on task” is almost a codeword for distinctions between models of learning. Research in cognitive science gives very nuanced value to “time spent on task” while the Malcolm Gladwells of the world usurp some research results. A major insight behind Competency-Based Education is that it can allow for some variance in terms of “time on task”. So it’s kind of surprising that this summary puts those two things to the fore.

-

Research: Student data are used to conduct empirical studies designed primarily to advance knowledge in the field, though with the potential to influence institutional practices and interventions. Application: Student data are used to inform changes in institutional practices, programs, or policies, in order to improve student learning and support. Representation: Student data are used to report on the educational experiences and achievements of students to internal and external audiences, in ways that are more extensive and nuanced than the traditional transcript.

Ha! The Chronicle’s summary framed these categories somewhat differently. Interesting. To me, the “application” part is really about student retention. But maybe that’s a bit of a cynical reading, based on an over-emphasis in the Learning Analytics sphere towards teleological, linear, and insular models of learning. Then, the “representation” part sounds closer to UDL than to learner-driven microcredentials. Both approaches are really interesting and chances are that the report brings them together. Finally, the Chronicle made it sound as though the research implied here were less directed. The mention that it has “the potential to influence institutional practices and interventions” may be strategic, as applied research meant to influence “decision-makers” is more likely to sway them than the type of exploratory research we so badly need.

-

-

www.chronicle.com

-

often private companies whose technologies power the systems universities use for predictive analytics and adaptive courseware

-

the use of data in scholarly research about student learning; the use of data in systems like the admissions process or predictive-analytics programs that colleges use to spot students who should be referred to an academic counselor; and the ways colleges should treat nontraditional transcript data, alternative credentials, and other forms of documentation about students’ activities, such as badges, that recognize them for nonacademic skills.

Useful breakdown. Research, predictive models, and recognition are quite distinct from one another and the approaches to data that they imply are quite different. In a way, the “personalized learning” model at the core of the second topic is close to the Big Data attitude (collect all the things and sense will come through eventually) with corresponding ethical problems. Though projects vary greatly, research has a much more solid base in both ethics and epistemology than the kind of Big Data approach used by technocentric outlets. The part about recognition, though, opens the most interesting door. Microcredentials and badges are a part of a broader picture. The data shared in those cases need not be so comprehensive and learners have a lot of agency in the matter. In fact, when then-Ashoka Charles Tsai interviewed Mozilla executive director Mark Surman about badges, the message was quite clear: badges are a way to rethink education as a learner-driven “create your own path” adventure. The contrast between the three models reveals a lot. From the abstract world of research, to the top-down models of Minority Report-style predictive educating, all the way to a form of heutagogy. Lots to chew on.

-

- Jul 2016

-

hybridpedagogy.org

-

what do we do with that information?

Interestingly enough, a lot of teachers either don’t know that such data might be available or perceive very little value in monitoring learners in such a way. But a lot of this can be negotiated with learners themselves.

-

E-texts could record how much time is spent in textbook study. All such data could be accessed by the LMS or various other applications for use in analytics for faculty and students.”

-

not as a way to monitor and regulate

-

-

hackeducation.com

-

demanded by education policies — for more data

-

more efficient (whatever that means)

-

-

medium.com

-

data being collected about individuals for purposes unknown to these individuals

-

-

campustechnology.com

-

While TAs are intended to help students understand the material, their teaching skills vary and they come at the job with widely different backgrounds.

-

-

www.businessinsider.com

-

which applicants are most likely to matriculate

-

Data collection on students should be considered a joint venture, with all parties — students, parents, instructors, administrators — on the same page about how the information is being used.

-

"We know the day before the course starts which students are highly unlikely to succeed,"

Easier to do with a strict model for success.

-

-

www.educationdive.com

-

there is some disparity and implicit bias

-

-

medium.com

-

improving teaching, not amplifying learning.

Though it’s not exactly the same thing, you could call this “instrumental” or “pragmatic”. Of course, you could have something very practical to amplify learning, and #EdTech is predicated on that idea. But when you do, you make learning so goal-oriented that it shifts its meaning. Very hard to have a “solution” for open-ended learning, though it’s very easy to have tools which can enhance open approaches to learning. Teachers have a tough time and it doesn’t feel so strange to make teachers’ lives easier. Teachers typically don’t make big purchasing decisions but there’s a level of influence from teachers when a “solution” imposes itself. At least, based on the insistence of #BigEdTech on trying to influence teachers (who then pressure administrators to make purchases), one might think that teachers have a say in the matter. If something makes a teaching-related task easier, administrators are likely to perceive the value. Comes down to figures, dollars, expense, expenditures, supplies, HR, budgets… Pedagogy may not even come into play.

-

- Jun 2016

-

musicfordeckchairs.com

-

nothing we did is visible to our analytics systems

If it’s not counted, does it count?

-

-

www.linkedin.com

-

Massively scaling the reach and engagement of LinkedIn by using the network to power the social and identity layers of Microsoft's ecosystem of over one billion customers. Think about things like LinkedIn's graph interwoven throughout Outlook, Calendar, Active Directory, Office, Windows, Skype, Dynamics, Cortana, Bing and more.

The integration of external social/collab data with internal enterprise data could be really powerful, and open up interesting opportunities for analytics.

-

-

www.edsurge.com

-

It shifted its work to faculty-driven initiatives.

DIY, grassroots, bottom-up… but not learner-driven.

-

learning agenda on learning analytics

-

Learning analytics cannot be left to the researchers, IT leadership, the faculty, the provost or any other single sector alone.

-

An executive at a large provider of digital learning tools pushed back against what he saw as Thille’s “complaint about capitalism.”

Why so coy?

R.G. Wilmot Lampros, chief product officer for Aleks, says the underlying ideas, referred to as Knowledge Space Theory, were developed by professors at the University of California at Irvine and are in the public domain. It's "there for anybody to vet," he says. But McGraw-Hill has no more plans to make its analytics algorithms public than Google would for its latest search algorithm.

"I know that there are a few results that our customers have found counterintuitive," Mr. Lampros says, but the company's own analyses of its algebra products have found they are 97 percent accurate in predicting when a student is ready to learn the next topic.

As for Ms. Thille's broader critique, he is unpersuaded. "It's a complaint about capitalism," he says. The original theoretical work behind Aleks was financed by the National Science Foundation, but after that, he says, "it would have been dead without business revenues."

MS. THILLE stops short of decrying capitalism. But she does say that letting the market alone shape the future of learning analytics would be a mistake.

-

a debate over who should control the field of learning analytics

Who Decides?

-

-

www.eschoolnews.com

-

What teachers want in a data dashboard

Though much of it may sound trite and the writeup is somewhat awkward (diverse opinions strung together haphazardly), there’s something which can help us focus on somewhat distinct attitudes towards Learning Analytics. Much of it hinges on what may or may not be measured. One might argue that learning happens outside the measurement parameters.

-

timely

Time-sensitive, mission-critical, just-in-time, realtime, 24/7…

-

Data “was something you would use as an autopsy when everything was over,” she said.

The autopsy/biopsy distinction can indeed be useful here, and can lead to insight, especially if the point isn't which one is better. A biopsy can help prevent something in an individual patient, but it's also a dangerous, potentially life-threatening operation. An autopsy can famously identify a "cause of death" but, more broadly, it has been the way we've learnt a lot about health, not just about individual patients. So, while Teamann frames it as a severe limitation, the "autopsy" side of Learning Analytics could do a lot to take us beyond the individual focus.

-

-

www.teachthought.com

-

While generally misused today, analytics can (theoretically) be used to predict and personalize many facets of teaching & learning, including pace, complexity, content, and more.

-

- May 2016

-

www.insidehighered.com

-

The entirely quantitative methods and variables employed by Academic Analytics -- a corporation intruding upon academic freedom, peer evaluation and shared governance -- hardly capture the range and quality of scholarly inquiry, while utterly ignoring the teaching, service and civic engagement that faculty perform,

-

- Apr 2016

-

googleguacamole.wordpress.com

-

techcrunch.com

-

Researchers have tracked students' emotions while they used Crystal Island, a game-based learning environment, and used that research to predict how students will react in other learning situations.

-

-

allthingsanalytics.com

-

"Fundamentally, if we want to realize the potential of human networks to change how we work, then we need analytics to transform information into insight; otherwise we will be drowning in a sea of content and deafened by a cacophony of voices."

Marie Wallace's perspective on the potential of big data analytics, specifically the analysis of human networks, in the context of creating a smarter workplace.

-

- Mar 2016

-

www.jonbecker.net

-

Ranty Blog Post about Big Data, Learning Analytics, & Higher Ed

-

-

timothyharfield.com

-

The future of learning analytics, a future in which it is not passé, is one in which learning comes before management

-

- Dec 2015

-

mfeldstein.com

-

focus groups where students self-report the effectiveness of the materials are common, particularly among textbook publishers

Paving the way for learning analytics.

-

It’s educators who come up with hypotheses and test them using a large data set.

And we need an ever-larger data set, right?

-

a good example of the kind of insight that big data is completely blind to

Not sure it follows directly, but it's still important to point out.

-

-

mfeldstein.com

-

I will investigate the details on this, including the relevant contractual clauses, when I get the chance.

-

taking a swipe at Knewton

Snap!

-

they are making a bet against the software as a replacement for the teacher and against big data

-

a set of algorithms designed to optimize the commitment of knowledge to long-term memory

-

-

larrycuban.wordpress.com

-

numbers have to be interpreted by those who do the daily work of classroom teaching

-

-

radar.oreilly.com

-

The challenge, of course, is how to balance concerns of the Hawthorne effect with privacy.

-

-

mfeldstein.com

-

Regular readers know that I am a big fan of the standard-in-development.

From 2013

-

-

mfeldstein.com

-

If Knewton were doing more of this, I wouldn’t be as critical.

-

-

hackeducation.com

-

As usual, @AudreyWatters puts things in proper perspective.

-