Planet Wild is a community of nearly 15,000 people who collectively every month fund a new carefully selected project to protect

for - planetary boundary - biodiversity loss - planet wild

A streaming platform shows a banner saying: “Your free trial ends tomorrow. Upgrade now or lose access to your favorite shows.” Instead of just highlighting the benefits of subscribing, it stresses what the user will lose if they don’t act. This creates urgency and pushes people to keep the service, which is a direct use of loss aversion.

Consider the consequences of remaining stuck using language that assumes and hence sustains a state of radical differentiation. Jung describes how the development of consciousness contributed to the corresponding radical differentiation within language:

for - quote - adjacency - Carl Jung - consciousness - language - dualism - loss of holism

Never before has there been so little sea ice in an Arctic winter as this year. The taz interviewed climate researcher Raphael Köhler about it. Arctic sea ice is currently declining by about 2.5% per decade. The loss of sea ice amplifies the already rapid warming of the Arctic, which alters the jet stream and thereby also contributes to the increase in extreme weather events in Europe. Unlike in the past, Antarctic sea ice is now also declining. The reasons for this are not yet understood. https://taz.de/Physiker-ueber-Temperaturen-in-der-Arktis/!6079629/

losing synaptic density; they're losing synapses, they're not brain cells

for - addiction - graph - years of use vs loss of synaptic connections

The French Senate has amended a framework law on agriculture so that only the interests of the agro-industry are taken into account, with serious consequences for the protection of biodiversity. A radical rollback of regulations threatens organic farming as well as the institutions that regulate agriculture on a scientific basis. Meanwhile, more and more small farms are losing their livelihoods. In this opinion piece, a deputy from La France Insoumise summarizes the current situation. https://www.liberation.fr/idees-et-debats/tribunes/lagroecologie-balayee-par-lultraliberalisme-obscurantiste-20250223_YV6DZBVDZRDPNHDXS33U2WCZLA/

via @breadandcircuses@climatejustice.social

French climatologist Robert Vautard notes that temperatures in 2024 deviated from expectations even more than those in 2023. For two years, he says, the planet has been going through an additional spike of fever. The reasons are not yet clear; the reduced reflection of solar radiation caused by the decline of Arctic and Antarctic sea ice probably plays a role. https://www.liberation.fr/environnement/climat/rechauffement-pour-le-copresident-du-giec-2024-a-ete-encore-plus-hors-norme-que-2023-et-cela-pose-beaucoup-de-questions-20241231_Q4A5ZQBEBRHWREZIY3UYGSFEFM/?redirected=1

Science study on the decline of Earth's albedo: https://www.science.org/doi/10.1126/science.adq7280

The EU Parliament has approved a weakening of the most important biodiversity-protection provisions that serve as conditions for agricultural subsidies. Greenpeace calls the decision shocking. According to the German Environment Agency (Umweltbundesamt), 13% of the greenhouse gases emitted in Germany come from agriculture. https://taz.de/Rollback-bei-EU-Agrarsubventionen/!6003674/

mutualizing forms of governance and ownership, can also have extraordinary effects on the amount of needed energy and materials. For example, in the context of shared transport, one shared car can replace 9 to 13 private cars, without any loss of mobility.

for - stats - climate crisis - example - positive impacts of mutualisation / sharing - car sharing - 1 Shared car can replace 9 to 13 cars without loss of mobility - from Substack article - The Cosmo-Local Plan for our Next Civilization - Michel Bauwens - 2024, Dec 20

In two reports published in quick succession, the “Nexus Report” and the “Transformative Change Report”, the global biodiversity council IPBES calls for a radical transformation of the existing economic system in order to avoid crossing tipping points and to tackle the interconnected ecological and social crises. https://www.lapresse.ca/actualites/environnement/2024-12-18/crise-de-la-biodiversite/un-rapport-choc-propose-de-reformer-le-capitalisme.php

On the Transformative Change Report: https://www.ipbes.net/transformative-change/media-release

On the Nexus Report: https://www.ipbes.net/nexus/media-release

A new study systematically quantifies how many fewer frost days countries and cities in the Northern Hemisphere had in 2014-2023 because of global heating. Austria now has 13, and Vienna 22, fewer frost days than in a world without fossil emissions. These changes have serious consequences for ecosystems, the spread of diseases, tourism, and quality of life. https://www.derstandard.at/story/3000000249512/der-klimawandel-kostete-wien-22-frosttage-im-winter

people from a conservative perspective maybe can uh blame it on the loss of the Sacred

for - New media landscape - dark forest - media communities - right wing media blames it on loss of the sacred - from YouTube - situational assessment - Luigi Mangione - The Stoa - Deep Humanity - also sees loss of a living principle of the sacred as a major factor in the polycrisis - but is neither right, left, nor religious

comment - This comment is itself perspectival, as any comment is. - Deep Humanity does not consider itself right, left, or even religious, but it also sees the absence of a living principle of the sacred as playing a major role in our current polycrisis

However, the speed at which this is currently happening is breathtaking and lies outside this norm. It is, and this cannot be stressed often enough, at least ten to a hundred times higher than in the time before humans dominated the world, and it is therefore a clear indication that we are the cause of this dying.

As with the climate, the speed of anthropogenic change brings a multiplication of risks.

we made this thing called the Clarify for people with high-frequency hearing loss

for - BEing journey - consumer electronic device - the Clarify - sensory substitution - auditory to vibration compensation - for high frequency hearing loss in older people - Neosensory - David Eagleman

A new study confirms that the main cause of the ever-faster rise in atmospheric methane is the activity of microorganisms, which increases with global heating. This makes it a feedback mechanism through which global heating amplifies itself. https://taz.de/Zu-viel-Methan-in-der-Atmosphaere/!6045201/

Study: https://www.pnas.org/doi/10.1073/pnas.2411212121

Previous studies: https://www.nature.com/articles/s41558-023-01629-0, https://www.nature.com/articles/s41558-022-01296-7.epdf?sharing_token=CDMa5-ti34UNBqv3kfuCB9RgN0jAjWel9jnR3ZoTv0NZRKXEI-7kyXEEvNI7duu65JLcZpmhGxWTeSfYcMCqxqYk5nUrdR60izmjToMNw56RgBqIcn3JXKxSjx13vmB9ZYndGTUMt-52Vs7HT_T6K9Oth4QFRyP51eOpz8pV8l65HFDo2VSfQ6xDXklMtmvt-HGwltAINb_2xgmtAR-V4g%3D%3D&tracking_referrer=taz.de

The current global coral bleaching is already the fourth in 25 years. This summer, temperatures across a large part of the tropical seas were 3 °C above average. In an interview with La Repubblica, coral expert Roberto Danovaro explains that a quarter of the world's coral stocks have already been lost. Coral reefs, the ecosystems with the greatest biodiversity, are particularly vulnerable to global heating. https://www.repubblica.it/green-and-blue/dossier/negazionisti-climatici/2024/10/07/news/barriera_corallina_sbiancamento_crisi_clima_roberto_danovaro-423531902/

Shortly before COP16 on biodiversity, the EU is moving ever more clearly away from its previous policy of protecting biodiversity. It is making concessions to conventional farmers, to far-right parties, and to conservative parties that increasingly act against environmental protection. The Green Deal is being replaced by the drive to make companies more competitive globally and to grow the economy. Ajit Niranjan reports comprehensively on these developments and points to important milestones in the history of biodiversity agreements. https://www.theguardian.com/environment/2024/oct/09/europe-eu-green-deal-backsliding-nature-biodiversity-farmers-far-right-cop16-aoe

The Living Planet Index, published shortly before COP16 on biodiversity, shows the extent of biodiversity loss over the past 50 years, even though there are serious doubts about the statistical methods it uses. According to the index, vertebrate populations have declined by 73%, most sharply in Latin America and the Caribbean. The most important cause is land-use change. https://www.theguardian.com/environment/2024/oct/10/collapsing-wildlife-populations-points-no-return-living-planet-report-wwf-zsl-warns

for - The projected timing of climate departure from recent variability - Camilo Mora et al. - 6th mass extinction - biodiversity loss - to - climate departure map - of major cities around the world - 2013

Summary - This is an extremely important paper with a startling conclusion about the magnitude of the social and economic impacts of the biodiversity disruption coming down the pipeline - It is likely that very few governments are prepared to adapt to these levels of ecosystemic disruption - Climate departure is defined as an index of the year when: - The projected mean climate of a given location moves to a state that is - continuously outside the bounds of historical variability - Climate departure is projected to happen regardless of how aggressive our climate mitigation pathway is - The business-as-usual (BAU) scenario in the study is RCP8.5 and leads to a global mean climate departure year of 2047 (± 14 years s.d.) while - The more aggressive RCP4.5 scenario (which we are currently far from) leads to a global mean climate departure year of 2069 (± 18 years s.d.) - So regardless of how aggressively we mitigate, we cannot avoid climate departure. - What consequences will this have on economies around the world? How will we adapt? - The world is not prepared for the vast ecosystem changes, which will reshape our entire economy all around the globe.

from - Nature publication - https://hyp.is/3wZrokX9Ee-XrSvMGWEN2g/www.nature.com/articles/nature12540

to - climate departure map - of major cities around the globe - 2013 - https://hyp.is/tV1UOFsKEe-HFQ-jL-6-cw/www.hawaii.edu/news/2013/10/09/study-in-nature-reveals-urgent-new-time-frame-for-climate-change/

Heat waves in the Mediterranean, with water temperatures above 30 °C, have caused mussels of one of the best-known species to die off almost completely along some coasts. Both wild and farmed specimens are affected, and with them an important source of food and income for fisheries. Mass die-offs due to thermal anomalies have now also been described for 50 other species. https://www.greenandblue.it/2024/09/17/news/moria_di_mitili_nell_adriatico_a_causa_del_caldo-423504229/

Data suggest that the sea-ice area around Antarctica will shrink even further this Antarctic winter than in 2023. On 7 September, the ice-covered area was smaller than a year earlier. Researchers see this as a sign that the entire Antarctic system has shifted into a different state, because the higher air temperatures are now also affecting the ocean. The consequences include changes in currents and faster melting of the Antarctic glaciers. https://www.theguardian.com/world/article/2024/sep/10/two-incredible-extreme-events-antarctic-sea-ice-on-cusp-of-record-winter-low-for-second-year-running

Extreme weather events resulting from global heating have damaged many bridges in the US. One in four of the 80,000 steel bridges is at risk of collapsing by 2050. Above all, however, heat-related erosion of the soil threatens the stability of the piers. New standards for climate-resilient bridge construction add to the already enormous investment needed to renew US infrastructure. In Colorado alone, four to five times the currently available funds would likely be needed. https://www.nytimes.com/2024/09/02/climate/climate-change-bridges.html

Yerba Maté tea (non-smoked) as a tool for fasting to reduce the feeling of hunger?

Also useful for weight loss, as it converts white fat (adipose) cells into brown and beige adipose cells, which burn energy to generate heat (thermogenesis); brown fat is stored around the neck and clavicle.

The loss of the tail is inferred to have occurred around 25 million years ago when the hominoid lineage diverged from the ancient Old World monkeys (Fig. 1a), leaving only 3–5 caudal vertebrae to form the coccyx, or tailbone, in modern humans [14].

for - human evolution - loss of tail approximately 25 million years ago - identified genomic mechanism leading to loss of tail and human bipedalism - human ancestors - up to 60 million years ago

The loss of the Amazon forest impacts (micro)climate, water supply, carbon storage and soil integrity. Deforestation affects water supplies in Brazilian cities and neighboring countries. It also impacts the actual farms driving deforestation, causing water scarcity and soil degradation. Further deforestation may also impact water supply globally.

for - question - economic impact of loss of Amazon Rainforest

question - economic impact of loss of Amazon Rainforest - This study does not seem to consider the impacts of the Amazon rainforest breaching its tipping point. What would the economic impact of such a large-scale event be?

A review from the mid-1990s pulled together the existing experiments on this issue and reported that, in 22 experiments using test questions that demanded students recall information (for instance, “What years in U.S. history are often called the Gilded Age?”), learning loss was about 28 percent. Retention was even better when questions required recognizing the correct answer, as on a multiple-choice test. For such tests, the average learning loss across 52 experiments was just 16 percent.

Tests taken a year later with multiple-choice answers showed only 16% learning loss.

This decline in agricultural production, coupled with a sheer reduction in wages due to a lack of labor and regulatory standards in the agreement, created over 1.3 million lost jobs in the Mexican agricultural sector alone, leading to an unprecedented level of immigration into the United States

for - quote - Mexico - NAFTA job loss - stats - Mexico - NAFTA job loss

quote - Mexico - NAFTA job loss - This decline in agricultural production, - coupled with a sheer reduction in wages due to a lack of labor and regulatory standards in the agreement, - created over 1.3 million lost jobs in the Mexican agricultural sector alone, - leading to an unprecedented level of immigration into the United States

stats - Mexico - NAFTA job loss - NAFTA cost Mexico over 1.3 million jobs in the agricultural sector alone, leading to mass migration to the US

In 2023, more Antarctic sea ice melted than ever before since records began. It covered 2 million square kilometres less than the long-term average, equivalent to four times the surface area of France. As a new study by the British Antarctic Survey shows, melting on this scale has become considerably more likely because of global heating. https://www.liberation.fr/environnement/climat/le-record-de-fonte-de-la-banquise-en-antarctique-un-evenement-au-risque-multiplie-par-quatre-par-le-rechauffement-climatique-20240520_LVEG42DUB5BONPRXYZDTGWQJTQ/

A new, foundational study on climate reparations finds that the largest fossil fuel companies owe at least $209 billion per year in reparations to the communities they have particularly harmed. Damages such as loss of human life and destruction of biodiversity are not included in this figure. https://www.theguardian.com/environment/2023/may/19/fossil-fuel-firms-owe-climate-reparations-of-209bn-a-year-says-study

Study: Time to pay the piper: Fossil fuel companies’ reparations for climate damages https://www.cell.com/one-earth/fulltext/S2590-3322(23)00198-7

The outgoing chair of the G77 group, Pedro Pedroso, calls on industrialized countries to finally align themselves with the Paris goals. Besides abandoning the planned fossil fuel expansion, this requires financing renewable energy in the Global South. The summarizing Guardian article includes infographics on the planned increase in oil and gas production and in US LNG exports. https://www.theguardian.com/environment/2024/jan/19/cop28-fossil-fuels-climate-deal-pedro-pedroso-us-uk-canada-pollution

A group of NGOs has drawn up a proposal for a Climate Damages Tax, which would be levied on oil and gas companies according to the CO2 emissions they cause. If the tax started in OECD countries at $5 per tonne of CO2 and rose by a further $5 each year, around $900 billion would be raised by 2030, mainly for the Loss and Damage Fund agreed at COP28.

Report: https://www.greenpeace.fr/wp-content/uploads/2024/03/CDT_guide_2024_embargoed_version.pdf

At a donor conference, Pakistan received pledges of around USD 9 billion for reconstruction after last year's floods. The Pakistani prime minister pointed out that the international financial system is catastrophically ill-equipped to deal with loss and damage from the climate crisis.

Extreme rain has caused at least 135 deaths in Pakistan and Afghanistan and destroyed large areas of cultivated land. It was preceded by a long period of unusual drought. The rainfall was caused by the same weather system that shortly before had caused flooding in the United Arab Emirates and Oman.

Summary report from the EU on the consequences of global heating in Europe last year. Europe is warming faster than any other continent. People in southern Europe were exposed to extreme heat for more than 100 days. 2022 was the driest year in recorded weather history, and it had by far the hottest summer. https://www.theguardian.com/environment/2023/apr/20/frightening-record-busting-heat-and-drought-hit-europe-in-2022

So last move (2 years ago) my Kiddo (chronic mental and medical high time demand) dumped my cards and I am still trying to sort them back. As of now I am using hair ties to make sure they don't get "accidentally" dumped again. If they do, it will have to be done with some forethought.

Example of someone using hair ties and smaller internal boxes to prevent zettelkasten cards from being dumped out due to child mischief.

via: https://www.reddit.com/r/antinet/comments/1bv6sxy/moving_again_and_a_tip_on_transporting/

Biodiversity loss and deforestation are directly linked to the rise of infectious diseases, with 1/3 of zoonotic diseases attributed to these factors.

Such losses extend to wetland areas; more than 85% of the wetlands present in 1700 had been lost by 2000, and loss of wetlands is currently 3 times faster than forest loss.

Interview with Sea Shepherd leader Peter Hammarstedt. Industrial fishing is an example of the depletion of the planet's resources driven by the pursuit of profit. Sea Shepherd's methods show that radical activism is effective. Among other things, the interview reveals that the Mediterranean now has only 10% of its original fish stocks, and that krill is being fished in Antarctic waters for dietary supplements on a scale that poses a further threat to biodiversity. https://www.derstandard.de/story/3000000210873/kapitaen-und-aktivist-wenn-sie-thunfisch-essen-beteiligen-sie-sich-am-toeten-der-haie

Der Standard summarizes several studies on the decline of snow cover due to global heating in the Alps and in other mountain regions of the world.

Katrin Böhning-Gaese.

Long interview with Katrin Böhning-Gaese on biodiversity loss and her book Vom Verschwinden der Arten (On the Disappearance of Species). Basic information on the topic, especially on the threat to biodiversity from intensive agriculture and meat-based diets. https://www.derstandard.de/story/3000000212745/biologin-wir-haben-ein-mass-an-perfektion-erreicht-die-keinem-lebewesen-noch-etwas-goennt

Once you’re aware of the suitcase/handle problem, you’ll see it everywhere. People glom onto words and stories that are often just stand-ins for real action and meaning. Advertisers look for words that imply a product’s value and use that as a substitute for value itself. Companies constantly tell us about their commitment to excellence, implying that this means they will make only top-shelf products. Words like quality and excellence are misapplied so relentlessly that they border on meaningless.

“Story Is King” differentiated us, we thought, not just because we said it but also because we believed it and acted accordingly. As I talked to more people in the industry and learned more about other studios, however, I found that everyone repeated some version of this mantra: it didn’t matter whether they were making a genuine work of art or complete dreck, they all said that story is the most important thing. This was a reminder of something that sounds obvious but isn’t: Merely repeating ideas means nothing. You must act (and think) accordingly. Parroting the phrase “Story Is King” at Pixar didn’t help the inexperienced directors on Toy Story 2 one bit. What I’m saying is that this guiding principle, while simply stated and easily repeated, didn’t protect us from things going wrong. In fact, it gave us false assurance that things would be okay.

Having a good catchphrase for guidance can become a useless trap if it is repeated so frequently that it loses meaning. Guiding principles need to be revisited, actively worked on, and embedded in daily activities and culture.

examples: - Google and "don't be evil" - Pixar (and many others) and "story is king" (cross-reference Ed Catmull in Creativity, Inc.) - Pixar and "trust the process" (ibid)

In its report on 2023, the World Meteorological Organization (WMO) brings together data from various services and reaches dramatic conclusions about how temperatures have developed at the Earth's surface, both overall and especially at the surface of the oceans. At the same time, a study by BU Wien finds that the emissions projections of many countries, including large ones, are clearly too optimistic. https://www.derstandard.de/story/3000000212370/weltwetterorganisation-zeichnet-duesteres-bild-vom-klima-des-letzten-jahres

Under a high-emissions scenario, one in eight of today's ski destinations worldwide will have no snow at all by the end of this century. Global heating will also have dramatic consequences for many other ski resorts. A new study quantifies these impacts using detailed regional modelling. https://www.repubblica.it/green-and-blue/2024/03/13/news/neve_sci_impianti_chiusi-422305098/

Interview with Mia Mottley, Prime Minister of Barbados and the leading champion of the Bridgetown Initiative on climate finance for the Global South, which she launched. Mottley addresses the debt crisis many countries face after the pandemic and calls for what she describes as unorthodox CO2 taxes, e.g. levies on fossil fuel companies and airlines. Those currently in power, she says, are preventing effective climate finance, although there is progress, e.g. at financial institutions. What is not recognized, she argues, is that financial transfers are needed above all for a climate-just transformation. https://taz.de/Barbados-Premier-ueber-Klimakrise/!5994100/

The pace at which ocean surface temperatures are rising is shocking even to experienced researchers. It is particularly high in the North Atlantic, whose warming could lead to more severe hurricanes. But the South Atlantic, and with it Antarctic sea ice, is also affected. The causes are unclear; the El Niño phenomenon alone is not enough to explain it. Feedback mechanisms could play a role. The New York Times interviewed several scientists.

https://www.nytimes.com/2024/02/27/climate/scientists-are-freaking-out-about-ocean-temperatures.html

Greenland is heating 5 to 7 times faster than the planetary average, in some places by 2.7 °C in 10 years. Sea ice is disappearing faster than ever; at the same time, methane emissions from thawing permafrost are accelerating. La Repubblica reports in detail on the annual conference of the Italian Arctic research programme. https://www.repubblica.it/green-and-blue/2024/02/23/news/artico_ghiaccio_marino_riduzione_record-422193100/

Press release on the conference: https://www.cnr.it/it/nota-stampa/n-12540/

Able to see lots of cards at once.

ZK practice inspired by Ahrens, but had practice based on Umberto Eco's book before that.

Broad subjects for his Ph.D. studies: Ecology in architecture / environmentalism

3 parts: - zk main cards - bibliography / keywords - chronological section (history of ecology)

Four "drawers" and space for blank cards and supplies. Built on wheels to allow movement. Has a foldable cover.

He keeps an analog practice because he worries about companies shutting down and taking his notes with them.

Watched TheNoPoet's "How I use my analog Zettelkasten".

one of the reasons that the New York Public Library had to close its public catalog was that the public was destroying it. The Hetty Green cards disappeared. Someone calling himself Cosmos was periodically making off with all the cards for Mein Kampf. Cards for two Dante manuscripts were stolen: not the manuscripts, the cards for the manuscripts.

The huge frozen card catalog of the Library of Congress currently suffers from alarming levels of public trauma: like the movie trope in which the private eye tears a page from a phone book at a public phone rather than bothering to copy down an address and a phone number, library visitors (the heedless, the crazy) have, especially since the late eighties, been increasingly capable of tearing out the card referring to a book they want.

Radical students destroyed roughly a hundred thousand cards from the catalog at the University of Illinois in the sixties. Berkeley’s library staff was told to keep watch over the university’s card catalogs during the antiwar turmoil there. Someone reportedly poured ink on the Henry Cabot Lodge cards at Stanford

They do not grow mold, as the card catalog of the Engineering Library of the University of Toronto once did, following water damage.

(The more modification a library demands of each MARC record, the more it costs.) In Harvard’s case she typically accepts the record as is, even when the original card bears additional subject headings or enriching notes of various kinds.

Information loss in digitizing catalog cards...

An image of the front of every card for Widener thus now exists on microfiche, available to users in a room off the lobby. (Any information on the backs of the cards, and many notes do carry over, was not photographed.)

Curtis mentioned one example of information that he found: “One of the first drawers of the author-title catalog I looked through held a card for Benjamin Smith Barton’s ‘Elements of Botany’ [the 1804 edition]. The card indicated that UVA’s copy of this book was signed by Joseph C. Cabell, who was instrumental in the founding of the University.”Curtis checked to see if the Virgo entry included this detail, but he found no record of the book at all. “I thought perhaps that was because the book had been lost, and the Virgo entry deleted, but just in case, I emailed David Whitesell [curator in the Albert and Shirley Small Special Collections Library] and asked him if this signed copy of Barton’s ‘Elements of Botany’ was on the shelves over there. Indeed it was.

Digitization efforts in card collections may result in the loss of or damage to cards or, in the case of library card catalogs, the loss of the materials the original cards represented.

for - 2nd Trump term - 2nd Trump presidency - 2024 U.S. election - existential threat for climate crisis - Title:Trump 2.0: The climate cannot survive another Trump term - Author: Michael Mann - Date: Nov 5, 2023

Summary - Michael Mann repeats a warning similar to the one he made before the 2020 U.S. election. Now the urgency is even greater. - Trump's fossil-fuel-friendly "Project 2025" plan would be a victory for the fossil fuel industry. It would - defund renewable energy research and rollout, - decimate the EPA, - encourage drilling, and - defund the Loss and Damage Fund, so vital for bringing the rest of the world on board for rapid decarbonization. - Whoever wins the next U.S. election will be leading the U.S. in the most critical period of human history, because at the current rate of burning fossil fuels our remaining carbon budget stands at 5 years and 172 days. Most of this time window overlaps with the next U.S. presidential term. - While Mann points out that the Inflation Reduction Act only gets us to a 40% emissions reduction by 2030 rather than the 60% called for under the Paris Climate Agreement, it is still a big step in the right direction. - Trump would most definitely take a giant step in the wrong direction. - So Trump could single-handedly set human civilization on a course of irreversible global devastation.

The GOP has threatened to weaponize a potential second Trump term

for - 2nd Trump term - regressive climate policy

other nations are wary of what a second Trump presidency could portend,

for - 2nd Trump presidency - elimination of loss and damage fund - impact on global decarbonization effort

That’s what the “loss and damage” agreement does,

for - loss and damage fund - global impact

Because of global heating, the Greenland ice sheet is losing 30 million tonnes of ice per hour, 20% more than previously assumed. Some researchers fear that this makes the risk of an AMOC collapse greater than previously thought. The ice loss is also relevant for calculating the Earth's energy imbalance caused by greenhouse gas emissions. https://www.theguardian.com/environment/2024/jan/17/greenland-losing-30m-tonnes-of-ice-an-hour-study-reveals

20% of the snow mass in the Northern Hemisphere covers areas where winter temperatures are mostly above −8 °C. In these areas, snow cover has already declined significantly in recent decades. For their future, every tenth of a degree of heating, more or less, is decisive. The loss of snow cover leads to water-supply problems, for example in the Danube and Mississippi basins. https://www.liberation.fr/environnement/y-aura-t-il-encore-de-la-neige-en-2050-20240117_UPOQVWROIZEBVDQRD5JBRA4EH4/

More on the same study: https://hypothes.is/search?q=tag%3A%22Evidence%20of%20human%20influence%20on%20Northern%20Hemisphere%20snow%20loss%22

Between 1981 and 2020, snow cover in some regions of the Northern Hemisphere, such as the Alps, declined by 10 to 20% per decade. A study demonstrates for the first time that this process can be attributed to anthropogenic global heating. The process will continue and may lead to drought in areas where rivers have so far been fed largely by snow. https://www.derstandard.at/story/3000000202524/fehlender-schnee-geht-auf-menschengemachten-klimawandel-zurueck

FireKing File Cabinet, 1-Hour Fire Protection, 6-Drawer, Small Document Size, 31" Deep https://www.filing.com/FireKing-Card-Check-Note-Cabinet-6-Drawer-p/6-2552-c.htm

A modern index card catalog filing solution with locks and fireproofing, offered by FireKing for $6,218.00 with shipment in 2-4 weeks. Six drawers with three sections each. Weighs 860 lbs.

At 0.0072" per average card, with 25 15/16" of filing space per section across 18 sections, this should hold about 64,843 index cards.
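The capacity figure is simple arithmetic, sketched below in Python with exact fractions so the 15/16" is not distorted by floating-point rounding. The function names are hypothetical, and both assume cards pack with no slack, dividers, or compression. The second function shows a small caveat: since a card cannot straddle two sections, rounding down per section gives a slightly lower number than pooling all 18 sections.

```python
import math
from fractions import Fraction

# Figures from the FireKing listing and the note above.
CARD_THICKNESS = Fraction(72, 10_000)   # 0.0072 in per average card
SECTION_DEPTH = 25 + Fraction(15, 16)   # 25 15/16 in of filing space per section
SECTIONS = 18                           # 6 drawers x 3 sections

def pooled_capacity(depth, thickness, sections):
    """Treat all sections as one long run, then round down once."""
    return math.floor(depth * sections / thickness)

def per_section_capacity(depth, thickness, sections):
    """Round down within each section first, since a card cannot straddle two."""
    return math.floor(depth / thickness) * sections

print(pooled_capacity(SECTION_DEPTH, CARD_THICKNESS, SECTIONS))       # 64843
print(per_section_capacity(SECTION_DEPTH, CARD_THICKNESS, SECTIONS))  # 64836
```

Either way, real capacity will be lower once guide cards and finger room are accounted for.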

Summary article on studies of climate impacts in Antarctica and on related events. In 2023, developments became visible that had not been expected until much later this century. The enormous and possibly permanent loss of sea ice is just as relevant here as the growing instability of the West Antarctic and possibly now also the East Antarctic ice sheet. https://www.theguardian.com/world/2023/dec/31/red-alert-in-antarctica-the-year-rapid-dramatic-change-hit-climate-scientists-like-a-punch-in-the-guts

A current system in the Southern Ocean, comparable to the Gulf Stream in the North Atlantic, has lost 30% of its intensity since the 1990s. The consequences of this development could be dramatic, among other things for the food supply of marine life and for sea-level rise. https://www.theguardian.com/science/2023/may/25/slowing-ocean-current-caused-by-melting-antarctic-ice-could-have-drastic-climate-impact-study-says

One of the benefits of having a separate working bibliography for a project is that it provides a backup copy in case one loses or misplaces one's original bibliography note cards. (p. 50)

A new French study examines one consequence of the dramatic decline in insects. Lacking pollinating insects, plants self-fertilize. As a result, subsequent generations are smaller and produce less nectar. This creates a feedback loop, because even fewer insects then survive. In the interview with Libération, the biologist explains this effect as an example of an uncontrollable and irreversible development triggered by humans. https://www.liberation.fr/environnement/biodiversite/pollinisation-et-disparition-des-insectes-nous-sommes-dans-une-spirale-incontrolable-20231221_CV6BZLN2WJANLN2A2EFUGCJLZI/

https://www.amazon.com/JUNDUN-Dividers-Collapsible-Fireproof-4x6-Inch/dp/B0BNHZ3JTR/?th=1

A waterproof and fireproof card index box, potentially useful for travel.

Germany has agreed to pay 100 million euros into the fund for climate damages. That is about one-thousandth of its planned military spending. https://taz.de/Deutsche-Zusagen-zur-Klimafinanzierung/!5973914/

COP28 President Sultan Al Jaber rejected the suspicion that he was using climate talks to prepare deals for Adnoc, the state oil company he heads. Activists did not take his statements seriously. "The leaked information must be the final nail in the coffin of the long-debunked idea that the fossil fuel industry could play a role in solving the crisis it created" (Alice Harrison). https://www.theguardian.com/environment/2023/nov/29/cop28-president-denies-on-eve-of-summit-he-abused-his-position-to-sign-oil-deals

At the start of COP28, the establishment of a loss and damage fund was adopted in line with the rules agreed shortly beforehand. Germany and the UAE are the first contributors, and other countries are following. The conference can thus open with a success story. Further funding, however, is unclear, and the expected amounts fall far short of what is needed. https://www.theguardian.com/environment/2023/nov/30/agreement-on-loss-and-damage-deal-expected-on-first-day-of-cop28-talks

Rich in manuscripts and correspondence for Arendt’s productive years as a writer and lecturer after World War II, the papers are sparse before the mid-1940s because of Arendt’s forced departure from Nazi Germany in 1933 and her escape from occupied France in 1941.

To look at the world in wonder, and to stay with that sense of wonder without jumping straight past it, has become almost impossible for someone taking science seriously.

Der Standard uses ten examples to show how important the Montreal/Kunming biodiversity agreement is and how difficult it is to put into practice. https://www.derstandard.at/story/3000000192855/vom-globalen-artensterben-und-jenen-die-es-bekaempfen

Short article on the fundamentals of climate accountability shortly before COP28. One of the authors, the recently deceased Saleemul Huq, was an important campaigner against global climate injustice. The UN transitional committee only reached a contested agreement on payments into the loss and damage fund in November. The UK and the US (the largest historical polluter) reject climate reparations on principle. https://www.theguardian.com/commentisfree/2023/nov/01/climate-destruction-rich-countries-cop28

Shortly before COP28, an agreement in principle was reached on implementing the loss and damage fund that had been decided at the previous COP. It will initially be administered by the World Bank. The size of the contributions is not yet clear. Activists reacted with disappointment. https://www.theguardian.com/environment/2023/nov/05/countries-agree-key-measures-to-fund-most-vulnerable-to-climate-breakdown

in every case suggesting the failure of officially sanctioned structures to requite loss, restore order, address human feeling, and commemorate the dead.

he challenges the state by showing his own personal emotion rather than ritual in grieving for his mum

He defied the absolute moral clarity of official narratives, absorbing the rhetoric of virtue into an account that privileged loss and emotion

It explained how her life had ended, virtuously, and it enabled her family to include her in their application for state honors along with the other relatives who died in 1861

authenticity of his grief through references to tears, physical pain, wailing, and other uncontrolled responses, which contrast neatly with hierarchical and orderly commemorative arrangements within established ritual settings.

Extremely high water temperatures killed more than 100 river dolphins in the region of Lake Tefé in the Amazon. https://taz.de/Tote-Delfine-im-Amazonas/!5964353/

The sea ice around Antarctica reaches its greatest extent in September. The ice-covered area there has never been as small as it was this September. Compared with the 1981-2010 period, it shrank by roughly the area of Alaska. This development is also worrying because it only began in 2016, and Antarctic sea ice as a whole has apparently shifted into a different state. https://www.nytimes.com/2023/10/04/climate/antarctic-sea-ice-record-low.html

MONDAY, NOVEMBER 2, 1942

Dear Kitty,

Bep stayed with us Friday evening. It was fun, but she didn't sleep very well because she'd drunk some wine. For the rest, there's nothing special to report. I had an awful headache yesterday and went to bed early. Margot's being exasperating again.

This morning I began sorting out an index card file from the office, because it'd fallen over and gotten all mixed up. Before long I was going nuts. I asked Margot and Peter to help, but they were too lazy, so I put it away. I'm not crazy enough to do it all by myself!

Anne Frank

In a diary entry dated Monday, November 2, 1942, which includes a postscript about the "important news that [she's] probably going to get [her] period soon," Anne Frank mentions spending some time sorting out an index card file. Presumably it had been used for business purposes, as she mentions that it came from the office. Given that it had "fallen over and gotten all mixed up," it presumably didn't use a card rod to hold the cards in place. It must have been a fairly big task: she asked two people for help and, not getting it, abandoned the task because, as she wrote, "I'm not crazy enough to do it all by myself!"

Climate change and habitat loss threaten European bumblebee species. In pessimistic scenarios, up to 75% of species are at risk over the next 40-60 years. Bumblebees play a crucial role in pollinating 90% of wild plants and many crops. https://www.repubblica.it/green-and-blue/2023/09/14/news/bombi_europa_insetti_declino_specie-414449398/

In the Amazon and other regions under threat, destroying biodiversity will reduce the reservoir of apparently redundant or rare species. Among these may be those able to flourish and sustain the ecosystem when the next perturbation occurs.

found via:

Niklas Luhmann rejected out of town teaching positions for fear that his hard copy / analog zettelkasten might get destroyed in the moving process 🧵

— Bob Doto (@thehighpony) August 19, 2022

"He rejected a number of other universities' interests in hiring him...at an early stage, arguing that he couldn't risk taking his Zettelkasten with him in the event of an accident to lose by car, ship, train or plane." https://t.co/SmK2gLJpQ0

— Bob Doto (@thehighpony) August 19, 2022

The reference is ostensibly in this text, but I may need to hunt it down.

Bob confirmed that it was Luhmann Handbuch: Leben, Werk, Wirkung, 2012

As someone who lost multiple notebooks to water (I love typhoon season), I will actively refute the claim that an analog zettelkasten is more secure than a digital one! Both have weaknesses, both die to water. The distinction comes from how much water is necessary for them to die.

—Halleyscomet08 on 2023-07-07 3:05 AM at https://discord.com/channels/686053708261228577/979886299785863178/1126816131659878491

This September, the sea ice around Antarctica covered less ocean area than in any September on record. It reaches its maximum extent in September. This year, that maximum was 1.75 million square kilometers below the long-term average and one million square kilometers below the previous lowest September maximum. In February, a record was also set for the minimum extent of Antarctic sea ice. Whether and how this development is linked to global heating is still unclear. The top 300 m of the ocean around Antarctica are significantly warmer than they used to be. https://www.theguardian.com/world/2023/sep/26/antarctic-sea-ice-shrinks-to-lowest-annual-maximum-level-on-record-data-shows

New York Times report on the Arctic expedition of the German research vessel Polarstern. The ship is at the North Pole at roughly the time when the sea ice reaches its minimum extent. Since 1980, the ice has declined by about 13% per decade. Includes quotes from Antje Boetius on the past and expected transformation of the Arctic.

https://www.nytimes.com/2023/09/22/climate/arctic-sea-ice-minimum.html

A widely publicized study published last year by researchers at the University of Northern Arizona analyzed satellite images taken between 1985 and 2019. They show that large parts of the boreal forest have “browned” (i.e., died) in the south and greened with trees and shrubs in the north. If this shift, long hypothesized as a future outcome of warming, is already underway, the effects will be profound, transforming natural habitats, animal migration and human settlements.

Coastal ecosystems can adapt to rapid sea-level rise only to a limited extent. With 3° of warming, more than 90% of mangrove forests, coral reefs, and tidal marshes would fail to keep pace with rapidly rising seas and would thus lose their ability to sequester CO2, according to a new study. https://www.liberation.fr/environnement/biodiversite/rechauffement-climatique-les-ecosystemes-cotiers-risquent-detre-noyes-par-la-montee-des-eaux-20230830_OHOQ4F5XRRA4RCJLRZEWHTJYRE/

near-term forecasts of this event were good, albeit underestimating the magnitude of the maximum temperatures.

An unprecedented heatwave occurred in the Pacific Northwest (PNW) from ~25 June to 2 July 2021, over lands colonially named British Columbia (BC) and Alberta (AB) in Canada, Washington (WA), and Oregon (OR) in the United States.

None of the 28 streams Cunningham and his colleagues studied hit summertime highs warmer than 25.9 C, the point where warming water can become lethal. But in four rivers, temperatures climbed past 20.3 C, the threshold where some have found juvenile coho stop growing.

for: climate change - impacts, extinction, biodiversity loss, fish kill, salmon dieoff, stats, stats - salmon, logging, human activity

paraphrase

stats

comment

With a temperature rise of 3°, 91% of European ski resorts would be affected by snow shortages, which could be offset only partly by snow cannons. As a new study shows, not only the disappearance of snow cover but also growing water scarcity will make winter tourism difficult or impossible.

This joke card has a comic clipped from a newspaper glued to it. During the digitization process, the index card was put in a clear Mylar sleeve to prevent the comic, with its brittle glue, from being damaged or separated from the card.

The separation of newspaper clippings from index cards and their attendant annotations/metadata (due to aging glue) is a potential source of note loss when creating a physical card index.

The loss of Antarctic sea ice, which has accelerated this year, could lead to the demise of emperor penguins within the coming decades. Biologist Barbara Wienecke, who has long studied these penguins, writes in the Guardian about the looming end of an iconic species.

https://www.theguardian.com/commentisfree/2023/aug/28/emperor-penguin-extinction

Antarctic researchers are meeting in Hobart for a symposium to discuss the latest data on Antarctic sea ice and on the temperatures of the oceans surrounding Antarctica. In July, the sea ice around Antarctica covered 15% less area than in 1981-2010. The decline began in 2016 and, like the rise in ocean temperatures, has reached dramatic proportions. It could have serious consequences for the continental ice and for ocean currents.

Global heating threatens to reduce the number of organisms in the deepest zones still reached by light (200-1000 m) by up to 40% this century. At higher temperatures, microorganisms break down organic matter faster, so less food reaches these depths. This zone is an important CO2 sink. https://www.bbc.com/news/science-environment-65460128

At the same time, our whole sense of community has been lost as the requirement of modern societies rely on us living in anonymous neighbourhoods with people we don’t know or share much of anything in common. Who are these representatives presuming to represent anymore anyway?

In the documentary California Typewriter (Gravitas Pictures, 2016), musician John Mayer mentions that he has never lost a typed version of his notes, whereas digital versions of his work remain out of sight and thus out of mind, or else risk erasure through data loss, format changes, or other damage.

Mayer also mentions that he loves typewriters because they let him easily get stream-of-consciousness thinking onto the page, a mode of creativity he prefers for writing lyrics.

Current preparations for a fund to compensate for loss and damage caused by the climate crisis take too little account of the needs of middle-income countries. In an interview with the Guardian, the president of the Caribbean Development Bank, Hyginus Leon, points out that many of these countries are also so vulnerable that they cannot finance the measures needed to prepare for and recover from disasters they did not cause. https://www.theguardian.com/environment/2023/jul/28/mid-income-developing-countries-risk-losing-out-on-climate-rescue-funds-banker-warns

They now have the chance to understand themselves through understanding their tradition.

It feels odd that people wouldn't understand their own traditions, but it clearly happens. Information overload can push societies toward forgetting their traditions and forming new ones, particularly in non-oral cultures that focus on written texts, which can more easily be ignored (not read) and later replaced with seemingly newer traditions.

Take, for example, the resurgence of note-taking ideas circa 2014-2020, which completely disregarded their prior histories, particularly in favor of new technologies for doing them.

As a means of focusing on Western Culture, the editors here have highlighted some of the most important thoughts for encapsulating and influencing their current and future cultures.

How do oral traditions embrace the idea of the "Great Conversation"?

The disappearance of Arctic sea ice, combined with tensions in other regions, has serious geopolitical consequences. Russia is massively militarizing the Arctic, in cooperation with China. At the same time, it wants to profit from shipping routes through the ice-free Arctic Ocean. Scientific cooperation in the Arctic has ceased since the full-scale invasion of Ukraine in February 2022. https://www.theguardian.com/commentisfree/2023/jun/13/arctic-russia-nato-putin-climate

liable to pay $170tn in climate reparations by 2050 to ensure targets to curtail climate breakdown are met, a new study calculates.

A new study has calculated for the first time how much in climate reparations the industrialized countries responsible for most emissions would have to pay to countries of the Global South. The total comes to 170 trillion US dollars. The calculation estimates the economic losses poorer countries would need to be compensated for because fossil fuels will no longer be available to them. It takes consumption since 1960 as its basis. https://www.theguardian.com/environment/2023/jun/05/climate-change-carbon-budget-emissions-payment-usa-uk-germany

Edit: also, my antinet gaining some personality points by having parrot bite marks here and there. ‘:D

via u/nagytimi85 at https://www.reddit.com/r/antinet/comments/13s84q3/comment/jlof6b9/?utm_source=reddit&utm_medium=web2x&context=3

File this one under the most bizarre potential note collection damage vector: a parrot who bites index cards!

Trakt Data Recovery

IMPORTANT: On December 11 at 7:30 pm PST our main database crashed and corrupted some of the data. We're deeply sorry for the extended downtime and we'll do better moving forward. Updates to our automated backups are already in place and they will be tested on an ongoing basis.

Data prior to November 7 is fully restored. Watched history between November 7 and December 11 has been recovered. There is a separate message on your dashboard allowing you to review and import any recovered data. All other data (besides watched history) after November 7 has already been restored and imported. Some data might be permanently lost due to data corruption.

Trakt API is back online as of December 20. Active VIP members will get 2 free months added to their expiration date.

From late 2022

For four decades, 20 million birds have been disappearing from Europe every year. That is one of the findings of the largest study to date on bird decline in Europe. The main cause is intensive agriculture.

In Vietnam, the last known female Yangtze giant softshell turtle has died. The destruction of river habitats is one reason for the extinction of this species.

Because of global heating, invertebrates in the glacial meltwater rivers of the Alps are losing their habitat and could soon die out. They perform important functions in Alpine ecosystems. https://www.theguardian.com/environment/2023/may/04/melting-glaciers-in-alps-threaten-biodiversity-of-invertebrates-says-study

The European Environment Agency therefore issued a damning verdict on Austria in October 2020: more than 80% of the species and habitats to be protected under Natura 2000 are in an inadequate state.⁶

A new study finds that biodiversity loss is even more dramatic, and countermeasures even more urgent, than previously assumed. Research on large mammals and birds shows that too little account has been taken so far of the fact that important drivers of extinction only take effect after a delay of decades. https://www.bbc.com/news/science-environment-65315823

A new study shows that a large share of endangered insect species are not covered by existing protected areas. The expansion of protected areas agreed internationally at COP15 will only be effective if insect habitats are taken into account when nature reserves are designated. https://taz.de/Insektensterben-weltweit/!5925443/

One idea to store pictures of an analog Zettelkasten: Tropy - it's a side project to Zotero. https://tropy.org/

—u/stockwestfale at https://www.reddit.com/r/antinet/comments/12jjsgl/about_archiving_my_analog_zettelkasten/

Insects are necessary for the survival of humans and many other species. Because of human influences, they are dying out so quickly that a tipping point at which they disappear completely is imminent or may already have been reached. Long interview with Dave Goulson, who wrote the book Silent Earth (German edition: Stumme Erde. Warum wir die Insekten retten müssen. Hanser, 2022) about this imminent existential danger. https://www.liberation.fr/environnement/biodiversite/un-monde-sans-insectes-ce-serait-une-catastrophe-20230411_KXU7INKK35BIRK63L53BFAEYZ4/

The TLL (Thesaurus Linguae Latinae) was moved to a monastery in Bavaria during WWII because of worries that the building containing the zettelkasten would be bombed, which it actually was.

The slips have been microfilmed, and a copy of them is stored at Princeton as a backup, just in case.

[01:17:00]

None of the previous international agreements on protecting biodiversity has been even remotely complied with. Currently, one million of the Earth's eight million species are threatened with extinction. According to Inger Andersen, head of the UN Environment Programme, an agreement that is actually implemented must contain clear quantitative targets. It must also be based on high-quality data, and clearly defined national targets are needed as well.

Since satellite observations began four decades ago, Antarctic sea ice has never shrunk as much as it did in February 2023.

Related here is the horcrux problem of note taking, or even of social media: the mental friction of "where did I put that thing?" As a result, it's best to put it all in one place.

How can you build on a single foundation if you're in multiple locations? The primary (only?) benefit of multiple locations is redundancy in case of loss.

Ryan Holiday and Robert Greene are counterexamples, though Greene's books are generally distinct projects while Holiday's work has a lot of overlap.

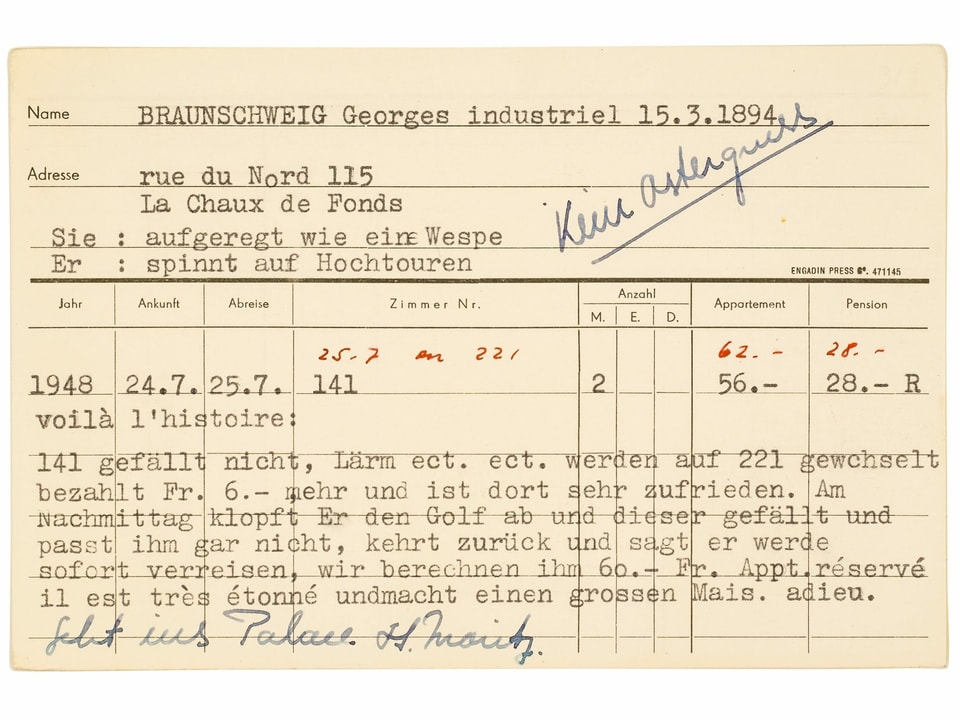

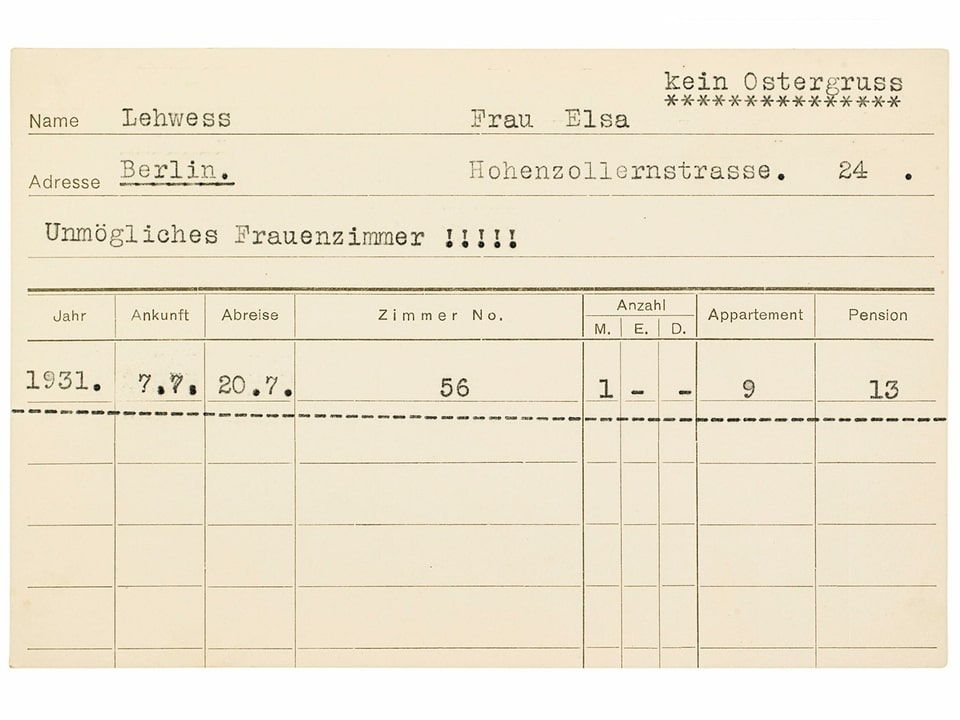

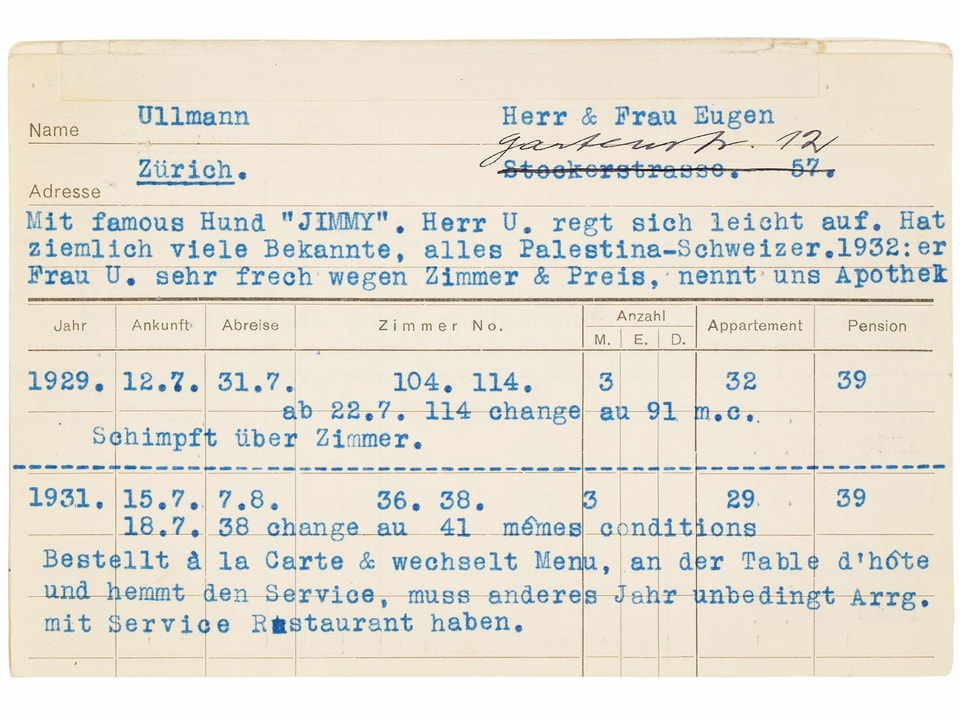

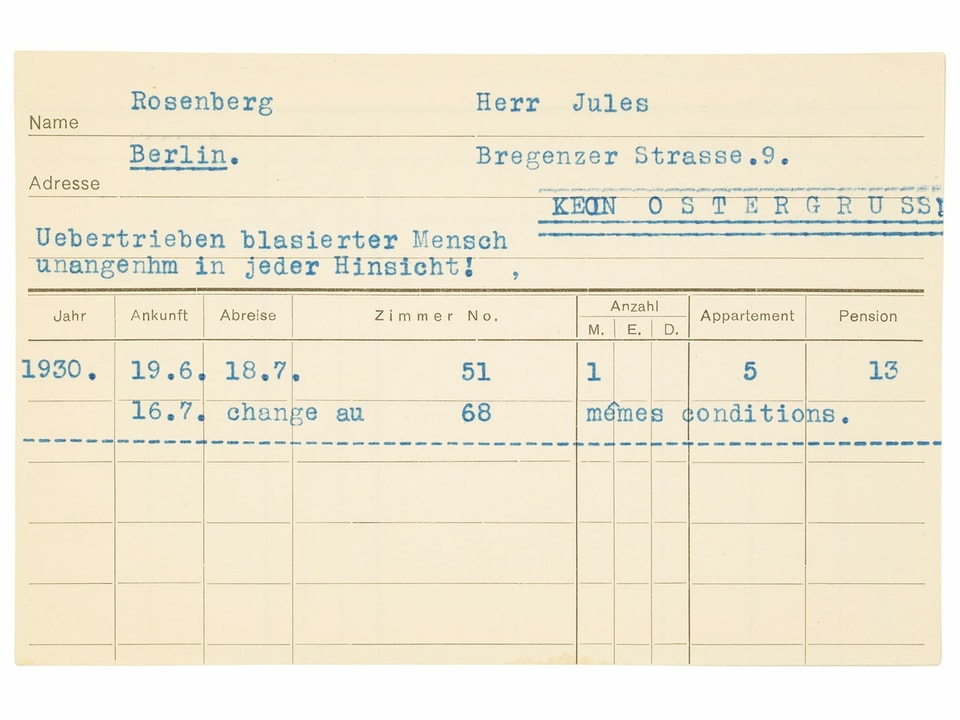

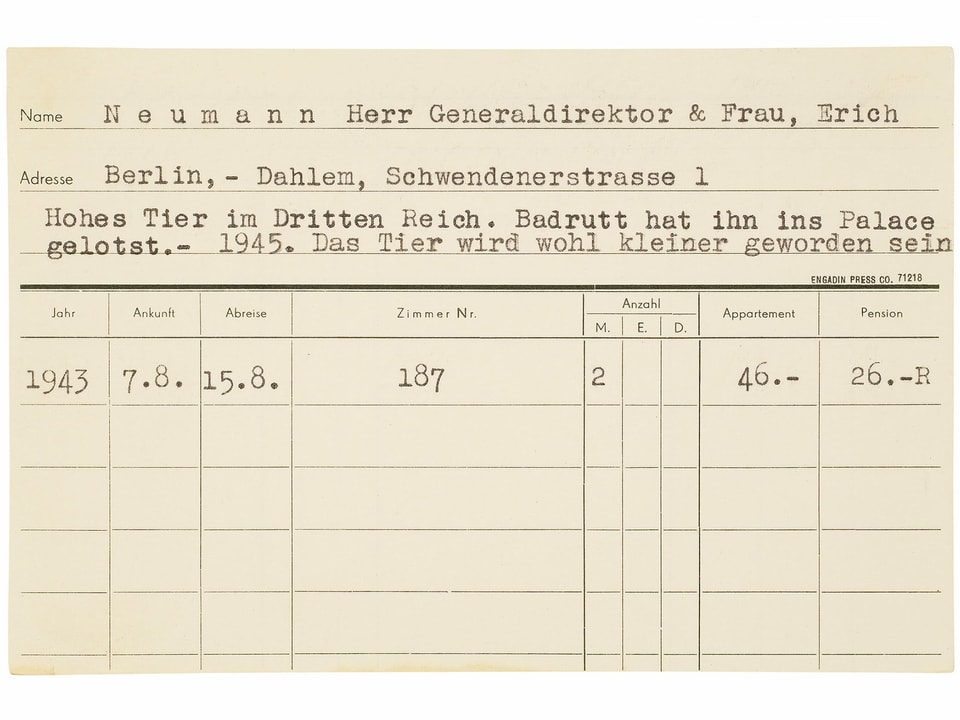

As if by a miracle, four wooden boxes with highly sensitive contents were spared. At the time of the inferno they were stored in a different building. They contained 20,000 guest cards that concierges and receptionists had secretly kept between 1920 and 1960.

The Grandhotel Waldhaus burned down in 1989, but 20,000 annotated guest cards compiled between 1920 and 1960 were saved from the inferno.

At the Grandhotel Waldhaus in Vulpera, Switzerland, concierges and receptionists maintained a business-focused zettelkasten of cards. In addition to the cards' typical business function of recording names, addresses, and rooms, the staff also annotated them with comments on the guests and their proclivities.


Note 9/8j says - "There is a note in the Zettelkasten that contains the argument that refutes the claims on every other note. But this note disappears as soon as one opens the Zettelkasten. I.e. it appropriates a different number, changes position (or: disguises itself) and is then not to be found. A joker." Is he talking about some hypothetical note? What did he mean by disappearing? Can someone please shed some light on what he really meant?

9/8j Im Zettelkasten ist ein Zettel, der das Argument enthält, das die Behauptungen auf allen anderen Zetteln widerlegt.

Aber dieser Zettel verschwindet, sobald man den Zettelkasten aufzieht.

D.h. er nimmt eine andere Nummer an, verstellt sich und ist dann nicht zu finden.

Ein Joker.

—Niklas Luhmann, ZK II: Zettel 9/8j

Translation:

9/8j In the slip box is a slip containing the argument that refutes the claims on all the other slips. But this slip disappears as soon as you open the slip box. That is, it assumes a different number, disguises itself, and then cannot be found. A joker.

Many have asked about the meaning of this jokerzettel over the past several years. Here's my slightly extended interpretation, based on my own practice with thousands of cards, about what Luhmann meant:

Imagine you've spent your life making and collecting notes and ideas and placing them lovingly on index cards. You've made tens of thousands and they're a major part of your daily workflow and support your life's work. They define you and how you think. You agree with Friedrich Nietzsche's concession to Heinrich Köselitz that "You are right — our writing tools take part in the forming of our thoughts." Your era is alive with McLuhan's idea that "The medium is the message," and with his friend John Culkin's line, "We shape our tools and thereafter they shape us."

Eventually you're going to worry about accidentally throwing your cards away, people stealing or copying them, fires (oh! the fires), floods, or other natural disasters. You don't have the ability to do digital backups yet. You ask yourself: Can I truly trust my spouse not to destroy them? What about accidents like dropping them all over the floor and needing to reorganize them, or worse, what if the ghost in the machine should rear its head?

You'll fear the worst, but the worst only grows logarithmically with the size of your collection.

Eventually you pass on opportunities elsewhere because you're worried about moving your ever-growing collection. What if the war should obliterate your work? Maybe you should take them into the war with you, because you can't bear to be apart?

If you grow up at a time when Schrödinger's cat is in the zeitgeist, you're definitely going to have nightmares that what's written on your cards could horrifyingly change every time you look at them. Worse, knowing about the Heisenberg Uncertainty Principle, you're deathly afraid that there might be cards, like electrons, which are always changing position in ways you'll never be able to know or predict.

As a systems theorist, you view your own note taking system as an input/output machine. Then you see Claude Shannon's "useless machine" (based on an idea of Marvin Minsky), whose only function is to switch itself off. You become horrified that the knowledge machine you've painstakingly built, whose workings as an independent thought partner you've documented, may somehow become self-aware and shut itself off!?!

https://www.youtube.com/watch?v=gNa9v8Z7Rac

And worst of all, on top of all this, all your hard work, effort, and untold hours of sweat creating thousands of cards could be wiped away by a single, potentially unknowable bit of information on a lone, malicious card, and your only recourse is suicide, the unfortunate victim of dataism.

Of course, if you somehow manage to overcome the hurdle of suicidal thoughts, and your collection keeps growing without bound, then you're sure to die in a torrential whirlwind avalanche of information and cards, literally done in by information overload.

But, not wishing to admit any of this, much less all of this, you imagine a simple trickster, a joker, something silly. You write it down on yet another card and file it away into the box, linked only to the card in front of it, the end of a short line of cards with nothing following it, because what could follow it? You put it out of your mind and hope your fears disappear with it, lost in your box like the jokerzettel you imagined. You do this with a self-assured confidence that this way of making sense of the world works well for you, and you settle back into the methodical work of reading and writing, intent on making your next thousands of cards.

He saw that her suitcase had shoved all his trays of slips over to one side of the pilot berth. They were for a book he was working on and one of the four long card-catalog-type trays was by an edge where it could fall off. That's all he needed, he thought, about three thousand four-by-six slips of note pad paper all over the floor. He got up and adjusted the sliding rest inside each tray so that it was tight against the slips and they couldn't fall out. Then he carefully pushed the trays back into a safer place in the rear of the berth. Then he went back and sat down again. It would actually be easier to lose the boat than it would be to lose those slips. There were about eleven thousand of them. They'd grown out of almost four years of organizing and reorganizing and reorganizing so many times he'd become dizzy trying to fit them all together. He'd just about given up.

Worry about dropping a tray of slips and needing to reorganize them.

The freedom objection to effective climate governance says that we must make a tragic choice on behalf of freedom. We must choose the loss of some present and future lives in order to preserve a way of life, the lynchpins of which are individual freedom, private property, steep social and monetary inequality, economic growth, and energy-intensive production and consumption. Yet the lives some would choose to lose need not be lost if the right and the good—living up to the best in our humanity and being morally responsible—were seen in new ways.

!- a middle way solution : meeting libertarians half way? - present palatable alternatives that are not so threatening?

https://www.youtube.com/watch?v=SYj1jneBUQo

Forrest Perry shows part of his note taking and idea development process in his hybrid digital-analog zettelkasten practice. He's read a book and written down some brief fleeting notes on an index card. He then chooses a few key ideas he wants to expand upon, finds the physical index card he's going to link his new idea to, then reviews the relevant portion of the book and writes a draft of a card in his notebook. Once satisfied with it, he transfers his draft from his notebook into Obsidian (ostensibly for search and as a digital back up) where he may also be refining the note further. Finally he writes a final draft of his "permanent" (my framing, not his) note on a physical index card, numbers it with respect to his earlier card, and then (presumably) installs it into his card collection.

In comparison to my own practice, it seems like he spends a lot of time after the fact reviewing the original material in order to write and rewrite a large amount of text for what seems to me (perhaps partly because of my own lack of interest in the proximal topic) not much substance. For topics in which I have a more direct interest, I'll usually have a more direct (written) conversation with the text and work out more of the details while I'm reading. This saves me from having to re-contextualize the author's original words and arguments later, while I'm making my own arguments and writing against the substrate of the author's thoughts. Putting this work in up front is often more productive, at least for areas of direct interest. I suspect that in Perry's case he was generally interested in the book, but it doesn't impinge on his immediate areas of research, so he got only three or four solid ideas out of it as opposed to a dozen or so.

The level of one's conversation with the text will obviously depend on one's interests and goals, a topic laid out relatively well by Adler & Van Doren (1972).

Before they were sent, however, the contents of its twenty-six drawers were photographed in Princeton, resulting in thirty microfilm rolls. Recently, digital pdf copies of these microfilm rolls have been circulating among scholars of the documentary Geniza.

Prior to being shipped to the National Library of Israel, Goitein's index card collection was photographed in Princeton and transferred to thirty microfilm rolls from which digital copies in .pdf format have been circulating among scholars of the documentary Geniza.

Link to other examples of digitized note collections:
- Niklas Luhmann
- W. Ross Ashby
- Jonathan Edwards

Are there collections by Charles Darwin and Linnaeus as well?

https://youtu.be/VFs3_COOMp8?t=1130

He mentions (not seriously) getting into a spat with his wife who threatens to throw his zettelkasten out the window as a means of retaliation! 🤣

https://youtu.be/VFs3_COOMp8?t=1103

Mention of worry over losing a zettelkasten due to fire or water damage, versus digital loss due to electric/power failure.

Hard drive failure and lack of backups are also a problem.

Ketogenic Diet for Weight Loss

seemed

Already there are hints that all is not as it seems. Emma's life is not so perfect. We shortly learn that her mother has died; yes, she's been replaced by a loving governess, but losing a mother is still a big deal.

even Socrates himself, we learn by way of his followers, derided the emerging popularity of taking physical notes.

I recall portions about Socrates deriding "writing" as a mode of expression, but I don't recall specific sections on note taking. What is Ann Blair's referent for this?

The "emerging popularity of taking physical notes" seems not to be in evidence; the Blair text offers only one exemplar, a student who lost their notes.

DanAllosso: Thanks, Scott! I'll have a Scrintal "board" with photographed analog notes to show soon, too. Solved the fire and flood problem.

I continued to use this analog method right up through my Ph.D. dissertation and first monograph. After a scare in the early stages of researching my second monograph, when I thought all of my index cards had been lost in a flood, I switched to an electronic version: a Word doc containing a table with four cells that I can type or paste information into (and easily back up).

What is More Important for Weight Loss? Diet or Exercise

Our kids have lost so much—family members, connections to friends and teachers, emotional well-being, and for many, financial stability at home. And, of course, they’ve lost some of their academic progress. The pressure to measure—and remediate—this “learning loss” is intense; many advocates for educational equity are rightly focused on getting students back on track. But I am concerned about how this growing narrative of loss will affect our students, emotionally and academically. Research shows a direct connection between a student’s mindset and academic success.

“Our kids have lost so much—family members, connections to friends and teachers, emotional well-being, and for many, financial stability at home,” the article begins, sifting through a now-familiar inventory of devastation, before turning to a problem of a different order. “And of course, they’ve lost some of their academic progress.”

As one expert reminded, “Bereavement is the No. 1 predictor of poor school outcomes.”

Analysts have labelled this as “learning loss,” and many have identified school closures and remote instruction over the past two years as the culprit. Essentially, schools serving largely Black and Latino populations were more likely to turn to remote teaching.

Article on Posteezy

Will Skipping Meals Help with Weight Loss?

"If the Reagans' home in Palisades (Calif.) were burning," Brinkley says, "this would be one of the things Reagan would immediately drag out of the house. He carried them with him all over like a carpenter brings their tools. These were the tools for his trade."

Another example of someone saying that if their house were to catch fire, they'd save their commonplace book (first or foremost).

He took it to Washington when he went into war service in 1917-1918;

Frederic Paxson took his note file from Wisconsin to Washington, D.C., when he went into war service from 1917 to 1918. Earl Pomeroy cites this as an indicator of how little of a burden the file was, but he doesn't mention any worries about loss or damage during travel, which may well have occurred to Paxson given his practice and the value of the collection to him.

It may be worth looking into whether he had such worries.

Filing is a tedious activity and bundles of unsorted notes accumulate. Some of them get loose and blow around the house, turning up months later under a carpet or a cushion. A few of my most valued envelopes have disappeared altogether. I strongly suspect that they fell into the large basket at the side of my desk full of the waste paper with which they are only too easily confused.

Relying on cut-up slips of paper rather than standard cards of equal size, Keith Thomas has relayed that his slips often "get loose and blow around the house, turning up months later under a carpet or a cushion."

He also suspects that some of his notes were accidentally thrown away after falling off his desk into the nearby wastebasket, where they are camouflaged among similar-looking waste paper.

best that deep study invites you to gather in like a harvest, and to store up as the wealth of life.

Like centuries of rhetors before him, he's using ideas and metaphors of harvest, treasure, and wealth to describe note taking.

Why have these metaphors passed out of popular Western thought since the 1920s through 1960s, when this book was popular?

I am less worried about natural disaster than about my own negligence. I take the cards with me too much. I am not stationary in my office, so to use the cards I have to take them with me. I am afraid they will be lost or destroyed. I have started to scan them into Apple Notes. I will see how that goes. It is easy and might be a great overall solution.

episcopal-orthodox reply to: https://www.reddit.com/r/antinet/comments/y77414/comment/isyqc7b/

As long as you're not using flimsy, standard paper for your slips as Luhmann did (it deteriorates too rapidly with repeated use), you can frame carrying them around more positively by thinking of the lovely patina that use over time gives to your words and ideas. The value of this far outweighs the fear of loss, at least for me. And if you're still concerned, there's always the option of using the ars memoriae to memorize all of your cards and meditate on them combinatorially using Lullian wheels the way Raymond Llull originally did. 🛞🗃️🚀🤩

Worried about paper cards being lost or destroyed

I am loving using paper index cards. I am, however, worried that something could happen to the cards and I could lose years of work. I did not have this worry when my notes were all online. Are there any apps that you are using to make a digital copy of the notes? Ideally, I would love to have a digital mirror, but I am not willing to do 2x the work.

u/LBHO https://www.reddit.com/r/antinet/comments/y77414/worried_about_paper_cards_being_lost_or_destroyed/

As a firm believer in the programming principle of DRY (Don't Repeat Yourself), I can appreciate the desire not to do the work twice.

Note card loss and destruction is definitely a thing folks have worried about. The easiest thing may be to spend a minute or two every day making quick photo backups of your cards as you make them. Then if things are lost, you'll have a backup, and you can likely use OCR (optical character recognition) software to pull the text out of the photos and recreate your notes if necessary. I've outlined some details I've used in the past. Incidentally, opening a photo in Google Docs will automatically do a pretty reasonable OCR on it.
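The daily photo routine above can be partly automated. What follows is a minimal sketch, not a tool mentioned in the thread: it assumes your card photos land in one local folder, and it copies any photo not already backed up into a dated subfolder of a backup folder (ideally on another drive or a synced cloud folder). It matches photos by SHA-256 content hash, so renaming a photo does not create a duplicate copy. The function name `back_up_card_photos`, the folder layout, and the list of image extensions are hypothetical, and OCR is left out entirely.

```python
import hashlib
import shutil
from datetime import date
from pathlib import Path

# Hypothetical set of photo formats to back up; adjust to your camera/phone.
PHOTO_SUFFIXES = {".jpg", ".jpeg", ".png", ".heic"}


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up_card_photos(photo_dir: Path, backup_root: Path) -> list[Path]:
    """Copy card photos not yet in backup_root into a dated subfolder.

    Photos are matched by content hash, so a renamed photo is not
    copied twice. Returns the paths of the newly copied files.
    """
    backup_root.mkdir(parents=True, exist_ok=True)
    # Hashes of every file already backed up, across all dated subfolders.
    known = {sha256_of(p) for p in backup_root.rglob("*") if p.is_file()}
    target_dir = backup_root / date.today().isoformat()
    copied: list[Path] = []
    for photo in sorted(photo_dir.iterdir()):
        if not photo.is_file() or photo.suffix.lower() not in PHOTO_SUFFIXES:
            continue
        h = sha256_of(photo)
        if h in known:
            continue  # already backed up, possibly under another name
        target_dir.mkdir(exist_ok=True)
        dest = target_dir / photo.name
        if dest.exists():
            # Same name but different content: prefix part of the hash.
            dest = target_dir / f"{h[:8]}_{photo.name}"
        shutil.copy2(photo, dest)  # copy2 also preserves file timestamps
        known.add(h)
        copied.append(dest)
    return copied
```

Run once a day, for example `back_up_card_photos(Path("~/Pictures/cards").expanduser(), Path("~/Backups/cards").expanduser())` with your own paths. Hashing everything in the backup folder on each run is slow for very large collections; keeping a small manifest file of hashes would be the obvious next step.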

I know some have written about bringing old notes into their (new) zettelkasten practice, and the general advice here has been to pull old material in only as needed or when strongly interested, which eases the cognitive load of thinking you need to do everything at once. If you did lose everything and had to restore from a backup, I suspect this would be the best way to proceed as well.

Historically many have worried about loss, but the only actual example of loss I've run across is that of Hans Blumenberg, whose zettelkasten from the early 1940s was lost during the war. He continued apace with another, begun in 1947, accumulating about 30,000 cards over nearly five decades, a rate of a bit more than 1.5 cards per day.
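A rough check of that rate, assuming the Marbach collection runs from 1947 until Blumenberg's death in 1996 (about 49 years) and holds about 30,000 cards:

```latex
\[
\frac{30\,000 \text{ cards}}{49 \text{ years} \times 365.25 \text{ days/year}}
  = \frac{30\,000}{17\,897.25} \approx 1.68 \text{ cards per day}
\]
```

That is, a little more than one and a half cards per day, sustained for half a century.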

"In the event of a fire, the black-bound excerpts are to be saved first," the poet Jean Paul instructed his wife before setting off on a trip in 1812.

Writer Jean Paul on the importance of his Zettelkasten.

"In the event of a fire, the black-bound excerpts are to be saved first," the poet Jean Paul instructed his wife before setting out on a journey in 1812.

https://www.reddit.com/r/antinet/comments/ur5xjv/handwritten_cards_to_a_digital_back_up_workflow/

For those who keep a physical pen-and-paper system and either want to be a bit on the hybrid side or just want a digital backup "just in case," I thought I'd share a workflow that I outlined back in December that works reasonably well. (Backups or emergency plans for one's notes are important, as evidenced by the poet Jean Paul's admonition to his wife before setting off on a trip in 1812: "In the event of a fire, the black-bound excerpts are to be saved first.") It's framed as posting to a website/digital garden, but it works just as well for many of the digital text platforms one might have or consider. For those on other platforms (like iOS), there are some useful suggestions in the comments section.

Handwriting My Website (or Zettelkasten) with a Digital Amanuensis

What if something happened to your box? My house recently got robbed and I was so fucking terrified that someone took it, you have no idea. Thankfully they didn’t. I am actually thinking of using TaskRabbit to have someone create a digital backup. In the meantime, these boxes are what I’m running back into a fire for to pull out (in fact, I sometimes keep them in a fireproof safe).

His collection is incredibly important to him. He states this in a way that's highly reminiscent of Jean Paul.

"In the event of a fire, the black-bound excerpts are to be saved first." —instructions from Jean Paul to his wife before setting off on a trip in 1812

Blumenberg’s first collection of note cards dates back to the early 1940s but was lost during the war; the Marbach collection contains cards from 1947 onwards.¹⁸

¹⁸ Von Bülow and Krusche, “Vorläufiges,” 273.

Hans Blumenberg's first zettelkasten dates to the early 1940s but was lost during the war; he continued the practice afterwards, and the collection of his notes housed at Marbach dates from 1947 onward.

There is a box stored in the German Literature Archive in Marbach, the wooden box Hans Blumenberg kept in a fireproof steel cabinet, for it contained his collection of about thirty thousand typed and handwritten note cards.¹

Hans Blumenberg's zettelkasten of about thirty thousand typed and handwritten note cards is now kept at the German Literature Archive in Marbach. He stored the cards in a wooden box, which he kept in a fireproof steel cabinet.

Popular Diet Trends for Weight Loss

Best Weight Loss Drinks - According to Nutritionists

If a key is lost, this invariably means that the secured data asset is irrevocably lost

Counterpart, be careful! If a key is lost, the secured data asset is lost

Yates, C., & Feil, E. (2021, February 1). Will coronavirus really evolve to become less deadly? The Conversation. http://theconversation.com/will-coronavirus-really-evolve-to-become-less-deadly-153817

Well-prepared report on biodiversity loss, with a great many infographics

Contemporary scholarship is not in a position to give a definitive assessment of the achievements of philosophical grammar. The groundwork has not been laid for such an assessment, the original work is all but unknown in itself, and much of it is almost unobtainable. For example, I have been unable to locate a single copy, in the United States, of the only critical edition of the Port-Royal Grammar, produced over a century ago; and although the French original is now once again available,³ the one English translation of this important work is apparently to be found only in the British Museum. It is a pity that this work should have been so totally disregarded, since what little is known about it is intriguing and quite illuminating.

He's railing against the loss of theory through disuse over time and in translation.

Similar to my own situation with note taking...

Results of the Farmland Bird Index for 2021

20:19 - Loss aversion

We evolved to have this trait of loss aversion to help us survive in lean years by holding onto the precious resources necessary for survival.