- Feb 2021

-

www.technologyreview.com www.technologyreview.com

-

These noncommercial alternatives would not have to be funded by the government (which is fortunate, given that government funding for public media such as PBS is in doubt these days). Ralph Engelman, a media historian at Long Island University who wrote Public Radio and Television in America: A Political History, points out that the creation of public broadcasting was led by—and partially funded by—prominent nonprofit groups such as the Ford and Carnegie Foundations. In the past few years, several nonprofit journalism outlets such as ProPublica have sprung up; perhaps now their backers and other foundations could do more to ensure the existence of more avenues for such work to be read and shared.

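These noncommercial alternatives need not be government-funded (which is fortunate, given that government funding for public media such as PBS has recently been called into question). Ralph Engelman, a media historian at Long Island University and author of Public Radio and Television in America: A Political History, points out that the creation of public broadcasting was led, and partly funded, by prominent nonprofit organizations such as the Ford and Carnegie Foundations. In the past few years, a number of nonprofit news outlets such as ProPublica have sprung up; perhaps their backers and other foundations could now do more to ensure that this work has more avenues through which to be read and shared.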

-

Facebook is fundamentally not a network of ideas. It’s a network of people. And though it has two billion active users every month, you can’t just start trading insights with all of them. As Facebook advises, your Facebook friends are generally people you already know in real life. That makes it more likely, not less, to stimulate homogeneity of thought. You can encounter strangers if you join groups that interest you, but those people’s posts are not necessarily going to get much airtime in your News Feed. The News Feed is engineered to show you things you probably will want to click on. It exists to keep you happy to be on Facebook and coming back many times a day, which by its nature means it is going to favor emotional and sensational stories.

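Facebook is fundamentally not a network of ideas. It is a network of people. Although it has two billion active users every month, you cannot simply start exchanging insights with all of them. As Facebook advises, your Facebook friends are generally people you already know in real life. That makes it more likely, not less, to encourage homogeneity of thought. If you join groups that interest you, you may encounter strangers, but those people's posts will not necessarily get much time in your News Feed. The News Feed is designed to show you things you will probably want to click on. It exists to keep you happy on Facebook and checking it many times a day, which means it will favor emotional and sensational stories.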

-

- Jan 2021

-

seirdy.one seirdy.one

-

Facebook further its core mission: the optimization and auctioning of human behavior (colloquially known as “targeted advertising”).

A better description of what Facebook does than its whitewashed description of "Connecting everyone".

-

-

groupkit.com groupkit.com

-

-

themarkup.org themarkup.org

-

Documents examined by the Wall Street Journal last May show Facebook’s internal research found 64 percent of new members in extremist groups joined because of the social network’s “Groups you should join” and “Discover” algorithms.

-

- Dec 2020

-

www.theatlantic.com www.theatlantic.com

-

The few people who are willing to defend these sites unconditionally do so from a position of free-speech absolutism. That argument is worthy of consideration. But there’s something architectural about the site that merits attention, too: There are no algorithms on 8kun, only a community of users who post what they want. People use 8kun to publish abhorrent ideas, but at least the community isn’t pretending to be something it’s not. The biggest social platforms claim to be similarly neutral and pro–free speech when in fact no two people see the same feed. Algorithmically tweaked environments feed on user data and manipulate user experience, and not ultimately for the purpose of serving the user. Evidence of real-world violence can be easily traced back to both Facebook and 8kun. But 8kun doesn’t manipulate its users or the informational environment they’re in. Both sites are harmful. But Facebook might actually be worse for humanity.

-

Facebook’s stated mission—to make the world more open and connected—

If they were truly serious about the connectedness part, they would implement the Webmention spec and microformats, or something just like it, but open and standardized.
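To make the suggestion concrete: under the W3C Webmention recommendation, a sender notifies a receiver by POSTing form-encoded `source` and `target` URLs to the receiver's advertised endpoint. The following is a minimal Python sketch, assuming the endpoint has already been discovered. The function name and the example URLs are hypothetical, and the request is only constructed here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_webmention_request(endpoint: str, source: str, target: str) -> Request:
    """Build (but do not send) a Webmention notification.

    source: URL of the page that contains the link (the mention).
    target: URL of the page being linked to.
    endpoint: the receiver's Webmention endpoint (assumed already discovered).
    """
    # The spec requires an x-www-form-urlencoded body with these two fields.
    body = urlencode({"source": source, "target": target}).encode("ascii")
    return Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Hypothetical URLs, for illustration only.
request = build_webmention_request(
    "https://bob.example/webmention",
    "https://alice.example/reply-to-bob",
    "https://bob.example/original-post",
)
```

Passing the resulting object to `urllib.request.urlopen` would send it; the receiver is then expected to verify that the source page really links to the target.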

-

Every time you click a reaction button on Facebook, an algorithm records it, and sharpens its portrait of who you are.

It might be argued that the design is not creating a portrait of who you are, but of who Facebook wants you to become. The real question is: Who does Facebook want you to be, and are you comfortable with being that?

-

No one, not even Mark Zuckerberg, can control the product he made. I’ve come to realize that Facebook is not a media company. It’s a Doomsday Machine.

-

-

ag.ny.gov ag.ny.gov

-

On August 10, 2009, FriendFeed accepted Facebook’s offer. As Facebook employees internally discussed via email on the day of the acquisition, “I remember you said to me a long time (6 months ago): ‘we can just buy them’ when I said to you that Friendfeed is the company I fear most. That was prescient! :).”

-

- Nov 2020

-

advances.sciencemag.org advances.sciencemag.org

-

Charoenwong, B., Kwan, A., & Pursiainen, V. (2020). Social connections with COVID-19–affected areas increase compliance with mobility restrictions. Science Advances, 6(47), eabc3054. https://doi.org/10.1126/sciadv.abc3054

-

-

www.wsj.com www.wsj.com

-

Facebook Inc. FB 0.73% is demanding that a New York University research project cease collecting data about its political-ad-targeting practices, setting up a fight with academics seeking to study the platform without the company’s permission. The dispute involves the NYU Ad Observatory, a project launched last month by the university’s engineering school that has recruited more than 6,500 volunteers to use a specially designed browser extension to collect data about the political ads Facebook shows them.

I haven't seen a reference to it in any of the stories I've seen about Facebook over the past decade, but at its root, Facebook is creating a Potemkin village for each individual user of their service.

Not being able to compare my Potemkin Village to the possibly completely different version you see makes it incredibly hard for all of us to live in the same world.

It's been said that on the internet, no one knows you're a dog, but this is even worse: you probably have slipped so far, you're not able to be sure what world you're actually living in.

-

-

www.nytimes.com www.nytimes.com

-

No, I’ve never been a fan of Facebook, as you probably know. I’ve never been a big Zuckerberg fan. I think he’s a real problem. I think ——

Joe Biden is not a fan of Facebook.

-

-

blendle.com blendle.com

-

But think further. Are we going to make the world better by continuing to grow? That is what entrepreneurs want. And it comes with side effects. Uber is supposedly idealistic. They want to offer customers a better and cheaper taxi ride, but their drivers are exploited. With Airbnb you can earn some nice pocket money, but residential neighborhoods turn into hotels without social patterns. Facebook is one big fake-news show. Amazon is so cheap that it pushes all small businesses off the road. Musk wants to take us to Mars. That will certainly happen, but what will we do there? Will it make us happy? Why don't we choose to help each other and our nature on this planet move forward?

Alef Arendsen

-

-

www.schneier.com www.schneier.com

-

But as long as the most important measure of success is short-term profit, doing things that help strengthen communities will fall by the wayside. Surveillance, which allows individually targeted advertising, will be prioritized over user privacy. Outrage, which drives engagement, will be prioritized over feelings of belonging. And corporate secrecy, which allows Facebook to evade both regulators and its users, will be prioritized over societal oversight.

Schneier is saying here that as long as the incentives are still pointing in the direction of short-term profit, privacy will be neglected.

Surveillance, which allows for targeted advertising, will win out over user privacy. Outrage will be prioritized over more wholesome feelings. Corporate secrecy will allow Facebook to evade regulators and its users.

-

Increased pressure on Facebook to manage propaganda and hate speech could easily lead to more surveillance. But there is pressure in the other direction as well, as users equate privacy with increased control over how they present themselves on the platform.

Two forces acting on the big tech platforms.

One, towards more surveillance, to stop hate and propaganda.

The other, towards less surveillance, stemming from people wanting more privacy and more control.

-

Facebook makes choices about what content is acceptable on its site. Those choices are controversial, implemented by thousands of low-paid workers quickly implementing unclear rules. These are tremendously hard problems without clear solutions. Even obvious rules like banning hateful words run into challenges when people try to legitimately discuss certain important topics.

How Facebook decides what to censor.

-

-

www.facebook.com www.facebook.com

-

At the same time, working through these principles is only the first step in building out a privacy-focused social platform. Beyond that, significant thought needs to go into all of the services we build on top of that foundation -- from how people do payments and financial transactions, to the role of businesses and advertising, to how we can offer a platform for other private services.

This is what Facebook is really after. They want to build the trust to be able to offer payment services on top of Facebook.

-

People want to be able to choose which service they use to communicate with people. However, today if you want to message people on Facebook you have to use Messenger, on Instagram you have to use Direct, and on WhatsApp you have to use WhatsApp. We want to give people a choice so they can reach their friends across these networks from whichever app they prefer. We plan to start by making it possible for you to send messages to your contacts using any of our services, and then to extend that interoperability to SMS too. Of course, this would be opt-in and you will be able to keep your accounts separate if you'd like.

Facebook plans to make messaging interoperable across Instagram, Facebook and WhatsApp. It will be opt-in.

-

An important part of the solution is to collect less personal data in the first place, which is the way WhatsApp was built from the outset.

Zuckerberg claims WhatsApp was built from the outset to collect little personal data.

-

As we build up large collections of messages and photos over time, they can become a liability as well as an asset. For example, many people who have been on Facebook for a long time have photos from when they were younger that could be embarrassing. But people also really love keeping a record of their lives.

Large collections of photos are both a liability and an asset. They might be embarrassing but it might also be fun to look back.

-

We increasingly believe it's important to keep information around for shorter periods of time. People want to know that what they share won't come back to hurt them later, and reducing the length of time their information is stored and accessible will help.

In addition to a focus on privacy, Zuckerberg underlines a focus on impermanence — appeasing people's fears that their content will come back to haunt them.

-

I understand that many people don't think Facebook can or would even want to build this kind of privacy-focused platform -- because frankly we don't currently have a strong reputation for building privacy protective services, and we've historically focused on tools for more open sharing.

Zuckerberg acknowledges that Facebook is not known for its reputation on privacy and has focused on open sharing in the past.

-

Today we already see that private messaging, ephemeral stories, and small groups are by far the fastest growing areas of online communication.

According to Zuckerberg, in 2019 we're seeing private messaging, ephemeral stories and small groups as the fastest growing areas of online communication.

-

As I think about the future of the internet, I believe a privacy-focused communications platform will become even more important than today's open platforms. Privacy gives people the freedom to be themselves and connect more naturally, which is why we build social networks.

Mark Zuckerberg claims he believes privacy-focused communications will become even more important than today's open platforms (like Facebook).

-

- Oct 2020

-

about.fb.com about.fb.com

-

Facebook AI is introducing M2M-100, the first multilingual machine translation (MMT) model that can translate between any pair of 100 languages without relying on English data. It’s open sourced here. When translating, say, Chinese to French, most English-centric multilingual models train on Chinese to English and English to French, because English training data is the most widely available. Our model directly trains on Chinese to French data to better preserve meaning. It outperforms English-centric systems by 10 points on the widely used BLEU metric for evaluating machine translations. M2M-100 is trained on a total of 2,200 language directions — or 10x more than previous best, English-centric multilingual models. Deploying M2M-100 will improve the quality of translations for billions of people, especially those that speak low-resource languages. This milestone is a culmination of years of Facebook AI’s foundational work in machine translation. Today, we’re sharing details on how we built a more diverse MMT training data set and model for 100 languages. We’re also releasing the model, training, and evaluation setup to help other researchers reproduce and further advance multilingual models.

Summary of the 1st AI model from Facebook that translates directly between languages (not relying on English data)
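A rough back-of-the-envelope check on the numbers in the passage, assuming a "language direction" means an ordered source-to-target pair (an assumption, not stated in the quoted text). The variable names are illustrative only.

```python
# Figures taken from the quoted announcement.
num_languages = 100
trained_directions = 2200

# Every ordered pair of distinct languages is one possible direction.
possible_directions = num_languages * (num_languages - 1)

# Share of possible directions that the stated training directions cover.
trained_share = trained_directions / possible_directions

print(f"possible directions: {possible_directions}")
print(f"share covered by training directions: {trained_share:.1%}")
```

Under that assumption, the stated training directions cover only a fraction of all possible pairs, which suggests the remaining pairs are handled without direct training data for that exact direction.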

-

-

www.facebook.com www.facebook.com

-

you grant us a non-exclusive, transferable, sub-licensable, royalty-free, and worldwide license to host, use, distribute, modify, run, copy, publicly perform or display, translate, and create derivative works of your content (consistent with your privacy and application settings).

-

-

www.newyorker.com www.newyorker.com

-

This is the story of how Facebook tried and failed at moderating content. The article cites many sources (employees) who were tasked with flagging posts according to platform policies. Things got complicated when high-profile people (such as Trump) started posting hate speech on their profiles.

Moderators have no way of getting honest remarks from Facebook. Moreover, they are badly treated and exploited.

The article cites examples from different countries, not only the US, including extreme right groups in the UK, Bolsonaro in Brazil, the massacre in Myanmar, and more.

In the end, the only thing that changes Facebook behavior is bad press.

-

-

www.bloomberg.com www.bloomberg.com

-

When Wojcicki took over, in 2014, YouTube was a third of the way to the goal, she recalled in investor John Doerr’s 2018 book Measure What Matters. “They thought it would break the internet! But it seemed to me that such a clear and measurable objective would energize people, and I cheered them on,” Wojcicki told Doerr. “The billion hours of daily watch time gave our tech people a North Star.” By October, 2016, YouTube hit its goal.

Obviously they took the easy route. You may need to measure what matters, but getting to that goal by any means necessary or by indefensible shortcuts is the fallacy here. They could have had that North Star, but the means they used to reach it were wrong.

This is another great example of tech ignoring basic ethics to get to a monetary goal. (Another good one is Mark Zuckerberg's "connecting people" mantra, when what it should be is "connecting people for good" or "creating positive connections".)

-

-

techcrunch.com techcrunch.com

-

Meta co-founder and CEO Sam Molyneux writes that “Going forward, our intent is not to profit from Meta’s data and capabilities; instead we aim to ensure they get to those who need them most, across sectors and as quickly as possible, for the benefit of the world.”

Odd statement from a company that was just acquired by the Facebook founder's CZI.

-

-

www.newyorker.com www.newyorker.com

-

A spokeswoman for Summit said in an e-mail, “We only use information for educational purposes. There are no exceptions to this.” She added, “Facebook plays no role in the Summit Learning Program and has no access to any student data.”

As if Facebook needed it. Making this statement at all papers over the possibility that Summit itself could do something as nefarious with the data as Facebook might, or worse.

-

-

vega.micro.blog vega.micro.blog

-

M.B can’t be reduced to stereotypes, of course. But there’s also a bar to entry into this social-media network, and it’s a distinctly technophilic, first-world, Western bar.

You can only say this because I suspect you're comparing it to platforms that are larger by many orders of magnitude. You can't compare it to Twitter or Facebook yet. In fact, if you were to compare it to them, then it would be to their early versions. Twitter was very technophilic for almost all of its first three years until it crossed over into the broader consciousness in early 2009.

Your argument is somewhat akin to doing a national level political poll and only sampling a dozen people in one small town.

-

-

people.well.com people.well.com Metacrap

-

Schemas aren't neutral

This section highlights why relying on algorithmic feeds in social media platforms like Facebook and Twitter can be toxic. Your feed is full of what they think you'll like and click on instead of giving you the choice.

-

-

ruben.verborgh.org ruben.verborgh.org

-

In fact, these platforms have become inseparable from their data: we use “Facebook” to refer to both the application and the data that drives that application. The result is that nearly every Web app today tries to ask you for more and more data again and again, leading to dangling data on duplicate and inconsistent profiles we can no longer manage. And of course, this comes with significant privacy concerns.

-

-

www.twitterandteargas.org www.twitterandteargas.org

-

Thanks to a Facebook page, perhaps for the first time in history, an internet user could click yes on an electronic invitation to a revolution

-

-

www.newyorker.com www.newyorker.com

-

Most previous explanations had focussed on explaining how someone’s beliefs might be altered in the moment.

Knowing a little of what is coming in advance here, I can't help thinking: How can this riot theory potentially be used to influence politics and/or political campaigns? It could be particularly effective to get people "riled up" just before a particular election to create a political riot of sorts and thereby influence the outcome.

Facebook has run several social experiments around elections, showing users that their friends and family had voted and thereby affecting other potential voters. When done in a way that targets people of particular political beliefs to increase turnout, this gives one a means of drastically influencing elections. In some sense, this is an example of this "Riot Theory".

-

-

www.americanpressinstitute.org www.americanpressinstitute.org

-

Even publishers with the most social media-savvy newsrooms can feel at a disadvantage when Facebook rolls out a new product.

The same goes in triplicate when they pull the plug without notice too!

-

-

-

People come to Google looking for information they can trust, and that information often comes from the reporting of journalists and news organizations around the world.

Heavy hit in light of the Facebook data scandal this week on top of accusations about fake news spreading.

-

We’re now in the early stages of testing a “Propensity to Subscribe” signal based on machine learning models in DoubleClick to make it easier for publishers to recognize potential subscribers, and to present them the right offer at the right time.

Interestingly, the technology here isn't that different from the Facebook data that Cambridge Analytica was using; the difference is that they're not using it to directly impact politics, but to drive sales. Does this mean they're more "ethical"?

-

-

reallifemag.com reallifemag.com

-

Facebook’s use of “ethnic affinity” as a proxy for race is a prime example. The platform’s interface does not offer users a way to self-identify according to race, but advertisers can nonetheless target people based on Facebook’s ascription of an “affinity” along racial lines. In other words, race is deployed as an externally assigned category for purposes of commercial exploitation and social control, not part of self-generated identity for reasons of personal expression. The ability to define one’s self and tell one’s own stories is central to being human and how one relates to others; platforms’ ascribing identity through data undermines both.

-

-

-

You could throw the pack away and deactivate your Facebook account altogether. It will get harder the longer you wait — the more photos you post there, or apps you connect to it.

Links create value over time, and so destroying links typically destroys the value.

-

-

edtechfactotum.com edtechfactotum.com

-

My hope is that it will somehow bring comments on Facebook back to the blog and display them as comments here.

Sadly, Aaron Davis is right that Facebook turned off their API access for this on August 1st, so there currently aren't any services, including Brid.gy, anywhere that allow this. Even WordPress and JetPack got cut off from posting from WordPress to Facebook, much less the larger challenge of pulling responses back.

-

- Sep 2020

-

www.theverge.com www.theverge.com

-

What were the “right things” to serve the community, as Zuckerberg put it, when the community had grown to more than 3 billion people?

This is just one of the contradictions of having a global medium/platform of communication being controlled by a single operator.

It is extremely difficult to create global policies to moderate the conversations of 3 billion people across different languages and cultures. No team, no document, is qualified for such a task, because so much is dependent on context.

The approach to moderation taken by federated social media like Mastodon makes a lot more sense. Communities moderate themselves, based on their own codes of conduct. In smaller servers, a strict code of conduct may not even be necessary - moderation decisions can be based on a combination of consensus and common sense (just like in real life social groups and social interactions). And there is no question of censorship, since their moderation actions don't apply to the whole network.

-

-

www.buzzfeednews.com www.buzzfeednews.com

-

“With no oversight whatsoever, I was left in a situation where I was trusted with immense influence in my spare time,” she wrote. “A manager on Strategic Response mused to myself that most of the world outside the West was effectively the Wild West with myself as the part-time dictator – he meant the statement as a compliment, but it illustrated the immense pressures upon me.”

-

“There was so much violating behavior worldwide that it was left to my personal assessment of which cases to further investigate, to file tasks, and escalate for prioritization afterwards,” she wrote.

Wow.

-

Facebook ignored or was slow to act on evidence that fake accounts on its platform have been undermining elections and political affairs around the world, according to an explosive memo sent by a recently fired Facebook employee and obtained by BuzzFeed News. The 6,600-word memo, written by former Facebook data scientist Sophie Zhang, is filled with concrete examples of heads of government and political parties in Azerbaijan and Honduras using fake accounts or misrepresenting themselves to sway public opinion. In countries including India, Ukraine, Spain, Brazil, Bolivia, and Ecuador, she found evidence of coordinated campaigns of varying sizes to boost or hinder political candidates or outcomes, though she did not always conclude who was behind them.

-

“In the office, I realized that my viewpoints weren’t respected unless I acted like an arrogant asshole,” Zhang said.

-

-

-

Obradovich, N., Özak, Ö., Martín, I., Ortuño-Ortín, I., Awad, E., Cebrián, M., Cuevas, R., Desmet, K., Rahwan, I., & Cuevas, Á. (2020). Expanding the measurement of culture with a sample of two billion humans [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/qkf42

-

-

jamesshelley.com jamesshelley.com

-

On and on it goes, until the perceived cost of not being on Facebook is higher than the perceived downsides of joining the platform.

The cost of not being on Facebook is higher than the downsides of joining the platform. I need to let that sentence sink in a few more times. I can't picture it yet.

-

-

blog.sens-public.org blog.sens-public.org

-

it is fundamental to understand that, on Facebook, I am as if in front of a window

... but Facebook's diaphanous character is not obvious: it applies, certainly, to what we Like or post publicly; but far more significant is the tracking of each of our behaviors (every click on a link, every website visited where Facebook or one of its subsidiaries is present, every fraction of a second during which we stop scrolling…). This profoundly asymmetric gaze that Facebook has on us, without our knowledge, is what matters most.

-

-

dataforgood.fb.com dataforgood.fb.com

-

COVID-19 Symptom Survey—Request for Data Access. (n.d.). Facebook Data for Good. Retrieved May 29, 2020, from https://dataforgood.fb.com/docs/covid-19-symptom-survey-request-for-data-access/

-

- Aug 2020

-

ar.al ar.al

-

The mass surveillance and factory farming of human beings on a global scale is the business model of people farmers like Facebook and Google. It is the primary driver of the socioeconomic system we call surveillance capitalism.

-

-

www.theregister.com www.theregister.com

-

Facebook has apologized to its users and advertisers for being forced to respect people’s privacy in an upcoming update to Apple’s mobile operating system – and promised it will do its best to invade their privacy on other platforms.

Sometimes I forget how funny The Register can be. This is terrific.

-

-

arstechnica.com arstechnica.com

-

Facebook is warning developers that privacy changes in an upcoming iOS update will severely curtail its ability to track users' activity across the entire Internet and app ecosystem and prevent the social media platform from serving targeted ads to users inside other, non-Facebook apps on iPhones.

I fail to see anything bad about this.

-

-

www.nber.org www.nber.org

-

Kuchler, T., Russel, D., & Stroebel, J. (2020). The Geographic Spread of COVID-19 Correlates with Structure of Social Networks as Measured by Facebook (Working Paper No. 26990; Working Paper Series). National Bureau of Economic Research. https://doi.org/10.3386/w26990

-

-

covid-19.iza.org covid-19.iza.org

-

Urban Density and COVID-19. COVID-19 and the Labor Market. (n.d.). IZA – Institute of Labor Economics. Retrieved July 30, 2020, from https://covid-19.iza.org/publications/dp13440/

-

- Jul 2020

-

epjdatascience.springeropen.com epjdatascience.springeropen.com

-

Fatehkia, M., Tingzon, I., Orden, A., Sy, S., Sekara, V., Garcia-Herranz, M., & Weber, I. (2020). Mapping socioeconomic indicators using social media advertising data. EPJ Data Science, 9(1), 1–15. https://doi.org/10.1140/epjds/s13688-020-00235-w

-

-

www.buzzfeednews.com www.buzzfeednews.com

-

www.theguardian.com www.theguardian.com

-

But the business model that we now call surveillance capitalism put paid to that, which is why you should never post anything on Facebook without being prepared to face the algorithmic consequences.

I'm reminded a bit of the season 3 episode of Breaking Bad where Jesse Pinkman invites his drug-dealing pals to a Narcotics Anonymous-type meeting so that they can target their meth sales. Fortunately the two lowlifes had more morality and compassion than Facebook can manage.

-

-

www.youtube.com www.youtube.com

-

Using Facebook Ad Library | Webinar with Maddy Webb and Laura Garcia. (2020, May 22). First Draft. https://www.youtube.com/watch?v=YuzUr0V_fEk&feature=emb_logo

-

-

www.youtube.com www.youtube.com

-

Mark Zuckerberg & Thierry Breton: Towards a post COVID-19 Digital Deal between tech and governments? (2020, May 18). https://www.youtube.com/watch?v=uZfi6WkIfgU&feature=youtu.be

-

-

osf.io osf.io

-

Grow, A., Perrotta, D., Del Fava, E., Cimentada, J., Rampazzo, F., Gil-Clavel, S., & Zagheni, E. (2020). Addressing Public Health Emergencies via Facebook Surveys: Advantages, Challenges, and Practical Considerations [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/ez9pb

-

-

-

Why Most Advertisers Can’t Afford to Boycott Facebook. (2020, July 8). Pro Market. https://promarket.org/2020/07/08/why-most-advertisers-cant-afford-to-boycott-facebook/

-

-

niklasblog.com niklasblog.com

-

Nefarious corporations

As somewhat detailed here.

For far more information I strongly recommend reading Shoshana Zuboff's The Age of Surveillance Capitalism.

-

- Jun 2020

-

psyarxiv.com psyarxiv.com

-

Midgley, C., Thai, S., Lockwood, P., Kovacheff, C., & Page-Gould, E. (2020). When Every Day is a High School Reunion: Social Media Comparisons and Self-Esteem [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/zmy29

-

-

www.sciencemag.org www.sciencemag.org

-

Cornwall, W. (2020, April 16). Crushing coronavirus means ‘breaking the habits of a lifetime.’ Behavior scientists have some tips. Science | AAAS. https://www.sciencemag.org/news/2020/04/crushing-coronavirus-means-breaking-habits-lifetime-behavior-scientists-have-some-tips

-

-

twitter.com twitter.com

-

David G. Rand en Twitter: “Today @GordPennycook & I wrote a @nytimes op ed ‘The Right Way to Fix Fake News’ https://t.co/dyF84g6oqv tl;dr: Platforms must rigorously TEST interventions, b/c intuitions about what will work are often wrong In this thread I unpack the many studies behind our op ed 1/” / Twitter. (n.d.). Twitter. Retrieved April 15, 2020, from https://twitter.com/dg_rand/status/1242526565793136641

-

-

www.nature.com www.nature.com

-

Ledford, H. (2020). How Facebook, Twitter and other data troves are revolutionizing social science. Nature, 582(7812), 328–330. https://doi.org/10.1038/d41586-020-01747-1

-

-

theintercept.com theintercept.com

-

One of the new tools debuted by Facebook allows administrators to remove and block certain trending topics among employees. The presentation discussed the “benefits” of “content control.” And it offered one example of a topic employers might find it useful to blacklist: the word “unionize.”

Imagine your employer looking over your shoulder constantly.

Imagine that you're surveilled not only in regard to what you produce, but also, if you're an office worker, in what you tap out in chats to colleagues.

This is what Facebook does and it's not very different to what China has created with their Social Credit System.

This is Orwellian.

-

-

-

Layer, R. M., Fosdick, B., Larremore, D. B., Bradshaw, M., & Doherty, P. (2020). Case Study: Using Facebook Data to Monitor Adherence to Stay-at-home Orders in Colorado and Utah. MedRxiv, 2020.06.04.20122093. https://doi.org/10.1101/2020.06.04.20122093

-

-

sg.finance.yahoo.com sg.finance.yahoo.com

-

President’s Reaction to Twitter Fact Check Is ‘Slapped Together,’ Says Stanford’s DiResta. (n.d.). Retrieved 9 June 2020, from https://sg.news.yahoo.com/presidents-reaction-twitter-fact-check-231129841.html

-

-

-

Horwitz, J., & Seetharaman, D. (2020, May 26). Facebook Executives Shut Down Efforts to Make the Site Less Divisive. Wall Street Journal. https://www.wsj.com/articles/facebook-knows-it-encourages-division-top-executives-nixed-solutions-11590507499

-

-

spreadprivacy.com spreadprivacy.com

-

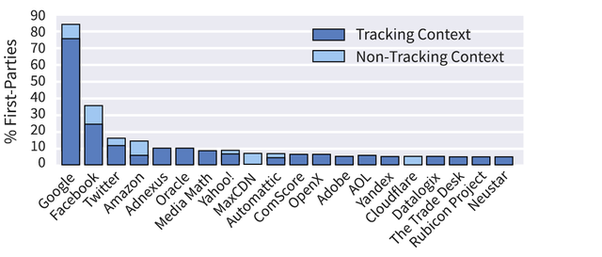

Alarmingly, Google now deploys hidden trackers on 76% of websites across the web to monitor your behavior and Facebook has hidden trackers on about 25% of websites, according to the Princeton Web Transparency & Accountability Project. It is likely that Google and/or Facebook are watching you on most sites you visit, in addition to tracking you when using their products.

-

-

-

Cinelli, M., Morales, G. D. F., Galeazzi, A., Quattrociocchi, W., & Starnini, M. (2020). Echo Chambers on Social Media: A comparative analysis. ArXiv:2004.09603 [Physics]. http://arxiv.org/abs/2004.09603

-

-

dataforgood.fb.com dataforgood.fb.com

-

Our Work on COVID-19. (n.d.). Facebook Data for Good. Retrieved April 20, 2020, from https://dataforgood.fb.com/docs/covid19/

-

-

arxiv.org arxiv.org

-

coronavirus.frontiersin.org coronavirus.frontiersin.org

-

Global public health data on COVID-19. (n.d.). Coronavirus (COVID-19): The Latest Science & Expert Commentary | Frontiers. Retrieved June 4, 2020, from https://coronavirus.frontiersin.org/covid-19-data-resources

-

-

goodmorningamerica.com goodmorningamerica.com

-

ABC News. (n.d.). Mark Zuckerberg explains plans for Facebook health surveys to research and combat coronavirus. ABC News. Retrieved April 22, 2020, from https://goodmorningamerica.com/news/story/mark-zuckerberg-explains-plans-facebook-health-surveys-research-70238069

-

-

www.visualcapitalist.com www.visualcapitalist.com

-

Routley, N. (2019, August 7). Ranking the Top 100 Websites in the World. Visual Capitalist. https://www.visualcapitalist.com/ranking-the-top-100-websites-in-the-world/

-

-

www.forbes.com www.forbes.com

-

And while all major tech platforms deploying end-to-end encryption argue against weakening their security, Facebook has become the champion-in-chief fighting against government moves, supported by Apple and others.

-

-

www.forbes.com www.forbes.com

-

WhatsApp has become the dominant messaging platform, dwarfing all other contenders with the exception of its Facebook stablemate Messenger. In doing so, this hyper-scale “over-the-top” platform has also pushed legacy SMS messaging into the background

-

-

securitytoday.com securitytoday.com

-

The breach was caused by Facebook’s “View As” feature, which allows users to view their own account as if they were a stranger visiting it.

-

“We have a responsibility to protect your data,” said Zuckerberg, in March. “And if we can’t, then we don’t deserve to serve you.”

-

-

lifehacker.com lifehacker.com

-

Facebook already harvests some data from WhatsApp. Without Koum at the helm, it’s possible that could increase—a move that wouldn’t be out of character for the social network, considering that the company’s entire business model hinges on targeted advertising around personal data.

-

- May 2020

-

www.brookings.edu www.brookings.edu

-

Muro, M. (2020, May 26). Could Big Tech’s move to permanent remote work save the American heartland? Brookings. https://www.brookings.edu/blog/the-avenue/2020/05/26/could-big-techs-move-to-permanent-remote-work-save-the-american-heartland/

-

-

-

Battiston, P., Kashyap, R., & Rotondi, V. (2020, May 11). Trust in science and experts during the COVID-19 outbreak in Italy. https://doi.org/10.31235/osf.io/5tch8

-

-

www.washingtonpost.com www.washingtonpost.com

-

Bode, L., & Vraga, E. (2020, May 7). Analysis | Americans are fighting coronavirus misinformation on social media. Washington Post. https://www.washingtonpost.com/politics/2020/05/07/americans-are-fighting-coronavirus-misinformation-social-media/

-

-

www.wired.com www.wired.com

-

Porter, E., & Wood, T. J. (2020, May 14). Why is Facebook so afraid of checking facts? Wired. https://www.wired.com/story/why-is-facebook-so-afraid-of-checking-facts/

-

-

theintercept.com theintercept.com

-

daringfireball.net daringfireball.net

-

The high number of extremist groups was concerning, the presentation says. Worse was Facebook’s realization that its algorithms were responsible for their growth. The 2016 presentation states that “64% of all extremist group joins are due to our recommendation tools” and that most of the activity came from the platform’s “Groups You Should Join” and “Discover” algorithms: “Our recommendation systems grow the problem.”

-

-

standuprepublic.com standuprepublic.com

-

McKew, M. (2020, May 13). Disinformation Starts at Home. Stand Up Republic. https://standuprepublic.com/disinformation-starts-at-home/

-

-

www.nature.com www.nature.com

-

Ball, P. (2020). Anti-vaccine movement could undermine efforts to end coronavirus pandemic, researchers warn. Nature, 581(7808), 251–251. https://doi.org/10.1038/d41586-020-01423-4

-

-

-

Johnson, N.F., Velásquez, N., Restrepo, N.J. et al. The online competition between pro- and anti-vaccination views. Nature (2020). https://doi.org/10.1038/s41586-020-2281-1

-

-

-

Kennedy, B., Atari, M., Davani, A. M., Hoover, J., Omrani, A., Graham, J., & Dehghani, M. (2020, May 7). Moral Concerns are Differentially Observable in Language. https://doi.org/10.31234/osf.io/uqmty

-

-

www.fastcompany.com www.fastcompany.com

-

For instance, Google’s reCaptcha cookie follows the same logic of the Facebook “like” button when it’s embedded in other websites—it gives that site some social media functionality, but it also lets Facebook know that you’re there.

-

- Apr 2020

-

www.cnbc.com www.cnbc.com

-

www.engadget.com www.engadget.com

-

www.forbes.com www.forbes.com

-

Acton's $850M moral stand and the $122M fine for deliberately lying to the EU Competition Commission

Under pressure from Mark Zuckerberg and Sheryl Sandberg to monetize WhatsApp, he pushed back as Facebook questioned the encryption he'd helped build and laid the groundwork to show targeted ads and facilitate commercial messaging. Acton also walked away from Facebook a year before his final tranche of stock grants vested. “It was like, okay, well, you want to do these things I don’t want to do,” Acton says. “It’s better if I get out of your way. And I did.” It was perhaps the most expensive moral stand in history. Acton took a screenshot of the stock price on his way out the door—the decision cost him $850 million.

Despite a transfer of several billion dollars, Acton says he never developed a rapport with Zuckerberg. “I couldn’t tell you much about the guy,” he says. In one of their dozen or so meetings, Zuck told Acton unromantically that WhatsApp, which had a stipulated degree of autonomy within the Facebook universe and continued to operate for a while out of its original offices, was “a product group to him, like Instagram.”

For his part, Acton had proposed monetizing WhatsApp through a metered-user model, charging, say, a tenth of a penny after a certain large number of free messages were used up. “You build it once, it runs everywhere in every country,” Acton says. “You don’t need a sophisticated sales force. It’s a very simple business.”

Acton’s plan was shot down by Sandberg. “Her words were ‘It won’t scale.’ ”

“I called her out one time,” says Acton, who sensed there might be greed at play. “I was like, ‘No, you don’t mean that it won’t scale. You mean it won’t make as much money as . . . ,’ and she kind of hemmed and hawed a little. And we moved on. I think I made my point. . . . They are businesspeople, they are good businesspeople. They just represent a set of business practices, principles and ethics, and policies that I don’t necessarily agree with.”

Questioning Zuckerberg’s true intentions wasn’t easy when he was offering what became $22 billion. “He came with a large sum of money and made us an offer we couldn’t refuse,” Acton says. The Facebook founder also promised Koum a board seat, showered the founders with admiration and, according to a source who took part in discussions, told them that they would have “zero pressure” on monetization for the next five years... Internally, Facebook had targeted a $10 billion revenue run rate within five years of monetization, but such numbers sounded too high to Acton—and reliant on advertising.

The warning signs emerged before the deal even closed that November. The deal needed to get past Europe’s famously strict antitrust officials, and Facebook prepared Acton to meet with around a dozen representatives of the European Competition Commission in a teleconference. “I was coached to explain that it would be really difficult to merge or blend data between the two systems,” Acton says. He told the regulators as much, adding that he and Koum had no desire to do so.

Later he learned that elsewhere in Facebook, there were “plans and technologies to blend data.” Specifically, Facebook could use the 128-bit string of numbers assigned to each phone as a kind of bridge between accounts. The other method was phone-number matching, or pinpointing Facebook accounts with phone numbers and matching them to WhatsApp accounts with the same phone number.

Within 18 months, a new WhatsApp terms of service linked the accounts and made Acton look like a liar. “I think everyone was gambling because they thought that the EU might have forgotten because enough time had passed.” No such luck: Facebook wound up paying a $122 million fine for giving “incorrect or misleading information” to the EU—a cost of doing business, as the deal got done and such linking continues today (though not yet in Europe). “The errors we made in our 2014 filings were not intentional," says a Facebook spokesman.

Acton had left a management position on Yahoo’s ad division over a decade earlier with frustrations at the Web portal’s so-called “Nascar approach” of putting ad banners all over a Web page. The drive for revenue at the expense of a good product experience “gave me a bad taste in my mouth,” Acton remembers. He was now seeing history repeat. “This is what I hated about Facebook and what I also hated about Yahoo,” Acton says. “If it made us a buck, we’d do it.” In other words, it was time to go.

-

-

www.nytimes.com www.nytimes.com

-

Also see Social Capital's 2018 Annual Letter, which noted that 40 cents of every VC dollar is now spent on Google, Facebook, and Amazon ads.

The leaders of more than half a dozen new online retailers all told me they spent the greatest portion of their ad money on Facebook and Instagram.

“In the start-up-industrial complex, it’s like a systematic transfer of money” from venture-capital firms to start-ups to Facebook.

Steph Korey, a founder of Away, a luggage company based in New York that opened in 2015, says that when the company was starting, it made $5 for every $1 it spent on Facebook Lookalike ads.

They began trading their Lookalike groups with other online retailers, figuring that the kind of people who buy one product from social media will probably buy others. This sort of audience sharing is becoming more common on Facebook: There is even a company, TapFwd, that pools together Lookalike groups for various brands, helping them show ads to other groups.

-

-

www.ithinkso.co www.ithinkso.co

-

Advantages of Facebook Ads: There are many reasons to prefer Facebook Ads over other social media platforms. Once you have learned the platform, it is very easy to run your ads and turn a profit for your business. So why should you choose Facebook? Let's take a look together.

-

-

theconversation.com theconversation.com

-

The latter clearly have a key role in the fight against cyberviolence, which most often takes place within them. One can cite the latest measures taken by Facebook against bullying and harassment: the ability to hide or delete several comments at once under a post, or the ability to report content deemed abusive that was posted on a friend's account.

An example of Facebook taking measures to limit cyberviolence. The victim can thus delete abusive comments (after having read them), and a friend can step in. But when will we see real measures, such as deleting the offender's account, reporting the offender to the authorities, or sufficient checks to ensure that a child under X years old is not on the platform?

-

-

adtransparency.mozilla.org adtransparency.mozilla.org

-

This page documents our efforts to track political advertising and produce an ad transparency report for the 2019 European Parliament Election.

We attempted to download a copy of the political ads on a daily basis using the Facebook Ad Library API and the Google Ad Library, starting on March 29 and May 11 respectively, when the two companies released their political ads archive. We provide this data collection log, so that external researchers, journalists, analysts, and readers may examine our methods and assess the data presented in our reports.

Facebook Ad Library API

Facebook provides an Application Programmable Interface ("API") to authorized users who may search for ads in their archive. However, due to the inconsistent state of the Facebook Ad Library API, our methods to scan and discover ads must be adapted on a daily and sometimes hourly basis to deal with design limitations, data issues, and numerous software bugs in the Facebook Ad Library API.

Despite our best efforts to help Facebook debug their system, the majority of the issues were not resolved. The API delivered incomplete data on most days from its release through May 16, when Facebook fixed a critical bug. The API was broken again from May 18 through May 26, the last day of the elections.

We regret we do not have reliable or predictable instructions on how to retrieve political ads from Facebook. Visit the methods page for our default crawler settings and a list of suggested workarounds for known bugs, or scroll down to see our log.

In general, we encountered three categories of issues with the Facebook Ad Library API. First, software programming errors that cripple a user's ability to complete even a single search including the following bugs:

Classic example of transparency hobbled by non-bulk access and poor coding. Contrast with Google who just provided straight up bulk access.

Also reflects FB's lack of technical prowess: they are craigslist x 10. No technical quality: just the perfect monopoly platform able to hoover up cents on massive volume.

-

- Mar 2020

-

www.nytimes.com www.nytimes.com

-

Right now, Facebook is tackling “misinformation that has imminent risk of danger, telling people if they have certain symptoms, don’t bother going getting treated … things like ‘you can cure this by drinking bleach.’ I mean, that’s just in a different class.”

-

-

techcrunch.com techcrunch.com

-

Facebook does not even offer an absolute opt out of targeted advertising on its platform. The ‘choice’ it gives users is to agree to its targeted advertising or to delete their account and leave the service entirely. Which isn’t really a choice when balanced against the power of Facebook’s platform and the network effect it exploits to keep people using its service.

-

So it’s not surprising that Facebook is so coy about explaining why a certain user on its platform is seeing a specific advert. Because if the huge surveillance operation underpinning the algorithmic decision to serve a particular ad was made clear, the person seeing it might feel manipulated. And then they would probably be less inclined to look favorably upon the brand they were being urged to buy. Or the political opinion they were being pushed to form. And Facebook’s ad tech business stands to suffer.

-

-

www.calnewport.com www.calnewport.com

-

Using Facebook ads, the researchers recruited 2,743 users who were willing to leave Facebook for one month in exchange for a cash reward. They then randomly divided these users into a Treatment group, that followed through with the deactivation, and a Control group, that was asked to keep using the platform.

The effects of not using Facebook for a month:

- on average, about 60 more free minutes per day

- small but significant improvement in well-being, and in particular in self-reported happiness, life satisfaction, depression and anxiety

- participants were less willing to use Facebook afterwards

- the group was less likely to follow politics

- deactivation significantly reduced polarization of views on policy issues and a measure of exposure to polarizing news

- 80% agreed that the deactivation was good for them

-

- Feb 2020

-

thenextweb.com thenextweb.com

-

Last year, Facebook said it would stop listening to voice notes in messenger to improve its speech recognition technology. Now, the company is starting a new program where it will explicitly ask you to submit your recordings, and earn money in return.

Given Facebook's history of breaking laws and ending up paying billions of USD in damages (even though it's a joke), selling ads to people who explicitly want to target people who hate Jews, and spending millions of USD every year on lobbying alone, don't sell your personal experiences and behaviours to them.

Facebook is nefarious and psychopathic.

-

-

www.reuters.com www.reuters.com

-

From 2010 to 2016, Facebook Ireland paid Facebook U.S. more than $14 billion in royalties and cost-sharing payments

-

the Internal Revenue Service tries to convince a judge the world’s largest social media company owes more than $9 billion

-

-

danmackinlay.name danmackinlay.name

-

Facebook is informative in the same way that thumb sucking is nourishing.

-

- Jan 2020

-

www.cnbc.com www.cnbc.com

-

privacy and security improvements

Seems like money well spent!

-

-

medium.com medium.com

-

received a message telling me that my account had been locked because I was incarcerated and as such, disallowed from using Facebook

-

- Dec 2019

-

www.theatlantic.com www.theatlantic.com

-

Madison’s design has proved durable. But what would happen to American democracy if, one day in the early 21st century, a technology appeared that—over the course of a decade—changed several fundamental parameters of social and political life? What if this technology greatly increased the amount of “mutual animosity” and the speed at which outrage spread? Might we witness the political equivalent of buildings collapsing, birds falling from the sky, and the Earth moving closer to the sun?

Jonathan Haidt, you might have noticed, is a scholar whom I admire very much. In this piece, he and his colleague Tobias Rose-Stockwell ask the following question: Is social media a threat to our democracy? Let's read the article together and think about their question.

-

-

www.dylanpaulus.com www.dylanpaulus.com

- Nov 2019

-

simplelogin.io simplelogin.io

-

Loading this iframe allows Facebook to know that this specific user is currently on your website. Facebook therefore knows about the user's browsing behaviour without the user’s explicit consent. If more and more websites adopt the Facebook SDK, then Facebook would potentially have the user’s full browsing history! And as the saying goes, “With great power comes great responsibility”: it’s part of our job as developers to protect users' privacy even when they don’t ask for it.

-

-

en.wikipedia.org en.wikipedia.org

-

In June 2012, Facebook announced it would no longer use its own money system, Facebook Credits.

Gave up in 2012 on their scam. Why hasn't this been brought up? Especially by regulators?

-

-

twitter.com twitter.com

-

Found a @facebook #security & #privacy issue. When the app is open it actively uses the camera. I found a bug in the app that lets you see the camera open behind your feed.

So, Facebook uses your camera even while not active.

-

-

www.businessinsider.com www.businessinsider.com

-

An explosive trove of nearly 4,000 pages of confidential internal Facebook documentation has been made public, shedding unprecedented light on the inner workings of the Silicon Valley social networking giant.

I can't even start telling you how much schadenfreude I feel at this. Even though this paints a vulgar picture, Facebook are still doing it, worse and worse.

Talk about hiding in plain sight.

-

-

www.eff.org www.eff.org

-

Somewhere in a cavernous, evaporative cooled datacenter, one of millions of blinking Facebook servers took our credentials, used them to authenticate to our private email account, and tried to pull information about all of our contacts. After clicking Continue, we were dumped into the Facebook home page, email successfully “confirmed,” and our privacy thoroughly violated.

-

-

www.eff.org www.eff.org

-

In 2013, Facebook began offering a “secure” VPN app, Onavo Protect, as a way for users to supposedly protect their web activity from prying eyes. But Facebook simultaneously used Onavo to collect data from its users about their usage of competitors like Twitter. Last year, Apple banned Onavo from its App Store for violating its Terms of Service. Facebook then released a very similar program, now dubbed variously “Project Atlas” and “Facebook Research.” It used Apple’s enterprise app system, intended only for distributing internal corporate apps to employees, to continue offering the app to iOS users. When the news broke this week, Apple shut down the app and threw Facebook into some chaos when it (briefly) booted the company from its Enterprise Developer program altogether.

-

-

www.engadget.com www.engadget.com

-

Take Facebook, for example. CEO Mark Zuckerberg will stand onstage at F8 and wax poetic about the beauty of connecting billions of people across the globe, while at the same time patenting technologies to determine users' social classes and enable discrimination in the lending process, and allowing housing advertisers to exclude racial and ethnic groups or families with women and children from their listings.

-

-

thereader.mitpress.mit.edu thereader.mitpress.mit.edu

-

If the apparatus of total surveillance that we have described here were deliberate, centralized, and explicit, a Big Brother machine toggling between cameras, it would demand revolt, and we could conceive of a life outside the totalitarian microscope.

-

-

www.reuters.com www.reuters.com

-

Senior government officials in multiple U.S.-allied countries were targeted earlier this year with hacking software that used Facebook Inc’s (FB.O) WhatsApp to take over users’ phones, according to people familiar with the messaging company’s investigation.

-

- Oct 2019

-

www.pastemagazine.com www.pastemagazine.com

-

In case you wanted to be even more skeptical of Mark Zuckerberg and his cohorts, Facebook has now changed its advertising policies to make it easier for politicians to lie in paid ads. Donald Trump is taking full advantage of this policy change, as popular info reports.

-

The claim in this ad was ruled false by those Facebook-approved third-party fact-checkers, but it is still up and running. Why? Because Facebook changed its policy on what constitutes misinformation in advertising. Prior to last week, Facebook’s rule against “false and misleading content” didn’t leave room for gray areas: “Ads landing pages, and business practices must not contain deceptive, false, or misleading content, including deceptive claims, offers, or methods.”

-

- Sep 2019

-

www.vanschneider.com www.vanschneider.com

-

On social media, we are at the mercy of the platform. It crops our images the way it wants to. It puts our posts in the same, uniform grids. We are yet another profile contained in a platform with a million others, pushed around by the changing tides of a company's whims. Algorithms determine where our posts show up in people’s feeds and in what order, how someone swipes through our photos, where we can and can’t post a link. The company decides whether we're in violation of privacy laws for sharing content we created ourselves. It can ban or shut us down without notice or explanation. On social media, we are not in control.

This is why I love personal web sites. They're your own, you do whatever you want with them, and you control them. Nothing is owned by others and you're completely free to do whatever you want.

That's not the case with Facebook, Microsoft, Slack, Jira, whatever.

-

-

techcrunch.com techcrunch.com

-

To wit: iOS 13, which will be generally released later this week, has already been spotted catching Facebook’s app trying to use Bluetooth to track nearby users.

-

-

www.privacyinternational.org www.privacyinternational.org

-

There is already a lot of information Facebook can assume from that simple notification: that you are probably a woman, probably menstruating, possibly trying to have (or trying to avoid having) a baby. Moreover, even though you are asked to agree to their privacy policy, Maya starts sharing data with Facebook before you get to agree to anything. This raises some serious transparency concerns.

Privacy International are highlighting how period-tracking apps are violating users' privacy.

-

-

techcrunch.com techcrunch.com

-

Each record contained a user’s unique Facebook ID and the phone number listed on the account.

-

Hundreds of millions of phone numbers linked to Facebook accounts have been found online.

-

- Jul 2019

-

www.theverge.com www.theverge.com

-

Even if we never see this brain-reading tech in Facebook products (something that would probably cause just a little concern), researchers could use it to improve the lives of people who can’t speak due to paralysis or other issues.

-

That’s very different from the system Facebook described in 2017: a noninvasive, mass-market cap that lets people type more than 100 words per minute without manual text entry or speech-to-text transcription.

-

Their work demonstrates a method of quickly “reading” whole words and phrases from the brain — getting Facebook slightly closer to its dream of a noninvasive thought-typing system.

-

-

www.nytimes.com www.nytimes.com

-

“We are a nation with a tradition of reining in monopolies, no matter how well-intentioned the leaders of these companies may be.”

Mr. Hughes went on to describe the power held by Facebook and its leader Mr. Zuckerberg, his former college roommate, as “unprecedented.” He added, “It is time to break up Facebook.”

-

-

niklasblog.com niklasblog.com

-

CA enabled Ted Cruz’s campaign trail

More on the matter is found here.

-

-

www.theguardian.com www.theguardian.com

-

According to Shoshana Zuboff, professor emerita at Harvard Business School, the Cambridge Analytica scandal was a landmark moment, because it revealed a micro version “of the larger phenomenon that is surveillance capitalism”. Zuboff is responsible for formulating the concept of surveillance capitalism, and published a magisterial, indispensable book with that title soon after the scandal broke. In the book, Zuboff creates a framework and a language for understanding this new world. She believes The Great Hack is an important landmark in terms of public understanding, and that Noujaim and Amer capture “what living under the conditions of surveillance capitalism means. That every action is being repurposed as raw material for behavioural data. And that these data are being lifted from our lives in ways that are systematically engineered to be invisible. And therefore we can never resist.”

Shoshana Zuboff's comments on The Great Hack.

-

-

jackjamieson.net jackjamieson.net

-

Further, Humphreys [23] observes that the stabilization that occurs during a technology’s maturation is temporary, and so possibilities for interpretive flexibility can resurface when the context surrounding a technology changes

Thus the broader "context collapse" for users of Facebook as the platform matured and their surveillance capitalism came to the fore over their "connecting" priorities from earlier days.

-

-

www.nakedcapitalism.com www.nakedcapitalism.com

-

the question we should really be discussing is “How many years should Mark Zuckerberg and Sheryl Sandberg ultimately serve in prison?”

-

how many fake accounts did Facebook report being created in Q2 2019? Only 2.2 billion, with a “B,” which is approximately the same as the number of active users Facebook would like us to believe that it has. A comprehensive look back at Facebook’s disclosures suggests that of the company’s 12 billion total accounts ever created, about 10 billion are fake. And as many as 1 billion are probably active, if not more. (Facebook says that this estimate is “not based on any facts,” but much like the false statistics it provided to advertisers on video viewership, that too is a lie.)

I truly wonder how many Facebook accounts are real.

-

“those who were responsible for ensuring the accuracy ‘did not give a shit.’” Another individual, “a former Operations Contractor with Facebook, stated that Facebook was not concerned with stopping duplicate or fake accounts.”

-

The last time Mark suggested that Facebook’s growth heyday might be behind it, in July 2018, the stock took a nosedive that ended up being the single largest one-day fall of any company’s stock in the history of the United States

-

Facebook is not growing anymore in the United States, with zero million new accounts in Q1 2019, and only four million new accounts since Q1 2017

Facebook is stagnant. Yes!

-

-

journals.sagepub.com journals.sagepub.com

-

In contrast to such pseudonymous social networking, Facebook is notable for its longstanding emphasis on real identities and social connections.

Lack of anonymity also increases Facebook's ability to properly link shadow profiles purchased from other data brokers.

-

-

www.theguardian.com www.theguardian.com

-

What should lawmakers do? First, interrupt and outlaw surveillance capitalism’s data supplies and revenue flows. This means, at the front end, outlawing the secret theft of private experience. At the back end, we can disrupt revenues by outlawing markets that trade in human futures knowing that their imperatives are fundamentally anti-democratic. We already outlaw markets that traffic in slavery or human organs. Second, research over the past decade suggests that when “users” are informed of surveillance capitalism’s backstage operations, they want protection, and they want alternatives. We need laws and regulation designed to advantage companies that want to break with surveillance capitalism. Competitors that align themselves with the actual needs of people and the norms of a market democracy are likely to attract just about every person on Earth as their customer. Third, lawmakers will need to support new forms of collective action, just as nearly a century ago workers won legal protection for their rights to organise, to bargain collectively and to strike. Lawmakers need citizen support, and citizens need the leadership of their elected officials.

Shoshana Zuboff's answer to surveillance capitalism

-

- Jun 2019

-

-

The cryptocurrency, called Libra, will also have to overcome concern that Facebook does not effectively protect the private information of its users — a fundamental task for a bank or anyone handling financial transactions.

-

The company has sky-high hopes that Libra could become the foundation for a new financial system not controlled by today’s power brokers on Wall Street or central banks.

Facebook wants another way to circumvent government? Well, let's circumvent Facebook.

-

- May 2019

-

www.crossfit.com www.crossfit.com

-

Facebook is surveillance capitalism.

-

-

www.americanpressinstitute.org www.americanpressinstitute.org

-

The more transparency in the media industry, the more publishers at all levels will be on equal playing field with new platforms. If everyone makes the shift to Facebook Instant Articles and sees a negative return, but no one talks about it, Facebook will always have all the power.

-

-

theintercept.com theintercept.com

-

“If Facebook is providing a consumer’s data to be used for the purposes of credit screening by the third party, Facebook would be a credit reporting agency,” Reidenberg explained. “The [FCRA] statute applies when the data ‘is used or expected to be used or collected in whole or in part for the purpose of serving as a factor in establishing the consumer’s eligibility for … credit.'” If Facebook is providing data about you and your friends that eventually ends up in a corporate credit screening operation, “It’s no different from Equifax providing the data to Chase to determine whether or not to issue a credit card to the consumer,” according to Reidenberg.

-

-

ruben.verborgh.org ruben.verborgh.org

-

Unsurprisingly living up to its reputation, Facebook refuses to comply with my GDPR Subject Access Requests in an appropriate manner.

Facebook has never cared about the privacy of individuals. This is highly interesting.

-

-

www.theverge.com www.theverge.com

-

The problem with Facebook goes beyond economics however, Hughes argues. The News Feed’s algorithms dictate the content that millions of people see every day, its content rules define what counts as hate speech, and there’s no democratic oversight of its processes.

-

-

www.theatlantic.com www.theatlantic.com

-

“On the ground in Syria,” he continued, “Assad is doing everything he can to make sure the physical evidence [of potential human-rights violations] is destroyed, and the digital evidence, too. The combination of all this—the filters, the machine-learning algorithms, and new laws—will make it harder for us to document what’s happening in closed societies.” That, he fears, is what dictators want.

-

Google and Facebook break out the numbers in their quarterly transparency reports. YouTube pulled 33 million videos off its network in 2018, roughly 90,000 a day. Of the videos removed after automated systems flagged them, 73 percent were removed so fast that no community members ever saw them. Meanwhile, Facebook removed 15 million pieces of content it deemed “terrorist propaganda” from October 2017 to September 2018. In the third quarter of 2018, machines performed 99.5 percent of Facebook’s “terrorist content” takedowns. Just 0.5 percent of the purged material was reported by users first. Those statistics are deeply troubling to open-source investigators, who complain that the machine-learning tools are black boxes.

-

“We were collecting, archiving, and geolocating evidence, doing all sorts of verification for the case,” Khatib recalled. “Then one day we noticed that all the videos that we had been going through, all of a sudden, all of them were gone.” It wasn’t a sophisticated hack attack by pro-Assad forces that wiped out their work. It was the ruthlessly efficient work of machine-learning algorithms deployed by social networks, particularly YouTube and Facebook.

-

-

www.ynharari.com www.ynharari.com

-

in Conversation

...in Conversation about something other than how Facebook only wants to use humans to consume and give it our data.

-

- Apr 2019

-

www.nytimes.com www.nytimes.com

-

Facebook said on Wednesday that it expected to be fined up to $5 billion by the Federal Trade Commission for privacy violations. The penalty would be a record by the agency against a technology company and a sign that the United States was willing to punish big tech companies.

This is where surveillance capitalism brings you.

Sure, five billion American Dollars won't make much of a difference to Facebook, but it's notable.

-

-

gizmodo.com gizmodo.com

-

Per a Wednesday report in Business Insider, Facebook has now said that it automatically extracted contact lists from around 1.5 million email accounts it was given access to via this method without ever actually asking for their permission. Again, this is exactly the type of thing one would expect to see in a phishing attack.

Facebook are worse than Nixon, when he said "I'm not a crook".

-

-

www.theguardian.com www.theguardian.com

-

“They are morally bankrupt pathological liars who enable genocide (Myanmar), facilitate foreign undermining of democratic institutions. “[They] allow the live streaming of suicides, rapes, and murders, continue to host and publish the mosque attack video, allow advertisers to target ‘Jew haters’ and other hateful market segments, and refuse to accept any responsibility for any content or harm. “They #dontgiveazuck” wrote Edwards.

Well, I don't think he should have deleted his tweets.

-

-

www.thedailybeast.com www.thedailybeast.com

-

Facebook users are being interrupted by an interstitial demanding they provide the password for the email account they gave to Facebook when signing up. “To continue using Facebook, you’ll need to confirm your email,” the message demands. “Since you signed up with [email address], you can do that automatically …” A form below the message asked for the users’ “email password.”

So, Facebook tries to get users to give them their private and non-Facebook e-mail-account password.

This practice is called spear phishing.

-

- Mar 2019

-

www.forbes.com www.forbes.com

-

While employees were up in arms because of Google’s “Dragonfly” censored search engine with China and its Project Maven’s drone surveillance program with DARPA, there exist very few mechanisms to stop these initiatives from taking flight without proper oversight. The tech community argues they are different than Big Pharma or Banking. Regulating them would strangle the internet.

This is an old maxim among corporations, Google, Facebook, and Microsoft alike: if they can't break laws by simply doing what they want because of, well, greed, then you're hampering "evolution".

Evolution of their wallets, yes.

-

-

www.activelikes.com www.activelikes.com

-

Facebook reviews are another effective strategy, that makes your business go fluent and profitable. Our discussion is all about buying Facebook reviews with quality and cheap. If you apply paid service and get a good number of reviews on your Facebook page, It makes Your business credible to the audiences.

-

- Feb 2019

-

www.theguardian.com www.theguardian.com

-

We saw the experimental development of this new “means of behavioural modification” in Facebook’s contagion experiments and the Google-incubated augmented reality game Pokémon Go.

-

- Jan 2019

-

time.com time.com

-

Google and Facebook are artificially profitable because they do not pay for the damage they cause.

-

-

static1.squarespace.com static1.squarespace.com

-

the calculation of uses and applications that might be made of the vastly increased available means in order to devise new ends and to eliminate oppositions and segregations based on past competitions for scarce means. (24)

Does this sound like Mark Zuckerberg's idealism before it devolved into a data-mining project in the service of neoliberal economics?

-

-

chainb.com chainb.com

-

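What is being fixed is not these internet giants but the blockchain itself. The cryptocurrency startups that promised to free the world from the shackles of capitalism now cannot even guarantee their own employees' income. Facebook's approach is to integrate the fragments of blockchain and follow the trend, making it easier for shareholders to accept.

<big>Comment:</big><br/><br/>Lu Xun once wrote: "In my yard there are two trees; one is a jujube tree, and the other is also a jujube tree." Interestingly, Zuckerberg, the founder of Facebook, a company built on "squeezing" commercial value out of its users' privacy, bought the four other houses surrounding his own to avoid harassment by paparazzi. Now we can answer: what is beside the walled garden? It's another walled garden.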

-

-

www.techdirt.com www.techdirt.com

-

Do we want technology to keep giving more people a voice, or will traditional gatekeepers control what ideas can be expressed?

Part of the unstated problem here is that Facebook has supplanted the "traditional gatekeepers", and its black-box feed algorithm is now the gatekeeper that decides what people in the network either see or don't see. Things that crazy people used to decry to a non-listening crowd in the town commons are now blasted from the rooftops, spread far and wide by Facebook's algorithm, and can potentially sway major elections.

I hope they talk about this.

-

- Dec 2018

-

www.sinembargo.mx www.sinembargo.mx

-

Instagram, another network it owns. “Why should anyone keep believing in Facebook?” was one of the articles published. Photo: AP

Willing to find refuge, to escape from one's own mind. The high winners. Their realities replicated in millions of minds.

-

The criticism came after a flood of revelations that deepen the crisis at Zuckerberg's company. Facebook shared its data with dozens of companies, to which it granted “special access to user information.” The New York Times also published an investigation last Tuesday that brought to light the social network's practices with Microsoft, Amazon, Spotify and Netflix, which it allowed to snoop through users' data so that they could offer personalized ads.

And at every step you take: advertising. In the bathroom, in the soup, in your sex, in fashion. Advertising.

-

-

www.forbes.com www.forbes.com

-

Perhaps it's finally time to stop trusting Facebook before it's too late.

Finally? This is what it took to say "finally"?

-

- Nov 2018

-

www.nytimes.com www.nytimes.com

-

While the NTK Network does not have a large audience of its own, its content is frequently picked up by popular conservative outlets, including Breitbart.

One wonders if they're seeding it and spreading it falsely on Facebook? Why not use the problem as a feature?!

-

Then Facebook went on the offensive. Mr. Kaplan prevailed on Ms. Sandberg to promote Kevin Martin, a former Federal Communications Commission chairman and fellow Bush administration veteran, to lead the company’s American lobbying efforts. Facebook also expanded its work with Definers.