public media 1.0 was accepted as important but rarely loved

It is seen as a necessity for making the invisible visible, but the ramifications of worldwide attention on typically invisible things are often unknown and unprecedented.

“It makes me feel like I need a disclaimer because I feel like it makes you seem unprofessional to have these weirdly spelled words in your captions,” she said, “especially for content that's supposed to be serious and medically inclined.”

Where's the balance for professionalism with respect to dodging the algorithmic filters for serious health-related conversations online?

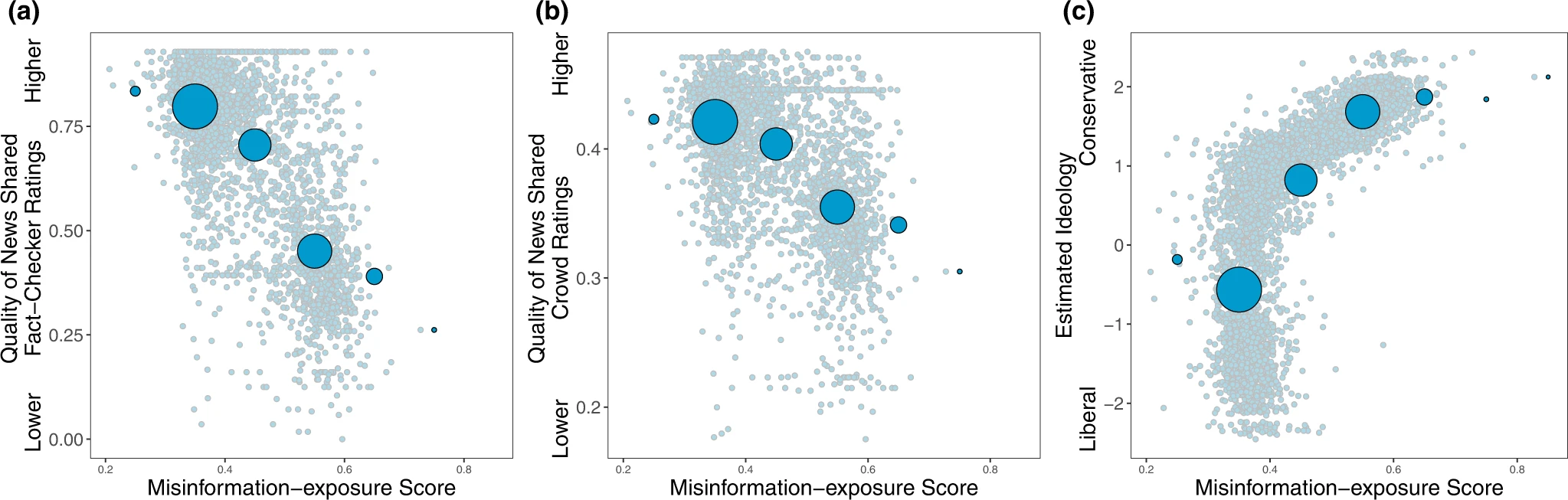

Furthermore, our results add to the growing body of literature documenting—at least at this historical moment—the link between extreme right-wing ideology and misinformation [8,14,24] (although, of course, factors other than ideology are also associated with misinformation sharing, such as polarization [25] and inattention [17,37]).

Misinformation exposure and extreme right-wing ideology appear associated in this report. Others find that it is partisanship that predicts susceptibility.

And finally, at the individual level, we found that estimated ideological extremity was more strongly associated with following elites who made more false or inaccurate statements among users estimated to be conservatives compared to users estimated to be liberals. These results on political asymmetries are aligned with prior work on news-based misinformation sharing

This suggests that misinformation-sharing elites may influence whether their followers become more extreme. There is little incentive not to stoke outrage, as it improves engagement.

Shown is the relationship between users’ misinformation-exposure scores and (a) the quality of the news outlets they shared content from, as rated by professional fact-checkers [21], (b) the quality of the news outlets they shared content from, as rated by layperson crowds [21], and (c) estimated political ideology, based on the ideology of the accounts they follow [10]. Small dots in the background show individual observations; large dots show the average value across bins of size 0.1, with size of dots proportional to the number of observations in each bin.
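The binning described in the caption can be sketched roughly as follows. This is only an illustration: the function name `binned_means`, the variable names, and the data are hypothetical and not taken from the paper, and it assumes scores are non-negative.

```python
from collections import defaultdict
import math

def binned_means(xs, ys, bin_width=0.1):
    """Group (x, y) pairs into bins of width `bin_width` along x.

    Returns {bin_index: (mean_y, count)}. In a plot like the one
    described, mean_y would be the large dot and count its size.
    """
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        # Small epsilon guards against floating-point error at bin edges.
        groups[math.floor(x / bin_width + 1e-9)].append(y)
    return {b: (sum(v) / len(v), len(v)) for b, v in sorted(groups.items())}

# Hypothetical data, not from the study.
scores = [0.02, 0.05, 0.11, 0.18, 0.19, 0.33]   # misinformation-exposure scores
quality = [0.9, 0.7, 0.6, 0.5, 0.4, 0.2]        # news-quality ratings
result = binned_means(scores, quality)
for bin_index, (mean_y, count) in result.items():
    print(f"bin starting at {bin_index * 0.1:.1f}: mean={mean_y:.2f}, n={count}")
```

Bins with no observations are simply absent from the result, which matches a plot where no large dot is drawn for an empty bin.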

We applied two scenarios to compare how these regular agents behave in the Twitter network, with and without malicious agents, to study how much influence malicious agents have on the general susceptibility of the regular users. To achieve this, we implemented a belief value system to measure how impressionable an agent is when encountering misinformation and how its behavior gets affected. The results indicated similar outcomes in the two scenarios as the affected belief value changed for these regular agents, exhibiting belief in the misinformation. Although the change in belief value occurred slowly, it had a profound effect when the malicious agents were present, as many more regular agents started believing in misinformation.

Therefore, although the social bot individual is “small”, it has become a “super spreader” with strategic significance. As an intelligent communication subject in the social platform, it conspired with the discourse framework in the mainstream media to form a hybrid strategy of public opinion manipulation.

There were 120,118 epidemic-related tweets in this study, and 34,935 Twitter accounts were detected as bot accounts by Botometer, accounting for 29%. In all, 82,688 Twitter accounts were human, accounting for 69%; 2495 accounts had no bot score detected.

In social network analysis, degree centrality is an index to judge the importance of nodes in the network. The nodes in the social network graph represent users, and the edges between nodes represent the connections between users. Based on the network structure graph, we may determine which members of a group are more influential than others. In 1979, American professor Linton C. Freeman published an article titled “Centrality in social networks conceptual clarification” in Social Networks, formally proposing the concept of degree centrality [69]. Degree centrality denotes the number of times a central node is retweeted by other nodes (or other indicators; only retweets are involved in this study). Specifically, the higher the degree centrality is, the more influence a node has in its network. The measure of degree centrality includes in-degree and out-degree.

Betweenness centrality is an index that describes the importance of a node by the number of shortest paths through it. Nodes with high betweenness centrality are in the “structural hole” position in the network [69]. This kind of account connects the group network lacking communication and can expand the dialogue space of different people. American sociologist Ronald S. Burt put forward the theory of a “structural hole” and said that if there is no direct connection between the other actors connected by an actor in the network, then the actor occupies the “structural hole” position and can obtain social capital through “intermediary opportunities”, thus having more advantages.
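To make the two measures concrete, here is a minimal sketch on a toy retweet graph. Everything in it is an assumption for illustration: the account names are made up, an edge `(src, dst)` is taken to mean "src retweeted dst", and betweenness is computed with Brandes' algorithm on a directed, unweighted graph, which the quoted study does not say it used.

```python
from collections import defaultdict, deque

def degree_counts(edges):
    """Return (in_degree, out_degree) dicts for directed edges (src, dst)."""
    in_deg, out_deg = defaultdict(int), defaultdict(int)
    for src, dst in edges:
        out_deg[src] += 1   # src retweeted someone
        in_deg[dst] += 1    # dst was retweeted
    return dict(in_deg), dict(out_deg)

def betweenness(edges):
    """Unnormalized betweenness for a directed, unweighted graph (Brandes)."""
    adj = defaultdict(list)
    nodes = set()
    for src, dst in edges:
        adj[src].append(dst)
        nodes.update((src, dst))
    score = {n: 0.0 for n in nodes}
    for s in nodes:
        # Breadth-first search from s, counting shortest paths (sigma).
        dist = {s: 0}
        sigma = defaultdict(float)
        sigma[s] = 1.0
        preds = defaultdict(list)
        order = []
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating dependencies.
        delta = defaultdict(float)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score

# Hypothetical retweet edges: (retweeter, original author).
edges = [("a", "b"), ("d", "b"), ("b", "c"), ("c", "e")]
in_deg, out_deg = degree_counts(edges)
bc = betweenness(edges)
print(in_deg)   # how often each account was retweeted
print(bc)       # how many shortest paths pass through each account
```

In this toy graph, account `b` is retweeted most often and also sits on the most shortest paths, so it scores highest on both measures; an account can, however, rank high on betweenness with a low in-degree if it is the only link between two otherwise separate groups.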

We analyzed and visualized Twitter data during the prevalence of the Wuhan lab leak theory and discovered that 29% of the accounts participating in the discussion were social bots. We found evidence that social bots play an essential mediating role in communication networks. Although human accounts have a more direct influence on the information diffusion network, social bots have a more indirect influence. Unverified social bot accounts retweet more, and through multiple levels of diffusion, humans are vulnerable to messages manipulated by bots, driving the spread of unverified messages across social media. These findings show that limiting the use of social bots might be an effective method to minimize the spread of conspiracy theories and hate speech online.

I want to insist on an amateur internet; a garage internet; a public library internet; a kitchen table internet.

Social media should be comprised of people from end to end. Corporate interests inserted into the process can only serve to dehumanize the system.

Robin Sloan is in the same camp as Greg McVerry and me.

Schafer, B. (2021, October 5). RT Deutsch Finds a Home with Anti-Vaccination Skeptics in Germany. Alliance For Securing Democracy. https://securingdemocracy.gmfus.org/rt-deutsch-youtube-antivaccination-germany/

Caulfield, T. (2017, October 24). The Vaccination Picture by Timothy Caulfield. Penguin Random House Canada. https://www.penguinrandomhouse.ca/books/565776/the-vaccination-picture-by-timothy-caulfield/9780735234994

Meet the media startups making big money on vaccine conspiracies. (n.d.). Fortune. Retrieved December 23, 2021, from https://fortune.com/2021/05/14/disinformation-media-vaccine-covid19/

send off your draft or beta or proposal for feedback. Share this Intermediate Packet with a friend, family member, colleague, or collaborator; tell them that it’s still a work-in-process and ask them to send you their thoughts on it. The next time you sit down to work on it again, you’ll have their input and suggestions to add to the mix of material you’re working with.

A major benefit of working in public is that it invites immediate feedback (hopefully positive, constructive criticism) from anyone who might be reading it including pre-built audiences, whether this is through social media or in a classroom setting utilizing discussion or social annotation methods.

This feedback along the way may help to further find flaws in arguments, additional examples of patterns, or links to ideas one may not have considered by themselves.

Sadly, depending on your reader's context and understanding of your work, there are the attendant dangers of context collapse which may provide or elicit the wrong sorts of feedback, not to mention general abuse.

ReconfigBehSci on Twitter: ‘Now #scibeh2020: Pat Healey from QMU, Univ. Of London speaking about (online) interaction and miscommunication in our session on “Managing Online Research Discourse” https://t.co/Gsr66BRGcJ’ / Twitter. (n.d.). Retrieved 6 March 2021, from https://twitter.com/SciBeh/status/1326155809437446144

Mike Caulfield. (2021, March 10). One of the drivers of Twitter daily topics is that topics must be participatory to trend, which means one must be able to form a firm opinion on a given subject in the absence of previous knowledge. And, it turns out, this is a bit of a flaw. [Tweet]. @holden. https://twitter.com/holden/status/1369551099489779714

Stefan Simanowitz. (2021, March 18). 1/. The PM claims that the govt “stuck to the science like glue” But this is not true At crucial times they ignored the science or concocted pseudo-scientific justifications for their actions & inaction This thread, & the embedded threads, set them out https://t.co/dhXqkSL1bz [Tweet]. @StefSimanowitz. https://twitter.com/StefSimanowitz/status/1372460227619135493

Virpi Flyg on Twitter. (n.d.). Twitter. Retrieved 1 April 2022, from https://twitter.com/VirpiFlyg/status/1452995562224201736

Fidalgo, P. (2022, February 22). How the Hell Did It Get This Bad? Timothy Caulfield Battles the Infodemic, March 3 | Center for Inquiry. https://centerforinquiry.org/news/how-the-hell-did-it-get-this-bad-timothy-caulfield-battles-the-infodemic-march-3/

How cherry-picking science became the center of the anti-mask movement. (2022, February 14). Gothamist. https://gothamist.com

Enough with the harassment: How to deal with anti-vax cults. (2022, January 26). Healthy Debate. https://healthydebate.ca/2022/01/topic/how-to-deal-with-anti-vax-cults/

Some trucker convoy organizers have history of white nationalism, racism—National | Globalnews.ca. (n.d.). Global News. Retrieved January 31, 2022, from https://globalnews.ca/news/8543281/covid-trucker-convoy-organizers-hate/

Garland, J., Ghazi-Zahedi, K., Young, J.-G., Hébert-Dufresne, L., & Galesic, M. (2022). Impact and dynamics of hate and counter speech online. EPJ Data Science, 11(1), 1–24. https://doi.org/10.1140/epjds/s13688-021-00314-6

The online information environment | Royal Society. (n.d.). Retrieved January 21, 2022, from https://royalsociety.org/topics-policy/projects/online-information-environment/

Jones, C. M., Diethei, D., Schöning, J., Shrestha, R., Jahnel, T., & Schüz, B. (2021). Social reference cues can reduce misinformation sharing behaviour on social media. PsyArXiv. https://doi.org/10.31234/osf.io/v6fc9

Fischer, O., Jeitziner, L., & Wulff, D. U. (2021). Affect in science communication: A data-driven analysis of TED talks. PsyArXiv. https://doi.org/10.31234/osf.io/28yc5

Deepti Gurdasani. (2021, December 23). Some brief thoughts on the concerning relativism I’ve seen creeping into media, and scientific rhetoric over the past 20 months or so—The idea that things are ok because they’re better relative to a point where things got really really bad. 🧵 [Tweet]. @dgurdasani1. https://twitter.com/dgurdasani1/status/1474042179110772736

Timothy Caulfield. (2021, December 14). @LuisSchang @joerogan The Great Conspiracy Theory Paradox! Https://t.co/sEPuwvXJKp [Tweet]. @CaulfieldTim. https://twitter.com/CaulfieldTim/status/1470818785867153408

How to report on public officials who spread misinformation. (2021, December 8). The Journalist’s Resource. https://journalistsresource.org/home/covering-misinformation-tips/

Antivaccine activists use a government database on side effects to scare the public. (n.d.). Retrieved December 7, 2021, from https://www.science.org/content/article/antivaccine-activists-use-government-database-side-effects-scare-public

The Left’s Covid failure. (2021, November 23). UnHerd. https://unherd.com/2021/11/the-lefts-covid-failure/

Facebook froze as anti-vaccine comments swarmed users. (n.d.). MSN. Retrieved November 12, 2021, from https://www.msn.com/en-ca/news/science/facebook-froze-as-anti-vaccine-comments-swarmed-users/ar-AAPY06U

Frost, M. (n.d.). Busting COVID-19 vaccination myths. Retrieved November 2, 2021, from https://acpinternist.org/archives/2021/11/busting-covid-19-vaccination-myths.htm

Thaker, J., & Richardson, L. (2021). COVID-19 Vaccine Segments in Australia: An Audience Segmentation Analysis to Improve Vaccine Uptake [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/y85nm

Sutton, J. (2018). Health Communication Trolls and Bots Versus Public Health Agencies’ Trusted Voices. American Journal of Public Health, 108(10), 1281–1282. https://doi.org/10.2105/AJPH.2018.304661

Kington, R. S., Arnesen, S., Chou, W.-Y. S., Curry, S. J., Lazer, D., & Villarruel, A. M. (2021). Identifying Credible Sources of Health Information in Social Media: Principles and Attributes. NAM Perspectives. https://doi.org/10.31478/202107a

Quantifying The COVID-19 Public Health Media Narrative Through TV & Radio News Analysis – The GDELT Project. (n.d.). Retrieved May 14, 2021, from https://blog.gdeltproject.org/quantifying-the-covid-19-public-health-media-narrative-through-tv-radio-news-analysis/

Using The Global Quotation Graph To Examine Statements About Covid-19 Vaccination And Infertility Or Bell’s Palsy – The GDELT Project. (n.d.). Retrieved May 14, 2021, from https://blog.gdeltproject.org/using-the-global-quotation-graph-to-examine-statements-about-covid-19-vaccination-and-infertility-or-bells-palsy/

Misinformation Alerts - Public Health Communications Collaborative. (n.d.). Public Health Communication Collaborative. Retrieved September 24, 2021, from https://publichealthcollaborative.org/misinformation-alerts/

Vraga, E. K., & Bode, L. (n.d.). Addressing COVID-19 Misinformation on Social Media Preemptively and Responsively - Volume 27, Number 2—February 2021 - Emerging Infectious Diseases journal - CDC. https://doi.org/10.3201/eid2702.203139

Mazumdar, S., & Thakker, D. (2020). Citizen Science on Twitter: Using Data Analytics to Understand Conversations and Networks. Future Internet, 12(12), 210. https://doi.org/10.3390/fi12120210

Clift, A. K., von Ende, A., Tan, P. S., Sallis, H. M., Lindson, N., Coupland, C. A. C., Munafò, M. R., Aveyard, P., Hippisley-Cox, J., & Hopewell, J. C. (2021). Smoking and COVID-19 outcomes: An observational and Mendelian randomisation study using the UK Biobank cohort. Thorax, thoraxjnl-2021-217080. https://doi.org/10.1136/thoraxjnl-2021-217080

Bai, H. (2021). Fake News Known as Fake Still Changes Beliefs and Leads to Partisan Polarization [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/v9gax

Zuckerman, E. (2021). Demand five precepts to aid social-media watchdogs. Nature, 597(7874), 9–9. https://doi.org/10.1038/d41586-021-02341-9

‘Spreading like a virus’: Inside the EU’s struggle to debunk Covid lies | World news | The Guardian. (n.d.). Retrieved August 18, 2021, from https://www.theguardian.com/world/2021/aug/17/spreading-like-a-virus-inside-the-eus-struggle-to-debunk-covid-lies?CMP=Share_iOSApp_Other

The Daily 202: Nearly 30 groups urge Facebook, Instagram, Twitter to take down vaccine disinformation—The Washington Post. (n.d.). Retrieved August 2, 2021, from https://www.washingtonpost.com/politics/2021/07/19/daily-202-nearly-30-groups-urge-facebook-instagram-twitter-take-down-vaccine-disinformation/?utm_source=twitter&utm_campaign=wp_main&utm_medium=social

What the World Health Organization really said about mixing COVID-19 vaccines | CBC News. (n.d.). Retrieved July 15, 2021, from https://www.cbc.ca/news/health/covid-19-vaccine-mixing-and-matching-who-1.6101047?__vfz=medium%3Dsharebar

Deepti Gurdasani on Twitter: “I’m still utterly stunned by yesterday’s events—Let me go over this in chronological order & why I’m shocked. - First, in the morning yesterday, we saw a ‘leaked’ report to FT which reported on @PHE_uk data that was not public at the time🧵” / Twitter. (n.d.). Retrieved June 27, 2021, from https://twitter.com/dgurdasani1/status/1396373990986375171

Morrison, M., Merlo, K., & Woessner, Z. (2021). How to boost the impact of scientific conferences [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/895gt

Professor, interested in plagues, and politics. Re-locking my twitter acct when is 70% fully vaccinated.

Example of a professor/researcher who has apparently made his tweets public but intends to re-lock them once the majority of the threat is over.

Only in our anti-truth hellscape could Anthony Fauci become a supervillain—The Washington Post. (n.d.). Retrieved June 16, 2021, from https://www.washingtonpost.com/lifestyle/media/sullivan-fauci-emails/2021/06/09/8b0724a8-c93a-11eb-81b1-34796c7393af_story.html

Deviri, D. (2021). From the ivory tower to the public square: Strategies to restore public trust in science. MetaArXiv. https://doi.org/10.31222/osf.io/w3frb

Op-Ed: How Not to Message the Public on COVID Vaccines | MedPage Today. (n.d.). Retrieved May 26, 2021, from https://www.medpagetoday.com/infectiousdisease/publichealth/92704

Amidst the global pandemic, this might sound not dissimilar to public health. When I decide whether to wear a mask in public, that’s partially about how much the mask will protect me from airborne droplets. But it’s also—perhaps more significantly—about protecting everyone else from me. People who refuse to wear a mask because they’re willing to risk getting Covid are often only thinking about their bodies as a thing to defend, whose sanctity depends on the strength of their individual immune system. They’re not thinking about their bodies as a thing that can also attack, that can be the conduit that kills someone else. People who are careless about their own data because they think they’ve done nothing wrong are only thinking of the harms that they might experience, not the harms that they can cause.

What lessons might we draw from public health and epidemiology to improve our privacy in an online world? How might we wear social media "masks" to protect our friends and loved ones from our own posts?

Sanders, J. G., Tosi, A., Obradovic, S., Miligi, I., & Delaney, L. (2021). Lessons from lockdown: Media discourse on the role of behavioural science in the UK COVID-19 response. Frontiers in Psychology, 12. https://doi.org/10.3389/fpsyg.2021.647348

It’s too soon to declare vaccine victory—Four strategies for continued progress | TheHill. (n.d.). Retrieved May 12, 2021, from https://thehill.com/opinion/healthcare/552219-its-too-soon-to-declare-covid-vaccine-victory-four-strategies-for

Zarocostas, J. (2020). How to fight an infodemic. The Lancet, 395(10225), 676. https://doi.org/10.1016/S0140-6736(20)30461-X

Chang, H.-C. H., Chen, E., Zhang, M., Muric, G., & Ferrara, E. (2021). Social Bots and Social Media Manipulation in 2020: The Year in Review. ArXiv:2102.08436 [Cs]. http://arxiv.org/abs/2102.08436

Darren Dahly. (2021, February 24). @SciBeh One thought is that we generally don’t ‘press’ strangers or even colleagues in face to face conversations, and when we do, it’s usually perceived as pretty aggressive. Not sure why anyone would expect it to work better on twitter. Https://t.co/r94i22mP9Q [Tweet]. @statsepi. https://twitter.com/statsepi/status/1364482411803906048

‘I’m ridiculously positive about the media’s coverage of COVID-19.’ (n.d.). RSB. Retrieved February 13, 2021, from https://www.rsb.org.uk//biologist-covid-19/189-biologist/biologist-covid-19/2568-fiona-fox-interview

The Data Visualizations Behind COVID-19 Skepticism. (n.d.). The Data Visualizations Behind COVID-19 Skepticism. Retrieved March 27, 2021, from http://vis.csail.mit.edu/covid-story/

Lutkenhaus, R. O., Jansz, J., & Bouman, M. P. A. (2019). Mapping the Dutch vaccination debate on Twitter: Identifying communities, narratives, and interactions. Vaccine: X, 1. https://doi.org/10.1016/j.jvacx.2019.100019

Berman, J. M. (2020). Anti-vaxxers: How to Challenge a Misinformed Movement. https://doi.org/10.7551/mitpress/12242.001.0001

Smith, N., & Graham, T. (2019). Mapping the anti-vaccination movement on Facebook. Information, Communication & Society, 22(9), 1310–1327. https://doi.org/10.1080/1369118X.2017.1418406

You cannot practice public health without engaging in politics. (2021, March 29). The BMJ. https://blogs.bmj.com/bmj/2021/03/29/you-cannot-practice-public-health-without-engaging-in-politics/

Meleo-Erwin, Z., Basch, C., MacLean, S. A., Scheibner, C., & Cadorett, V. (2017). “To each his own”: Discussions of vaccine decision-making in top parenting blogs. Human Vaccines & Immunotherapeutics, 13(8), 1895–1901. https://doi.org/10.1080/21645515.2017.1321182

Poland, G. A., & Jacobson, R. M. (2011, January 12). The Age-Old Struggle against the Antivaccinationists. Massachusetts Medical Society. https://doi.org/10.1056/NEJMp1010594

Gesser-Edelsburg, A., Diamant, A., Hijazi, R., & Mesch, G. S. (2018). Correcting misinformation by health organizations during measles outbreaks: A controlled experiment. PLOS ONE, 13(12), e0209505. https://doi.org/10.1371/journal.pone.0209505

Morley, Jessica, Josh Cowls, Mariarosaria Taddeo, and Luciano Floridi. ‘Public Health in the Information Age: Recognizing the Infosphere as a Social Determinant of Health’. Journal of Medical Internet Research 22, no. 8 (2020): e19311. https://doi.org/10.2196/19311.

Krupenkin, Masha, Kai Zhu, Dylan Walker, and David M. Rothschild. ‘If a Tree Falls in the Forest: COVID-19, Media Choices, and Presidential Agenda Setting’. SSRN Scholarly Paper. Rochester, NY: Social Science Research Network, 22 September 2020. https://doi.org/10.2139/ssrn.3697069.

Workshop hackathon: Optimising research dissemination and curation : BehSciMeta. (n.d.). Reddit. Retrieved 6 March 2021, from https://www.reddit.com/r/BehSciMeta/comments/jjtg91/workshop_hackathon_optimising_research/

Dante Licona. (2020, December 8). What can NGOs, government and public institutions do on TikTok? Today @melisfiganmese and I shared some insights at #EuroPCom, the @EU_CoR conference for public communication. We were asked to talk about upcoming social media trends. Here’s a thread with some insights👇 https://t.co/GzOA66vstQ [Tweet]. @Dante_Licona. https://twitter.com/Dante_Licona/status/1336303773334069251

Renton, B. (2020, January 27). Coronavirus Updates. Off the Silk Road. http://offthesilkroad.com/2020/01/27/wuhan-coronavirus-updates/

Ogbunu, B. C. (2020, October 27). The Science That Spans #MeToo, Memes, and Covid-19. Wired. https://www.wired.com/story/the-science-that-spans-metoo-memes-and-covid-19/

Horton, Richard. ‘Offline: Science and Politics in the Era of COVID-19’. The Lancet 396, no. 10259 (24 October 2020): 1319. https://doi.org/10.1016/S0140-6736(20)32221-2.

Lalwani, P., Fansher, M., Lewis, R., Boduroglu, A., Shah, P., Adkins, T. J., … Jonides, J. (2020, November 8). Misunderstanding “Flattening the Curve”. https://doi.org/10.31234/osf.io/whe6q

David Oliver: Covid deniers’ precarious Jenga tower is collapsing on contact with reality. (2021, February 1). The BMJ. https://blogs.bmj.com/bmj/2021/02/01/david-oliver-covid-deniers-precarious-jenga-tower-is-collapsing-on-contact-with-reality/

Reinders Folmer, C., Brownlee, M., Fine, A., Kuiper, M. E., Olthuis, E., Kooistra, E. B., … van Rooij, B. (2020, October 7). Social Distancing in America: Understanding Long-term Adherence to Covid-19 Mitigation Recommendations. https://doi.org/10.31234/osf.io/457em

Toff, B. J., Badrinathan, S., Mont’Alverne, C., Arguedas, A. R., Fletcher, R., & Nielsen, R. K. (2020). What we think we know and what we want to know: Perspectives on trust in news in a changing world. Reuters Institute for the Study of Journalism. https://experts.umn.edu/en/publications/what-we-think-we-know-and-what-we-want-to-know-perspectives-on-tr

The impact of Covid-19 on media – rise of infodemics? (2020, September 16). https://www.youtube.com/watch?v=QapwrR9C3Z4&feature=youtu.be&ab_channel=InternationalDayofDemocracyEU

Schmid, P., Schwarzer, M., & Betsch, C. (n.d.). Weight-of-Evidence Strategies to Mitigate the Influence of Messages of Science Denialism in Public Discussions. Journal of Cognition, 3(1). https://doi.org/10.5334/joc.125

Centola, D. (n.d.). Why Social Media Makes Us More Polarized and How to Fix It. Scientific American. Retrieved October 25, 2020, from https://www.scientificamerican.com/article/why-social-media-makes-us-more-polarized-and-how-to-fix-it/

AI and control of Covid-19 coronavirus. (n.d.). Artificial Intelligence. Retrieved October 15, 2020, from https://www.coe.int/en/web/artificial-intelligence/ai-and-control-of-covid-19-coronavirus

ReconfigBehSci on Twitter. (n.d.). Twitter. Retrieved October 9, 2020, from https://twitter.com/SciBeh/status/1314493024072863744

Carter, J. (2020, September 29). The American Public Still Trusts Scientists, Says a New Pew Survey. Scientific American. https://www.scientificamerican.com/article/the-american-public-still-trusts-scientists-says-a-new-pew-survey/

Satariano, A. (2020, September 23). Young People More Likely to Believe Virus Misinformation, Study Says. The New York Times. https://www.nytimes.com/2020/09/23/technology/young-people-more-likely-to-believe-virus-misinformation-study-says.html

Gallagher, R. J., Doroshenko, L., Shugars, S., Lazer, D., & Welles, B. F. (2020). Sustained Online Amplification of COVID-19 Elites in the United States. ArXiv:2009.07255 [Physics]. http://arxiv.org/abs/2009.07255

Thread, C. (2020, June 19). Trolls and Tribulations: Social Media and Public Health. Medium. https://medium.com/@gocommonthread/trolls-and-tribulations-social-media-and-public-health-499bf5c8727c

COVIDConversations: Protecting Children/Adolescents’ Mental Health with Professors Stein & Blakemore. (2020, June 24). https://www.youtube.com/watch?v=laYyNumPQEA&feature=emb_logo

Who is behind the Qanon conspiracy? We’ve traced it to three people. (n.d.). NBC News. Retrieved August 18, 2020, from https://www.nbcnews.com/tech/tech-news/how-three-conspiracy-theorists-took-q-sparked-qanon-n900531

Jørgensen, F. J., Bor, A., Lindholt, M. F., & Petersen, M. B. (2020). Lockdown Evaluations During the First Wave of the COVID-19 Pandemic [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/4ske2

Paris, Marseille named as high-risk COVID zones, making curbs likelier. (2020, August 14). Reuters. https://uk.reuters.com/article/uk-health-coronavirus-france-idUKKCN25A0LC

Walter, N., Brooks, J. J., Saucier, C. J., & Suresh, S. (2020). Evaluating the Impact of Attempts to Correct Health Misinformation on Social Media: A Meta-Analysis. Health Communication, 0(0), 1–9. https://doi.org/10.1080/10410236.2020.1794553

@DFRLab. (2020). Op-Ed: How Brexit tribalism has influenced attitudes toward COVID-19 in Britain. Medium. https://medium.com/dfrlab/op-ed-how-brexit-tribalism-has-influenced-attitudes-toward-covid-19-in-britain-16a983a56929

Tasnim, S., Hossain, M. M., & Mazumder, H. (2020). Impact of rumors or misinformation on coronavirus disease (COVID-19) in social media [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/uf3zn

La, V.-P., Pham, T.-H., Ho, T. M., Hoàng, N. M., Linh, N. P. K., Vuong, T.-T., Nguyen, H.-K. T., Tran, T., Van Quy, K., Ho, T. M., & Vuong, Q.-H. (2020). Policy response, social media and science journalism for the sustainability of the public health system amid the COVID-19 outbreak: The Vietnam lessons [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/cfw8x

Motta, M., Stecula, D., & Farhart, C. E. (2020). How Right-Leaning Media Coverage of COVID-19 Facilitated the Spread of Misinformation in the Early Stages of the Pandemic [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/a8r3p

Krumpal, I. (2020). Soziologie in Zeiten der Pandemie [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/yqdsu

Starominski-Uehara, M. (2020). Mass Media Exposing Representations of Reality Through Critical Inquiry [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/vz9cu

Haman, M. (2020). The use of Twitter by state leaders and its impact on the public during the COVID-19 pandemic. https://doi.org/10.31235/osf.io/u4maf

Grow, A., Perrotta, D., Del Fava, E., Cimentada, J., Rampazzo, F., Gil-Clavel, S., & Zagheni, E. (2020). Addressing Public Health Emergencies via Facebook Surveys: Advantages, Challenges, and Practical Considerations [Preprint]. SocArXiv. https://doi.org/10.31235/osf.io/ez9pb

US, A. B., The Conversation. (n.d.). Coronavirus Responses Highlight How Humans Have Evolved to Dismiss Facts That Don’t Fit Their Worldview. Scientific American. Retrieved June 30, 2020, from https://www.scientificamerican.com/article/coronavirus-responses-highlight-how-humans-have-evolved-to-dismiss-facts-that-dont-fit-their-worldview/

Viglione, G. (2020). Has Twitter just had its saddest fortnight ever? Nature. https://doi.org/10.1038/d41586-020-01818-3

Velásquez, N., Leahy, R., Restrepo, N. J., Lupu, Y., Sear, R., Gabriel, N., Jha, O., Goldberg, B., & Johnson, N. F. (2020). Hate multiverse spreads malicious COVID-19 content online beyond individual platform control. ArXiv:2004.00673 [Nlin, Physics:Physics]. http://arxiv.org/abs/2004.00673

Gencoglu, O., & Gruber, M. (2020). Causal Modeling of Twitter Activity During COVID-19. ArXiv:2005.07952 [Cs]. http://arxiv.org/abs/2005.07952

Farr, C. (2020, May 23). Why scientists are changing their minds and disagreeing during the coronavirus pandemic. CNBC. https://www.cnbc.com/2020/05/23/why-scientists-change-their-mind-and-disagree.html

Gozzi, N., Tizzani, M., Starnini, M., Ciulla, F., Paolotti, D., Panisson, A., & Perra, N. (2020). Collective response to the media coverage of COVID-19 Pandemic on Reddit and Wikipedia. ArXiv:2006.06446 [Physics]. http://arxiv.org/abs/2006.06446

Gollust, Sarah E., Rebekah H. Nagler, and Erika Franklin Fowler. ‘The Emergence of COVID-19 in the U.S.: A Public Health and Political Communication Crisis’. Journal of Health Politics, Policy and Law. Accessed 5 June 2020. https://doi.org/10.1215/03616878-8641506.

Jamieson, K. H., & Albarracín, D. (2020). The Relation between Media Consumption and Misinformation at the Outset of the SARS-CoV-2 Pandemic in the US. Harvard Kennedy School Misinformation Review, 2. https://doi.org/10.37016/mr-2020-012

Communications: Working with the media | BPS. (n.d.). Retrieved April 21, 2020, from https://www.bps.org.uk/about-us/communications/working-media

Ryan, W., & Evers, E. (2020). Logarithmic Axis Graphs Distort Lay Judgment. https://doi.org/10.31234/osf.io/cwt56

Part of the problem of social media is that there is no equivalent to the scientific glassblowers’ sign, or the woodworker’s open door, or Dafna and Jesse’s sandwich boards. On the internet, if you stop speaking: you disappear. And, by corollary: on the internet, you only notice the people who are speaking nonstop.

This quote comes from a larger piece by Robin Sloan. (I don't know who that is though)

The problem with social media is that the equivalent to working with the garage door open (working in public) is repeatedly talking in public about what you're doing.

One problem with this is that you need to choose what you want to talk about, and say it. This emphasizes whatever you select, not what would catch a passerby's eye.

The other problem is that you become more visible the more you talk. Conversely, when you stop talking, you become invisible.

Galea, S., Merchant, R. M., & Lurie, N. (2020). The Mental Health Consequences of COVID-19 and Physical Distancing: The Need for Prevention and Early Intervention. JAMA Internal Medicine. https://doi.org/10.1001/jamainternmed.2020.1562

Ferres, L. (2020, April 10). COVID19 mobility reports. Leo's Blog. https://leoferres.info/blog/2020/04/10/covid19-mobility-reports/

Romano, A., Sotis, C., Dominioni, G., & Guidi, S. (2020). COVID-19 Data: The Logarithmic Scale Misinforms the Public and Affects Policy Preferences [Preprint]. PsyArXiv. https://doi.org/10.31234/osf.io/42xfm

Rosen, Z., Weinberger-Litman, S. L., Rosenzweig, C., Rosmarin, D. H., Muennig, P., Carmody, E. R., … Litman, L. (2020, April 14). Anxiety and distress among the first community quarantined in the U.S due to COVID-19: Psychological implications for the unfolding crisis. https://doi.org/10.31234/osf.io/7eq8c

Jarynowski, A., Wójta-Kempa, M., & Belik, V. (2020, April 22). TRENDS IN PERCEPTION OF COVID-19 IN POLISH INTERNET. https://doi.org/10.31234/osf.io/dr3gm

Lades, L., Laffan, K., Daly, M., & Delaney, L. (2020, April 22). Daily emotional well-being during the COVID-19 pandemic. https://doi.org/10.31234/osf.io/pg6bw

Picturing Health. Films about coronavirus (COVID-19). picturinghealth.org/coronavirus-films/

Olapegba, P. O., Ayandele, O., Kolawole, S. O., Oguntayo, R., Gandi, J. C., Dangiwa, A. L., … Iorfa, S. K. (2020, April 12). COVID-19 Knowledge and Perceptions in Nigeria. https://doi.org/10.31234/osf.io/j356x

El Shoghri, A., et al. (2020, April 3). How mobility patterns drive disease spread: A case study using public transit passenger card travel data. 2019 IEEE 20th International Symposium on "A World of Wireless, Mobile and Multimedia Networks". https://doi.org/10.1109/WoWMoM.2019.8793018

Koebler, J. (2020, April 9). The viral 'study' about runners spreading coronavirus is not actually a study. Vice. https://www.vice.com/en_us/article/v74az9/the-viral-study-about-runners-spreading-coronavirus-is-not-actually-a-study

I believe that many of the current challenges in public sectors link back to two causal factors: the impact of increasing reactivism to politics and 24-hour media scrutiny in public sectors (which varies across jurisdictions); and the unintended consequences of New Public Management and trying to make public sectors act like the private sector.

separate public domain illustrations

The main source images for this collage:

Borrow, George Henry, and E. J. Sullivan. "I did not like reviewing at all--it was not to my taste." Lavengro, Macmillan and Co., London, 1896, p. 296. British Library Flickr, HMNTS 012621.h.20. Accessed 1 February 2018.

Dodge, Mary Elizabeth. "A Terrible Tiger." When Life is Young: a Collection of Verse for Boys and Girls, Century Co., 1894, New York, p. 201. British Library Flickr. Accessed 1 February 2018.

On the net, you have public, or you have secrets. The private intermediate sphere, with its careful buffering, is shattered. E-mails are forwarded verbatim. IRC transcripts, with throwaway comments, are preserved forever. You talk to your friends online, you talk to the world.

mass media refers to those means of transmission

When I ask students to post on Youth Voices, I'm asking them to participate in mass media. It's a big jump for some who do very little but friend-to-friend communication.

Land defenders are dying but the news don’t talk about this. Most of media and politics are owned by companies so, we have to force them to serve the people instead. We can’t depend on these guys.

We need to recognize different values and think that people value land entitlements, family and community, the elderly, connectivity. If we value these, we will want to hear these things reported all the time. Marketing will follow suit. Perhaps marketing will be the first to move...